Microsoft Azure Event Hubs

Event Hubs is a simple, dependable, and scalable real-time data intake solution. Learn more about its features and functions.

Introduction

Azure Event Hubs is a big data streaming platform and event ingestion service that can receive and process millions of events per second. Data sent to an event hub can be transformed and stored by any real-time analytics provider or batching/storage adapter. Event Hubs is a simple, dependable, and scalable real-time data ingestion service. Build dynamic data pipelines that stream millions of events per second from any source and respond quickly to business challenges. During emergencies, keep processing data by using the geo-disaster recovery and geo-replication features.

Event Hubs integrates with other Azure services so that you can turn streamed data into insights. Existing Apache Kafka clients and applications can talk to Event Hubs without any code changes, which gives you a managed Kafka experience without maintaining your own clusters. You can use both real-time ingestion and micro-batching on the same stream.

Event Hubs suits scenarios such as the following:

- Anomaly detection (fraud/outliers)

- Application logging

- Analytics pipelines, such as clickstreams

- Live dashboards

- Archiving data

- Transaction processing

- User telemetry processing

- Device telemetry streaming

Why Use Event Hubs?

Data is valuable only when it is easy to process and yields timely insights. Event Hubs offers a distributed stream processing platform with low latency and seamless integration with data and analytics services both inside and outside of Azure, so you can build your complete big data pipeline.

Event Hubs represents the "front door" for an event pipeline, often called an event ingestor in solution architectures. An event ingestor is a component or service that sits between event publishers and event consumers to decouple the production of an event stream from its consumption. By decoupling event producers from event consumers, Event Hubs offers a unified streaming platform with a time retention buffer.
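
To make the decoupling and the time retention buffer concrete, here is a minimal in-memory sketch. It is not the Event Hubs API: the `RetentionBuffer` class, its method names, and the caller-supplied `now` timestamps are all hypothetical and exist only to illustrate the idea that producers append events while consumers read later, as long as the events are still inside the retention window.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class StoredEvent:
    sequence: int       # position in the stream, assigned on publish
    enqueued_at: float  # seconds; supplied by the caller to keep the sketch deterministic
    body: str


class RetentionBuffer:
    """Toy stand-in for an event ingestor (hypothetical, not the Azure SDK)."""

    def __init__(self, retention_seconds: float) -> None:
        self.retention_seconds = retention_seconds
        self._events: deque[StoredEvent] = deque()
        self._next_sequence = 0

    def publish(self, body: str, now: float) -> int:
        """Append an event and return its sequence number."""
        self._evict(now)
        event = StoredEvent(self._next_sequence, now, body)
        self._events.append(event)
        self._next_sequence += 1
        return event.sequence

    def read_from(self, sequence: int, now: float) -> list[str]:
        """Return the bodies of retained events at or after `sequence`."""
        self._evict(now)
        return [e.body for e in self._events if e.sequence >= sequence]

    def _evict(self, now: float) -> None:
        # Drop events that have aged out of the retention window.
        while self._events and now - self._events[0].enqueued_at > self.retention_seconds:
            self._events.popleft()


buffer = RetentionBuffer(retention_seconds=60)
buffer.publish("order-created", now=0)
buffer.publish("order-paid", now=30)

# A consumer that shows up later still sees everything inside the window.
print(buffer.read_from(0, now=40))
# Once the first event is older than 60 seconds, only the second remains.
print(buffer.read_from(0, now=70))
```

The producer never waits for a consumer, and a slow consumer only loses data if it falls behind by more than the retention period.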

Key Features of the Azure Event Hubs Service

Fully Managed PaaS

Event Hubs is a fully managed Platform-as-a-Service (PaaS) with minimal configuration or administrative overhead, so you can concentrate on your business solutions. Event Hubs for Apache Kafka ecosystems gives you a PaaS Kafka experience without having to manage, configure, or run your own clusters.

Support for Real-time and Batch Processing

Ingest, buffer, store, and process your stream in real time to get actionable insights. Event Hubs uses a partitioned consumer model that lets multiple applications process the stream concurrently while you control the speed of processing. Event Hubs also integrates with Azure Functions for a serverless architecture.

Capture Event Data

Capture your data in near-real time and save it in Azure Data Lake or Blob storage for micro-batch processing or long-term retention. You can do this on the same stream that you use for real-time analytics. Setting up capture of event data is fast. It scales automatically with Event Hubs throughput units or processing units and has no administrative overhead. Event Hubs lets you concentrate on data processing rather than on data capture.
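
As a rough illustration of where captured data ends up, the sketch below builds the blob name for one capture window. It assumes Capture's default naming convention of `{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}` with Avro output; the function name and the sample namespace and hub names are hypothetical, and the convention can be customized, so verify it against your own Capture settings.

```python
from datetime import datetime, timezone


def capture_blob_path(namespace: str, event_hub: str, partition_id: int,
                      window_start: datetime) -> str:
    """Build a blob name following the assumed default Capture naming layout."""
    timestamp = window_start.strftime("%Y/%m/%d/%H/%M/%S")
    return f"{namespace}/{event_hub}/{partition_id}/{timestamp}.avro"


# Hypothetical names, for illustration only.
path = capture_blob_path(
    "mynamespace", "myeventhub", 0,
    datetime(2017, 12, 8, 3, 3, 17, tzinfo=timezone.utc),
)
print(path)
```

Because the partition ID and the time components are part of the name, a micro-batch job can pick up one partition or one hour of data by listing a blob prefix.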

Scalable

With Event Hubs, you can start with data streams in megabytes and grow to gigabytes or terabytes. The auto-inflate feature is one of several options for scaling the number of throughput units or processing units to meet your usage needs.
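
For a back-of-the-envelope sizing, the sketch below estimates how many standard-tier throughput units an ingress workload needs. It assumes the commonly documented ingress allowance of 1 MB per second or 1,000 events per second per throughput unit, whichever is reached first; treat those constants as assumptions and check the current quotas before relying on them. The function name is hypothetical.

```python
import math

# Assumed per-unit ingress allowances for the standard tier; verify against current quotas.
INGRESS_MB_PER_SEC_PER_TU = 1.0
INGRESS_EVENTS_PER_SEC_PER_TU = 1000


def throughput_units_needed(events_per_sec: float, avg_event_kb: float) -> int:
    """Estimate throughput units from event rate and average event size."""
    mb_per_sec = events_per_sec * avg_event_kb / 1024
    by_bytes = math.ceil(mb_per_sec / INGRESS_MB_PER_SEC_PER_TU)
    by_count = math.ceil(events_per_sec / INGRESS_EVENTS_PER_SEC_PER_TU)
    # Whichever limit is hit first determines the requirement; never go below one unit.
    return max(1, by_bytes, by_count)


print(throughput_units_needed(events_per_sec=5000, avg_event_kb=1))  # count-bound and size-bound agree
print(throughput_units_needed(events_per_sec=500, avg_event_kb=4))   # size-bound
```

An estimate like this is a reasonable starting value; auto-inflate can then raise the unit count when real traffic exceeds it.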

Rich Ecosystem

Event Hubs is based on the widely used AMQP 1.0 protocol, which is available in many languages and has a broad ecosystem. You can quickly start processing your streams from Event Hubs using .NET, Java, Python, and JavaScript. All supported client languages provide low-level integration. The ecosystem also connects seamlessly with Azure services such as Azure Stream Analytics and Azure Functions, so you can build serverless architectures.

Event Hubs for Apache Kafka

Event Hubs for Apache Kafka ecosystems also lets Apache Kafka (1.0 and later) clients and applications communicate with Event Hubs. You don't need to set up, configure, and manage your own Kafka and Zookeeper clusters, or use a Kafka-as-a-Service offering that is not native to Azure.
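
In practice, "no code changes" usually means a configuration change only. The sketch below builds the connection settings a Kafka client would need, using librdkafka-style property names (the ones clients such as confluent-kafka accept). The helper name and the placeholder namespace and connection string are hypothetical; the endpoint on port 9093 with SASL_SSL and the literal `$ConnectionString` username reflect the usual Event Hubs Kafka setup, but confirm them for your own namespace.

```python
from __future__ import annotations


def kafka_client_config(namespace: str, connection_string: str) -> dict[str, str]:
    """Return Kafka client settings that point at an Event Hubs namespace."""
    return {
        # The Kafka endpoint of the namespace listens on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The username is the literal string "$ConnectionString".
        "sasl.username": "$ConnectionString",
        # The password is the namespace (or event hub) connection string.
        "sasl.password": connection_string,
    }


# Placeholder values, for illustration only.
config = kafka_client_config(
    "my-namespace",
    "Endpoint=sb://my-namespace.servicebus.windows.net/;SharedAccessKeyName=<name>;SharedAccessKey=<key>",
)
print(config["bootstrap.servers"])
```

The resulting dictionary can be passed to an existing producer or consumer constructor in place of the settings that pointed at a self-managed cluster; the Kafka topic name then corresponds to the event hub name.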

Event Hubs Premium and Dedicated

Event Hubs premium caters to high-end streaming needs that call for better isolation, predictable latency, and minimal interference in a managed multitenant PaaS environment. In addition to all the features of the standard offering, the premium tier offers extra features such as dynamic partition scale-up, extended retention, and customer-managed keys. Visit Event Hubs Premium for additional details.

For customers with the most demanding streaming requirements, the Event Hubs dedicated tier offers single-tenant deployments. This single-tenant offering is available only on the dedicated pricing tier and has a guaranteed SLA of 99.99 percent. An Event Hubs cluster can ingest millions of events per second with guaranteed capacity and sub-second latency. Namespaces and event hubs created in a dedicated cluster include all the features of the premium offering and more. Visit Event Hubs Dedicated for additional details.

Event Hubs on Azure Stack Hub

Event Hubs on Azure Stack Hub lets you implement hybrid cloud scenarios. Streaming and event-based solutions are supported for both on-premises and Azure cloud processing. Whether your scenario is hybrid (connected) or disconnected, your solution can process events and streams at large scale. Your scenario is limited only by the size of the Event Hubs cluster, which you can provision as needed.

The Azure Stack Hub and Azure editions of Event Hubs offer a high degree of feature parity. This parity means that SDKs, samples, PowerShell, CLI, and portals offer a similar experience, with few differences.

Key Architecture Components

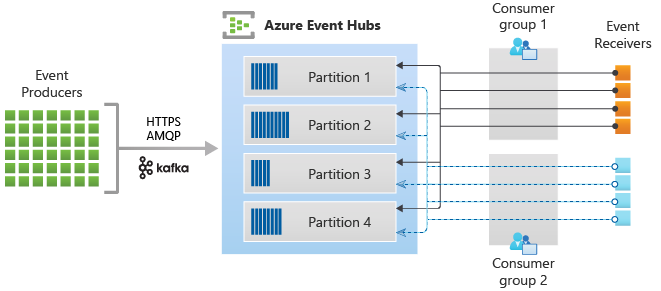

Event Hubs contains the following key components:

- Event producers: Any entity that sends data to an event hub. Event publishers can publish events using HTTPS, AMQP 1.0, or Apache Kafka (1.0 and above).

- Partitions: Each consumer only reads a specific subset, or partition, of the message stream.

- Consumer groups: A view (state, position, or offset) of an entire event hub. Consumer groups let each consuming application have its own view of the event stream. Each group reads the stream independently, at its own pace and with its own offsets.

- Throughput units, processing units, or capacity units: Pre-purchased units of capacity that govern the throughput capacity of Event Hubs (throughput units in the standard tier, processing units in the premium tier, capacity units in the dedicated tier).

- Event receivers: Any entity that reads event data from an event hub. All Event Hubs consumers connect through an AMQP 1.0 session, and the service delivers events through the session as they become available. All Kafka consumers connect using version 1.0 or later of the Kafka protocol.
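
The interplay between partitions and consumer groups can be sketched with a small in-memory model. This is not the Event Hubs SDK: `ToyEventHub` and its methods are hypothetical, and the SHA-256 based key hashing is a stand-in for whatever hashing the service applies internally. The sketch shows two things only: events with the same partition key land in the same partition, and each consumer group keeps its own offset per partition.

```python
from __future__ import annotations

import hashlib
from collections import defaultdict


class ToyEventHub:
    """In-memory model of partitions and consumer groups (hypothetical)."""

    def __init__(self, partition_count: int) -> None:
        self.partitions: list[list[str]] = [[] for _ in range(partition_count)]
        # offsets[group][partition] is the index of the next event that group will read.
        self.offsets: dict[str, dict[int, int]] = defaultdict(lambda: defaultdict(int))

    def partition_for(self, partition_key: str) -> int:
        # Stable hash (an assumption of this sketch) so a key always maps to one partition.
        digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % len(self.partitions)

    def send(self, partition_key: str, body: str) -> int:
        """Append an event and return the partition it was written to."""
        index = self.partition_for(partition_key)
        self.partitions[index].append(body)
        return index

    def receive(self, group: str, partition: int, max_events: int = 10) -> list[str]:
        """Read the next events for one consumer group and advance only its offset."""
        start = self.offsets[group][partition]
        batch = self.partitions[partition][start:start + max_events]
        self.offsets[group][partition] = start + len(batch)
        return batch


hub = ToyEventHub(partition_count=4)
p = hub.send("device-1", "temp=20")
hub.send("device-1", "temp=21")  # same key, same partition, order preserved

print(hub.receive("dashboard", p))               # reads both events
print(hub.receive("archiver", p, max_events=1))  # independent offset, reads the first event
print(hub.receive("dashboard", p))               # nothing new for this group
```

Each group progresses at its own rate, which is why a slow archiving job does not hold back a live dashboard reading the same stream.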

The architecture for Event Hubs' stream processing is depicted in the following figure:

Why Choose Event Hubs?

Instead of managing infrastructure, concentrate on getting insights from your data. Create real-time big data pipelines to address immediate business concerns.

Simple

Construct real-time data pipelines with a few clicks. Integrate seamlessly with Azure data services to find insights more quickly.

Secure

Protect your real-time data. Event Hubs is certified by CSA STAR, ISO, SOC, GxP, HIPAA, HITRUST, and PCI.

Scalable

Pay only for what you use by dynamically adjusting throughput based on your consumption requirements.

Open

With support for common protocols like AMQP, HTTPS, and Apache Kafka, you can ingest data from anywhere and develop across platforms.

Features of Microsoft Azure Event Hubs

Ingest Millions of Events Per Second

Continuously ingest data from millions of sources with low latency and configurable time retention.

Enable Real-Time and Micro-Batch Processing Concurrently

Utilize Event Hubs Capture to easily transmit data to Blob storage or Data Lake storage for long-term archival or micro-batch processing.

Get a Managed Service With an Elastic Scale

Scale easily from streaming megabytes of data to terabytes while keeping control over when and how much to scale.

Easily Connect With the Apache Kafka Ecosystem

With Azure Event Hubs for Apache Kafka, you can easily link Event Hubs with your Kafka apps and clients.

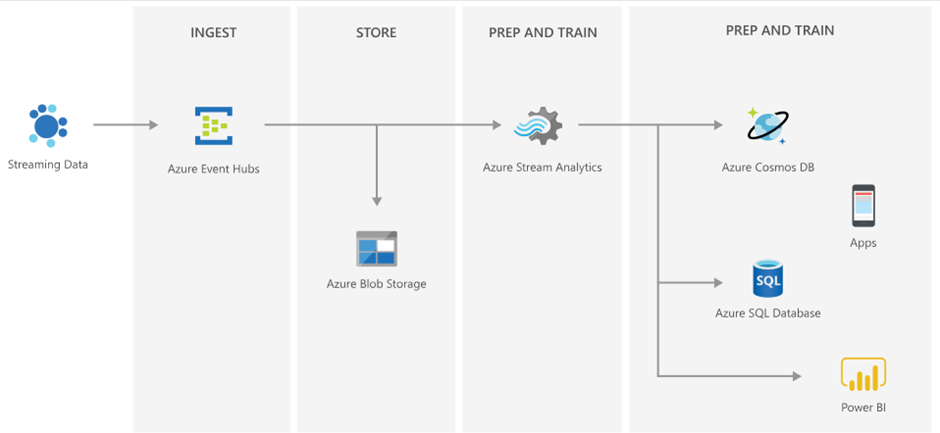

Build a Serverless Streaming Solution

Create a serverless streaming solution from beginning to end by natively integrating with Stream Analytics.

Ingest Events on Azure Stack Hub and Realize Hybrid Cloud Solutions

Ingest and process data locally at large scale on your Azure Stack Hub to implement hybrid cloud architectures, then use Azure services to further analyze, visualize, or store your data.

Serverless Streaming With Event Hubs

With Event Hubs and Stream Analytics, create a complete serverless streaming infrastructure.

Conclusion

Azure Event Hubs is a big data streaming platform and event ingestion service. It can receive and process millions of events per second. Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters. Event Hubs is a fully managed, real-time data ingestion service that’s simple, trusted, and scalable. Stream millions of events per second from any source to build dynamic data pipelines and immediately respond to business challenges. Keep processing data during emergencies using the geo-disaster recovery and geo-replication features.
