The Delegated Chain of Thought Architecture

The D-CoT architecture decouples reasoning from execution in LLM systems by centralizing reasoning in a "modulith" and delegating execution tasks to specialized modules.


This article introduces the Delegated Chain of Thought (D-CoT) Architecture, a novel framework for large language models (LLMs) that decouples reasoning from execution. The architecture centralizes reasoning in a "modulith" model while delegating execution tasks to smaller, specialized models.

We are collaborating with AI engineers to create tools that software engineers can easily use. Merging these two disciplines is essential to mastering these tools and integrating them effectively into information systems. This approach draws heavily on software architecture analogies and focuses on integrating advanced LLM techniques, such as Chain-of-Thought (CoT) prompting, ReAct, Toolformer, and modular AI design principles.

By embedding these techniques into specific components of the architecture, D-CoT aims to achieve modularity, scalability, and cost efficiency while addressing challenges like error propagation and computational inefficiency.

Introduction

Large language models (LLMs) have demonstrated remarkable capabilities in reasoning and problem-solving through techniques such as Chain of Thought (CoT) prompting [1]. However, as tasks grow more complex, traditional CoT approaches face challenges that are analogous to those encountered in software architecture evolution.

Historically, the architecture of information systems has evolved to solve new problems arising from increasing user demands and system complexity [2]. This evolution often relied on hardware improvements but also required fundamental changes in how systems were structured — moving from monolithic designs to modular, scalable architectures like microservices.

In a similar vein, LLM systems are undergoing a rapid transformation. Changes that took software architecture roughly 20 years to work through are now playing out within a few months or years.

Despite this rapid evolution, deploying LLMs in real information systems still raises critical issues that must be addressed.

Challenges in Deploying LLMs

Deploying LLMs in modern information systems reveals several limitations:

- Error propagation. Mistakes in intermediate reasoning steps can cascade into incorrect final outputs.

- Computational inefficiency. Reasoning and execution are often tightly coupled, resulting in resource-intensive operations. For instance, techniques such as CoT are most effective on larger models (around 100B parameters and above), and running models of that size comes at a significant cost [3].

- Lack of modularity. Monolithic architectures are often unable to adapt to specific business needs or optimize costs. Information systems are subject to rapid change, and it is essential to have an architecture that can adapt to these changes in a cost-effective manner [4].

These challenges mirror those faced by traditional software systems before the adoption of modern architectural principles like decoupling, modularity, scalability, and cost-efficiency.

Background and Related Work

To contextualize our architecture within the current landscape of LLM research, we review existing techniques and frameworks that have inspired our approach.

Chain of Thought Prompting

Chain of Thought (CoT) prompting enables LLMs to reason step-by-step by generating intermediate reasoning steps before arriving at a final answer [1][12]. Variants such as Tree-of-Thoughts extend this idea by exploring multiple reasoning paths in parallel [5].

While CoT has significantly improved performance on reasoning tasks, its monolithic nature often couples reasoning with execution (e.g., tool usage or external API calls). This tight coupling introduces challenges:

- It limits flexibility by making it difficult to evolve specific components independently.

- It increases computational costs by requiring the same model to handle both reasoning and execution tasks.

These issues echo architectural challenges in software systems where tightly coupled components hinder scalability and maintainability [6].

Multi-Agent Systems

Multi-agent systems (MAS) decompose tasks into subtasks handled by specialized agents. This approach has been adapted for LLMs through agent-based architectures where each agent focuses on specific roles (e.g., retrieval, synthesis). Recent notable works include:

- Toolformer. Trains LLMs to decide when and how to use external tools like calculators or APIs autonomously [7].

- ReAct. Combines reasoning and acting by enabling LLMs to dynamically interact with tools during problem-solving [8].

Both approaches explore modularity but differ in their focus:

- Toolformer emphasizes tool usage for enhancing task-specific performance while maintaining efficiency.

- ReAct demonstrates how interleaving reasoning with actions can improve interpretability and adaptability across diverse tasks.

Interestingly, ReAct highlights efficiency gains through fine-tuning smaller models:

"PaLM-8B fine-tuned ReAct outperforms all PaLM-62B prompting methods, and PaLM-62B fine-tuned ReAct outperforms all PaLM-540B prompting methods."

This suggests that smaller models can achieve competitive performance when specialized for specific roles — a key principle in our proposed architecture.

While MAS improve modularity, they often lack a centralized framework for coordinating subtasks effectively.

Reasoning Techniques

This section complements the previous one by focusing on frameworks that enhance reasoning workflows within modular AI architectures:

ReWOO (Reasoning Without Observation)

Decouples reasoning from observation by pre-planning reasoning steps before executing external actions. This approach reduces computational overhead and improves robustness [9].

Graph of Thoughts (GoT)

Introduces a graph-based structure for modular reasoning workflows where multiple paths are explored simultaneously before consolidating results [10].

These techniques emphasize decoupling components for efficiency but do not explicitly address cost optimization or draw parallels with software architecture principles — an area where our proposal adds value.

Delegated Chain of Thought Architecture

This concept originates from foundational principles in software architecture, particularly the necessity of reducing coupling between distinct responsibilities while accepting certain trade-offs. The primary goal is to isolate responsibilities effectively, ensuring flexibility and maintainability in the system.

A useful comparison can be drawn with Hexagonal Architecture, where the core logic (domain) is isolated, and interactions with external systems (e.g., databases, APIs) are managed through ports and adapters [14]. This approach increases architectural complexity but provides a clear separation of concerns, enabling better scalability, testability, and adaptability to change.

Core Concept

At its core, D-CoT consists of two major components:

A Central Reasoning Model ("Modulith")

- Task decomposition. Breaks down complex queries into manageable subtasks.

- Coordination. Assigns subtasks to specialized models or modules based on their capabilities.

- Reasoning. Synthesizes intermediate results into a coherent and accurate response.

Specialized Execution Modules ("Microservices")

- Handle domain-specific computations (e.g., financial modeling, medical diagnostics, text-to-SQL, RAG).

- Perform external tool usage or API calls for retrieving data or performing calculations.

- Validate and verify intermediate results before passing them back to the central reasoning model.

This modular design mirrors the evolution of software architectures from monolithic systems to modular microservices while introducing a central "modulith" that maintains domain logic and orchestrates specialized tasks. By decoupling reasoning from execution, D-CoT achieves modularity without sacrificing centralized control over complex workflows.
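The split between the two components can be made concrete with a minimal sketch. Everything named here (`Subtask`, `Modulith`, `fake_decompose`, the `"sql"` and `"api"` capability keys) is hypothetical and introduced only for illustration; a real modulith would call an LLM where this sketch uses plain functions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Subtask:
    capability: str  # which kind of execution module should handle it
    payload: str     # the instruction or data handed to that module


# An execution module is modeled as a plain function: payload in, result out.
ExecutionModule = Callable[[str], str]


class Modulith:
    """Central reasoning model: decomposes, delegates, and synthesizes."""

    def __init__(self, modules: Dict[str, ExecutionModule],
                 decompose: Callable[[str], List[Subtask]]):
        self.modules = modules
        self.decompose = decompose  # stand-in for LLM-driven task decomposition

    def answer(self, query: str) -> str:
        subtasks = self.decompose(query)
        # Execution is delegated; the modulith never runs the task itself.
        results = [self.modules[t.capability](t.payload) for t in subtasks]
        return " | ".join(results)  # stand-in for LLM-driven synthesis


def fake_decompose(query: str) -> List[Subtask]:
    # Assumption: a fixed decomposition replaces the reasoning model here.
    return [Subtask("sql", "us_gdp_growth"), Subtask("api", "global_gdp_growth")]


modulith = Modulith(
    modules={"sql": lambda p: f"sql:{p}", "api": lambda p: f"api:{p}"},
    decompose=fake_decompose,
)
print(modulith.answer("Compare U.S. and global GDP growth"))
```

Because the modulith only sees modules through a common call signature, swapping the `"sql"` module for another implementation would not require touching the reasoning code.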

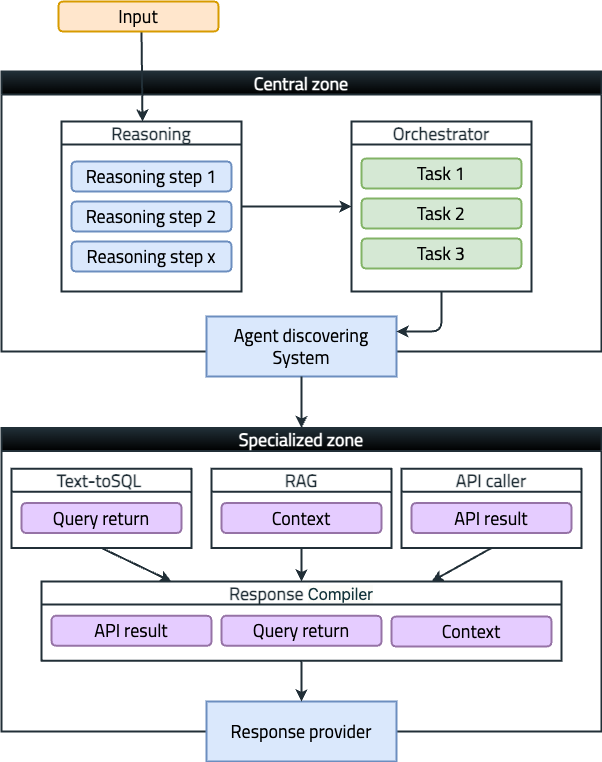

Workflow and Components

The Delegated Chain of Thought (D-CoT) architecture processes queries by combining centralized reasoning with specialized execution.

Components

1. Input. The entry point where the user submits a query (e.g., "What is the GDP growth rate of the U.S. in 2023 compared to the global average?"). This query is passed to the central reasoning model for processing.

2. Central zone.

- Reasoning. The central reasoning model performs step-by-step analysis to break down the query into manageable subtasks. These steps include understanding the query, identifying required data, and creating logical subtasks.

- Orchestrator. Coordinates task execution by assigning subtasks to appropriate specialized modules. It ensures efficient delegation and tracks task progress.

3. Agent discovering system. This component dynamically identifies and connects to available specialized modules in the system. It ensures that the most suitable module is selected for each subtask, enhancing flexibility and scalability. This could be compared to service discovery in microservices architecture [11].

4. Specialized zone.

- Text-to-SQL. Executes database queries to retrieve structured data (e.g., querying a database for U.S. GDP growth rates).

- API caller. Interacts with external APIs to fetch specific information (e.g., retrieving global GDP growth rates from an economic API).

- Retrieval-augmented generation (RAG). Retrieves additional contextual information from knowledge bases or documents to support reasoning.

5. Response compiler. Aggregates intermediate results from specialized modules, verifies their accuracy, and synthesizes them into a coherent final response.

6. Response provider. Delivers the final output back to the user in a clear and formatted manner (e.g., "The U.S. GDP growth rate in 2023 was below the global average by 0.9 percentage points.").

Workflow

The D-CoT workflow begins when a user submits a query through the input component. The central reasoning model decomposes the query into subtasks, which are delegated by the orchestrator to specialized modules via the Agent Discovering System.

Each module processes its assigned task independently (e.g., Text-to-SQL retrieves database results, API Caller fetches external data, RAG gathers context). The results are returned to the Response Compiler, which aggregates and formats them into a final response. This response is then delivered to the user through the Response Provider. The workflow ensures modularity, scalability, and efficient task execution.
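As a rough illustration of this workflow, the sketch below wires the components together as plain functions. The function names, the `REGISTRY` dictionary, and the numeric values are all assumptions made for the example; in particular, the growth figures are placeholders, not real statistics.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical registry of specialized modules, keyed by capability.
# The returned numbers are placeholders, not real economic data.
REGISTRY: Dict[str, Callable[[str], float]] = {
    "text_to_sql": lambda task: 2.5,  # stands in for a database query
    "api_caller": lambda task: 3.4,   # stands in for an external API call
}


def reason(query: str) -> List[Tuple[str, str]]:
    """Central reasoning: split the query into (capability, subtask) pairs."""
    return [("text_to_sql", "U.S. GDP growth 2023"),
            ("api_caller", "global GDP growth 2023")]


def discover(capability: str) -> Callable[[str], float]:
    """Agent discovering system: find a module able to handle the capability."""
    return REGISTRY[capability]


def orchestrate(subtasks: List[Tuple[str, str]]) -> Dict[str, float]:
    """Orchestrator: delegate each subtask and collect results by capability."""
    return {cap: discover(cap)(task) for cap, task in subtasks}


def compile_response(results: Dict[str, float]) -> str:
    """Response compiler: check that results are complete, then synthesize."""
    missing = {"text_to_sql", "api_caller"} - set(results)
    if missing:
        raise ValueError(f"missing results from: {sorted(missing)}")
    gap = round(results["text_to_sql"] - results["api_caller"], 1)
    relation = "below" if gap < 0 else "above"
    return (f"U.S. GDP growth was {relation} the global average "
            f"by {abs(gap)} percentage points.")


def respond(answer: str) -> str:
    """Response provider: final formatting for the user."""
    return f"Answer: {answer}"


def handle_query(query: str) -> str:
    return respond(compile_response(orchestrate(reason(query))))


print(handle_query("How did U.S. GDP growth compare to the global average?"))
```

Each stage has a single responsibility, so any one of them could be replaced (for example, `reason` by an actual LLM call) without changing the others.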

Key Features

The D-CoT architecture introduces several key features that address limitations in traditional LLM deployments:

Resilience

Errors or failures in execution modules are isolated from the central reasoning process, preventing cascading failures across the system.

Cost Efficiency

By delegating simpler tasks to smaller, specialized models, computational overhead is significantly reduced compared to relying solely on a large monolithic LLM for all operations.

Scalability

New specialized modules can be added seamlessly without requiring changes to the central modulith, enabling easy adaptation to new domains or functionalities.

Modularity With Central Control

While execution tasks are modularized into independent microservices, the central modulith retains control over task decomposition and coordination, ensuring consistency and coherence across workflows.

Explainability and Transparency

The architecture provides clear reasoning paths by separating task decomposition, execution, and aggregation into distinct layers. This modularity enhances explainability for users and developers alike.

Incorporating Techniques into the Architecture

The Delegated Chain of Thought (D-CoT) architecture is not just a theoretical framework; it is a practical system designed to integrate modern reasoning and execution techniques seamlessly. These methods, such as Chain-of-Thought (CoT), ReAct, Toolformer, and ReWOO, are not only compatible with the architecture but also validate its modular design by addressing key challenges like scalability, cost efficiency, and adaptability. By embedding these techniques into specific components of the architecture, D-CoT ensures that each part of the system operates efficiently while contributing to a cohesive whole.

Central Zone: Reasoning and Orchestration

At the heart of D-CoT lies the reasoning component, where advanced techniques guide task decomposition and decision-making:

- Chain-of-Thought (CoT) enables step-by-step reasoning, breaking down complex queries into manageable subtasks. This improves transparency and accuracy by ensuring that each reasoning step is logical and traceable.

- ReWOO (Reasoning Without Observation) enhances efficiency by pre-planning reasoning steps before execution. This decoupling avoids unnecessary interactions with external modules, reducing computational overhead.

The Orchestrator Component leverages dynamic frameworks to coordinate subtasks:

- ReAct allows the orchestrator to interleave reasoning with acting dynamically. This ensures that subtasks are delegated in real time based on their dependencies and context, making task delegation adaptive and efficient.
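The plan-first idea behind ReWOO can be sketched as follows: the whole plan is written before any tool runs, and later steps refer to earlier results through evidence variables such as `#E1`. This is a simplified illustration, not the ReWOO implementation; `make_plan`, `run_plan`, and the `lookup` and `compare` tools are hypothetical stand-ins.

```python
import re
from typing import Callable, Dict, List, Tuple

# A plan step: (evidence variable, tool name, tool input).
# The tool input may reference evidence produced by earlier steps.
Plan = List[Tuple[str, str, str]]


def make_plan(query: str) -> Plan:
    """Stand-in for the planner LLM: the full plan exists before execution."""
    return [
        ("#E1", "lookup", "U.S. GDP growth 2023"),
        ("#E2", "lookup", "global GDP growth 2023"),
        ("#E3", "compare", "#E1 vs #E2"),
    ]


def run_plan(plan: Plan, tools: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Worker: run steps in order, substituting earlier evidence into inputs."""
    evidence: Dict[str, str] = {}
    for var, tool, arg in plan:
        resolved = re.sub(r"#E\d+", lambda m: evidence[m.group(0)], arg)
        evidence[var] = tools[tool](resolved)
    return evidence


# Hypothetical tools that only echo their input, to keep the sketch runnable.
tools = {
    "lookup": lambda q: f"<{q}>",
    "compare": lambda q: f"compared({q})",
}

evidence = run_plan(make_plan("Compare U.S. and global GDP growth"), tools)
print(evidence["#E3"])
```

The planner is called once, and the worker needs no further reasoning calls between tool executions, which is where the reduction in overhead would come from.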

Specialized Zone: Execution Modules

In the Specialized Zone, modules handle domain-specific tasks independently while integrating seamlessly with the central reasoning model:

- The Text-to-SQL module applies Toolformer principles to autonomously decide when and how to query structured databases. This reduces reliance on the central model for execution-related decisions.

- The API caller module also uses Toolformer principles to determine when API calls are necessary and how to incorporate their results efficiently into the workflow.
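One way to picture the Toolformer-style behavior of these modules is a model that embeds tool calls directly in its generated text, which are then executed and filled in. The sketch below post-processes such text; the `[Tool(args)]` marker syntax, the `SQL` tool name, and `FAKE_DB` are assumptions for illustration, and the actual Toolformer approach interleaves calls during decoding rather than afterwards.

```python
import re
from typing import Callable, Dict

# Matches inline call markers of the assumed form [ToolName(arguments)].
CALL_PATTERN = re.compile(r"\[(\w+)\((.*?)\)\]")


def execute_inline_calls(text: str, tools: Dict[str, Callable[[str], str]]) -> str:
    """Run every known inline tool call and append its result to the marker."""

    def run(match: "re.Match[str]") -> str:
        name, arg = match.group(1), match.group(2)
        if name not in tools:
            return match.group(0)  # leave unknown calls untouched
        return f"[{name}({arg}) -> {tools[name](arg)}]"

    return CALL_PATTERN.sub(run, text)


# Hypothetical in-memory "database" standing in for a real SQL backend.
FAKE_DB = {"SELECT COUNT(*) FROM orders": "42"}
tools = {"SQL": lambda query: FAKE_DB.get(query, "NULL")}

generated = "There are [SQL(SELECT COUNT(*) FROM orders)] orders on record."
print(execute_inline_calls(generated, tools))
```

The decision of when to emit a call stays with the specialized model that produced the text, so the central model is not consulted for execution details.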

Challenges and Future Directions

While the D-CoT architecture improves modularity and efficiency, it inherits challenges common to distributed systems. Below, we outline key hurdles and propose actionable solutions to address them.

Challenges

Complex Coordination

Managing multiple specialized modules in the D-CoT architecture introduces inherent complexity. Fault tolerance becomes a critical challenge, as the failure of a single module (e.g., a database query module) could disrupt the entire workflow without proper fallback mechanisms.

Additionally, debugging errors across distributed modules requires robust logging and tracing systems to pinpoint root causes effectively. Dependency management between reasoning steps and execution modules must also be carefully orchestrated to avoid bottlenecks and ensure smooth task execution.

Latency

Delegating tasks across multiple modules introduces latency due to communication overhead and execution delays. For instance, API calls or database queries may add significant response times, particularly in scenarios where external services are involved. This latency can compound as the complexity of the workflow increases, making optimization strategies essential for maintaining system responsiveness.
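One possible mitigation, sketched below under simplifying assumptions, is to run independent subtasks concurrently and bound each with a timeout so that a slow module cannot stall the whole workflow. `call_module` and `delegate_all` are hypothetical names, and `asyncio.sleep` stands in for real network or execution delays.

```python
import asyncio
from typing import Dict


async def call_module(name: str, delay: float) -> str:
    """Stand-in for a remote module call; `delay` simulates its latency."""
    await asyncio.sleep(delay)
    return f"{name}: done"


async def delegate_all(subtasks: Dict[str, float], timeout: float) -> Dict[str, str]:
    """Run independent subtasks concurrently, each bounded by a timeout."""

    async def guarded(name: str, delay: float) -> str:
        try:
            return await asyncio.wait_for(call_module(name, delay), timeout)
        except asyncio.TimeoutError:
            return f"{name}: timed out"

    results = await asyncio.gather(*(guarded(n, d) for n, d in subtasks.items()))
    return dict(zip(subtasks, results))


# Simulated latencies in seconds; the RAG module is deliberately too slow.
results = asyncio.run(
    delegate_all({"text_to_sql": 0.01, "api_caller": 0.02, "rag": 0.5}, timeout=0.1)
)
print(results)
```

With concurrent delegation, total waiting time is driven by the slowest subtask (or the timeout) rather than by the sum of all delays, although this only helps when subtasks do not depend on one another.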

Large-Scale Systems

In large-scale deployments with numerous specialized agents, identifying which agent is available and capable of handling a specific task becomes increasingly complex. Dynamic module discovery mechanisms are necessary to maintain an up-to-date registry of available agents while ensuring that task routing remains efficient and low-latency. Without such mechanisms, scalability could be severely limited.
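A registry of this kind could, for instance, rely on heartbeats: modules announce themselves periodically, and entries that have not been refreshed recently are treated as unavailable. The `ModuleRegistry` class below is a hypothetical, in-memory sketch of that idea; a production system would more likely use an existing service discovery tool.

```python
import time
from typing import Dict, List, Optional, Tuple


class ModuleRegistry:
    """In-memory registry; entries expire if no heartbeat arrives within the TTL."""

    def __init__(self, ttl_seconds: float):
        self.ttl = ttl_seconds
        # module name -> (capability, time of last heartbeat)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def heartbeat(self, name: str, capability: str,
                  now: Optional[float] = None) -> None:
        """Register a module or refresh its last-seen time."""
        seen = time.monotonic() if now is None else now
        self._entries[name] = (capability, seen)

    def find(self, capability: str, now: Optional[float] = None) -> List[str]:
        """Return the names of fresh modules that offer the capability."""
        current = time.monotonic() if now is None else now
        return sorted(
            name for name, (cap, seen) in self._entries.items()
            if cap == capability and current - seen <= self.ttl
        )


# Explicit timestamps are passed so the example is deterministic.
registry = ModuleRegistry(ttl_seconds=30)
registry.heartbeat("sql-a", "text_to_sql", now=0)
registry.heartbeat("sql-b", "text_to_sql", now=100)
registry.heartbeat("rag-a", "rag", now=100)
print(registry.find("text_to_sql", now=110))  # sql-a's heartbeat is stale by now
```

The orchestrator would query `find` before routing a subtask, so modules that stopped sending heartbeats drop out of routing without manual intervention.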

How This Fits the Architecture

These challenges are not unique to D-CoT; they are common to distributed systems, where centralized control must be balanced against modular execution. Techniques such as dynamic service discovery, retry logic for fault tolerance, and caching for latency reduction can help D-CoT maintain its modularity and scalability while ensuring robust performance.
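Retry logic and caching can be illustrated with a short sketch. The helper names (`call_with_retry`, `CachedModule`, `flaky`) and the default parameters are assumptions chosen for the example, not part of any specific framework.

```python
import time
from typing import Callable, Dict


def call_with_retry(module: Callable[[str], str], payload: str,
                    retries: int = 3, base_delay: float = 0.01,
                    fallback: str = "unavailable") -> str:
    """Call a module, retrying with exponential backoff, then fall back."""
    for attempt in range(retries):
        try:
            return module(payload)
        except Exception:  # broad on purpose in this sketch; narrow it in practice
            if attempt < retries - 1:
                time.sleep(base_delay * 2 ** attempt)
    return fallback


class CachedModule:
    """Wraps a module so repeated payloads are served from memory."""

    def __init__(self, module: Callable[[str], str]):
        self.module = module
        self.cache: Dict[str, str] = {}
        self.calls = 0  # number of times the underlying module was invoked

    def __call__(self, payload: str) -> str:
        if payload not in self.cache:
            self.calls += 1
            self.cache[payload] = self.module(payload)  # only successes are cached
        return self.cache[payload]


attempts = {"n": 0}


def flaky(payload: str) -> str:
    """Hypothetical module that fails twice before succeeding."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("temporary failure")
    return f"result for {payload}"


def always_down(payload: str) -> str:
    raise ConnectionError("module offline")


cached = CachedModule(flaky)
first = call_with_retry(cached, "q1")    # succeeds on the third attempt
second = call_with_retry(cached, "q1")   # served from the cache
degraded = call_with_retry(always_down, "q2")
print(first, second, degraded, cached.calls)
```

Returning a fallback value instead of raising lets the central reasoning model decide how to proceed with partial results, which is one way to keep a single failing module from disrupting the entire workflow.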

Conclusion

The Delegated Chain of Thought (D-CoT) architecture represents a significant step forward in addressing the limitations of traditional LLM systems by decoupling reasoning from execution. By centralizing reasoning in a modulith while leveraging microservice-inspired specialized modules, D-CoT achieves modularity, scalability, and cost efficiency.

The incorporation of cutting-edge techniques such as Chain-of-Thought prompting for reasoning workflows and Toolformer for autonomous task execution demonstrates how state-of-the-art advancements can be operationalized within a cohesive framework. Moreover, drawing parallels with decentralized software architectures highlights how established engineering principles can inform AI system design.

However, like any distributed system, D-CoT introduces challenges such as increased complexity in coordination, latency due to task delegation, and fault tolerance across modules. Addressing these trade-offs will require further exploration into dynamic service discovery mechanisms, reinforcement learning for task optimization, and robust error recovery patterns inspired by microservices ecosystems.

Future work should focus on validating D-CoT through real-world implementations and benchmarks to assess its performance under varying workloads. Additionally, exploring hybrid orchestration models that balance centralized control with distributed execution could further enhance scalability and resilience.

In conclusion, D-CoT offers a promising blueprint for deploying LLMs in modern information systems by combining modular design principles with advanced AI techniques. As AI systems continue to evolve rapidly, architectures like D-CoT will play a pivotal role in ensuring their adaptability to increasingly complex tasks and environments.

References

- Wei, J., Wang, X., Schuurmans, D., Bosma, M., Ichter, B., Xia, F., Chi, E. H., Le, Q. V., & Zhou, D. (2022). Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. arXiv preprint arXiv:2201.11903. Retrieved from https://arxiv.org/abs/2201.11903

- DZone (2024). Evolution of Software Architecture: From Monoliths to Microservices and Beyond. Retrieved February 17, 2025, from https://dzone.com/articles/evolution-of-software-architecture-from-monoliths

- Kojima, T., Gu, S. S., Reid, M., Matsuo, Y., & Iwasawa, Y. (2022). Large Language Models are Zero-Shot Reasoners. arXiv preprint arXiv:2205.11916. Retrieved from https://arxiv.org/abs/2205.11916

- DZone (2024). Architecture Style: Modulith vs Microservices. Retrieved February 17, 2025, from https://dzone.com/articles/architecture-style-modulith-vs-microservices

- Yao, S., Yu, D., Zhao, J., Shafran, I., Griffiths, T. L., Cao, Y., & Narasimhan, K. (2023). Tree of Thoughts: Deliberate Problem Solving with Large Language Models. arXiv preprint arXiv:2305.10601. Retrieved from https://arxiv.org/abs/2305.10601

- Harvard Business School (2017). The Impact of Modular Architectures on System Scalability and Maintainability (Working Paper No. 17-078). Retrieved from https://www.hbs.edu/ris/Publication%20Files/17-078_caaa9a9c-74ac-4eff-b68e-7090ed06cb81.pdf

- Schick, T., Dwivedi-Yu, J., Dessì, R., Raileanu, R., Lomeli, M., Zettlemoyer, L., Cancedda, N., & Scialom, T. (2023). Toolformer: Language Models Can Teach Themselves to Use Tools. arXiv preprint arXiv:2302.04761. Retrieved from https://arxiv.org/abs/2302.04761

- Yao, S., Zhao, J., Yu, D., Du, N., Shafran, I., Narasimhan, K., & Cao, Y. (2022). ReAct: Synergizing Reasoning and Acting in Language Models. arXiv preprint arXiv:2210.03629. Retrieved from https://arxiv.org/abs/2210.03629

- Xu, B., Peng, Z., Lei, B., Mukherjee, S., Liu, Y., & Xu, D. (2023). ReWOO: Decoupling Reasoning from Observations for Efficient Augmented Language Models. arXiv preprint arXiv:2305.18323. Retrieved from https://arxiv.org/abs/2305.18323

- Besta, M., Blach, N., Kubicek, A., Gerstenberger, R., et al. (2023). Graph of Thoughts: Solving Elaborate Problems with Large Language Models. arXiv preprint arXiv:2308.09687. Retrieved from https://arxiv.org/abs/2308.09687

- Baeldung. (n.d.). Service discovery in microservices. Retrieved February 17, 2025 from https://www.baeldung.com/cs/service-discovery-microservices

- DZone (2024). Chain-of-Thought Prompting: A Comprehensive Analysis of Reasoning Techniques in Large Language Models. Retrieved February 17, 2025 from https://dzone.com/articles/chain-of-thought-prompting

- DZone (2024). Architecture Style: Modulith vs Microservices. Retrieved February 17, 2025, from https://dzone.com/articles/architecture-style-modulith-vs-microservices

- Cockburn, A. (2005). Hexagonal Architecture. Retrieved February 17, 2025, from https://alistair.cockburn.us/hexagonal-architecture/
