The Role of AI Ethics: Balancing Innovation with Social Responsibility

Artificial Intelligence represents a rapidly expanding domain; it’s crucial to acknowledge the ethical dilemmas that AI poses.

Artificial Intelligence (AI) represents a rapidly expanding domain characterized by its swiftly evolving technologies and methodologies. Its transformative potential spans various sectors of the economy and society. Nonetheless, it’s crucial to acknowledge the ethical dilemmas that AI poses. Companies at the forefront of AI development, alongside businesses, communities, administrations, and individuals incorporating it into their daily lives, must remain mindful of these issues.

The Primary Domains of Artificial Intelligence

AI can be categorized into various domains, each with unique attributes tailored to achieve specific goals in particular applications. These objectives and applications stem from the technical capabilities and specific challenges inherent in the respective domains, addressing diverse use cases.

Machine learning (ML) is a subset of AI that enables machines to learn from data without being explicitly programmed for each task. It is applied across a diverse array of use cases, such as:

- Medical analysis

- Customer retention modeling

- Spam identification

- Product suggestion

- Fraud detection

- Automotive: Assisted navigation…
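
As a minimal sketch of what "learning without direct programming" can look like, the Python example below trains a tiny naive Bayes spam filter from labeled messages instead of hand-written rules. The training messages, the labels, and the function names `train` and `predict` are hypothetical and invented for illustration; a real spam filter would need far more data and evaluation.

```python
import math
from collections import Counter

def train(examples):
    """Count word frequencies per label from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts

def predict(model, text):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, label_counts = model
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    total_examples = sum(label_counts.values())
    scores = {}
    for label in word_counts:
        total_words = sum(word_counts[label].values())
        # Start from the prior: how often this label appears in the training data.
        score = math.log(label_counts[label] / total_examples)
        for word in text.lower().split():
            # Laplace (add-one) smoothing so unseen words do not zero out the score.
            score += math.log(
                (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            )
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical toy training data, invented for illustration only.
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the project team", "ham"),
]

model = train(TRAINING_DATA)
print(predict(model, "free prize money"))
print(predict(model, "project meeting monday"))
```

No rule such as "messages containing 'free' are spam" is written anywhere in the code: the behavior comes entirely from the word counts learned from the examples.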

Deep learning, an extension of machine learning, builds complex hierarchical models using artificial neural networks arranged in layers. These interconnected networks, whose nodes are loosely modeled on neurons in the human brain, are engineered to identify patterns. Deep learning is instrumental in sophisticated applications, including:

- Medical analysis

- Image recognition

- Voice recognition

- Language processing

- Robotics

- Cybersecurity

- Bioinformatics …

Computer vision is a branch of AI that enables machines to perceive their surroundings, process images, and interpret videos. Its application spans a diverse array of use cases, including object identification, motion sensing, and independent navigation.

Conversational artificial intelligence enables machines to communicate and interact with humans in natural language. This technology proves its efficacy in various applications like chatbots, virtual assistants, customer support interfaces, and recommendation systems, to name a few.

Robotics enables machines to move and act in the real world, either autonomously or in interaction with humans. Robotics is used in manufacturing, logistics, food processing, machine loading and unloading, and medicine.

The Special Case of Generative AI

Generative artificial intelligence (Gen AI) is a type of artificial intelligence that generates new data, such as images, text, music, or code. It relies on machine learning algorithms to learn from existing data and generate new data.

Despite being an emerging technology, it has made impressive strides since the worldwide release of OpenAI's ChatGPT. Since then, players such as Mistral AI and Hugging Face have appeared on the scene, as have big tech companies such as Google (Bard AI), Meta (LLaMA), IBM (WatsonX), Salesforce (Einstein GPT), as well as Microsoft, Oracle, and AWS, to name but a few.

The fields of application of generative AI are numerous:

- Content creation: Create images, videos, or music, for example for animated films, video games, or photos.

- Machine translation: Translate languages more accurately and fluently than traditional methods.

- Research and development: Generate new ideas and concepts and test new hypotheses.

- Improve customer interactions with optimized chat and search functionalities.

- Explore large amounts of unstructured data via conversational interfaces and summaries.

- Support the sales force, for example, by flagging risks, recommending next interactions, or identifying the optimal customer interaction based on conversation history.

- Identify production errors, anomalies, and defects from images.

- Write code and documentation to speed up development.

- Summarize and highlight changes in large volumes of regulatory documents ...

Alan Turing vs Sam Altman

Generative AI vs. Traditional AI

The concepts of traditional AI and generative AI highlight distinct perspectives and consequences in the field of artificial intelligence. Their synergy attests to the ever-changing trajectory and impact of these technological advances.

Traditional artificial intelligence, often referred to as narrow or weak AI, focuses on the execution of specific tasks. It refers to systems designed to react to a specific set of inputs. These systems have the ability to learn from data and help make decisions or predictions based on that data.

To understand this, let's think of a machine translation system that uses predefined rules to translate texts from one language to another without creating new linguistic structures. This is traditional AI: It presents itself as a proficient entity capable of executing particular tasks based on a predefined set of rules.

Other well-known examples of traditional AI are Apple's Siri and Netflix's recommendation engine. Perhaps the best-known is Google's search algorithm, which handles over 5 billion searches a day. These AIs have been trained to follow specific rules, perform a particular task, and do it well, but they don't generate anything new.

Generative AI, on the other hand, can be considered the next generation of artificial intelligence. It’s a form of AI capable of generating something new based on the very large body of data on which it has been trained.

For example, if I show a generative model a set of images of cars and trucks, the model must now fully "understand" which features belong to these two classes and how they can be used to generate similar images. The same goes for texts by Victor Hugo or Martin Luther King, for example.

Generative AI models are trained on datasets and learn their underlying patterns to generate new data reflecting the training sets.
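
To make the idea of learning patterns and then generating new data concrete, here is a deliberately tiny sketch in Python: a bigram (Markov chain) text generator. It is far simpler than the neural models behind tools like ChatGPT and is not how they are implemented; it only illustrates the principle of producing new sequences from patterns observed in training data. The corpus and the function names `train_bigram_model` and `generate` are hypothetical.

```python
import random
from collections import defaultdict

def train_bigram_model(corpus):
    """Record, for every word, the words that follow it in the corpus."""
    transitions = defaultdict(list)
    words = corpus.split()
    for current_word, next_word in zip(words, words[1:]):
        transitions[current_word].append(next_word)
    return transitions

def generate(transitions, start_word, max_words, seed=None):
    """Build a new word sequence by sampling one learned follower at a time."""
    rng = random.Random(seed)
    output = [start_word]
    while len(output) < max_words:
        followers = transitions.get(output[-1])
        if not followers:
            break  # the last word was never followed by anything in training
        output.append(rng.choice(followers))
    return " ".join(output)

# Hypothetical toy corpus, invented for illustration only.
CORPUS = "the cat sat on the mat and the dog sat on the rug"

model = train_bigram_model(CORPUS)
print(generate(model, "the", max_words=8, seed=1))
```

Unlike the rule-based translation example above, nothing here is a predefined rule: the generator can produce word sequences that never appeared in the corpus, while every individual word pair it emits reflects the training data.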

AI Ethics

The growing incorporation of AI across all sectors has resulted in numerous use cases, each more pertinent and groundbreaking than the preceding one. These range from the personalization of business recommendations to the prediction of market trends to the implementation of advanced medical diagnostics. The most recent advancement lies in the vast capabilities of generative AI.

These exciting technological developments do, however, raise essential questions about the ethics of AI.

As we make the most of the benefits and opportunities offered by AI, it's crucial to proactively consider the ethical implications associated with its deployment. AI ethics is now at the heart of debates in companies and within governments alike, highlighting the need to ensure transparency, accountability, and fairness in the design, deployment, and use of these innovative technologies.

"There's a great phrase, written in the '70s: 'The definition of today's AI is a machine that can make a perfect chess move while the room is on fire.' It really speaks to the limitations of AI. In the next wave of AI research, if we want to make more helpful and useful machines, we've got to bring back the contextual understanding" —

Fei-Fei Li, AI Researcher and Professor, Stanford University

The ethical risks of AI are diverse. They include:

- Discrimination: AI systems can replicate the biases prevalent in society, resulting in discrimination against certain groups, including but not limited to specific ethnicities, genders, or religions.

- Opacity: AI systems can become increasingly complex and difficult to understand. This can lead to situations where humans are no longer able to explain the decisions made by machines.

- Malicious use: AI systems can be utilized for malicious intentions, including citizen surveillance, weaponization, cyberbullying, or disseminating misinformation.

- Breach of regulations: Deploying AI systems that do not comply with applicable rules exposes organizations to legal risk. The Artificial Intelligence Act (AI Act) sets out a risk-based European regulatory approach to AI. Its objectives are to guarantee the safety of AI systems on the European market while respecting fundamental rights and EU values, to strengthen governance and enforcement in order to foster investment and innovation in AI, and to promote a single market for legal, safe, and reliable AI applications that avoids fragmentation.

Other risks are also important to consider, such as data confidentiality, copyright issues, or the inclusion of intentional or unintentional biases. This last point will be the subject of a future article.

Certain ethical risks are particularly relevant to generative AI:

- Hallucinations: The term "hallucination" describes the behavior of a large language model (LLM) that generates information that turns out to be false. LLMs sometimes associate words, names, and ideas in ways that seem to make sense but don't. Their output can thus contradict logic or factual reality, or appear to be a chaotic mix of facts. Example from ChatGPT: "You can tell chicken eggs from cow eggs by their size and color; cow eggs are generally larger than chicken eggs."

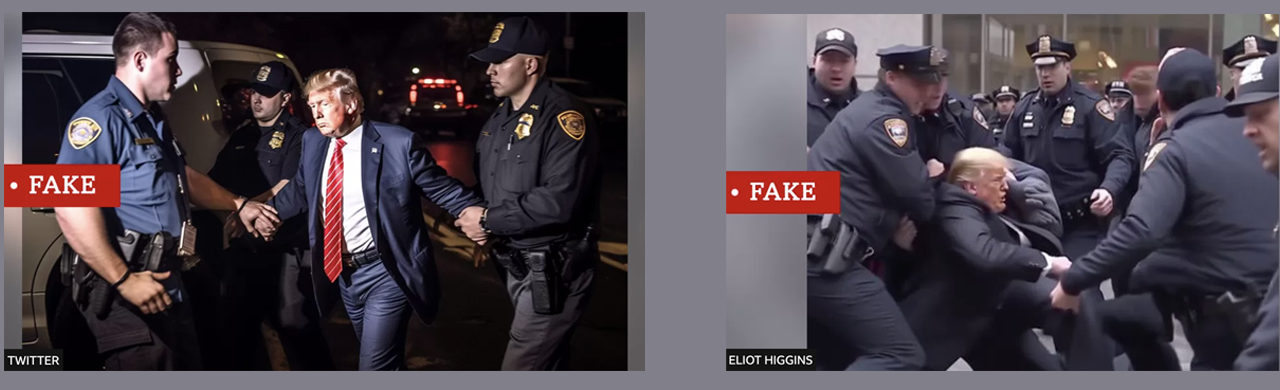

- Deepfakes: In this case, artificial intelligence is used to create image, audio, and video hoaxes and scams. Deepfakes can transform existing content, images, voice, or video by replacing one person with another. They can also create content from scratch featuring a person in a fictitious situation.

Source: bbc.com

An Ethical Approach To AI

Confronted with these risks, it's crucial for companies to adopt an ethical approach to AI. This means that companies must:

- Consider the ethical implications of their AI projects from the moment the company's AI strategy is defined, upstream of the development process.

- Implement measures to prevent ethical risks, such as training teams in AI ethics or setting up mechanisms to monitor and control AI systems.

- Be transparent about their AI practices and report on their efforts to comply with ethical principles.
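
One possible monitoring mechanism for the discrimination risk described earlier is to compare how often a system produces a favorable decision for each group. The Python sketch below computes per-group selection rates and the ratio between the lowest and highest rate. The decision log, the group names, and the function names are hypothetical, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment guidance, not a requirement stated in this article or in the AI Act.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Share of positive decisions per group from (group, approved) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive decision
    return min(rates.values()) / highest

def needs_review(decisions, threshold=0.8):
    """Flag the system for human review when the ratio falls below the threshold."""
    return disparate_impact_ratio(selection_rates(decisions)) < threshold

# Hypothetical decision log, invented for illustration only.
DECISIONS = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = selection_rates(DECISIONS)
print(rates)
print(disparate_impact_ratio(rates))
print(needs_review(DECISIONS))
```

A single ratio like this cannot establish that a system is fair. It is only a signal that a human should look more closely, which is why the sketch returns a review flag rather than a verdict.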

"Despite the growing awareness of the importance of ethical aspects in the development of artificial intelligence (AI) and the crucial role that AI professionals play in the economy and society, in 12 of the 14 countries for which data is available, less than 1% of the job offers published online in 2022 concerning professionals with AI skills mentioned aspects related to ethics in AI" —

Source: "OECD Skills Outlook 2023: Skills for a Resilient Green and Digital Transition" report.

Concrete Actions Taken by Companies

Businesses can implement a range of tangible measures to incorporate ethics into their AI development strategy. For example, they can:

- Create an AI ethics manifesto that outlines the ethical principles the company is dedicated to upholding.

- Establish protocols to oversee and regulate AI systems.

- Formalize the role of "Chief Artificial Intelligence Officer" (CAIO) to take on the growing responsibility that AI-related issues are placing on the company. Dividing this responsibility between the CDO and the CTO may become less feasible, particularly if companies aim to meet these challenges as efficiently as possible.

Conclusion

Integrating ethics into AI strategy is a major challenge for companies. By adopting an ethical approach, companies can help ensure that AI is used responsibly and for the benefit of society.

All experts in information technology, artificial intelligence, ESG, and especially ethics should (must?) help educate companies about the significance of incorporating ethics into their AI development strategy.

Among the actions to be taken to raise companies' awareness of AI ethics:

- Create educational resources, such as guides, articles, or training courses, to help companies understand the ethical challenges of AI.

- Organize events, such as conferences, meetups, or workshops, to debate the ethical challenges of AI and share best practices.

- Establish partnerships with organizations that share similar values, such as human rights advocacy groups or regulatory bodies, as well as schools and stakeholders from Big Tech who are receptive to these values.

I am convinced that these actions will contribute to making ethics an essential component of AI development strategy. What other measures do you think should be implemented?

Opinions expressed by DZone contributors are their own.
