Unlocking the Power of Search: Keywords, Similarity, and Semantics Explained

An overview of keyword, similarity, and semantic search techniques, providing insights into how each one works and guidance on when to use them effectively.


Delving Into Different Search Techniques

To set the context, let’s say we have a collection of texts about various technical topics and would like to look for information related to “Machine Learning.” We will now look at how Keyword Search, Similarity Search, and Semantic Search offer different levels of depth and understanding, from simple keyword matching to recognizing related concepts and contexts.

Let us first look at the standard code components used for the program.

1. Standard Code Components Used

A. Libraries Imported

```python
import os
import re

from whoosh.index import create_in
from whoosh.fields import Schema, TEXT
from whoosh.qparser import QueryParser
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity
from transformers import pipeline
import numpy as np
```

This block imports the following libraries:

- `os` for file system operations.
- `re` for regular expressions.
- `whoosh` for creating and managing a search index.
- `scikit-learn` for TF-IDF vectorization and similarity computation.
- `transformers` for using a deep learning model for feature extraction.
- `numpy` for numerical operations, specifically sorting.

B. Sample Document Initialization

```python
# Sample documents used for demonstrating all three search techniques
documents = [
    "Machine learning is a field of artificial intelligence that uses statistical techniques.",
    "Natural language processing (NLP) is a part of artificial intelligence that deals with the interaction between computers and humans using natural language.",
    "Deep learning models are a subset of machine learning algorithms that use neural networks with many layers.",
    "AI is transforming the world by automating tasks, providing insights through data analysis, and enabling new technologies like autonomous vehicles and advanced robotics.",
    "Natural language processing can be challenging due to the complexity and variability of human language.",
    "The application of machine learning in healthcare is revolutionizing the way diseases are diagnosed and treated.",
    "Autonomous vehicles rely heavily on AI and machine learning to navigate and make decisions.",
    "Speech recognition technology has advanced considerably thanks to deep learning models."
]
```

This block defines a list of sample documents covering various topics in artificial intelligence, machine learning, and natural language processing.

C. Highlight Function

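```python
def highlight_term(text, term):
    # Wrap every case-insensitive match of the term in ANSI codes (bold red).
    # re.escape makes characters such as "." or "+" in the term match literally.
    return re.sub(f"({re.escape(term)})", r'\033[1;31m\1\033[0m', text, flags=re.IGNORECASE)
```

This helper beautifies the output by highlighting the search term wherever it appears in the text, ignoring case. Escaping the term keeps characters in a query, such as "." or "+", from being interpreted as regular-expression syntax.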
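To see why escaping the search term matters, here is a minimal standalone sketch of the same highlighting idea. The `START` and `RESET` constants are names introduced here for illustration, and the sketch uses a replacement function instead of the `\1` backreference; otherwise it behaves like `highlight_term` with `re.escape` applied.

```python
import re

# Hypothetical constants: ANSI escape codes for bold red text and for reset
START = "\033[1;31m"
RESET = "\033[0m"


def highlight_term(text: str, term: str) -> str:
    """Wrap every case-insensitive occurrence of `term` in ANSI codes."""
    # re.escape makes '.', '+', '(' and similar characters match literally
    pattern = re.compile(re.escape(term), flags=re.IGNORECASE)
    # m.group(0) keeps the original casing of each match
    return pattern.sub(lambda m: f"{START}{m.group(0)}{RESET}", text)


if __name__ == "__main__":
    # Unescaped, "v1.0" would also match "v1x0" because '.' means "any character"
    print(highlight_term("v1x0 and v1.0", "v1.0"))
    print(highlight_term("Learn C++ today", "c++"))
```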

2. Keyword Search

Keyword search is a traditional method that matches search queries against exact or partial keywords found in the documents. It relies heavily on exact term matching and simple query operators (AND, OR, NOT).

A. How Keyword Search Works

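Since our search query is “Machine Learning,” the keyword search looks for exact term matches and returns only texts that contain the words “machine” and “learning.” Whoosh’s query parser lowercases the terms and, by default, combines them with AND, so both words must appear, though not necessarily side by side. Examples of texts that would be returned are “Machine learning is transforming many industries.” and “A course on machine learning was introduced recently.”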
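Under the hood, a keyword engine such as Whoosh answers these queries from an inverted index: a map from each term to the documents that contain it. The following is a minimal pure-Python sketch of that idea, not Whoosh's implementation. The tokenizer is a simplification (Whoosh's default analyzer also drops stop words, for example), and `DOCS` and the function names are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical sample corpus, for illustration only
DOCS = [
    "Machine learning uses statistics.",
    "Deep learning is a subset of machine learning.",
    "Speech recognition uses deep learning.",
]


def tokenize(text):
    """Lowercase and split into runs of word characters (simplified)."""
    return re.findall(r"\w+", text.lower())


def build_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in enumerate(docs):
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search_and(index, query):
    """Documents containing every query term."""
    postings = [index.get(term, set()) for term in tokenize(query)]
    return set.intersection(*postings) if postings else set()


def search_or(index, query):
    """Documents containing at least one query term."""
    postings = [index.get(term, set()) for term in tokenize(query)]
    return set().union(*postings)


def search_not(index, include, exclude):
    """Documents matching all `include` terms and none of the `exclude` terms."""
    return search_and(index, include) - search_or(index, exclude)


if __name__ == "__main__":
    idx = build_index(DOCS)
    print(search_and(idx, "Machine Learning"))     # {0, 1}
    print(search_or(idx, "machine speech"))        # {0, 1, 2}
    print(search_not(idx, "learning", "machine"))  # {2}
```

Note how document 1 matches the AND query even though "machine" and "learning" appear in it both together and apart: term matching does not care about word order.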

B. Let’s Examine the Code Behind the Keyword Search

```python
# Function for Keyword Search using Whoosh
def keyword_search(query_str):
    schema = Schema(content=TEXT(stored=True))
    if not os.path.exists("index"):
        os.mkdir("index")
    index = create_in("index", schema)
    writer = index.writer()
    for doc in documents:
        writer.add_document(content=doc)
    writer.commit()
    with index.searcher() as searcher:
        query = QueryParser("content", index.schema).parse(query_str)
        results = searcher.search(query)
        highlighted_results = [(highlight_term(result['content'], query_str), result.score) for result in results]
    return highlighted_results
```

I used the Whoosh library to perform the keyword search.

- `Schema` and `TEXT`: define the schema with a single field, `content`.
- `os.path.exists` and `os.mkdir`: check whether the `index` directory exists and create it if not.
- `create_in`: creates an index in the directory named `index`.
- `writer`: opens a writer to add documents to the index.
- `add_document`: adds documents to the index.
- `commit`: commits the changes to the index.
- `with index.searcher()`: opens a searcher to search the index.
- `QueryParser`: parses the query string.
- `searcher.search`: searches the index with the parsed query.
- `highlighted_results`: highlights the search term in the results and stores the results with their scores.
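Whoosh ranks the matches with its default scoring function, BM25F, a refinement of the TF-IDF idea that also accounts for document length. The sketch below implements the textbook Okapi BM25 formula in plain Python with the commonly used parameters k1 = 1.2 and b = 0.75. It is an illustration under those assumptions, not Whoosh's exact formula, so its numbers will not match Whoosh's scores; `DOCS` and `bm25_scores` are hypothetical names.

```python
import math
import re
from collections import Counter

# Hypothetical sample corpus, for illustration only
DOCS = [
    "Machine learning uses statistics.",
    "Deep learning is a subset of machine learning.",
    "Speech recognition uses deep learning.",
]


def tokenize(text):
    return re.findall(r"\w+", text.lower())


def bm25_scores(docs, query, k1=1.2, b=0.75):
    """Score every document against the query with Okapi BM25."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: number of documents containing each term
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            # Rare terms get a larger weight than common ones
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Longer-than-average documents are penalised via b
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


if __name__ == "__main__":
    for doc, score in zip(DOCS, bm25_scores(DOCS, "Machine Learning")):
        print(f"{score:.3f}  {doc}")
```

Documents containing both query words outrank the one that contains only "learning", which also appears in every document and therefore carries little weight.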

We will look at the output of the keyword search, along with that of the other search techniques, later in this article.

3. Similarity Search

This method finds texts similar to the search query by representing the query and each document as vectors of word features (here, TF-IDF weights) and comparing those vectors, so a document can score well even if it shares only some of the query's words.

A. How Similarity Search Works

Going back to the same search query, "Machine Learning," similarity search returns texts that share weighted terms with the query, ranked by how closely their word vectors align, such as "AI applications in healthcare use machine learning techniques" and "Predictive modeling often relies on machine learning."
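The vector comparison at the heart of similarity search is cosine similarity: the dot product of two vectors divided by the product of their lengths. The article's code uses TF-IDF weights; as a simplification, this sketch uses raw word counts so the comparison itself is easy to follow. `count_vector` and `cosine` are hypothetical helper names.

```python
import math
import re
from collections import Counter


def count_vector(text):
    """Bag-of-words vector: how often each lowercase word occurs."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity of two sparse vectors stored as Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty vector shares no words with anything
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    query = count_vector("Machine Learning")
    for doc in ["Machine learning uses statistics.",
                "Speech recognition uses deep learning.",
                "Autonomous vehicles need sensors."]:
        print(f"{cosine(query, count_vector(doc)):.3f}  {doc}")
```

This prints 0.707, 0.316 and 0.000: sharing one of the two query words still earns a partial score, while a text with no shared words scores zero no matter how related its topic is.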

B. Let’s Examine the Code Behind the Similarity Search

```python
# Function for Similarity Search using Scikit-learn
def similarity_search(query_str):
    vectorizer = TfidfVectorizer()
    tfidf_matrix = vectorizer.fit_transform(documents)
    query_vec = vectorizer.transform([query_str])
    similarity = cosine_similarity(query_vec, tfidf_matrix)
    similar_docs = similarity[0].argsort()[-3:][::-1]  # Top 3 similar documents
    similarities = similarity[0][similar_docs]
    highlighted_results = [(highlight_term(documents[i], query_str), similarities[idx]) for idx, i in enumerate(similar_docs)]
    return highlighted_results
```

I used the scikit-learn library to write a function that performs a similarity search.

- `TfidfVectorizer`: converts documents into TF-IDF features. TF-IDF (term frequency-inverse document frequency) weights a word by how often it appears in a document, scaled down by how many documents contain it.
- `fit_transform`: fits the vectorizer to the documents and transforms them into a TF-IDF matrix. The fit step learns the vocabulary (the unique words in the document list) and each word's IDF weight; the transform step computes term frequencies and applies those weights.
- `transform`: transforms the query string into a TF-IDF vector using the vocabulary and IDF weights learned during the fit step. Words that are not in the vocabulary are ignored.
- `cosine_similarity`: computes the cosine similarity between the query vector and each row of the TF-IDF matrix.
- `argsort()[-3:][::-1]`: gets the indices of the top 3 most similar documents in descending order of similarity. The top-3 cutoff is specific to this article; drop the `[-3:]` slice to return every document ranked by similarity.
- `highlighted_results`: highlights the search term in the results and stores them with their similarity scores.
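To make the fit/transform split concrete, here is a plain-Python sketch of TF-IDF ranking that follows scikit-learn's documented defaults for `TfidfVectorizer` (lowercasing, the default token pattern, `smooth_idf=True`, L2 normalization). Under those assumptions its scores should match the article's `similarity_search`, but treat that as something to verify; `DOCS` and the helper names are hypothetical. It also shows why a query made only of out-of-vocabulary words gets zero similarity with every document.

```python
import math
import re
from collections import Counter

# Hypothetical sample corpus, for illustration only
DOCS = [
    "Machine learning uses statistics.",
    "Deep learning is a subset of machine learning.",
    "Speech recognition uses deep learning.",
]

# Same as scikit-learn's default token_pattern: words of 2+ characters
TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")


def tokenize(text):
    return TOKEN_RE.findall(text.lower())


def fit_idf(docs):
    """'fit': learn the vocabulary and a smoothed IDF weight per word."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(tokenize(doc)))
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def transform(text, idf):
    """'transform': raw counts times IDF, scaled to unit length (L2 norm)."""
    counts = Counter(t for t in tokenize(text) if t in idf)  # unknown words dropped
    vec = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {t: v / norm for t, v in vec.items()} if norm else {}


def top_k(query, docs, k=3):
    """Rank documents by cosine similarity to the query and keep the best k."""
    idf = fit_idf(docs)
    q = transform(query, idf)
    doc_vecs = [transform(d, idf) for d in docs]
    # All vectors have unit length, so the dot product is the cosine similarity
    scores = [sum(w * dv.get(t, 0.0) for t, w in q.items()) for dv in doc_vecs]
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in ranked[:k]]


if __name__ == "__main__":
    print(top_k("Machine Learning", DOCS))
    print(top_k("Artificially Intelligent", DOCS))  # every score is 0
```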

4. Semantic Search

We now move to the most powerful of the three techniques. Semantic search compares embeddings, dense numerical representations of text produced by a language model, so it can return texts that relate to the searched concept even when the search term does not appear in them.

A. How Semantic Search Works

The same search query, "Machine Learning," when applied with semantic search, yields texts related to the concept of Machine Learning, such as "AI and data-driven decision-making are changing industries" and "Neural networks are a key component of many AI systems."
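The key difference from similarity search is that closeness is measured between embedding vectors rather than shared words. The toy sketch below uses invented three-dimensional vectors (a real model such as distilbert-base-uncased produces 768-dimensional vectors) to show that a sentence with no words in common with the query can still rank first. The vectors, the recipe sentence, and the helper names are all hypothetical.

```python
import math

# Invented 3-dimensional embeddings, for illustration only; a real model
# would compute these vectors from the text.
QUERY = ("Machine Learning", [0.9, 0.8, 0.1])
DOCS = [
    ("The recipe calls for two cups of flour.", [0.1, 0.0, 0.9]),
    ("Neural networks are a key component of many AI systems.", [0.8, 0.9, 0.2]),
]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rank(query_vec, docs):
    """Sort (text, vector) pairs by cosine similarity to the query vector."""
    scored = [(text, cosine(query_vec, vec)) for text, vec in docs]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for text, score in rank(QUERY[1], DOCS):
        print(f"{score:.2f}  {text}")
```

The ranking logic is the same cosine comparison as before; all of the "understanding" lives in how the model places texts in the vector space.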

B. Let’s Examine the Code Behind the Semantic Search

```python
# Function for Semantic Search using Transformers
def semantic_search(query_str):
    semantic_searcher = pipeline("feature-extraction", model="distilbert-base-uncased")
    # The pipeline returns one vector per token; [0][0] keeps the vector
    # of the first token ([CLS]) of the single input string
    query_embedding = semantic_searcher(query_str)[0][0]

    def get_similarity(query_embedding, doc_embedding):
        return cosine_similarity([query_embedding], [doc_embedding])[0][0]

    doc_embeddings = [semantic_searcher(doc)[0][0] for doc in documents]
    similarities = [get_similarity(query_embedding, embedding) for embedding in doc_embeddings]
    sorted_indices = np.argsort(similarities)[-3:][::-1]  # Top 3 similar documents
    highlighted_results = [(highlight_term(documents[i], query_str), similarities[i]) for i in sorted_indices]
    return highlighted_results
```

This function performs semantic search using the Hugging Face `transformers` library.

There is a lot going on in the `semantic_searcher = pipeline("feature-extraction", model="distilbert-base-uncased")` line:

- `pipeline`: the function imported from the `transformers` library that sets up various NLP tasks using pre-trained models.
- `feature-extraction`: the task the pipeline performs, converting text into numerical representations (embeddings) that can be used for various downstream tasks.
- `distilbert-base-uncased`: the pre-trained model used for this task, a smaller, faster version of BERT. "Uncased" means it was trained on lowercased English text, so it does not distinguish "Machine" from "machine."

The rest of the function works as follows:

- `query_embedding`: gets the embedding for the query string. The pipeline returns one vector per token, and `[0][0]` keeps the vector of the first token ([CLS]).
- `get_similarity`: a nested function that computes the cosine similarity between the query embedding and a document embedding.
- `doc_embeddings`: gets the first-token embedding for every document.
- `similarities`: computes the similarities between the query embedding and all document embeddings.
- `argsort()[-3:][::-1]`: gets the indices of the top 3 most similar documents in descending order of similarity.
- `highlighted_results`: highlights the search term in the results and stores them with their similarity scores.
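Because `[0][0]` keeps only the first token's vector, and distilbert-base-uncased was not fine-tuned to produce sentence embeddings, another option often used is mean pooling: averaging the vectors of all tokens. The sketch below works on a nested list shaped like the pipeline's output for a single string (assumed to be `[1][number_of_tokens][hidden_size]`, which includes the special [CLS] and [SEP] tokens) and uses made-up two-dimensional values. The function names are hypothetical; replacing `semantic_searcher(text)[0][0]` with `mean_pool(semantic_searcher(text))` would be the corresponding change, but whether it improves ranking on these documents is untested here.

```python
def first_token(features):
    """What semantic_search does: the vector of the first token ([CLS])."""
    return features[0][0]


def mean_pool(features):
    """Average every token vector of the single input into one vector."""
    tokens = features[0]
    dim = len(tokens[0])
    return [sum(token[i] for token in tokens) / len(tokens) for i in range(dim)]


if __name__ == "__main__":
    # Made-up output for one input string: 3 tokens, 2 dimensions each
    features = [[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]]
    print(first_token(features))  # [1.0, 0.0]
    print(mean_pool(features))    # [0.5, 0.5]
```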

Output

Now that we have covered the three search techniques and set up the documents to search, let us look at the output each technique produces for a search query.

# Main execution

if __name__ == "__main__":

query = input("Enter your search term: ")

print("\nKeyword Search Results:")

keyword_results = keyword_search(query)

for result, score in keyword_results:

print(f"{result} (Score: {score:.2f})")

print("\nSimilarity Search Results:")

similarity_results = similarity_search(query)

for result, similarity in similarity_results:

print(f"{result} (Similarity: {similarity * 100:.2f}%)")

print("\nSemantic Search Results:")

semantic_results = semantic_search(query)

for result, similarity in semantic_results:

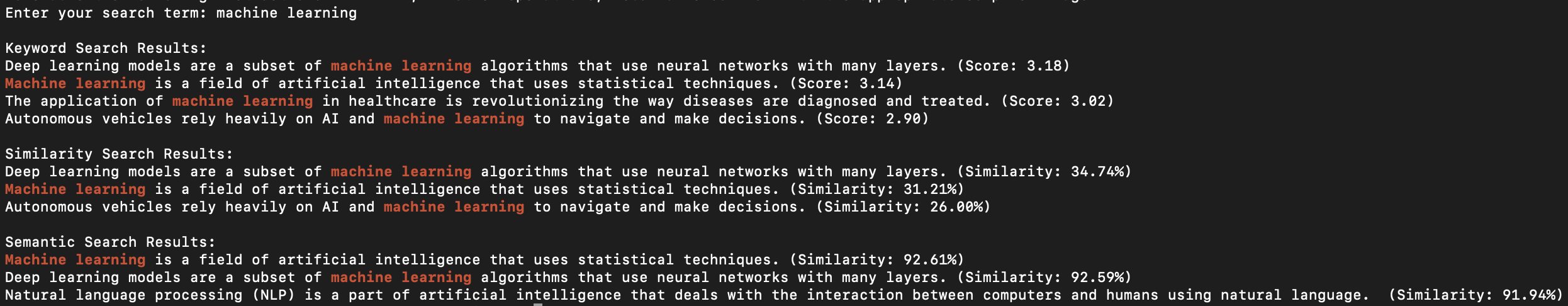

print(f"{result} (Similarity: {similarity * 100:.2f}%)")Let us now search our documents using the search term "Machine Learning" and the image of the search results below.

Highlights from the search results:

- The `highlight_term` function highlights the search term in each result.
- Only 3 results are returned for similarity search and semantic search because our code limits the results to 3 for both techniques.
- Keyword search scores documents with Whoosh's default ranking function, BM25F, a TF-IDF-style function that weighs how often the query terms appear in a document against how common they are across all documents, with an adjustment for document length.
- Similarity search uses TF-IDF vectorization and cosine similarity to measure how similar the documents are to the query in a vector space.
- Semantic search uses embeddings from a transformer model and cosine similarity to capture the semantic meaning and relevance of the documents to the query.
- Notice that semantic search also retrieves text about natural language processing, which is close in context to machine learning even though it does not contain the search term.

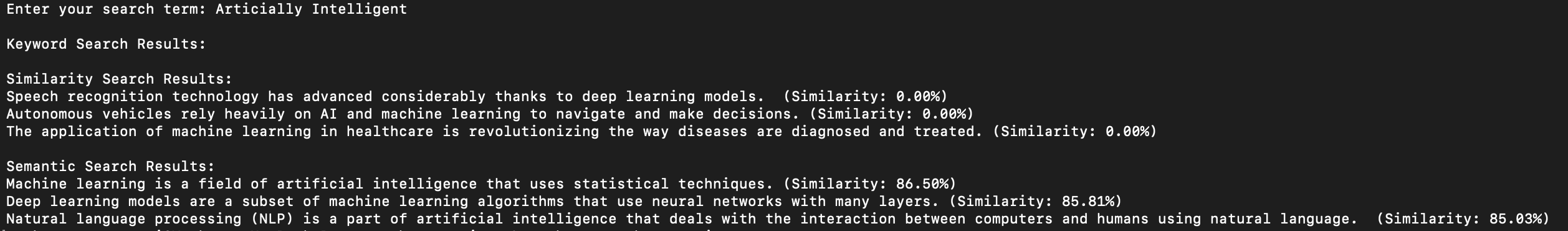

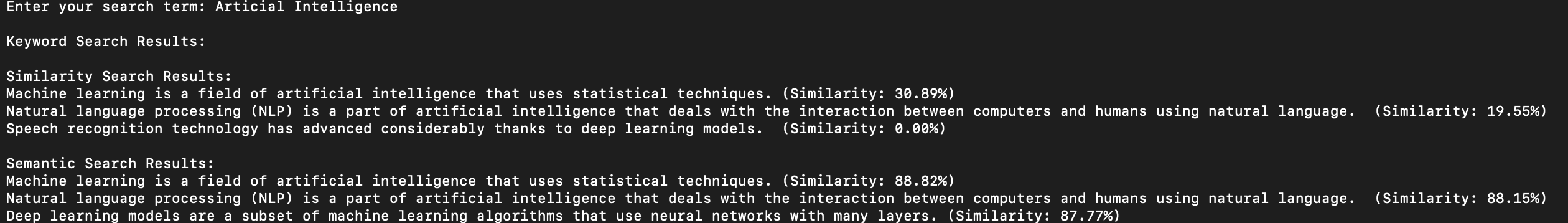

Now let us take a look at the search results using other search terms, "Artificially Intelligent" and "Artificial Intelligence," (Please notice the incorrect spelling of Artificial is on purpose), and discuss the findings

Highlights from the search results:

- The search on "Artificially Intelligent" returned no keyword search results because neither "artificially" nor "intelligent" appears as a term in any document.
- Similarity search scored every document at zero similarity: neither query word is in the vocabulary learned from the documents, so the query's TF-IDF vector is all zeros.
- Semantic search still found contextually relevant documents, showing its strength in matching concepts beyond exact words.
- The misspelled second search term rightly yielded no keyword search results, but similarity search produced a similarity score for one of the texts because the word "intelligence" matched. Semantic search, as usual, retrieved the contextually matching texts from the documents.

Conclusion

Now that you have a good understanding of how the various search techniques perform, let us look at the key takeaways:

- Choose the search method based on the requirements of the task: analyze the task thoroughly, then plug in the method that fits it best.
- Use keyword search for simple, straightforward term matching.
- Use similarity search when documents that share only some of the query's terms should still be found and ranked, while matching remains keyword-based.
- Use semantic search for tasks that require a deep understanding of content, such as dealing with varied terminology or capturing the underlying meaning of the query.
- Consider combining these methods to balance their strengths and improve overall performance.
- Each of these techniques can be optimized further; that is beyond the scope of this article.
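One common way to combine the methods is reciprocal rank fusion (RRF), which merges ranked lists rather than raw scores. That sidesteps the fact that Whoosh scores, TF-IDF cosine similarities, and embedding similarities sit on different scales. RRF is not used anywhere in this article's code; the sketch below is a minimal illustration using the frequently used constant k = 60, and the document-id rankings in it are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document ids into a single ranking.

    A document receives 1 / (k + rank) from each list it appears in,
    with rank starting at 1; a larger k flattens the gap between ranks.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical document-id rankings from the three search functions
    keyword = [0]
    similarity = [0, 2, 1]
    semantic = [1, 3, 0]
    print(reciprocal_rank_fusion([keyword, similarity, semantic]))
```

Document 0 wins because all three methods rank it, while document 3, found only by semantic search, still makes it into the fused list.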

References

The text of the sample documents searched in this article was taken from the following references:

Opinions expressed by DZone contributors are their own.
