The Imitation Game

In this article, we will delve into Alan Turing's famous game and explore some of the many critiques and rebuttals to this famous experiment.


Artificial Intelligence (AI) has become one of the most discussed software concepts in the past few years, and its influence has seeped into casual conversation. While AI is not a new concept, modern technology has finally progressed to the point where practical AI is possible. From the enormous computational power of modern graphics processors to the early phases of 5G, the utopian dream of AI playing a role in daily life is nearly a reality.

Even with this evolution in technology, AI remains rooted in long-standing laws and concepts developed in the 20th century. Foremost among these is the Imitation Game. In this article, we will delve into Alan Turing's famous game and explore some of the many critiques of and rebuttals to this experiment. We will also explore Turing's own refutations of these criticisms and the vision he had for AI.

The Game

In most cases, the classic question of AI is phrased as follows: Can a computer think? Although this question is deeper and more metaphysical than it first appears, it is also abstract and difficult to answer precisely. For example, what would a computer have to do to convince an observer that it is actually thinking, and is therefore intelligent?

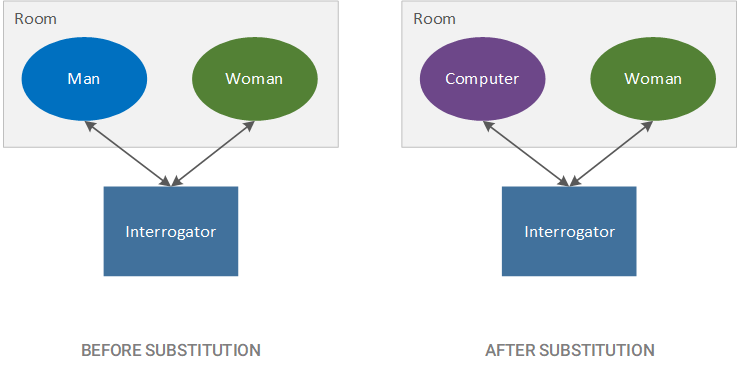

Alan Turing decided to rephrase the question and instead proposed a concrete criterion for deciding whether a computing machine could think. The Imitation Game (also called the Turing Test) is a contest of equivalence, in which an interrogator interacting with both a human and a machine is challenged to tell the two apart. Turing defined the game in Computing Machinery and Intelligence (1950) as follows:

The new form of the problem can be described in terms of a game which we call the imitation game. It is played with three people, a man (A), a woman (B), and an interrogator (C) who may be of either sex. The interrogator stays in a room apart from the other two. The object of the game for the interrogator is to determine which of the other two is the man and which is the woman. He knows them by labels X and Y, and at the end of the game he says either "X is A and Y is B" or "X is B and Y is A." The interrogator is allowed to put questions to A and B thus: Will X please tell me the length of his or her hair? Now suppose X is actually A, then A must answer. It is A's object in the game to try and cause C to make the wrong identification. His answer might therefore be

"My hair is shingled, and the longest strands are about nine inches long..."

We now ask the question, "What will happen when a machine takes the part of A in this game?" Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, "Can machines think?"

Turing establishes a scenario in which an interrogator is placed in a room apart from a man and a woman; the goal of the man is to dupe the interrogator into misidentifying which of them is the man and which is the woman. Turing then proposes replacing the man with a computer and running the game in exactly the same manner as before. He posits that if the computer can dupe the interrogator as often as the man could, then the computer, in this scenario, is an effective substitute for the man. Thus, from the perspective of intelligence, the computer can think just as well as the man.
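Turing's replacement question ("Will the interrogator decide wrongly as often...?") amounts to comparing two error rates: how often the interrogator misidentifies the players when a man plays A, and how often when a machine does. The Python sketch below illustrates that comparison under stated assumptions: the `Round` record, the `simulate` helper, the simulated interrogator's fixed probability of being fooled, and the `tolerance` margin are all hypothetical and do not come from Turing's paper.

```python
import random
from dataclasses import dataclass


@dataclass
class Round:
    """One play of the game (hypothetical record, not from Turing's paper)."""
    truth_a: str  # label ("X" or "Y") that player A is actually behind
    guess_a: str  # label the interrogator names as A at the end of the round


def error_rate(rounds: list[Round]) -> float:
    """Fraction of rounds in which the interrogator misidentified player A."""
    if not rounds:
        raise ValueError("need at least one round")
    wrong = sum(1 for r in rounds if r.guess_a != r.truth_a)
    return wrong / len(rounds)


def decides_wrongly_as_often(baseline: list[Round], machine: list[Round],
                             tolerance: float = 0.05) -> bool:
    """Turing's question read as a comparison of error rates.

    baseline: rounds where a man plays A; machine: rounds where a machine plays A.
    `tolerance` is an assumed, arbitrary margin, not part of Turing's proposal.
    """
    return error_rate(machine) >= error_rate(baseline) - tolerance


def simulate(p_fooled: float, n: int, rng: random.Random) -> list[Round]:
    """Hypothetical interrogator who is fooled with probability p_fooled per round."""
    rounds = []
    for _ in range(n):
        truth = rng.choice(["X", "Y"])
        other = "Y" if truth == "X" else "X"
        guess = other if rng.random() < p_fooled else truth
        rounds.append(Round(truth_a=truth, guess_a=guess))
    return rounds


if __name__ == "__main__":
    man_plays_a = simulate(p_fooled=0.30, n=1000, rng=random.Random(0))
    machine_plays_a = simulate(p_fooled=0.10, n=1000, rng=random.Random(1))
    print(f"baseline error rate: {error_rate(man_plays_a):.3f}")
    print(f"machine error rate:  {error_rate(machine_plays_a):.3f}")
    print("criterion met:", decides_wrongly_as_often(man_plays_a, machine_plays_a))
```

A fixed tolerance keeps the sketch short; with real transcripts, a statistical test on the two proportions would be a more defensible way to decide whether the rates genuinely differ.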

Turing also places an important stipulation on this game: all communication between the interrogator and the other players is written, ideally typewritten and sent via a teleprinter, or else relayed by an intermediary. This ensures that tone of voice does not give the players away, so the computer is not unfairly disadvantaged (i.e., we are trying to determine whether the computer can think as well as the man, not whether it can speak as well as him).

What is interesting about this game is that it does not necessarily measure the computer's ability to think, but rather its ability to imitate a human so effectively that another human cannot tell the two apart. In essence, the test determines whether a computer can replace a human without an observer detecting the substitution.

This approach was so groundbreaking that it remains one of the most popular yardsticks for the effectiveness of AI. Although the Imitation Game is profound in its conception, it is by no means perfect, and whether the Turing Test is actually an effective measure of a computer's intelligence is still fiercely debated.

The Criticisms

The main criticism of the Turing Test is that it does not actually measure a computer's ability to think, but rather its conversational ability, or at best its ability to replace a human rather than to think like one. This is a serious concern for proponents of the Turing Test, as there is a fine line between the ability to truly think and the ability to mimic (even if what is being mimicked is thinking).

Apart from this central concern, other criticisms have been raised against both AI in general and the Imitation Game in particular. Some of these were addressed directly by Turing; others emerged after his death in 1954.

Addressed by Turing

Turing was already aware of criticisms of his test, and of AI more generally, when he wrote the paper. In Computing Machinery and Intelligence, he addresses nine of the most common objections to the idea of a computing machine that could convincingly mimic human behavior:

- The Theological Objection: God has given souls only to humans (not to animals or machines), and thinking is a faculty of the soul; therefore, machines cannot think in the same sense as humans. Turing refutes this in two ways: (1) he argues that the objection restricts the omnipotence of God, who could presumably confer a soul on a machine if He saw fit, and (2) he notes that theological arguments have often proven unsatisfactory as science has progressed, citing the scriptural objections once raised against Copernican astronomy.

- The "Heads in the Sand" Objection: The consequences of a machine out-thinking a human are too dreadful to contemplate, so we should not even consider whether such a thinking machine could be built. Turing traces this objection to a belief in the superiority of Man (in Turing’s words, “We like to believe that Man is in some subtle way superior to the rest of creation”) and dismisses it as too insubstantial to require refutation.

- The Mathematical Objection: Mathematical results such as Gödel’s incompleteness theorems restrict what a machine can compute; because a machine relies solely on logical and mathematical rules to perform computation, it is wholly bound by these limitations. Turing counters that it has only been asserted, without proof, that the human intellect is free of similar limitations; humans also have limits and often give wrong answers, yet no one would claim that humans lack intellect.

- The Argument from Consciousness: As Professor Jefferson argued in 1949, a computer cannot truly think because it is incapable of consciousness: writing poetry out of a stirring of the spirit, feeling pleasure at success, or being warmed by flattery. Turing rejects this argument, noting that even with humans, one would have to enter the mind of another person to know for certain that he or she is conscious in the same sense we know ourselves to be. Applying this logic to a computer, we would have to enter the mind of the machine to definitively establish that it is not conscious in that sense; since no one demands such proof of other people, the argument cannot fairly be applied to machines alone.

- Arguments from Various Disabilities: Arguing by induction from the machines seen so far, one could claim that although a computer can do A, B, and C, it will never be able to do D, such as fall in love, be friendly, or have initiative. Turing posits that (1) there is no reason to believe that, given enough time, a computer that can do these things could not be built and (2) some of these characteristics are not even desirable in computers.

- Lady Lovelace's Objection: As Lady Lovelace wrote in 1842 when describing Babbage's Analytical Engine, "The Analytical Engine has no pretensions to originate anything." Turing refutes this by arguing that there is no evidence that a computer could not create new things. He even goes so far as to suggest that a computer could alter its own program to better solve a problem, which would amount to origination on the part of the machine.

- Argument from Continuity in the Nervous System: The nervous system is a continuous system, whereas computers (in the theoretical sense, and currently in the practical sense) are discrete-state machines. Turing admits that a discrete device cannot perfectly imitate a continuous system (it can only approximate one), but he restates that a computer need not perfectly mimic a human: it only needs to convince the interrogator that it is the human (i.e., the approximation only needs to be sufficient, not perfect).

- The Argument from Informality of Behaviour: It is impossible to write a set of rules describing what a human should do in every conceivable circumstance; since a machine is governed by such rules, humans cannot be machines. Turing replies that, although no complete laws of human behavior are known, we have no way of establishing that such laws do not exist: we can never say that we have searched enough and there are no such laws. This uncertainty is enough to undermine the claim that human behavior cannot be captured by rules.

- The Argument from Extrasensory Perception: Extrasensory perception (ESP), otherwise known as a sixth sense, could in theory allow humans to communicate in ways not possible between computers and humans. Turing concedes that he has no strong refutation of this argument and that, since ESP is poorly understood, its effect on the game is unpredictable. For example, ESP could affect the computer in much the same way as it affects another human, so no difference would emerge; or conversely, it could allow a kind of communication between humans that is impossible with machines, letting an interrogator capable of ESP instantly tell the machine from the human.
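The Argument from Continuity in the Nervous System turns on whether a discrete machine can approximate a continuous process closely enough. As a rough illustration (not an example from Turing's paper), the Python sketch below assumes a simple continuous system, exponential decay governed by dy/dt = -k·y, and steps through it with the forward Euler method; the function names are hypothetical. As the number of discrete steps grows, the error against the exact continuous solution shrinks, so beyond some resolution an observer reading a fixed number of decimal places could not tell the two apart.

```python
import math


def euler_decay(y0: float, k: float, t_end: float, steps: int) -> float:
    """Discrete approximation of y(t_end) for the continuous system dy/dt = -k*y.

    The machine only ever holds a finite sequence of states, advancing in
    fixed time steps (forward Euler method).
    """
    dt = t_end / steps
    y = y0
    for _ in range(steps):
        y += dt * (-k * y)  # one discrete state transition
    return y


def exact_decay(y0: float, k: float, t_end: float) -> float:
    """Closed-form solution of the continuous system: y0 * e^(-k * t_end)."""
    return y0 * math.exp(-k * t_end)


if __name__ == "__main__":
    exact = exact_decay(1.0, 1.0, 1.0)
    for steps in (10, 100, 1000, 10000):
        approx = euler_decay(1.0, 1.0, 1.0, steps)
        print(f"{steps:>6} steps: approx={approx:.6f}  error={abs(approx - exact):.2e}")
```

The point mirrors Turing's reply: the discrete model never becomes continuous, but its approximation can be made as close as the observer's resolution requires.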

While Turing is thorough in addressing the criticisms known at the time, some of his defenses rest on disputable presuppositions. Furthermore, after his death in 1954, the debate continued to intensify, bringing with it an entirely new set of critiques.

Presented After Turing

Criticisms of this long-standing experiment continue to surface in modern times. One of the most prominent challenges to the Turing Test is the Chinese Room, devised by John Searle in Minds, Brains, and Programs (1980). In this thought experiment, Searle describes the following scenario:

Suppose that I'm locked in a room and given a large batch of Chinese writing. Suppose furthermore (as is indeed the case) that I know no Chinese, either written or spoken, and that I'm not even confident that I could recognize Chinese writing as Chinese writing distinct from, say, Japanese writing or meaningless squiggles. To me, Chinese writing is just so many meaningless squiggles.

Now suppose further that after this first batch of Chinese writing I am given a second batch of Chinese script together with a set of rules for correlating the second batch with the first batch. The rules are in English, and I understand these rules as well as any other native speaker of English. They enable me to correlate one set of formal symbols with another set of formal symbols, and all that 'formal' means here is that I can identify the symbols entirely by their shapes. Now suppose also that I am given a third batch of Chinese symbols together with some instructions, again in English, that enable me to correlate elements of this third batch with the first two batches, and these rules instruct me how to give back certain Chinese symbols with certain sorts of shapes in response to certain sorts of shapes given me in the third batch. Unknown to me, the people who are giving me all of these symbols call the first batch "a script," they call the second batch a "story," and they call the third batch "questions." Furthermore, they call the symbols I give them back in response to the third batch "answers to the questions," and the set of rules in English that they gave me, they call "the program."

Now just to complicate the story a little, imagine that these people also give me stories in English, which I understand, and they then ask me questions in English about these stories, and I give them back answers in English. Suppose also that after a while I get so good at following the instructions for manipulating the Chinese symbols and the programmers get so good at writing the programs that from the external point of view—that is, from the point of view of somebody outside the room in which I am locked—my answers to the questions are absolutely indistinguishable from those of native Chinese speakers. Nobody just looking at my answers can tell that I don't speak a word of Chinese.

Let us also suppose that my answers to the English questions are, as they no doubt would be, indistinguishable from those of other native English speakers, for the simple reason that I am a native English speaker. From the external point of view — from the point of view of someone reading my "answers" — the answers to the Chinese questions and the English questions are equally good. But in the Chinese case, unlike the English case, I produce the answers by manipulating uninterpreted formal symbols. As far as the Chinese is concerned, I simply behave like a computer; I perform computational operations on formally specified elements. For the purposes of the Chinese, I am simply an instantiation of the computer program.

In essence, Searle claims that a computer passing the Turing Test is not thinking at all; it is blindly running through a series of instructions. According to Searle, this is far from cognition: had a human carried out the same steps, as in his Chinese Room, that human would not understand a word of Chinese, even though his answers are indistinguishable from a native speaker's. The human could, in effect, have switched off his understanding and simply walked through the motions of the program, producing the expected results in much the same way as the computer.
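Searle's point can be made concrete with a deliberately tiny program. The Python sketch below assumes a hypothetical rule book that pairs Chinese questions with Chinese answers; Searle's rules correlate batches of script, story, and questions and would be vastly larger, so this lookup table is only a stand-in. The function never interprets a symbol: it compares the incoming string with stored strings for exact equality, i.e., by shape alone, which is the kind of formal symbol manipulation Searle describes.

```python
# Hypothetical rule book: input shapes -> output shapes.
# The English glosses in comments are for the reader only; the program never uses them.
RULE_BOOK: dict[str, str] = {
    "你好吗？": "我很好，谢谢。",    # "How are you?" -> "I'm fine, thanks."
    "你叫什么名字？": "我叫小明。",  # "What's your name?" -> "My name is Xiaoming."
}

FALLBACK = "请再说一遍。"  # "Please say that again."


def chinese_room(question: str, rule_book: dict[str, str] = RULE_BOOK) -> str:
    """Return the answer the rule book pairs with `question`.

    Matching is purely formal: the lookup succeeds only when the incoming
    string is exactly equal to a stored one. No meaning is ever consulted.
    """
    return rule_book.get(question, FALLBACK)


if __name__ == "__main__":
    for q in ("你好吗？", "你叫什么名字？", "今天天气怎么样？"):
        print(q, "->", chinese_room(q))
```

Whoever executes `chinese_room`, person or processor, returns fitting answers without any grasp of what they mean.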

Searle distinguishes between two types of AI:

- Weak AI: The ability of a computer to simulate thinking

- Strong AI: "The appropriately programmed computer with the right inputs and outputs would thereby have a mind in exactly the same sense human beings have minds."

While the Chinese Room is thought-provoking and highlights an important distinction between actually thinking and simulating thought, it is just as hotly contested as the Imitation Game.

Conclusion

AI is one of the most prominent topics in modern software, and for good reason. Although some place too much hope (and too much dread) in AI, it promises to bring about great advances, including cures for diseases, the automation of mundane tasks, and solutions to previously intractable problems. Even amid this modern enthusiasm, Alan Turing's nearly 70-year-old Imitation Game remains at the forefront of AI, and its implications are likely to grow as AI matures in the coming years.
