Develop XR With Oracle, Ep 3: Computer Vision AI, ML, and Metaverse

In this third article of the series, we focus on XR applications of computer vision AI and ML and their related use in the metaverse.

This is the third piece in a series on developing XR applications and experiences using Oracle. It focuses on XR applications of computer vision AI and ML and their related use in the metaverse. Find the links to the first two articles below:

- Develop XR With Oracle Cloud, Database on HoloLens, Ep 1: Spatial, AI/ML, Kubernetes, and OpenTelemetry

- Develop XR With Oracle Cloud, Database on HoloLens, Ep 2: Property Graphs, Data Visualization, and Metaverse

As with the previous posts, I will again specifically show applications developed with Oracle database and cloud technologies, HoloLens 2, Mixed Reality Toolkit, and the Unity platform.

Throughout the blog, I will reference this corresponding demo video below.

Extended Reality (XR), Metaverse, and HoloLens

For an overview of XR and HoloLens, I refer the reader to the first article in this series (linked above). That post was based on a data-driven microservices workshop and demonstrated a number of aspects that will be present in the metaverse, such as online shopping through interaction with 3D models of food and products and 3D/spatial real-world maps, as well as backend DevOps (Kubernetes and OpenTelemetry tracing).

The second article of the series was based on a number of graph workshops and demonstrated visualization, creation, and manipulation of models, notebooks, layouts, and highlights for property graph analysis used in social graphs, neural networks, and the financial sector (e.g., money laundering detection).

In both of these articles, as well as in this one, the subject matter can be shared and actively collaborated on remotely, even in real time. These types of abilities are key to the metaverse concept and will be expanded upon and extended to concepts such as digital doubles in future pieces.

This blog will not go into computer vision AI in-depth, but will instead focus on the XR-enablement of it, the Oracle database, and the cloud.

Capabilities and Possibilities of Computer Vision With XR

Computer vision AI provides a number of capabilities including image classification, object detection, text detection, and document AI.

I predominantly use the HoloLens to demonstrate concepts in this series, as it is the technology closest to what will be the most common and everyday usage of XR in the future. However, the concepts I show in these blogs can be applied to one extent or another in different flavors of XR and devices (and indeed I will be giving examples of such in future blogs).

One thing that most, if not all, of these devices have in common is a visual interface (i.e., computer and camera) between the user and the real world. Inherently, this interface can capture and process the visual stimuli surrounding the user. Therefore, the link between XR and computer vision AI is a logical and synergistic one.

This is also true of AI audio and speech, which I will also be demonstrating in a future piece.

Image Classification and Object Detection

Imagine the potential to help those with vision impairment, Alzheimer’s, ... by having the XR device give contextual audio and visual feedback about one's surroundings.

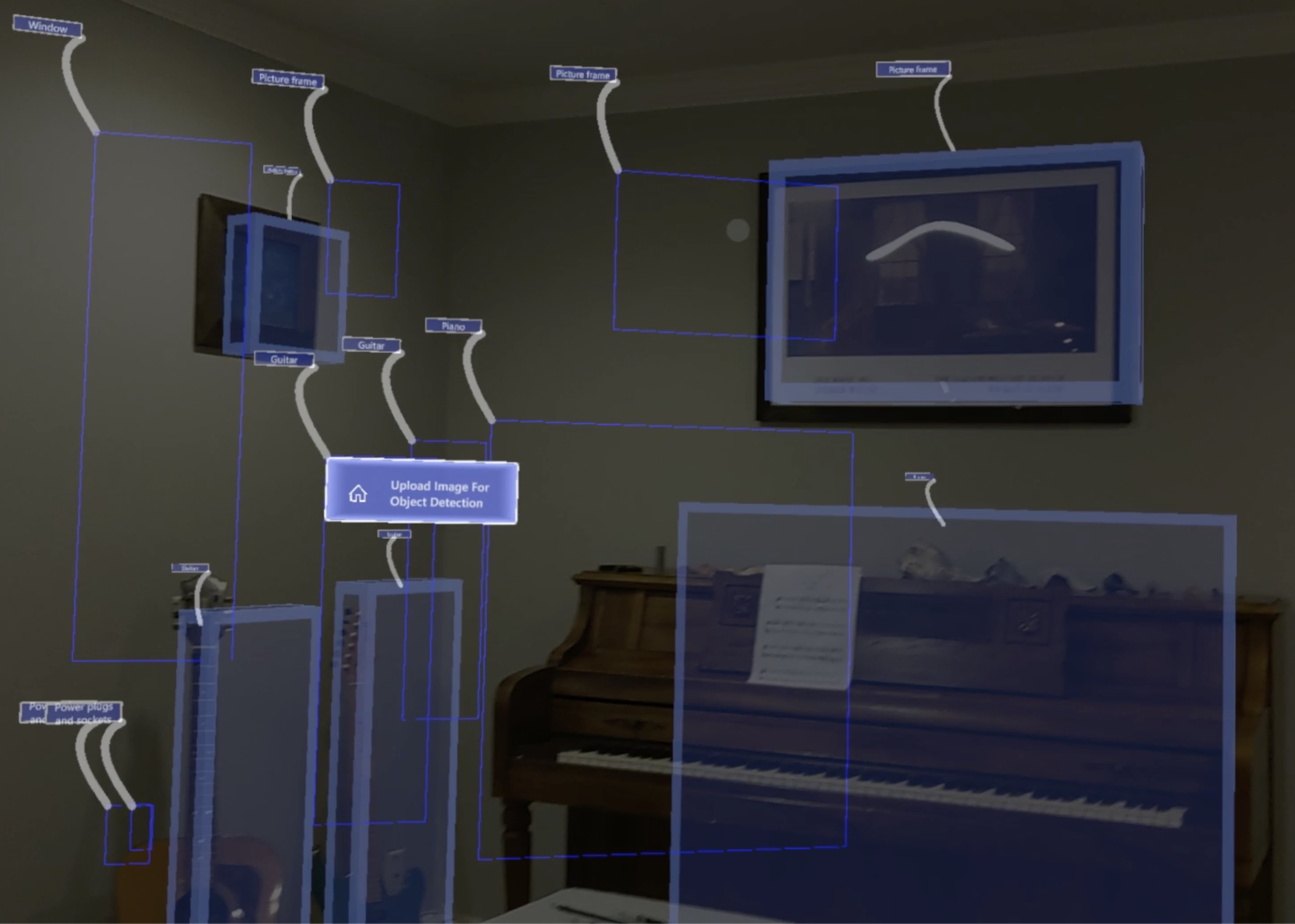

The first part of the video shows object detection applied to XR. These are the steps involved:

- A picture of the user's current view is taken by the HoloLens (I use an explicit button for this, but it could of course be done automatically, periodically, in reaction to a voice command, etc.).

- This image is automatically uploaded to the Oracle object store and database for further analysis. This is in itself a handy feature: data from the user's surroundings is stored without the user needing to explicitly request it, or even be aware of the contextual and other information being gathered.

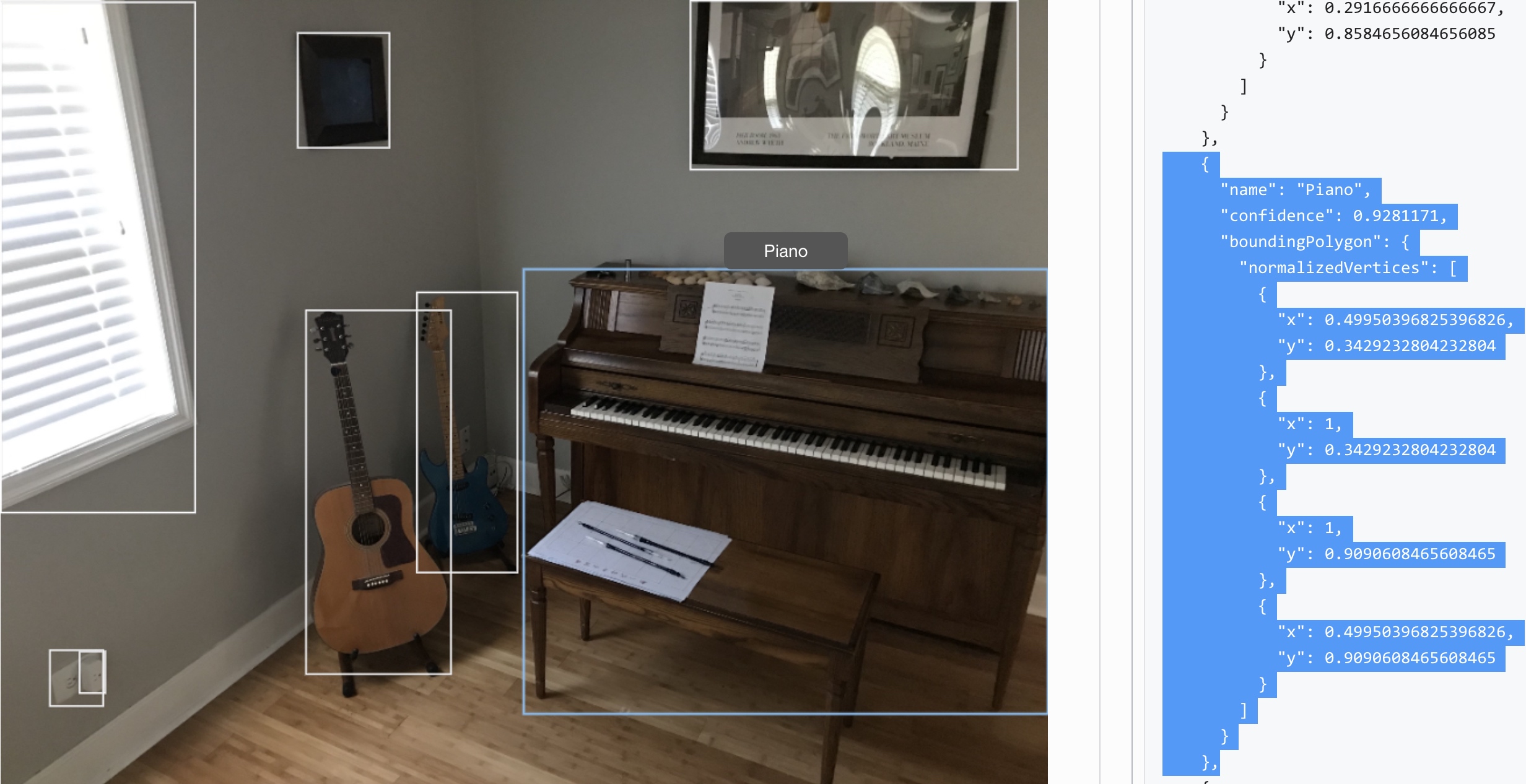

- The image is then processed by the Vision AI service. A JSON response containing the name, confidence, boundingPolygon, normalizedVertices, categorization, etc. is returned to the HoloLens. This is what the image processing and JSON response sent to the HoloLens look like in the Oracle cloud console:

![What the image processing and JSON response sent to the HoloLens look like in the Oracle cloud console]()

- The HoloLens app then processes this JSON, using the vertices/coordinates to recreate the polygons/rectangles and labels.

- The location of the user (i.e., the HoloLens headset camera) was saved when the initial picture was taken. A raycast is made from that point through the coordinates of the 2D rectangles and onto the 3D spatial surface mesh of the room. (Note: The 2D representation is only shown in the demonstration to illustrate the routine described. In an actual app, it is likely that only the end result of the spatially mapped cubes would exist.)

- 3D cubes are then created at the intersection points of these raycasts on the surface mesh.

- In addition, once created, the labels are fed to a text-to-speech program that speaks the object's name. This audio is also 3D spatially mapped.

- This provides an extremely efficient and fast technique, as a single 2D image is used to map the entire view visually and audibly in 3D. This mapping persists in the exact same locations beyond restarts of the HoloLens/app. (The accuracy and so forth could of course be enhanced further with multiple takes/pics, and captured automatically without the user needing to push a button, etc.)
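
The core geometry of the steps above can be sketched in a few lines. This is a minimal illustration in Python, not the code of the demo app (which runs in Unity on the HoloLens). It assumes a response with an `imageObjects` list whose entries carry `name`, `confidence`, and `boundingPolygon.normalizedVertices`, a simple pinhole camera looking along +z from the saved camera position, and a flat wall standing in for the room's spatial surface mesh. The function names and the field-of-view parameter are hypothetical.

```python
import json
import math

def parse_detections(response_json, image_width, image_height):
    """Return (name, confidence, (cx, cy)) tuples, with box centres in pixels."""
    detections = []
    for obj in json.loads(response_json).get("imageObjects", []):
        vertices = obj["boundingPolygon"]["normalizedVertices"]
        # Normalized vertices are in the 0..1 range; scale them to pixels.
        xs = [v["x"] * image_width for v in vertices]
        ys = [v["y"] * image_height for v in vertices]
        centre = (sum(xs) / len(xs), sum(ys) / len(ys))
        detections.append((obj["name"], obj["confidence"], centre))
    return detections

def pixel_to_ray(pixel, image_width, image_height, horizontal_fov_deg):
    """Unit direction through a pixel in camera space (x right, y up, z forward)."""
    # Assumed pinhole model: focal length in pixels from the horizontal FOV.
    focal = (image_width / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)
    x = pixel[0] - image_width / 2
    y = image_height / 2 - pixel[1]  # image y grows downward
    length = math.sqrt(x * x + y * y + focal * focal)
    return (x / length, y / length, focal / length)

def intersect_plane(origin, direction, plane_point, plane_normal):
    """Point where the ray hits the plane, or None if parallel or behind the origin."""
    denom = sum(d * n for d, n in zip(direction, plane_normal))
    if abs(denom) < 1e-9:
        return None
    offset = [p - o for p, o in zip(plane_point, origin)]
    t = sum(c * n for c, n in zip(offset, plane_normal)) / denom
    if t < 0:
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))
```

Each detection's centre pixel would be turned into a ray with `pixel_to_ray`, and the point returned by `intersect_plane` is where a labeled cube would be placed. In the real app, the plane test is replaced by a raycast against the spatial mesh, and the ray is first transformed by the saved camera pose.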

Imagine the potential to assist with vision impairment, Alzheimer’s, identification of unknown and difficult to isolate items, analysis of threats, interests, etc., by having the XR device give contextual audio and visual feedback about one's surroundings!

This information/representation can in turn be shared in the metaverse across any number of different XR devices (including basic phones and simple computer monitors) to facilitate digital doubles, collaboration, etc. in a very efficient and lightweight manner that simultaneously takes advantage of the powerful capabilities of the Oracle database and cloud.
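
To give a sense of how lightweight such a shared representation can be, here is a hypothetical sketch in Python. It assumes each detected object is reduced to a name, a confidence, and a 3D position in a room coordinate frame shared by all devices. The `SpatialLabel` type and both helper functions are illustrative names, not part of the demo app or of any Oracle API.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class SpatialLabel:
    name: str
    confidence: float
    position: tuple  # (x, y, z) in metres, in an assumed shared room frame

def encode_labels(labels):
    """Serialize labels to compact JSON that any device can store or render."""
    return json.dumps([asdict(label) for label in labels], separators=(",", ":"))

def decode_labels(payload):
    """Rebuild SpatialLabel objects from the JSON produced by encode_labels."""
    return [
        SpatialLabel(d["name"], d["confidence"], tuple(d["position"]))
        for d in json.loads(payload)
    ]
```

A phone or desktop client could decode the same payload and draw the labels on a 2D map, while a headset places them in 3D, so only a few dozen bytes per object need to be exchanged or stored.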

Document AI

Imagine using XR and AI to enhance social interactions and engage in more meaningful conversations IN REAL LIFE.

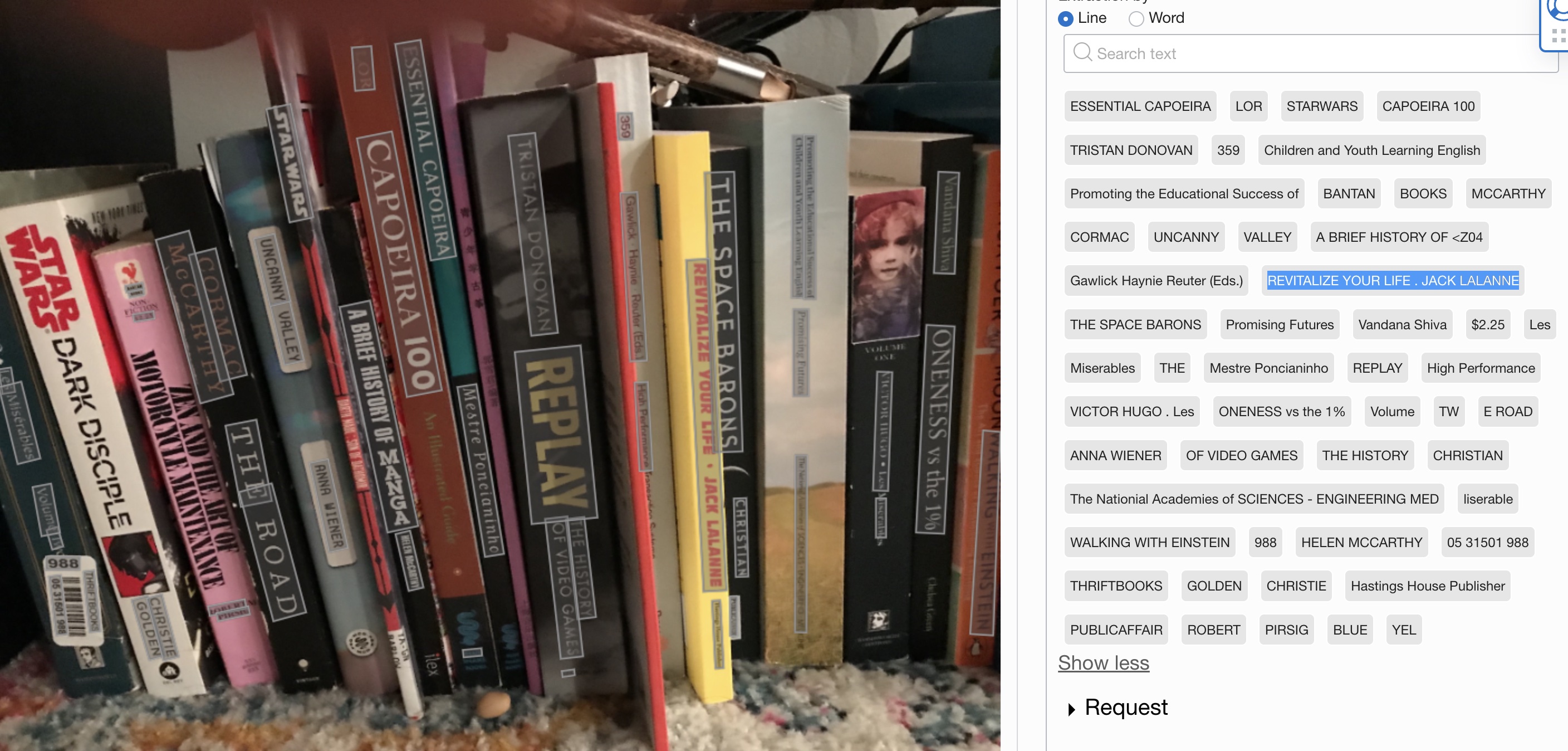

The second part of the video demonstrates the document AI service. It uses the same HoloLens camera capture technique as the first part, except this time text in the picture (at varying orientation, distance, etc.) is identified. As in the object detection example, this can be used to help the user read, for example. The text can also be fed into the Oracle database's powerful ML capabilities to run processing against any number of models, notebooks, etc. In this case, I scan books. This is a shot of that picture with the text processed in the OCI console.

We could, as I did in the first blog, use this to suggest other related books; or, as I did in the second blog, do some graph analysis to find correlations and commonalities. In this particular example, however, I have fed the text to a number of GPT-3 conversation models, which then feed back a conversational response. This response, or again any information from various models, can be given to the user to strike up a conversation with the owner of the books, for example.
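
The step from recognized text to a conversation prompt can be sketched as follows. This is an illustrative Python example under stated assumptions: the text detection response is assumed to contain an `imageText.lines` list whose entries have `text` and `confidence` fields, the confidence threshold and prompt wording are made up, and the call to the conversation model itself is left out.

```python
import json

def extract_lines(response_json, min_confidence=0.8):
    """Return detected text lines whose confidence meets the (assumed) threshold."""
    lines = json.loads(response_json).get("imageText", {}).get("lines", [])
    return [
        line["text"]
        for line in lines
        if line.get("confidence", 0.0) >= min_confidence
    ]

def build_prompt(titles):
    """Build a plain-text prompt for a conversation model from book titles."""
    listing = "\n".join(f"- {title}" for title in titles)
    return (
        "The person I am talking to owns these books:\n"
        + listing
        + "\nSuggest a friendly conversation opener about them."
    )
```

Filtering on confidence keeps poorly recognized spines out of the prompt. The resulting string would then be sent to whichever conversation model is in use, and the reply shown or spoken to the user.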

This of course is not limited to books or conversations. The possibilities really are endless as far as the use of this combination of XR and providing the user with information and analysis about the environment they are in (something Oracle tech enables).

I can also imagine the user advertising or "wearing" information about themselves the same way they wear clothes, etc., but in a potentially more complex, conveying fashion (meaning "fashion" in both senses of the word and meaning "senses" in both definitions of that word). The metaverse is full of talk of companies finding new ways to advertise and interact in a virtual world. Users should be at least as empowered to express themselves and to do so in the real world.

Additional Thoughts

I have given some ideas and examples of how computer vision AI and XR can be used together. I look forward to putting out more blogs on this topic and other areas of XR with Oracle Cloud and Database soon.

Please see the articles I publish for more information on XR and Oracle cloud and converged database as well as various topics around microservices, observability, transaction processing, etc. Also, please feel free to contact me with any questions or suggestions for new blogs and videos as I am very open to suggestions. Thanks for reading and watching.
