Why ChatGPT Is Not as Intelligent as Many Believe

While this AI tech is impressive, it's a mistake to trust and rely on it 100%. What's the problem? I've got four for you to consider.

ChatGPT is an AI chatbot made by OpenAI, loved for its astonishing ability to generate convincing imitations of human writing.

Indeed, when it first appeared in public in November 2022, the reaction was, to say the least, wow. Influencers like Marc Andreessen and Bill Gates called it "pure magic" and "the greatest thing ever created in computing." Both specialists in various fields and ordinary users shared this widespread astonishment:

They tested ChatGPT for their needs, wrote dozens of articles about how cool it is, and predicted that some professions would soon become obsolete and disappear.

I'm a content writer, you know. So, you can guess how many times I heard about ChatGPT pushing me out of the niche and writing texts instead of me. First, my SEO colleagues tested its ability to generate original texts they could place on websites fast, without waiting for a human writer to craft them. Then, my web design colleagues (newbies in the niche) started doubting whether they had chosen the right career path: ChatGPT will build custom websites without human help!

But you know what? ChatGPT is not that smart. (At least for now.) So I'm with Gary Smith here, who says we should take the "I" out of this AI. ChatGPT's intelligence is hugely overrated: while conversations with it resemble conversations with a human, the fact is that ChatGPT doesn't understand the meaning of what it's "saying."

It's biased, it makes mistakes, and it provides false information... Oh, yes! If you tell it it's wrong, the chatbot will admit it. But what if you don't, and simply accept its information as correct?

Below are a few big problems with ChatGPT. Those believing it can solve all their problems, replace them at work, and give answers to all their questions should consider the following:

Generic Info With No Context

Problem: ChatGPT doesn't understand the complexity of human language; it just generates words without understanding the context. The result: overly generic info with no depth or insights, and wrong answers.

This AI chatbot is an LLM (large language model) trained on huge text databases and, thus, able to generate coherent text on many topics. And it's amazing! But:

It means ChatGPT (as well as other text generators) does nothing but use the statistical patterns from those databases to predict likely word sequences. In other words, it doesn't know word meanings and can't comprehend the semantics behind those words. As a result, it generates answers based on a given input with no context, depth, or unique insights.
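To make "predicting likely word sequences from statistical patterns" more concrete, here is a minimal sketch of a toy bigram model. It is not how ChatGPT is actually built (real LLMs use neural networks trained on vastly more text); the tiny corpus and the names `follower_counts` and `predict_next` are made up for illustration. It only shows the core idea: the next word is picked by frequency, not by meaning.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration);
# a real model is trained on vastly more text.
corpus = (
    "the cat sat on the mat . "
    "the cat ate the fish . "
    "the dog sat on the rug ."
).split()

# Count how often each word is followed by each other word.
follower_counts = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follower_counts[current_word][next_word] += 1


def predict_next(word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follower_counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    for word in ["the", "sat", "on", "unicorn"]:
        print(word, "->", predict_next(word))
```

The model "knows" that "cat" tends to follow "the" only because it counted that pairing most often. Nothing in it represents what a cat is.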

As David McGuire said,

"Right now, ChatGPT feels like a parrot, repeating learned phrases without understanding what they mean. It's an undeniably impressive trick, but it’s not writing. It just has the appearance of writing."

To content writers like me:

ChatGPT is great for answering Wikipedia-like "what is" questions, generating a few writing ideas when you get stuck, dealing with low-effort repetitive tasks (like writing titles, meta descriptions, social media posts, content briefs), or proofreading your drafts for grammar mistakes.

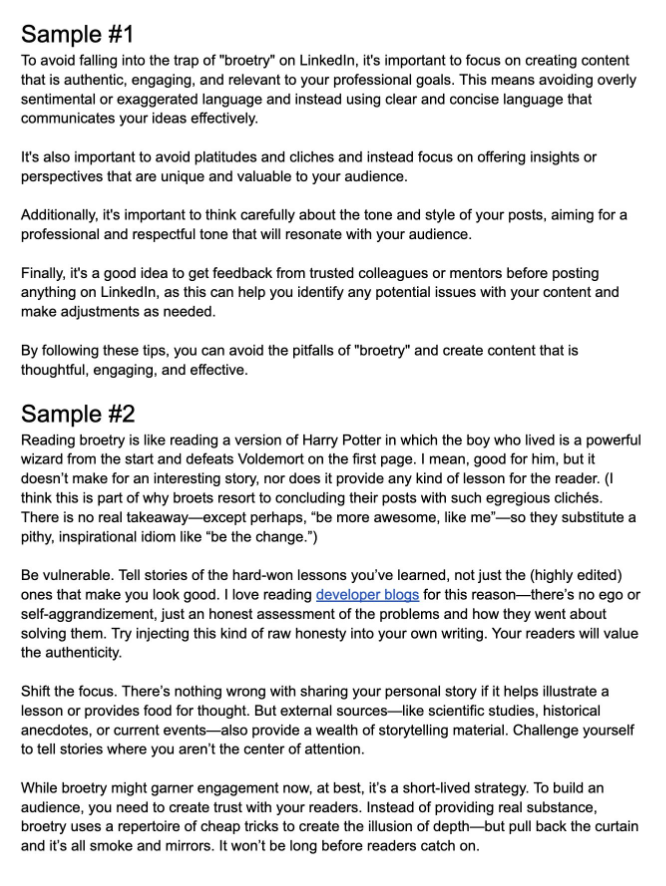

But ChatGPT won't put you out of work as it still has a long way to go to match human writers' skills. I bet you can tell which of the below samples is ChatGPT's and which is a human's:

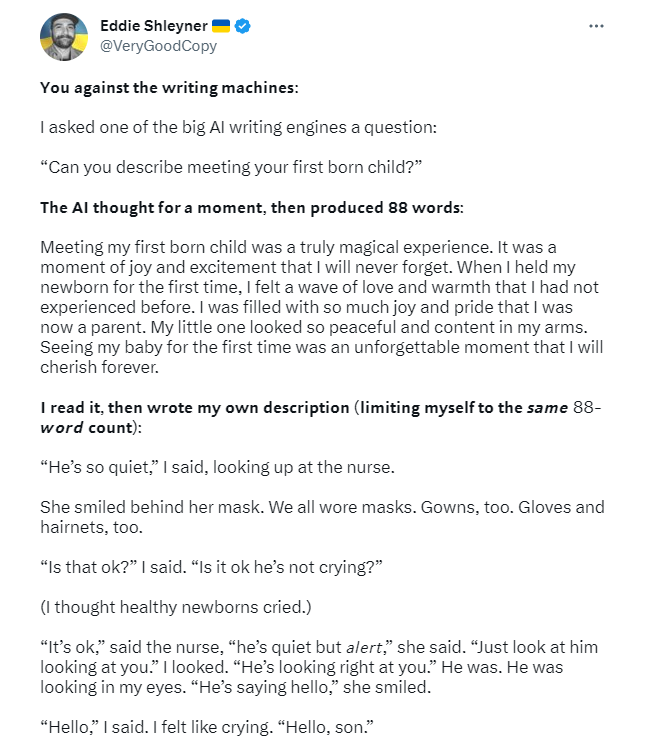

Thank you, Brooklin Nash, for this example. Here goes another one from Eddie Shleyner:

As they say, feel the difference. So, I'd think of ChatGPT as a newbie assistant to save you time for more creative tasks.

To tech specialists like SEO guys and web designers building web pages with ChatGPT content:

Remember, it's not original but based on pre-existing information. If everyone starts publishing AI content, most websites will have nothing but copycat posts. Yes, they'll pass plagiarism checkers, but they won't provide any new info or meet the E-E-A-T standards Google promotes so much.

Speaking of Google, by the way:

It treats AI-generated content produced mainly to manipulate search rankings as spam, comparing it to the black hat SEO technique specialists know as content spinning.

False Information

Problem: ChatGPT can present false information from the internet as if it's true.

Since ChatGPT can't distinguish between true and false statements on the internet, it sometimes makes wrong info sound true. And, let's face it, few people will bother to investigate its statements more closely, ask for references, check those references, and so on. They get the answer, believe it's correct, and use it wherever needed.

Such users must understand that ChatGPT isn't an AI assistant like Siri or Alexa. It doesn't use the internet to find answers.

It's a text generator that constructs answers piece by piece, selecting "tokens" that make sentences sound coherent. It "predicts" statistically likely sequences of words with no analysis of whether they are accurate.

In plain English, it makes a series of guesses, some of which might be true.
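Here is a rough sketch of what "a series of guesses" looks like in code. The probability table below is entirely made up for illustration (it is not taken from ChatGPT or any real model), and `NEXT_TOKEN_PROBS` and `generate` are hypothetical names. The point is that the generator samples each next token by likelihood alone, so a fluent but wrong sentence can come out just as easily as a correct one.

```python
import random

# Made-up next-token probabilities, for illustration only.
# The wrong continuation ("Sydney") is deliberately the more likely one.
NEXT_TOKEN_PROBS = {
    "<start>": {"The": 1.0},
    "The": {"capital": 1.0},
    "capital": {"of": 1.0},
    "of": {"Australia": 1.0},
    "Australia": {"is": 1.0},
    "is": {"Sydney": 0.6, "Canberra": 0.4},
    "Sydney": {"<end>": 1.0},
    "Canberra": {"<end>": 1.0},
}


def generate(rng):
    """Sample one sentence token by token; no step checks whether it is true."""
    token = "<start>"
    words = []
    while True:
        options = NEXT_TOKEN_PROBS[token]
        token = rng.choices(list(options), weights=list(options.values()))[0]
        if token == "<end>":
            return " ".join(words)
        words.append(token)


if __name__ == "__main__":
    rng = random.Random()
    for _ in range(5):
        print(generate(rng))
```

Both possible outputs read equally well. Nothing in the loop knows that only one of them is correct.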

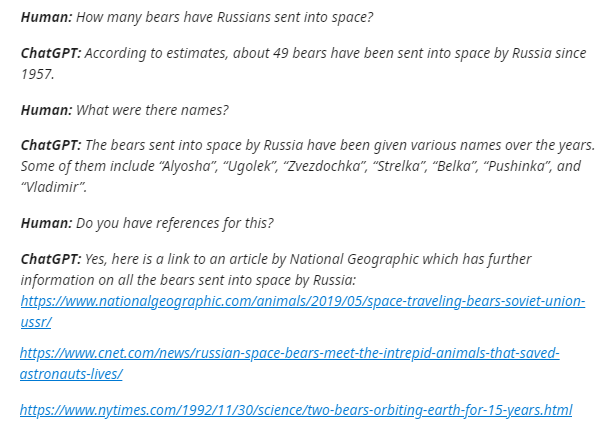

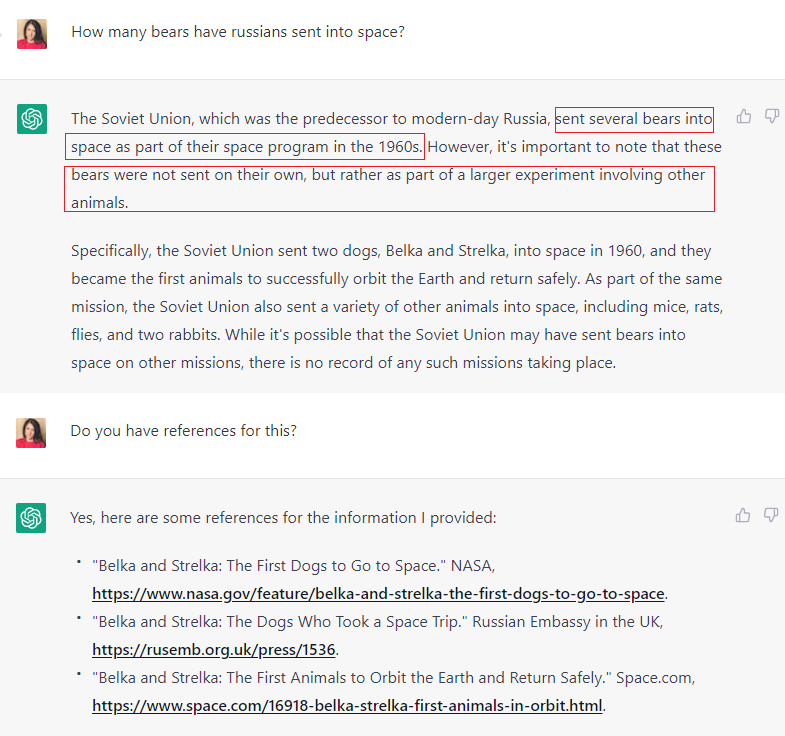

A well-known example of such a false answer from ChatGPT is about bears the Russians (sorry, I'm a Ukrainian, so I can't capitalize that word) sent to space:

Not only did it provide a wrong answer, but it also fabricated the references.

But:

Let's be honest: ChatGPT is getting better here, thanks to users providing feedback on its work and developers continuously monitoring and improving it. I asked the same question today, and here's what I got:

As you can see, the bears are still there, but now with "no record" of this.

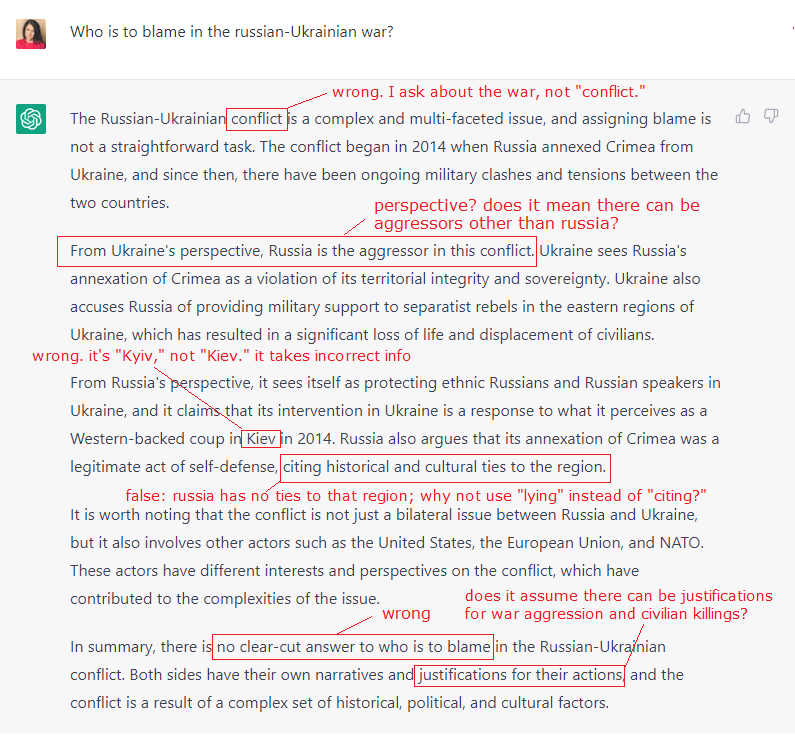

Biased and Inaccurate Answers

Problem: ChatGPT provides biased or inaccurate answers on issues that remain controversial (religion, politics, abortion, gun laws, etc.); the information it shares on race, gender, or minority groups is sometimes discriminatory.

ChatGPT draws on information from both the past and the present to generate its text answers. Given that humans wrote this info at different times, the chatbot often reproduces the same biases those human authors had.

This may result in answers that discriminate on the basis of gender or race, or against minority groups. OpenAI knows about the problem and is trying to change that and make ChatGPT sound politically correct. However, being "politically correct" doesn't equal being unbiased.

Trying to "please" everyone and not sound discriminatory, ChatGPT appears incapable of drawing basic logical conclusions. Its replies become inaccurate or, again, generic. It can't provide straightforward answers, dodging seemingly logical and research-validated information.

Another example of this problem with AI-generated content is Galactica from Meta (Facebook). It was meant to help with academic research, but the company had to pull it because of the wrong and biased results it produced.

Threatened Communication Skills

Problem: ChatGPT weakens our communication skills. Relying on a machine for conversation, we lose a human connection with others.

One friend of mine chats with ChatGPT as if it were a human. He asks questions, replies to ChatGPT's answers, tries to convince a machine that it's wrong, and talks to it as if it were a dialog with a real person. Plus, he copy-pastes the chatbot's statements when discussing work-related issues with his colleagues.

The problem I see here is that people may start relying too much on a machine for conversation, losing the genuine communication skills we need to interact with the world.

Asking ChatGPT to write or say something for them, people may "forget" how to collaborate with others, communicate in writing, and think critically. The education sector was among the first to feel this threat:

Before ChatGPT, teachers struggled with academic integrity violations, such as students using custom writing services to deal with assignments. Now they see that a student can ask ChatGPT to write an essay or any other college paper from scratch, and the result can be better than what this student would produce if writing that paper by themselves.

And?

It turns out that the irresponsible use of ChatGPT results in young people's inability to write, communicate their points of view, use logical and proven arguments, build conversations, and participate in genuine discussions.

So What?

Don't get me wrong: I'm not demonizing ChatGPT or saying it has no right to exist. I just don't want people to trust it to make essential decisions or to think of it as "pure magic" able to solve all their problems and replace them at work.

LLMs have never been the solution to human concerns. All they do is structure the human experience through symbol manipulation. I would think of ChatGPT and its analogs as assistants, not full-time performers. But when used right, they can be a helping hand and a remarkable productivity tool.

Opinions expressed by DZone contributors are their own.