Kafka Detailed Design and Ecosystem

Learn about the design of the Kafka ecosystem: Kafka Core, Kafka Streams, Kafka Connect, Kafka REST Proxy, and the Schema Registry.


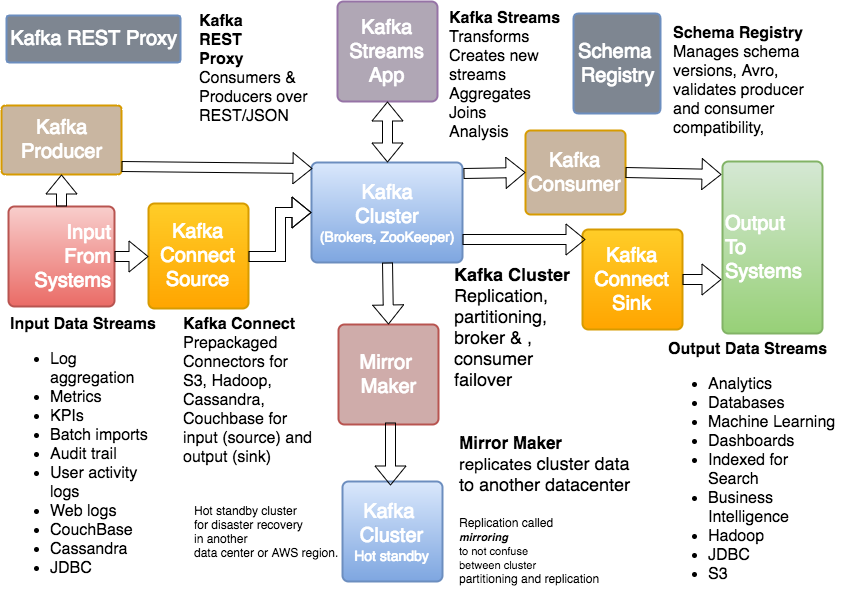

the kafka ecosystem - kafka core, kafka streams, kafka connect, kafka rest proxy, and the schema registry

the core of kafka is the brokers, topics, logs, partitions, and cluster. the core also includes related tools like mirrormaker. together, these make up kafka as it exists in apache.

the kafka ecosystem consists of kafka core, kafka streams, kafka connect, kafka rest proxy, and the schema registry. kafka streams and kafka connect are part of apache kafka, while the kafka rest proxy and the schema registry come from confluent and are not part of apache.

kafka streams is the streams api to transform, aggregate, and process records from a stream and produce derivative streams. kafka connect is the connector api to create reusable producers and consumers (e.g., a stream of changes from dynamodb). the kafka rest proxy lets you produce and consume records over rest (http). the schema registry manages avro schemas for kafka records. kafka mirrormaker replicates cluster data to another cluster.

jean-paul azar works at cloudurable. cloudurable provides kafka training, kafka consulting, kafka support and helps set up kafka clusters in aws.

kafka ecosystem: diagram of connect source, connect sink, and kafka streams

kafka connect sources are sources of records. kafka connect sinks are the destination for records.

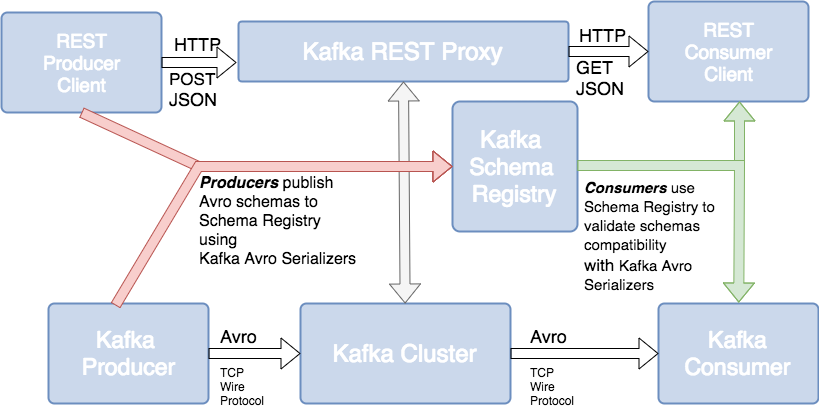

kafka ecosystem: kafka rest proxy and confluent schema registry

kafka streams - kafka streams for stream processing

the kafka streams api builds on core kafka primitives and has a life of its own. kafka streams enables real-time processing of streams through stream processors. a stream processor takes continual streams of records from input topics, performs some processing, transformation, or aggregation on the input, and produces one or more output streams.

for example, a video player application might take an input stream of events for videos watched and videos paused, and output a stream of user preferences. it could then generate new video recommendations based on recent user activity, or aggregate the activity of many users to see which new videos are hot. the kafka streams api solves hard problems such as handling out-of-order records, aggregating across multiple streams, joining data from multiple streams, allowing for stateful computations, and more.
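to make "stateful computation" concrete for the video example, here is a minimal in-memory sketch of what a stream processor does: it reads an input stream of watch events, keeps a running count per video as its state, and emits an output stream of updated counts. this is plain java, not the kafka streams api; the class, record, and method names are hypothetical, and in kafka streams the state would live in a fault-tolerant state store rather than a hashmap.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of a stateful stream processor; this is NOT the Kafka Streams API.
final class WatchCountProcessor {
    // Input record: a user watched or paused a video.
    record VideoEvent(String userId, String videoId, String action) {}

    // Output record: the updated watch count for one video.
    record VideoCount(String videoId, long count) {}

    // Processor state: running count of "watched" events per video id.
    private final Map<String, Long> counts = new HashMap<>();

    // Handles one input record and returns zero or one output records.
    List<VideoCount> process(VideoEvent event) {
        List<VideoCount> out = new ArrayList<>();
        if ("watched".equals(event.action())) {                           // filter
            long updated = counts.merge(event.videoId(), 1L, Long::sum);  // stateful aggregate
            out.add(new VideoCount(event.videoId(), updated));            // derivative record
        }
        return out;
    }

    // Feeds a whole input stream through one processor instance and collects the output stream.
    static List<VideoCount> run(List<VideoEvent> input) {
        WatchCountProcessor processor = new WatchCountProcessor();
        List<VideoCount> output = new ArrayList<>();
        for (VideoEvent event : input) {
            output.addAll(processor.process(event));
        }
        return output;
    }
}
```

paused events are filtered out, and every watched event produces a new, larger count for its video: a downstream "hot videos" view would keep only the latest count per video.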

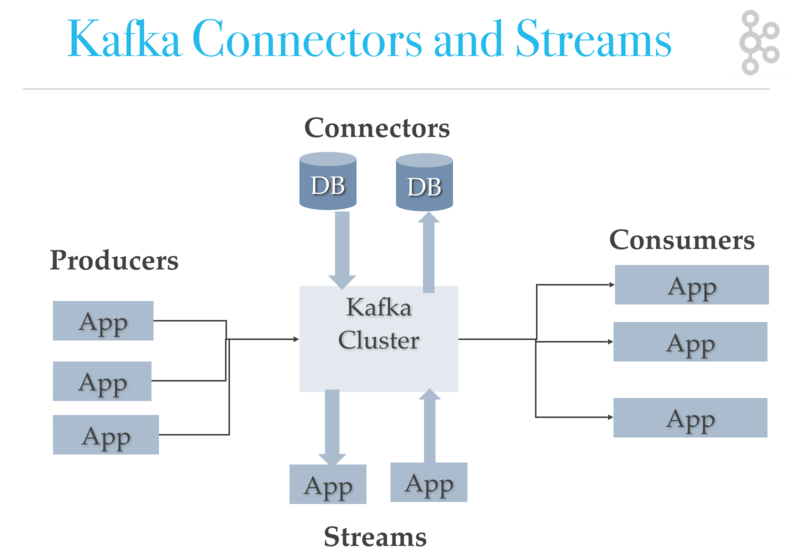

kafka ecosystem: kafka streams and kafka connect

kafka ecosystem review

what is kafka streams?

kafka streams enables real-time processing of streams. it can aggregate across multiple streams, join data from multiple streams, perform stateful computations, and more.

what is kafka connect?

kafka connect is the connector api to create reusable producers and consumers (e.g., a stream of changes from dynamodb). kafka connect sources are sources of records. kafka connect sinks are destinations for records.

what is the schema registry?

the schema registry manages schemas using avro for kafka records.

what is kafka mirror maker?

the kafka mirrormaker is used to replicate cluster data to another cluster.

when might you use kafka rest proxy?

the kafka rest proxy lets you produce and consume records over rest (http). you could use it for easy integration of existing code bases.

if you are not sure what kafka is, see "what is kafka?"

kafka architecture: low-level design

this post picks up from our series on kafka architecture, which includes kafka topics architecture, kafka producer architecture, kafka consumer architecture, and kafka ecosystem architecture.

this article is heavily inspired by the design section of the kafka documentation. you can think of it as the cliff notes.

kafka design motivation

linkedin engineering built kafka to support real-time analytics. kafka was designed to feed analytics systems that did real-time processing of streams. linkedin developed kafka as a unified platform for real-time handling of streaming data feeds. the goal behind kafka was to build a high-throughput streaming data platform that supports high-volume event streams like log aggregation, user activity, etc.

to scale to meet the demands of linkedin, kafka is distributed and supports sharding and load balancing. scaling needs inspired kafka’s partitioning and consumer model. kafka scales writes and reads with partitioned, distributed commit logs. kafka’s sharding is called partitioning (kinesis, which is similar to kafka, calls partitions "shards").

according to wikipedia, "a database shard is a horizontal partition of data in a database or search engine. each individual partition is referred to as a shard or database shard. each shard is held on a separate database server instance, to spread load."

kafka was designed to handle periodic large data loads from offline systems as well as traditional low-latency messaging use cases.

mom is message oriented middleware; think ibm mqseries, jms, activemq, and rabbitmq. like many moms, kafka is fault-tolerant to node failures through replication and leader election. however, the design of kafka is more like a distributed database transaction log than a traditional messaging system. unlike many moms, kafka’s replication was built into the low-level design and is not an afterthought.

persistence: embrace filesystem

kafka relies on the filesystem for storing and caching records.

hard drives are fast (really fast) at sequential writes. jbod is just a bunch of disk drives; sequential writes on a jbod configuration with a six-disk 7200rpm sata raid-5 array run at about 600mb/sec. like cassandra tables, kafka logs are append-only structures, meaning data gets appended to the end of the log. on hdds, sequential reads and writes are fast, predictable, and heavily optimized by operating systems, and in some cases sequential disk access can be faster than random memory access.

jvm gc overhead can be high, so kafka leans heavily on the os for caching, which is a big, fast, and rock-solid cache. modern operating systems use all available main memory for disk caching, and os file caches are almost free: they don’t carry the object and garbage-collection overhead of the jvm. implementing cache coherency is challenging to get right, but kafka relies on the rock-solid os for cache coherence. using the os for cache also reduces the number of buffer copies. since kafka disk usage tends to be sequential reads, the os read-ahead cache works very well.

cassandra, netty, and varnish use similar techniques. all of this is explained well in the kafka documentation, and there is a more entertaining explanation at the varnish site.

big fast hdds and long sequential access

kafka favors long sequential disk access for reads and writes. like cassandra, leveldb, rocksdb, and others, kafka uses a form of log-structured storage and compaction instead of an on-disk mutable btree. like cassandra, kafka uses tombstones instead of deleting records right away.
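a minimal sketch of log compaction with tombstones, assuming a log modeled as an in-memory list of key/value entries where a null value is a tombstone. compaction keeps only the latest entry for each key, in offset order, and offsets keep their original values. the tombstone itself is kept here so readers learn about the delete; kafka removes tombstones later (after delete.retention.ms). the class and record names are hypothetical, and this is not kafka's log cleaner.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of a compacted log; not Kafka's log cleaner implementation.
final class CompactionSketch {
    // One log entry; a null value marks a tombstone (a delete for that key).
    record Entry(long offset, String key, String value) {}

    // Keeps only the latest entry per key, preserving the original offset order.
    static List<Entry> compact(List<Entry> log) {
        Map<String, Long> latestOffset = new HashMap<>();
        for (Entry e : log) {
            latestOffset.put(e.key(), e.offset()); // later entries overwrite earlier ones
        }
        List<Entry> compacted = new ArrayList<>();
        for (Entry e : log) {
            if (latestOffset.get(e.key()).longValue() == e.offset()) {
                compacted.add(e); // offsets are not renumbered, so gaps are expected
            }
        }
        return compacted;
    }
}
```

reading the compacted log from the beginning still yields the current value of every key, and a tombstone tells the reader that its key was deleted.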

since disks these days have practically unlimited space and are very fast, kafka can provide features not usually found in a messaging system, like holding on to old messages for a long time. this flexibility allows for interesting applications of kafka.

kafka producer load balancing

the producer asks a kafka broker for metadata about which kafka broker is the leader for each topic partition, so no routing layer is needed. this leadership data allows the producer to send records directly to the broker that leads the partition.

the producer client controls which partition it publishes messages to, and can pick a partition based on some application logic. producers can partition records by key, round-robin or use a custom application-specific partitioner logic.
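the partitioning strategies and the direct-to-leader send can be sketched as follows. kafka's default partitioner hashes the serialized key with murmur2; this sketch swaps in java's string hashcode to stay self-contained, so it will not pick the same partitions as a real producer. the leader map stands in for the cluster metadata the producer fetches, and all names are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of producer-side partition selection (not Kafka's DefaultPartitioner).
final class PartitionerSketch {
    private final int numPartitions;
    private final AtomicInteger counter = new AtomicInteger();

    PartitionerSketch(int numPartitions) {
        this.numPartitions = numPartitions;
    }

    // Keyed records: the same key always maps to the same partition, keeping per-key order.
    int byKey(String key) {
        // Mask off the sign bit so the result is non-negative (String.hashCode stands in for murmur2).
        return (key.hashCode() & 0x7fffffff) % numPartitions;
    }

    // Unkeyed records: spread the load by cycling through the partitions.
    int roundRobin() {
        return (counter.getAndIncrement() & 0x7fffffff) % numPartitions;
    }

    // With metadata (partition -> leader broker), the producer sends straight to the leader.
    static String leaderFor(Map<Integer, String> leaders, int partition) {
        String broker = leaders.get(partition);
        if (broker == null) {
            throw new IllegalStateException("no leader known for partition " + partition);
        }
        return broker;
    }
}
```

a custom, application-specific partitioner would simply be another method that maps a record to a partition number using business logic.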

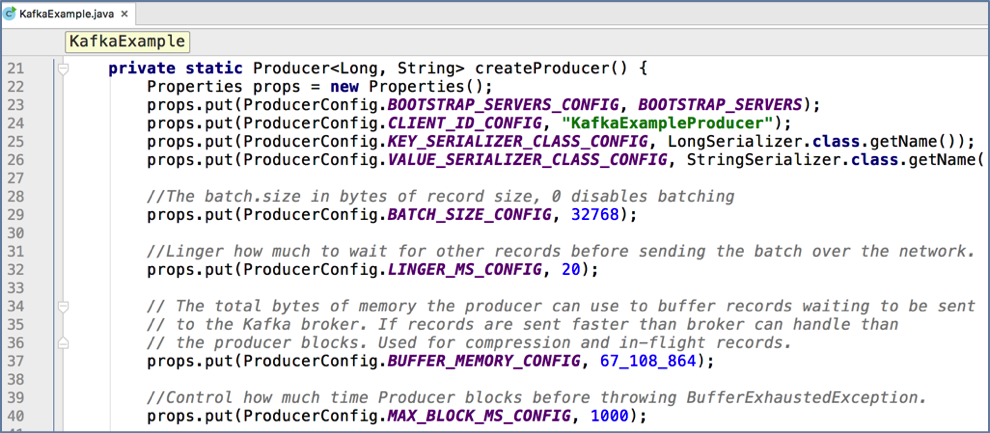

kafka producer record batching

kafka producers support record batching. batching can be configured by the size of the batch in bytes (batch.size), and batches can be auto-flushed based on time (linger.ms).

batching improves network io throughput, often drastically.

buffering is configurable and lets you trade additional latency for better throughput. in a heavily used system, it can even improve average throughput and reduce overall latency at the same time.

batching accumulates more bytes to send, which equates to fewer, larger i/o operations on kafka brokers and increases compression efficiency. for higher throughput, kafka producer configuration allows buffering based on time and size. the producer sends multiple records as a batch with fewer network requests than sending each record one by one.
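a minimal sketch of size-or-time batching, loosely modeled on the producer settings batch.size (bytes) and linger.ms (time). the class is hypothetical and ignores per-partition batches, compression, and the producer's background sender thread: a batch is handed off when the next record would push it past the size limit, or when its oldest record has waited at least the linger time.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical batch accumulator: flushes on size (like batch.size) or age (like linger.ms).
final class BatchAccumulator {
    private final int maxBatchBytes;
    private final long lingerMs;
    private final List<String> current = new ArrayList<>();
    private int currentBytes = 0;
    private long firstRecordTimeMs = -1;

    BatchAccumulator(int maxBatchBytes, long lingerMs) {
        this.maxBatchBytes = maxBatchBytes;
        this.lingerMs = lingerMs;
    }

    // Adds a record; returns the previous batch if this record did not fit in it, else null.
    List<String> append(String record, long nowMs) {
        int size = record.getBytes(StandardCharsets.UTF_8).length;
        List<String> ready = null;
        if (!current.isEmpty() && currentBytes + size > maxBatchBytes) {
            ready = drain(); // size limit reached: ship what we have
        }
        if (current.isEmpty()) {
            firstRecordTimeMs = nowMs; // the new batch starts aging now
        }
        current.add(record);
        currentBytes += size;
        return ready;
    }

    // Called periodically; returns the batch if its oldest record has waited at least lingerMs.
    List<String> pollExpired(long nowMs) {
        if (!current.isEmpty() && nowMs - firstRecordTimeMs >= lingerMs) {
            return drain();
        }
        return null;
    }

    private List<String> drain() {
        List<String> batch = new ArrayList<>(current);
        current.clear();
        currentBytes = 0;
        firstRecordTimeMs = -1;
        return batch;
    }
}
```

raising the size limit or the linger time makes batches bigger (better throughput, better compression) at the cost of records waiting longer before they are sent.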

kafka producer batching

kafka compression

in large streaming platforms, the bottleneck is not always cpu or disk but often network bandwidth. network bandwidth is even more of an issue in the cloud, because in containerized and virtualized environments multiple services could be sharing a nic. network bandwidth can also be a problem when talking datacenter to datacenter or over a wan.

batching is beneficial for efficient compression and network io throughput.

kafka provides end-to-end batch compression: instead of compressing one record at a time, kafka efficiently compresses a whole batch of records. the same message batch can be compressed and sent to the kafka broker/server in one go and written in compressed form into the log partition. you can even configure the compression so that no decompression happens until the kafka broker delivers the compressed records to the consumer.

kafka supports gzip, snappy, and lz4 compression (and zstd since kafka 2.1).
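the payoff of compressing a whole batch can be checked with java's built-in gzip (java.util.zip). this illustrates the principle only and is not kafka's record-batch format: compressing records one at a time pays the gzip header, trailer, and dictionary warm-up for every record, while compressing the batch lets field names and values repeated across records compress together. the class and method names are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.zip.GZIPOutputStream;

// Compares per-record vs whole-batch gzip sizes (illustration only; not Kafka's wire format).
final class BatchCompressionDemo {
    static int gzipSize(byte[] data) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(bytes)) {
            gzip.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected with an in-memory stream
        }
        return bytes.size(); // closing the gzip stream above wrote the trailer
    }

    // Total compressed size when each record is compressed on its own.
    static int perRecordSize(List<String> records) {
        int total = 0;
        for (String r : records) {
            total += gzipSize(r.getBytes(StandardCharsets.UTF_8));
        }
        return total;
    }

    // Compressed size when all records are concatenated and compressed as one batch.
    static int batchSize(List<String> records) {
        String joined = String.join("\n", records);
        return gzipSize(joined.getBytes(StandardCharsets.UTF_8));
    }
}
```

for a batch of similar json events, the whole-batch size comes out far smaller than the sum of the per-record sizes.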

pull vs. push/streams

with kafka, consumers pull data from brokers. in other systems, brokers push or stream data to consumers. messaging is usually a pull-based system (sqs and most moms use pull). with a pull-based system, if a consumer falls behind, it catches up later when it can.

since kafka is pull-based, it implements aggressive batching of data. like many pull-based systems, kafka implements a long poll (sqs and kafka both do). a long poll keeps a connection open after a request for a period of time and waits for data to respond with.
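the long-poll idea can be sketched with a blocking queue standing in for a partition: the consumer asks for up to maxRecords and, if nothing is available, waits up to a timeout instead of returning empty immediately or busy-looping. once data arrives, it takes whatever else is already there, which is where the batching comes from. this is a hypothetical in-process model, not kafka's fetch protocol (where the wait is governed by consumer settings such as fetch.min.bytes and fetch.max.wait.ms).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical long-poll model: block until data arrives or the timeout expires.
final class LongPollSketch {
    // maxRecords is assumed to be at least 1.
    static List<String> poll(BlockingQueue<String> partition, int maxRecords, long timeoutMs) {
        List<String> batch = new ArrayList<>();
        try {
            // Wait (up to timeoutMs) for the first record instead of returning right away.
            String first = partition.poll(timeoutMs, TimeUnit.MILLISECONDS);
            if (first == null) {
                return batch; // timed out with no data
            }
            batch.add(first);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return batch;
        }
        // Then grab whatever else is already available, up to maxRecords in total.
        partition.drainTo(batch, maxRecords - 1);
        return batch;
    }
}
```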

a pull-based system has to pull data and then process it, and there is always a pause between the pull and getting the data.

push-based systems push data to consumers (scribe, flume, reactive streams, rxjava, akka). push-based or streaming systems have problems dealing with slow or dead consumers: a push-system consumer can get overwhelmed when its rate of consumption falls below the rate of production. some push-based systems use a back-off protocol based on back pressure that allows a consumer to indicate it is overwhelmed (see reactive streams). not flooding a consumer, and recovering a consumer, is tricky when you also have to track message acknowledgments.

push-based or streaming systems can send a request immediately or accumulate requests and send them in batches (or a combination based on back pressure). push-based systems are always pushing data. the consumer can accumulate messages while it is processing data already sent, which is an advantage for reducing the latency of message processing. however, if the consumer dies while it is behind on processing, how does the broker know where the consumer was, and when does the data get sent again to another consumer? this is not an easy problem to solve. kafka gets around these complexities by using a pull-based system.

traditional mom consumer message state tracking

with most moms, it is the broker’s responsibility to keep track of which messages are marked as consumed. message tracking is not an easy task. as consumers consume messages, the broker keeps track of the state.

the goal in most mom systems is for the broker to delete data quickly after consumption. remember most moms were written when disks were a lot smaller, less capable, and more expensive.

this message tracking (the acknowledgment feature) is trickier than it sounds, as brokers must maintain a lot of state per message (sent, acknowledged) and know when to delete or resend the message.

kafka consumer message state tracking

remember that kafka topics get divided into ordered partitions. each message has an offset in this ordered partition. each topic partition is consumed by exactly one consumer per consumer group at a time.

because of this partition layout, the broker does not track offsets per message like a mom does; it only needs to store one offset per (consumer group, partition) pair. this offset tracking means a lot less data to track.

the consumer periodically sends its location (consumer group, partition offset pair) to the kafka broker, and the broker stores this offset data in an offset topic.

the offset style message acknowledgment is much cheaper compared to mom. also, consumers are more flexible and can rewind to an earlier offset (replay). if there was a bug, then fix the bug, rewind consumer and replay the topic. this rewind feature is a killer feature of kafka as kafka can hold topic log data for a very long time.
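a minimal sketch of why offset tracking is cheap: a partition is an append-only list, and the only per-consumer state is one committed offset per (consumer group, partition) pair. rewinding for a replay is just resetting that number. the class and method names are hypothetical; in kafka the committed offsets live in an internal topic (__consumer_offsets) rather than a hashmap.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of one topic partition plus per-group committed offsets.
final class OffsetTrackingSketch {
    private final List<String> log = new ArrayList<>();           // append-only partition log
    private final Map<String, Long> committed = new HashMap<>();  // group -> next offset to read

    long append(String record) {
        log.add(record);
        return log.size() - 1; // the new record's offset
    }

    // Reads up to max records starting at the group's committed offset.
    List<String> read(String group, int max) {
        int from = Math.min(Math.toIntExact(committed.getOrDefault(group, 0L)), log.size());
        int to = Math.min(log.size(), from + max);
        return new ArrayList<>(log.subList(from, to));
    }

    // Committing stores a single number for this (group, partition) pair.
    void commit(String group, long nextOffset) {
        committed.put(group, nextOffset);
    }

    // Replay: rewind the group to an earlier offset; the log itself is untouched.
    void seek(String group, long offset) {
        commit(group, offset);
    }
}
```

two groups reading the same partition never interfere with each other, because each only moves its own number.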

message delivery semantics

there are three message delivery semantics: at most once, at least once, and exactly once. at most once means messages may be lost but are never redelivered. at least once means messages are never lost but may be redelivered. exactly once means each message is delivered once and only once. exactly once is preferred but more expensive, and requires more bookkeeping for the producer and consumer.

kafka consumer and message delivery semantics

recall that all replicas have exactly the same log partitions with the same offsets, and each consumer group maintains its position in the log per topic partition.

to implement “at-most-once”, the consumer reads a message, then saves its offset in the partition by sending it to the broker, and finally processes the message. the issue with “at-most-once” is that a consumer could die after saving its position but before processing the message. then the consumer that takes over, or the restarted consumer, picks up at the last saved position, and the message in question is never processed.

to implement “at-least-once”, the consumer reads a message, processes it, and finally saves its offset to the broker. the issue with “at-least-once” is that a consumer could crash after processing a message but before saving the last offset position. then, if the consumer is restarted or another consumer takes over, it could receive the message that was already processed. “at-least-once” is the most common setup for messaging, and it is your responsibility to make message processing idempotent, which means getting the same message twice will not cause a problem (such as two debits).
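the only difference between the two setups described above is the order of "save offset" and "process". the sketch below simulates a consumer that crashes once, between its two steps, on one chosen message, and then resumes from the committed offset. the class, the crash parameter, and the in-memory "broker offset" are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simulation: the consumer crashes once, between its two steps, on one message.
final class DeliverySemanticsSketch {
    // Returns every processing of a message, in order, across the crash and the restart.
    static List<String> run(List<String> log, boolean commitBeforeProcess, int crashAtOffset) {
        List<String> processed = new ArrayList<>();
        int committed = 0;          // offset stored on the broker; a restarted consumer resumes here
        boolean crashed = false;
        while (committed < log.size()) {
            int offset = committed;
            boolean crashNow = offset == crashAtOffset && !crashed;
            if (commitBeforeProcess) {          // at-most-once: save position, then process
                committed = offset + 1;
                if (crashNow) {
                    crashed = true;             // died before processing: message is skipped
                    continue;
                }
                processed.add(log.get(offset));
            } else {                            // at-least-once: process, then save position
                processed.add(log.get(offset));
                if (crashNow) {
                    crashed = true;             // died before committing: message is redelivered
                    continue;
                }
                committed = offset + 1;
            }
        }
        return processed;
    }
}
```

with the log m0, m1, m2 and a crash on m1, at-most-once processes m0 and m2 (m1 is lost), while at-least-once processes m0, m1, m1, m2 (m1 is processed twice).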

to implement “exactly once” on the consumer side, the consumer would need a two-phase commit between the storage for the consumer position and the storage of the consumer’s message processing output. or, the consumer could store the message processing output in the same location as the last offset.
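the second option, storing the output in the same place as the offset, can be sketched as a single update of one immutable snapshot: the result and the next offset are written together, so a consumer restored after a crash sees either both or neither. the in-memory field here is a hypothetical stand-in for, say, one row updated in a single database transaction.

```java
import java.util.List;

// Hypothetical consumer that keeps its output and its offset in one snapshot, written together.
final class OffsetWithOutputSketch {
    // The consumer's entire durable state: running total plus the next offset to read.
    record Snapshot(long total, int nextOffset) {}

    private Snapshot store = new Snapshot(0, 0);

    // Processes one message; the new total and the new offset are published in a single write.
    void processNext(List<Long> log) {
        Snapshot s = store;
        if (s.nextOffset() >= log.size()) {
            return; // nothing left to read
        }
        long amount = log.get(s.nextOffset());
        store = new Snapshot(s.total() + amount, s.nextOffset() + 1);
    }

    Snapshot state() {
        return store;
    }

    // On restart, the consumer resumes from whatever snapshot was last stored.
    static OffsetWithOutputSketch restoreFrom(Snapshot snapshot) {
        OffsetWithOutputSketch consumer = new OffsetWithOutputSketch();
        consumer.store = snapshot;
        return consumer;
    }
}
```

because the offset can never run ahead of, or lag behind, the output it belongs to, a restarted consumer neither skips nor double-counts a message.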

kafka offers the first two, and it is up to you to implement the third from the consumer perspective.

kafka producer durability and acknowledgement

kafka offers operational predictability semantics for durability. when a message is published, it gets “committed” to the log, which means all isrs accepted the message. this commit strategy works out well for durability as long as at least one in-sync replica lives.

the producer connection could go down in the middle of a send, and the producer may not be sure whether a message it sent went through, so it resends the message. this resend logic is why it is important to use message keys and idempotent messages (duplicates ok). kafka did not guarantee that producer retries would not duplicate messages until recently (june 2017).

the producer can resend a message until it receives confirmation, i.e., an acknowledgment. a producer that resends a message without knowing whether the earlier send made it negates “exactly once” and “at-most-once” message delivery semantics.

producer durability

the producer can specify the durability level. the producer can wait on a message being committed; waiting for the commit ensures all in-sync replicas have a copy of the message.

the producer can send with no acknowledgments (0). the producer can send and get just one acknowledgment, from the partition leader (1). the producer can send and wait on acknowledgments from all in-sync replicas (-1, also written all), which is the default in current kafka versions (since 3.0; earlier versions defaulted to 1).

improved producer (june 2017 release)

kafka now supports “exactly once” delivery from the producer, performance improvements, and atomic writes across partitions. the producer achieves this by sending a sequence id; the broker keeps track of whether the producer already sent that sequence, and if the producer tries to send it again, it gets an ack for the duplicate message, but nothing is saved to the log. this improvement requires no api change.
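the broker-side duplicate check can be sketched as a map from producer id to the last sequence number appended. this is a deliberate simplification: the real broker tracks sequences per producer id and partition, rejects gaps as out-of-order sequences, and is switched on from the producer with enable.idempotence=true. the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical broker-side model of idempotent appends (simplified: one partition, no gap checks).
final class IdempotentLogSketch {
    private final List<String> log = new ArrayList<>();
    private final Map<Long, Integer> lastSequence = new HashMap<>(); // producerId -> last appended seq

    // Returns true if the record was appended, false if it was a duplicate (acked, not written).
    boolean append(long producerId, int sequence, String value) {
        Integer last = lastSequence.get(producerId);
        if (last != null && sequence <= last) {
            return false; // a retry of something already written: ack it, don't write it again
        }
        log.add(value);
        lastSequence.put(producerId, sequence);
        return true;
    }

    int size() {
        return log.size();
    }
}
```

a producer that retries "debit" with the same sequence number after losing the first ack gets acknowledged, but the log still holds the debit only once.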

kafka producer atomic log writes (june 2017 release)

another improvement is that kafka producers can write atomically across partitions. with atomic writes, kafka consumers can be configured to see only committed records (isolation.level=read_committed). kafka has a transaction coordinator that writes a marker to the topic log to signify what has been successfully transacted. the transaction coordinator and transaction log maintain the state of the atomic writes.
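how a read_committed consumer hides uncommitted writes can be sketched as follows: transactional records carry a transaction id, and a commit or abort marker is appended later. the consumer returns committed transactional records and non-transactional records, skips aborted ones and the markers, and cannot read past a transaction that is still open. this is a simplified, hypothetical model; the real consumer works with the last stable offset and aborted-transaction metadata rather than scanning the whole log like this.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of read_committed filtering over one partition (simplified).
final class ReadCommittedSketch {
    // txnId == null means a non-transactional record; marker is "COMMIT", "ABORT", or null.
    record Rec(String txnId, String value, String marker) {}

    static List<String> readCommitted(List<Rec> log) {
        // Pass 1: learn each transaction's outcome from the control markers.
        Map<String, String> outcome = new HashMap<>();
        for (Rec r : log) {
            if (r.marker() != null) {
                outcome.put(r.txnId(), r.marker());
            }
        }
        // Pass 2: return committed data, stopping at the first still-open transaction.
        List<String> visible = new ArrayList<>();
        for (Rec r : log) {
            if (r.marker() != null) {
                continue; // markers are never returned to the application
            }
            if (r.txnId() == null) {
                visible.add(r.value()); // non-transactional record
                continue;
            }
            String result = outcome.get(r.txnId());
            if (result == null) {
                break; // open transaction: nothing past this point is readable yet
            }
            if (result.equals("COMMIT")) {
                visible.add(r.value());
            }
        }
        return visible;
    }
}
```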

the atomic writes do require a new producer api for transactions.

here is an example of using the new producer api.

new producer api for transactions

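producer.initTransactions();

try {
    producer.beginTransaction();
    producer.send(debitAccountMessage);
    producer.send(creditOtherAccountMessage);
    // commit the consumed offsets as part of the same transaction
    producer.sendOffsetsToTransaction(...);
    producer.commitTransaction();
} catch (ProducerFencedException pfe) {
    // another producer with the same transactional.id took over; this one cannot recover
    ...
    producer.close();
} catch (KafkaException ke) {
    // any other error: abort so none of the sends become visible to read_committed consumers
    ...
    producer.abortTransaction();
}

kafka replication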

kafka replicates each topic’s partitions across a configurable number of kafka brokers. kafka’s replication model is built in, not a bolt-on feature like in most moms, as kafka was meant to work with partitions and multiple nodes from the start. each topic partition has one leader and zero or more followers.

leaders and followers are called replicas. the replication factor is the total number of replicas: the leader plus all of the followers. partition leadership is evenly shared among kafka brokers. by default, consumers only read from the leader, and producers only write to the leader.

the topic log partitions on followers are kept in sync with the leader’s log: isrs are an exact copy of the leader’s log minus the to-be-replicated records that are in flight. followers pull records in batches from their leader like a regular kafka consumer.

kafka broker failover

kafka keeps track of which kafka brokers are alive. to be alive, a kafka broker must maintain a zookeeper session using zookeeper’s heartbeat mechanism, and, if it is a follower, it must replicate the leader’s writes and not fall too far behind.

a replica that satisfies both conditions (a live zookeeper session and keeping up with the leader) is said to be in-sync. an in-sync replica is called an isr. each leader keeps track of a set of “in sync replicas”.

if an isr/follower dies or falls behind, the leader removes it from the set of isrs. falling behind means the replica has not caught up with the leader within the replica.lag.time.max.ms period.
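the isr rule can be sketched as: a follower stays in the isr only if it was fully caught up with the leader at some point within the last replica.lag.time.max.ms milliseconds. the method below is a hypothetical simplification of the leader's check, with the "last caught up" timestamps supplied as input.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical sketch of the leader's ISR check based on replica.lag.time.max.ms.
final class IsrSketch {
    // lastCaughtUpMs: for each follower, the last time its log matched the leader's log end.
    static Set<String> shrinkIsr(String leader, Map<String, Long> lastCaughtUpMs,
                                 long nowMs, long lagTimeMaxMs) {
        Set<String> isr = new TreeSet<>();
        isr.add(leader); // the leader is always in its own ISR
        for (Map.Entry<String, Long> e : lastCaughtUpMs.entrySet()) {
            if (nowMs - e.getValue() <= lagTimeMaxMs) {
                isr.add(e.getKey()); // caught up recently enough: still in sync
            }
        }
        return isr;
    }
}
```

a follower that is slow but keeps catching up stays in the isr; one that has not caught up for longer than the limit is dropped.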

a message is considered “committed” when all isrs have applied the message to their log. consumers only see committed messages. kafka guarantee: a committed message will not be lost, as long as there is at least one isr.
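because a message counts as committed only once every isr has it, the committed point of a partition can be sketched as the smallest log end offset among the isr members; kafka calls this point the high watermark, and consumers only read below it. the map of log end offsets and the class name are hypothetical inputs for illustration.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical sketch: committed (high-watermark) offset = min log end offset across the ISR.
final class HighWatermarkSketch {
    static long highWatermark(Map<String, Long> logEndOffsets, Set<String> isr) {
        if (isr.isEmpty()) {
            throw new IllegalArgumentException("ISR must contain at least the leader");
        }
        long hw = Long.MAX_VALUE;
        for (String replica : isr) {
            Long leo = logEndOffsets.get(replica);
            if (leo == null) {
                throw new IllegalArgumentException("no log end offset for " + replica);
            }
            hw = Math.min(hw, leo);
        }
        return hw; // records at offsets below hw are committed and visible to consumers
    }
}
```

a lagging isr member holds back the high watermark until the leader removes it from the isr.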

replicated log partitions

a kafka partition is a replicated log. a replicated log is a distributed data system primitive. a replicated log is useful for implementing other distributed systems using state machines. a replicated log models “coming into consensus” on an ordered series of values.

while a leader stays alive, all followers just need to copy values and ordering from their leader. if the leader does die, kafka chooses a new leader from its followers which are in-sync. if a producer is told a message is committed, and then the leader fails, then the newly elected leader must have that committed message.

the more isrs you have, the more candidates there are to elect during a leadership failure.

kafka and quorum

quorum is the number of acknowledgments required and the number of logs that must be compared to elect a leader such that there is guaranteed to be an overlap for availability. most systems use a majority vote; kafka does not use a simple majority vote, in order to improve availability.

in kafka, leaders are selected based on having a complete log. for comparison, with a majority vote and a replication factor of 3, at least two replicas must have a message before the leader declares it committed, and if a new leader needs to be elected, the new leader is guaranteed to have all committed messages only as long as no more than one replica has failed.

among the followers, there must be at least one replica that contains all committed messages. the problem with a majority-vote quorum is that it does not take many failures to leave the cluster inoperable.
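the trade-off can be written as a short count, following the reasoning in the kafka design documentation: to survive f failed replicas without losing a committed message,

```latex
\begin{aligned}
\text{majority vote:}\quad & n = 2f + 1 \text{ replicas},\; f + 1 \text{ acks per write} \\
\text{kafka isr:}\quad & n = f + 1 \text{ replicas},\; \text{acks from every isr member per write}
\end{aligned}
```

for example, tolerating f = 2 failures takes 2(2) + 1 = 5 replicas with a majority vote, but only 2 + 1 = 3 with kafka's isr approach.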

kafka quorum majority of isrs

kafka maintains a set of isrs per leader. only members of this set of isrs are eligible for leadership election. what the producer writes to a partition is not committed until all isrs acknowledge the write. the isr set is persisted to zookeeper whenever it changes.

this style of isr quorum allows producers to keep working without a majority of all nodes; they only need acknowledgment from the current isrs. it also allows a replica to rejoin the isr set and have its vote count, but the replica has to fully re-sync before rejoining, even if it lost un-flushed data during its crash.

all nodes die at the same time. now what?

kafka’s guarantee about data loss is only valid if at least one replica is in-sync.

if all followers that are replicating a partition leader die at once, kafka’s guarantee against data loss no longer holds. if all replicas for a partition are down and unclean leader election is enabled (unclean.leader.election.enable=true, the default before kafka 0.11.0), kafka chooses the first replica (not necessarily in the isr set) that comes alive as the leader. this choice favors availability over consistency.

if consistency is more important than availability for your use case, then you can set the config unclean.leader.election.enable=false. then, if all replicas for a partition are down, kafka waits for the first isr member (not the first replica) that comes alive to elect a new leader.
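the election choice can be sketched as: prefer a live replica from the last known isr; if none is alive, either wait (clean election) or take any live replica (unclean election, which risks losing committed messages). the method and its inputs are hypothetical, and kafka's controller makes this decision with more state than shown here.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Hypothetical sketch of choosing a new partition leader after the old one fails.
final class LeaderElectionSketch {
    // replicas: assigned replicas in preference order; isr: last known in-sync set; alive: live brokers.
    static Optional<String> electLeader(List<String> replicas, Set<String> isr,
                                        Set<String> alive, boolean uncleanElectionEnabled) {
        for (String r : replicas) {
            if (isr.contains(r) && alive.contains(r)) {
                return Optional.of(r); // clean: this replica has every committed message
            }
        }
        if (uncleanElectionEnabled) {
            for (String r : replicas) {
                if (alive.contains(r)) {
                    return Optional.of(r); // unclean: available again, but may miss committed data
                }
            }
        }
        return Optional.empty(); // partition stays offline until an ISR member comes back
    }
}
```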

producers pick durability

producers can choose durability by setting acks to none (0), the leader only (1), or all replicas (all, or -1).

acks=all is the default in current kafka versions (since 3.0; earlier versions defaulted to acks=1). with all, the acks happen when all current in-sync replicas (isrs) have received the message.

you can make the trade-off between consistency and availability. if durability is preferred over availability, then disable unclean leader election and specify a minimum isr size (min.insync.replicas).

the higher the minimum isr size, the better the guarantee for consistency. but the higher the minimum isr size, the more you reduce availability, since the partition will be unavailable for writes if the size of the isr set is less than the minimum threshold.
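the interaction of acks and the minimum isr size can be sketched as a check the leader makes for each write. this is a simplified, hypothetical model: with acks=all the write is refused when the isr is smaller than min.insync.replicas (a real producer sees a not-enough-replicas error), while acks=0 and acks=1 do not wait on the isr at all.

```java
import java.util.OptionalInt;

// Hypothetical model of the leader's decision for one produce request (simplified).
final class AcksSketch {
    enum Acks { NONE, LEADER, ALL } // acks=0, acks=1, acks=all (-1)

    // Returns how many replicas must have the record before the producer is acknowledged,
    // or empty if the write is refused because the ISR is smaller than min.insync.replicas.
    static OptionalInt requiredReplicas(Acks acks, int isrSize, int minInsyncReplicas) {
        switch (acks) {
            case NONE:
                return OptionalInt.of(0);       // producer does not wait at all
            case LEADER:
                return OptionalInt.of(1);       // only the leader's own write
            case ALL:
                if (isrSize < minInsyncReplicas) {
                    return OptionalInt.empty(); // refuse: durability over availability
                }
                return OptionalInt.of(isrSize); // every current ISR member must have it
            default:
                throw new IllegalArgumentException("unknown acks setting: " + acks);
        }
    }
}
```

with a replication factor of 3 and a minimum isr size of 2, acks=all writes keep working with one replica down and are refused with two down.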

quotas

kafka has quotas for consumers and producers to limit the bandwidth they are allowed to consume. these quotas prevent consumers or producers from hogging all the kafka broker resources. quotas are defined per client id or per user. the quota data is stored in zookeeper, so changes do not require restarting kafka brokers.
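a minimal sketch of a byte-rate quota, assuming the quota is expressed in bytes per second and measured over a fixed window: if a client sent more than the quota allows in the window, it is delayed just long enough for its average rate to fall back to the quota (bytes / (window + delay) = quota). this is a hypothetical simplification, not the broker's exact throttle-time calculation, which uses its own windowed metrics.

```java
// Hypothetical byte-rate quota check for one client id over one measurement window.
final class QuotaSketch {
    // Returns how long (ms) to delay the client so its average rate drops to the quota.
    static long throttleDelayMs(long bytesInWindow, long windowMs, long quotaBytesPerSec) {
        if (quotaBytesPerSec <= 0 || windowMs <= 0) {
            throw new IllegalArgumentException("quota and window must be positive");
        }
        // Time these bytes should have taken at the quota rate.
        long allowedTimeMs = bytesInWindow * 1000 / quotaBytesPerSec;
        // Delay only by the excess over the window actually used; under-quota clients wait 0.
        return Math.max(0, allowedTimeMs - windowMs);
    }
}
```

a client with a 1,000 bytes/sec quota that sends 3,000 bytes in a one-second window is delayed two seconds, bringing its average back to 3,000 bytes over three seconds.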

kafka low-level design and architecture review

how would you prevent a denial of service attack from a poorly written consumer?

use quotas to limit the consumer’s bandwidth.

what is the default producer durability (acks) level?

all (in kafka 3.0 and later; earlier versions defaulted to 1), which means all isrs have to write the message to their log partition.

what happens by default if all of the kafka nodes go down at once?

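it depends on unclean.leader.election.enable. when it is true (the default before kafka 0.11.0), kafka chooses the first replica (not necessarily in the isr set) that comes alive as the leader, to support availability. when it is false (the default since 0.11.0), kafka waits for an isr member to come back.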

why is kafka record batching important?

it optimizes io throughput over the wire as well as to the disk. it also improves compression efficiency by compressing an entire batch.

what are some of the design goals for kafka?

to be a high-throughput, scalable streaming data platform for real-time analytics of high-volume event streams like log aggregation, user activity, etc.

what are some of the new features in kafka as of june 2017?

producer atomic writes, performance improvements and producer not sending duplicate messages.

what are the different message delivery semantics?

there are three message delivery semantics: at most once, at least once and exactly once.

