What Is Deep Data Observability?

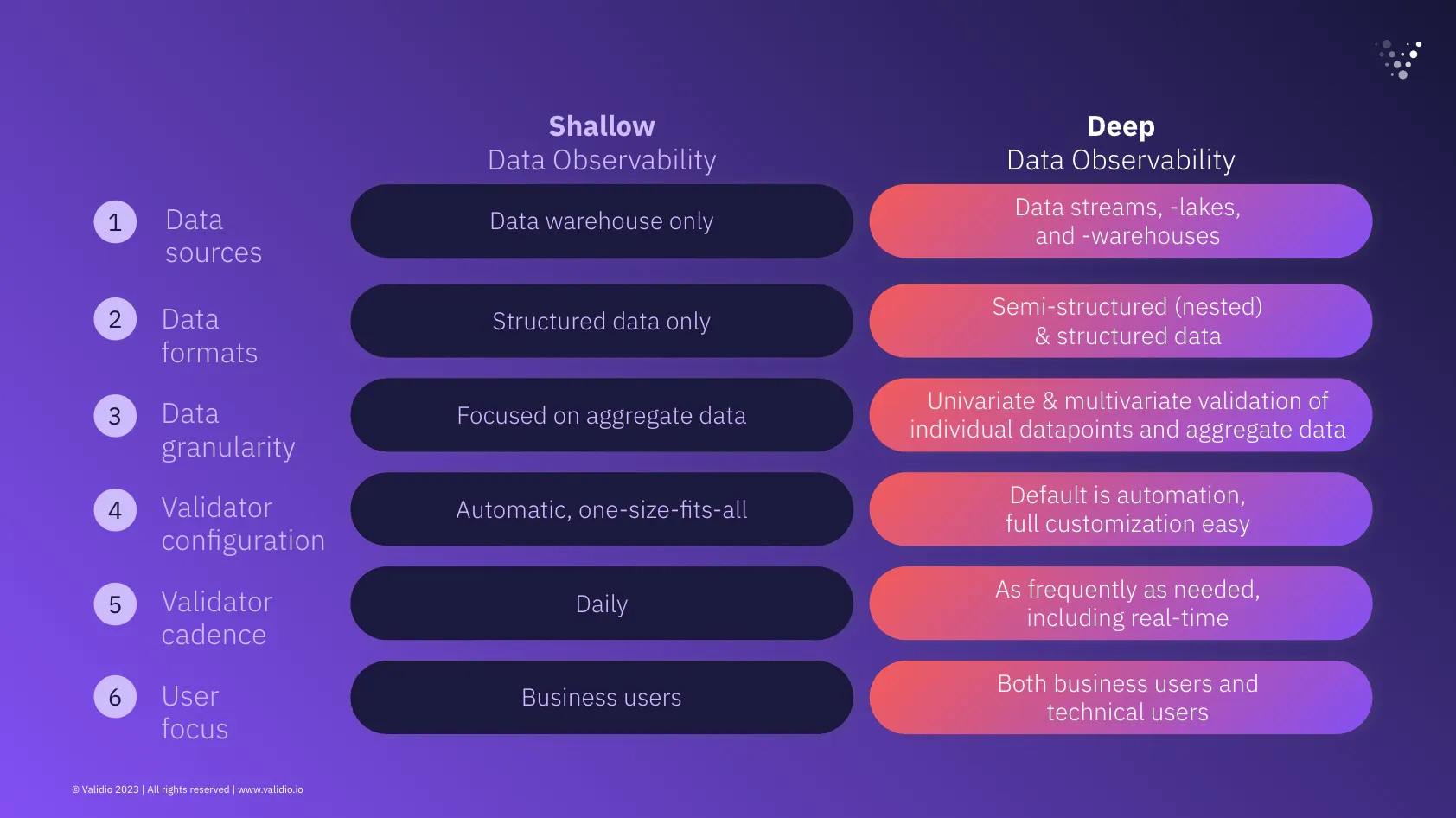

Deep data observability is truly comprehensive in terms of data sources, data formats, data granularity, validator configuration, cadence, and user focus.

Join the DZone community and get the full member experience.

Join For FreeThis article explains why Deep data observability is different from shallow: deep data observability is truly comprehensive in terms of data sources, data formats, data granularity, validator configuration, cadence, and user focus.

The Need for “Deep” Data Observability

2022 was the year when data observability really took off as a category (as opposed to old-school “data quality tools”), with the official Gartner terminology for the space. Similarly, Matt Turck consolidated the data quality and data observability categories in the 2023 MAD Landscape analysis. Nevertheless, the industry is nowhere near fully formed. In his 2023 report titled “Data Observability—the Rise of the Data Guardians,” Oyvind Bjerke at MMC Ventures discusses the space as having massive amounts of untapped potential for further innovation.

With the backdrop of this dynamic space, we go ahead and define data observability as:

The degree to which an organization has visibility into its data pipelines. A high degree of data observability enables data teams to improve data quality.

However, not all data observability platforms, i.e., tools specifically designed to help organizations reach data observability, are created equal. The tools differ in terms of the degree of data observability they can help data-driven teams achieve. We thus distinguish between deep data observability and shallow data observability. They differ on the following dimensions: Data sources, data formats, data granularity, validator configuration, validator cadence, and user focus.

In the rest of this article, we dive deep into deep data observability and explain the six dimensions that distinguish “Deep” data observability from “Shallow” data observability.

The Six Pillars of Deep Data Observability

1. Data Sources: Truly End-to-End

Shallow data observability solutions tend to focus only on the data warehouse through SQL queries. Deep data observability solutions, on the other hand, provide data teams with equal degrees of observability across data streams, data lakes, and data warehouses. There are two reasons why this is important:

First, data does not magically appear in the data warehouse. It often comes through a streaming source and lands in a data lake before it gets pushed to the data warehouse. Bad data can appear anywhere along the way, and you want to identify issues as soon as possible and pinpoint their origin.

Secondly, in an increasing amount of data use cases such as machine learning and automated decision-making, data never touches the data warehouse. For a data observability tool to be proactive and future-proof, it needs to be truly end-to-end, also in lakes and streams.

2. Data Formats: Structured and Semi-Structured

Data streams and lakes segue nicely into the next section: data formats. Shallow data observability is focused on the data warehouse, meaning it obtains observability for structured data. However, to reach a high degree of data observability end-to-end in your data stack, the data observability solution must support data formats that are common in data streams and lakes (and increasingly warehouses). With deep data observability, data teams can obtain high-quality data by monitoring data quality not only in structured datasets but also for semi-structured data in nested formats, e.g., JSON blobs.

3. Data Granularity: Univariate and Multivariate Validation of Individual Datapoints and Aggregate Data

Shallow data observability originally rose to fame based on analyzing one-dimensional (univariate) statistics about aggregate data (e.g., metadata). For example, looking at the average number of null values in one column.

However, countless cases of bad data have told us that data teams need to validate not only summary statistics and distributions but also individual data points. In addition, they need to look at dependencies (multivariate) between fields (or columns), and not just individual fields—real-world data does come with dependencies so most data quality problems are multivariate in nature. Deep data observability helps data-driven teams do exactly this: univariate and multivariate validation of individual data points and aggregated data. Let’s have a look at an example of when multivariate validation is needed.

The dataset below is segmented by country and on product_type (multiple variables, not just one), which is necessary in order to validate each individual subsegment (set of records). Each subsegment will likely have unique volume, freshness, anomalies, and distribution, which means it must be validated individually. Let’s say this dataset tracks all transaction data from an e-comm business. Then each country is likely to display individual purchasing behaviors, which means they need to be validated individually. Double-clicking one more time, we might also find that within each country, each product_type is subject to different purchasing behaviors too. Thus, we need to segment both columns to validate the data truly.

4. Validator Configuration: Automatically Suggested as Well as Manually Configured

Depending on your organization, you might be looking for various degrees of scalability in your data systems. For example, suppose you’re looking for a “set it and forget it” type of solution that alerts you whenever something unexpected happens; then, shallow data observability is what you’re after. In that case, you will get a bird’s eye view of, e.g., all tables in your data warehouse and whether they behave as expected.

Conversely, your business might have unique business logic or custom validation rules you’ll want to set up. The degree to which you can do this custom setup in a scalable way determines the degree to which you have deep data observability. If each custom rule requires a data engineer to write SQL, you’re looking at a not-so-scalable setup, and it will be very challenging to reach the state of deep data observability. Instead, if you have a quick-to-implement menu of validators that can be combined in a tailored way to suit your business, then deep data observability is within reach. Setting up custom validators should not be reserved for code-savvy data team members only.

5. Multi-Cadence Validation: As Frequently as Needed, Including Real-Time

Again, depending on your business needs, you might have different requirements for data observability on various time horizons. For example, suppose you use a standard type of setup where data is loaded into your warehouse daily. In that case, shallow data observability, which only supports a standard daily cadence, fulfills your needs.

Instead, if your data infrastructure is more complex, with some sources being updated in real-time, some daily, and others less frequently, you will need support to validate data with all of these cadences. This multi-cadence need is especially true for companies relying on any kind of data for rapid decision-making or real-time product features, e.g., dynamic pricing, IoT applications, retail businesses that rely heavily on digital marketing, etc. A deep data observability platform has full support for validating data for all these use cases. It ensures that you get insights into your data at the right time according to your business context. It also means that you can act on bad data right when it occurs and before it hits your downstream use cases.

6. User Focus: Both Technical and Non-Technical

Data quality is an inherently cross-functional problem, which is part of the reason why it can be so challenging to solve. For example, the person who knows what “good” data looks like in a CRM dataset might be a salesperson with their boots on the ground in sales calls. Thus, the person that moves (or ingests) data from the CRM system into the data warehouse might have no insight into this at all and might naturally be more concerned with whether the data pipelines ran as scheduled.

Shallow data observability solutions primarily cater to one single user group. They either focus on the data engineer, who cares mostly about the nuts and bolts of the pipelines and whether the system scales. Or, they focus on business users, who might care mostly about dashboards and summary statistics.

Deep data observability is obtained when both types of users are kept in mind. In practice, this means providing multiple modes of controlling a data observability platform: through a command line interface and through a graphical user interface. It might also entail multiple access levels and privileges. In this way, all users can collaborate on configuring data validation and obtain a high degree of visibility into data pipelines. This, in turn, effectively democratizes data quality within the whole business.

What’s Next?

We’ve now covered the six dimensions differentiating shallow and deep data observability. We hope that this report gives you two frameworks to rely on when evaluating your business needs for data quality and data observability tooling.

Published at DZone with permission of Patrik Liu Tran. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments