What Is Data Locality?

This article covers leveraging Data Locality in your Big Data processing.

Join the DZone community and get the full member experience.

Join For FreeFrom Software Mistakes and Trade-offs book

This article explains the concept of data locality and how it can help with big data processing.

In big data applications, both streaming and batch processing, we often need to use data from multiple sources to get insights and business value. The data locality pattern allows us to move computation to data. The data can live in the database or the file system. The situation is simple as long as our data fits into the disk or memory of our machines. Processing can be local and fast. In big data applications, it is not feasible to store large amounts of data on one machine in the real world. We need to employ techniques such as partitioning to split the data among multiple machines. Once the data is on multiple physical hosts, however, it gets harder to get insights from data that are distributed in locations that are reachable via the network. Joining data in such a scenario is not a trivial task and needs careful planning. In this article, we will follow the process of merging data in such a big data scenario.

Let's start this article by understanding a concept that plays a crucial role in processing a nontrivial amount of data: data locality. To understand why this concept solves many problems, we will look at a simple system that does not leverage data locality.

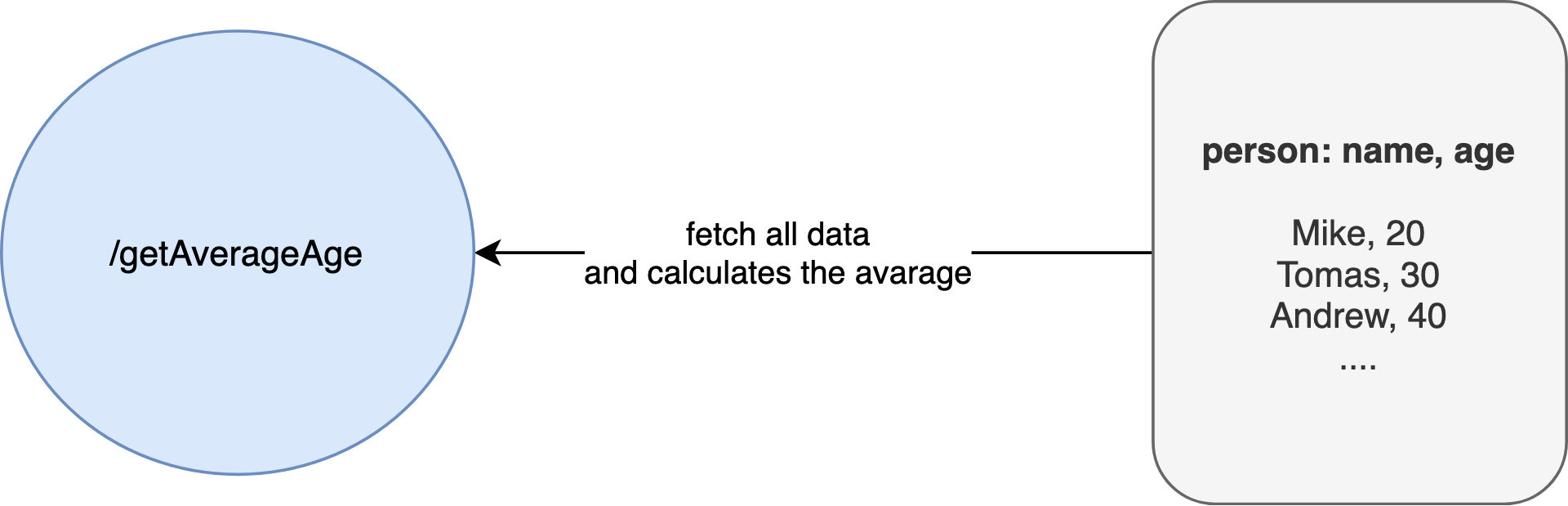

Let's imagine that we have /getAverageAge HTTP endpoint that returns the average age of all users managed by the service.

Figure 1. Move data to computation.

When the client executes this HTTP call, the service fetches all the data from an underlying data store. It can be a database, file, or anything persistent. Once all data is transferred to the service, it executes the logic of calculating an average. It adds all people's ages, counts the number of people, and divides the sum by the number of persons. Such a value is returned to the end-user. It's important to note that only one number is returned.

We can describe this scenario as moving data to computations. There are a couple of important observations here to make. The first one is that we need to fetch all data. It can be tens of gigabytes. As long as this data fits in the machine's memory that calculates the average, there is no problem. The problem appears when operating on big data sets that can have terabytes or petabytes of data. For such a scenario, transferring all data to the machine may not be feasible or very complex. We could, for example, use a splitting technique and process data in batches. The second important observation to make is that we will need to send and retrieve a lot of data via the network. The I/O operations are the slowest ones in the processing. This involves the file system reads and blocking. In such cases, we are transferring a substantial amount of data. Therefore, there is a non-negligible probability that some of the network packets will get lost, and we will need to retransmit parts of the data. Finally, we can see that the end-user is not interested in any data besides the result: in this case, an average.

One of the pros of this solution is simplicity from the programming perspective, assuming that the amount of data we want to process fits machine memory.

These observations and drawbacks were the main reasons why processing in such a scenario is inverted, and we are moving computations to data.

Moving Computations to Data

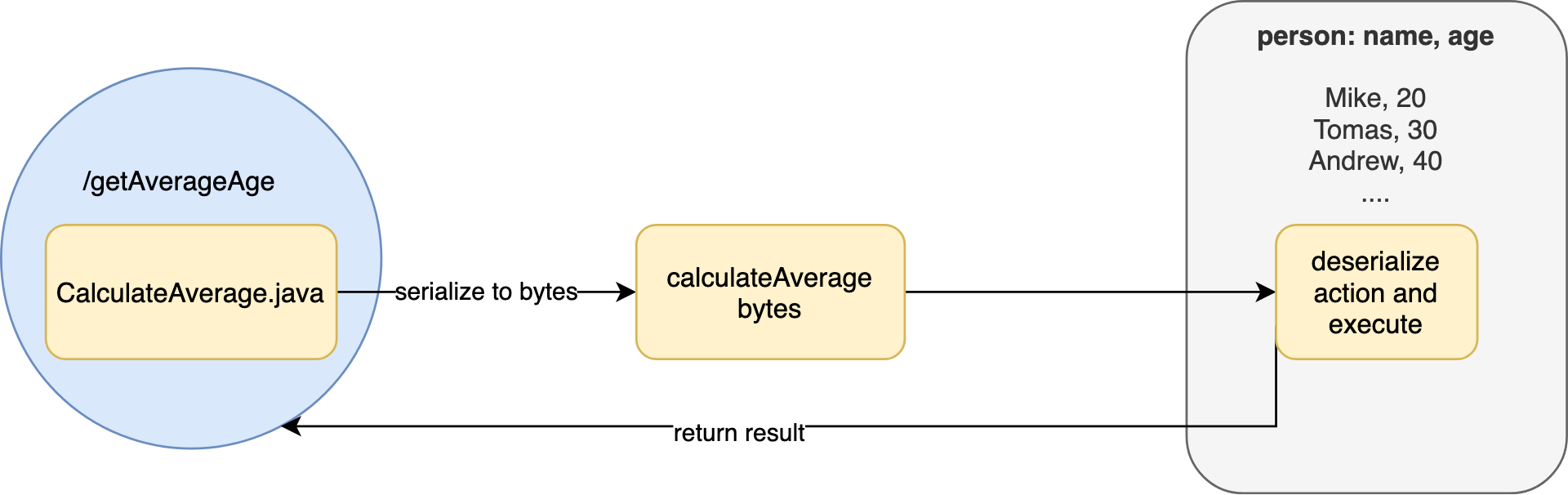

At this point, we know that sending data to computations has a lot of drawbacks, and it may be not feasible for big data sets. Let's solve the same problem by leveraging the data locality technique. In this scenario, the end-user sees the same HTTP endpoint responsible for calculating an average. The underlying processing, however, changes a lot. The calculation of an average is simple logic, but still, it involves some coding. We need to extract the age field from every person, add this data, and divide by the count. The big data processing frameworks expose an API that allows engineers to code such transformations and concatenation easily. Let's assume that we are using java to code this logic. The logic responsible for computation is created in the service. We need a way to transfer it to the machine that has the actual data.

Figure 2. Move computation to data.

The first step that needs to be done is to serialize the caclauteAverage.java to bytes. We need this form of representation to be able to send the data easily via a network. The data node (a machine that store data) needs to have a running process responsible for retrieving the serialized logic. Next, it transforms the bytes (deserializing them) into a form that can be executed on the data node. Most big data frameworks (such as Apache Spark or Hadoop) provide their mechanism for serializing and deserializing the logic. Once the logic is deserialized it is executed on the data node itself. The logic is operating on the data that resides on the local file system from the calculated average function perspective. There is no need to send any person's data to the service that exposes the HTTP endpoint. When the logic calculates the average successfully, only the resulting number is transferred to the service. The service returns the data to the end-user.

There are important observations here to make. First, the amount of data that we needed to transform via a network is very small. We need to transform only the serialized function and the resulting number. Since the network and I/O is a bottleneck in such processing, this solution will perform substantially better. We turn the processing that was I/O bound into processing that is CPU bound. If we have to speed up the average calculation, we can, for example, increase the number of cores on the data node. For the move data to computation use case, it was harder to speed up the processing because increasing network throughput is not always feasible.

The solution that leverages data locality is more complex because we need logic for serializing processing. Such logic may get complicated for more advanced processing. Also, we need a dedicated process that runs on the data node. This process needs to be able to deserialize the data and execute the logic. Fortunately, both steps are implemented and provided by big data frameworks such as Apache Spark.

Some readers may notice that the same data locality pattern is applied for databases. If you wish to calculate the average, you are issuing a query (e.g. SQL) that is transferred to a database. Next, the database is deserializing the query and executing the logic that leverages data locality. Those solutions are similar. However, big data frameworks give you more flexibility. You can execute the logic on data nodes that contain all kinds of data: Avro, JSON, parquet, and any other format. You are not tied to a database-specific execution engine.

Scaling Processing Using Data Locality

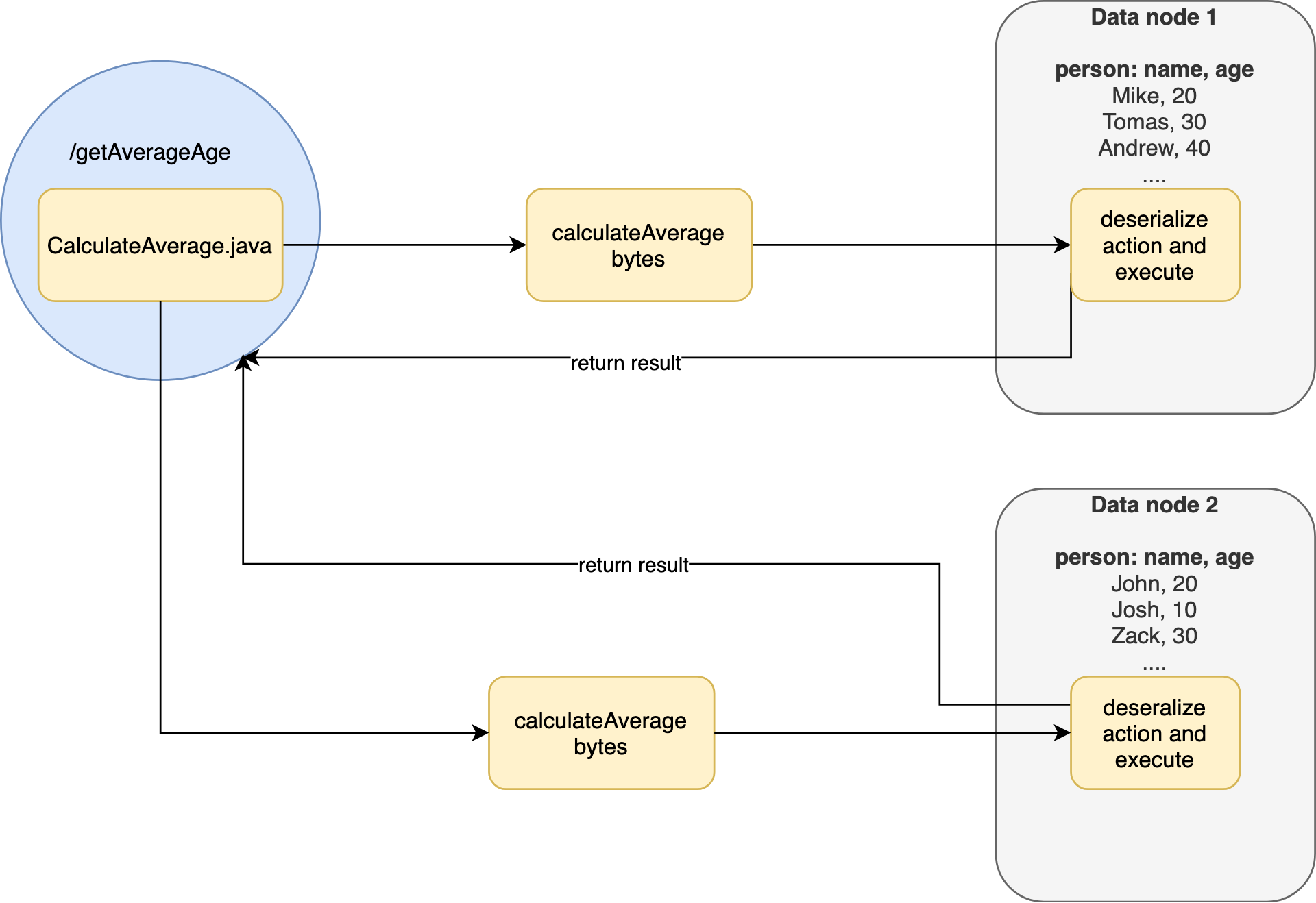

Data locality plays a crucial role in Big Data processing because it allows us to scale and parallelize the processing very easily. Imagine a scenario in which our data is stored on the data node doubled, and the total amount of data does not fit the disk space of one node. We cannot store all the data on one physical machine, so we decided to divide it between two machines.

If we used the technique of moving data to computations, the amount of data we need to transfer via the network would double. This would slow the processing substantially, and get even worse if we're to add more nodes.

Figure 3. Scaling processing using data locality

Scaling and parallelizing processing while leveraging data locality is fairly easy to achieve. Instead of sending the serialized processing to one data node, we will send it to two data nodes. Each of the data nodes will have a process responsible for the deserialization of logic and running it. Once the processing is completed, the resulting data is sent to a service that coalesces it and returns it to end-users.

At this point, we know the benefits of data locality. Next, we need to understand how to split the big amount of data into N data nodes. This is essential to understand if we want to operate on big data and get business value. This is discussed at length in the book.

That’s all for this article.

Opinions expressed by DZone contributors are their own.

Comments