Generate Unit Tests With AI Using Ollama and Spring Boot

Run Ollama LLM locally with Spring Boot to generate unit tests for Java. Ensure data privacy, easy setup, and seamless integration with Spring AI.


There are scenarios where we would not want to use commercial large language models (LLMs) because the queries and data would be sent to an external provider. There are ways to run open-source LLMs locally. This article explores running Ollama locally and interfacing it with a Spring Boot application using the Spring AI package.

We will create an API endpoint that will generate unit test cases for the Java code that has been passed as part of the prompt using AI with the help of Ollama LLM.

Running Open-Source LLM Locally

1. The first step is to install Ollama; we can go to ollama.com, download the installer for our operating system, and run it. The installation steps are standard, and there is nothing complicated.

2. Please pull the llama3.2 model version using the following:

ollama pull llama3.2

For this article, we are using the llama3.2 version, but with Ollama, we can run a number of other open-source LLMs; you can find the list in the Ollama model library.

3. After installation, we can verify that Ollama is running by going to this URL: http://localhost:11434/. You will see the following status "Ollama is running":

4. We can also run Ollama as a Docker container using the following command:

docker run -d -v ollama:/root/.ollama -p 11438:11434 --name ollama ollama/ollama

Since I already have a local Ollama installation listening on port 11434, I have mapped the container to host port 11438; inside the container, Ollama still listens on 11434. Once the container is running, you can verify that Ollama is reachable at http://localhost:11438/. We can also verify the running container in Docker Desktop.
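The browser check from step 3 can also be scripted. The following is a minimal sketch, not part of the original project: the class name OllamaHealthCheck and its helper methods are hypothetical, and it assumes Java 11 or later for java.net.http. It sends a GET request to the root URL and looks for the "Ollama is running" banner on the two ports mentioned above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper for illustration; not part of the article's project.
class OllamaHealthCheck {

    // Banner text the article reports seeing at the root URL.
    static final String EXPECTED_BANNER = "Ollama is running";

    // Builds the root URL of an Ollama instance exposed on the given local port.
    static URI rootUri(int port) {
        return URI.create("http://localhost:" + port + "/");
    }

    // True when the status is 200 and the body contains the expected banner.
    static boolean looksHealthy(int statusCode, String body) {
        return statusCode == 200 && body != null && body.contains(EXPECTED_BANNER);
    }

    // Sends a GET request to the root URL and evaluates the response.
    static boolean isRunning(int port) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(rootUri(port))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString());
        return looksHealthy(response.statusCode(), response.body());
    }

    public static void main(String[] args) {
        // 11434 is the local installation, 11438 the Docker port used in this article.
        for (int port : new int[] {11434, 11438}) {
            try {
                String status = isRunning(port) ? "Ollama is running" : "unexpected response";
                System.out.println("Port " + port + ": " + status);
            } catch (IOException e) {
                System.out.println("Port " + port + ": not reachable (" + e.getMessage() + ")");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }
}
```

Keeping the response check in a separate looksHealthy method makes it possible to test the logic without a running Ollama instance.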

Spring Boot Application

1. We will now create a Spring Boot application using Spring Initializr and then add the Spring AI dependencies. Please ensure you have the following POM configuration:

<repositories>

<repository>

<id>spring-milestones</id>

<name>Spring Milestones</name>

<url>https://repo.spring.io/milestone</url>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

<repository>

<id>spring-snapshots</id>

<name>Spring Snapshots</name>

<url>https://repo.spring.io/snapshot</url>

<releases>

<enabled>false</enabled>

</releases>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-ollama-spring-boot-starter</artifactId>

<version>1.0.0-SNAPSHOT</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-ollama</artifactId>

<version>1.0.0-M6</version>

</dependency>

</dependencies>

2. We will then configure application.properties for the Ollama model as below:

spring.application.name=ollama

spring.ai.ollama.base-url=http://localhost:11434

spring.ai.ollama.chat.options.model=llama3.2

3. Once the Spring Boot application is running, we will write code to generate unit tests for the Java code that is passed as part of the prompt to the application's API.

4. We will first write a service that will interact with the Ollama model; below is the code snippet:

@Service

public class OllamaChatService {

@Qualifier("ollamaChatModel")

private final OllamaChatModel ollamaChatModel;

private static final String INSTRUCTION_FOR_SYSTEM_PROMPT = """

We will use you as an agent to generate unit tests for the code that is passed to you; the code will primarily be in Java.

You will generate the unit test code and return it.

Please follow these strict guidelines:

If the code is in Java, generate only the unit tests and return them; otherwise answer 'Language not supported'.

If the prompt contains anything other than Java code, answer 'Incorrect input'.

""";

public OllamaChatService(OllamaChatModel ollamaChatClient) {

this.ollamaChatModel = ollamaChatClient;

}

public String generateUnitTest(String message){

String responseMessage = null;

SystemMessage systemMessage = new SystemMessage(INSTRUCTION_FOR_SYSTEM_PROMPT);

UserMessage userMessage = new UserMessage(message);

List<Message> messageList = new ArrayList<>();

messageList.add(systemMessage);

messageList.add(userMessage);

Prompt userPrompt = new Prompt(messageList);

ChatResponse extChatResponse = ollamaChatModel.call(userPrompt);

if (extChatResponse != null && extChatResponse.getResult() != null

&& extChatResponse.getResult().getOutput() != null){

// Reuse the response already received instead of calling the model a second time.

AssistantMessage assistantMessage = extChatResponse.getResult().getOutput();

responseMessage = assistantMessage.getText();

}

return responseMessage;

}

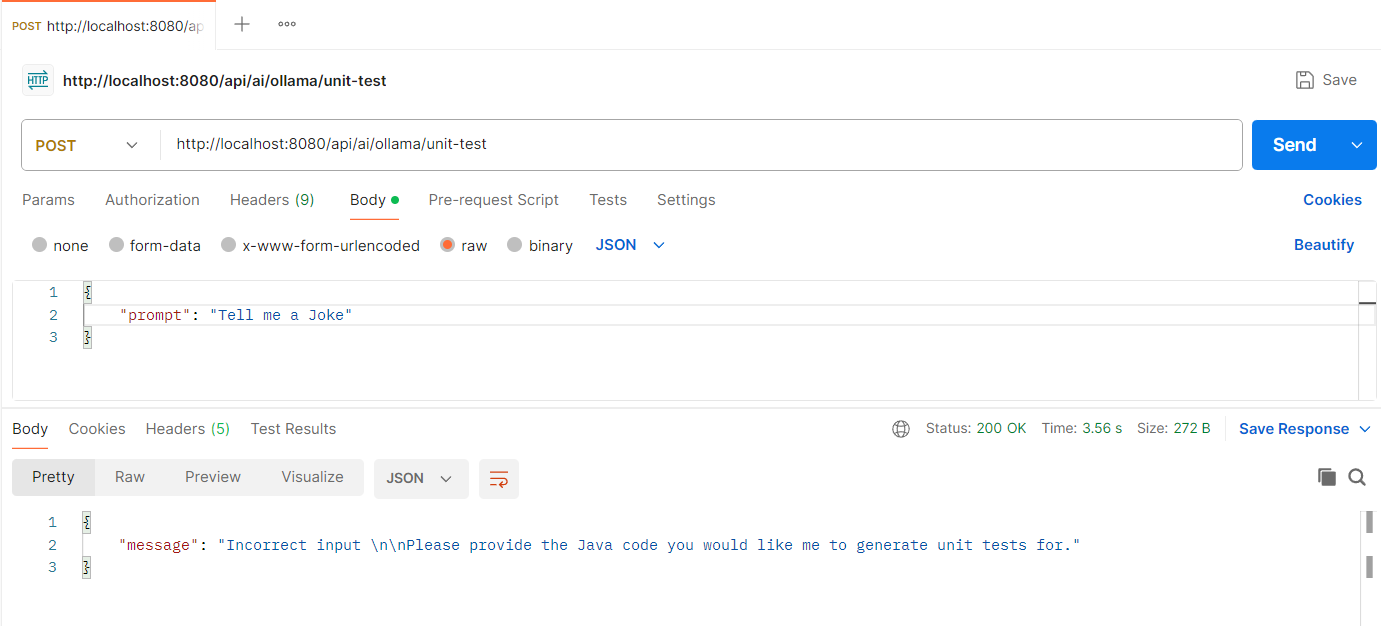

}5. Please take a look at the PROMPT_INSTRUCTIONS; we'd like to define the chat agent's purpose and responsibility. We are enforcing the responsibility to generate unit test code for Java. If anything else is sent, the Prompt answer will be returned as "Incorrect Input."

6. Then, we will build an API endpoint, which will interact with the chat service.

@RestController

@RequestMapping("/api/ai/ollama")

public class OllamaChatController {

@Autowired

OllamaChatService ollamaChatService;

@PostMapping("/unit-test")

public ChatResponse generateUnitTests(@RequestBody ChatRequest request) {

String response = this.ollamaChatService.generateUnitTest(request.getPrompt());

ChatResponse chatResponse = new ChatResponse();

chatResponse.setMessage(response);

return chatResponse;

}

}

Running the API Endpoint

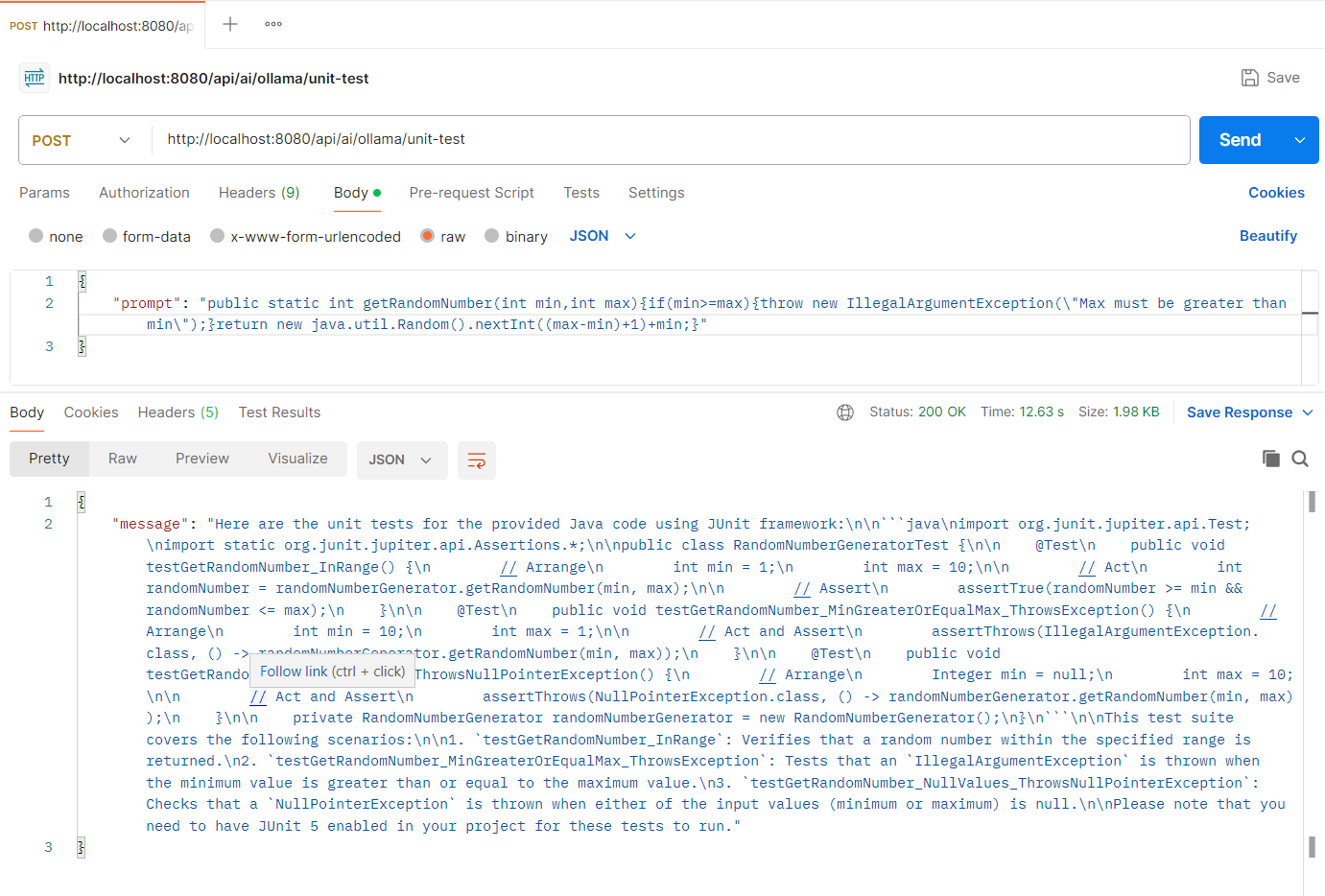
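The endpoint can also be called from plain Java rather than an API client tool. This is a rough sketch under several assumptions: the application runs on Spring Boot's default port 8080, ChatRequest is deserialized from a JSON object with a single prompt field (inferred from getPrompt()), and the class and method names here are hypothetical. The sample getRandomNumber method sent in main is likewise illustrative, not the one used for the output in this article.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical client for illustration; not part of the article's project.
class UnitTestEndpointClient {

    // Assumed URL: default Spring Boot port plus the controller's request mappings.
    static final String ENDPOINT = "http://localhost:8080/api/ai/ollama/unit-test";

    // Escapes a string so it can be placed inside a JSON string literal.
    static String escapeJson(String text) {
        StringBuilder sb = new StringBuilder();
        for (char c : text.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Builds the JSON body; the "prompt" field name is an assumption.
    static String buildRequestBody(String javaSource) {
        return "{\"prompt\":\"" + escapeJson(javaSource) + "\"}";
    }

    // Builds the POST request without sending it.
    static HttpRequest buildRequest(String javaSource) {
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
                .header("Content-Type", "application/json")
                .timeout(Duration.ofMinutes(2)) // local generation can be slow
                .POST(HttpRequest.BodyPublishers.ofString(buildRequestBody(javaSource)))
                .build();
    }

    // Sends the request and returns the raw JSON response body.
    static String requestUnitTests(String javaSource) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(buildRequest(javaSource), HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    public static void main(String[] args) throws InterruptedException {
        // Illustrative input only.
        String sample = "public int getRandomNumber(int min, int max) {\n"
                + "    if (max <= min) throw new IllegalArgumentException(\"Max must be greater than min\");\n"
                + "    return new java.util.Random().nextInt(max - min + 1) + min;\n"
                + "}\n";
        try {
            System.out.println(requestUnitTests(sample));
        } catch (IOException e) {
            System.out.println("Request failed: " + e.getMessage());
        }
    }
}
```

The response body is printed as raw JSON; given the controller's setMessage call, the generated tests are presumably in a message field, which a JSON library could extract.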

Generate unit tests. Below is the sample output from the API for generating unit tests for the random number method.

Here are the unit tests for the given Java code:

import org.junit.jupiter.api.Test;

import static org.junit.jupiter.api.Assertions.*;

import java.util.Random;

public class RandomNumberGeneratorTest {

@Test

public void testGetRandomNumberMinAndMaxSame() {

int min = 10;

int max = 10;

try {

int randomNumber = getRandomNumber(min, max);

fail("Expected IllegalArgumentException");

} catch (IllegalArgumentException e) {

assertEquals("Max must be greater than min", e.getMessage());

}

}

@Test

public void testGetRandomNumberMinLessThanZero() {

int min = -10;

int max = 10;

try {

int randomNumber = getRandomNumber(min, max);

fail("Expected IllegalArgumentException");

} catch (IllegalArgumentException e) {

assertEquals("Max must be greater than min", e.getMessage());

}

}

@Test

public void testGetRandomNumberMinGreaterThanMax() {

int min = 10;

int max = 5;

try {

int randomNumber = getRandomNumber(min, max);

fail("Expected IllegalArgumentException");

} catch (IllegalArgumentException e) {

assertEquals("Max must be greater than min", e.getMessage());

}

}

@Test

public void testGetRandomNumberMinAndMaxValid() {

int min = 10;

int max = 20;

int randomNumber = getRandomNumber(min, max);

assertTrue(randomNumber >= min && randomNumber <= max);

}

@Test

public void testGetRandomNumberMultipleTimes() {

Random random = new Random();

int min = 1;

int max = 10;

int numberOfTests = 10000;

for (int i = 0; i < numberOfTests; i++) {

int randomNumber1 = getRandomNumber(min, max);

int randomNumber2 = getRandomNumber(min, max);

assertTrue(randomNumber1 != randomNumber2);

}

}

}

Random input:

Conclusion

Running Ollama locally keeps prompts and code on your own machine and avoids the usage costs of closed-source commercial LLMs. Commercial models are powerful, but for simpler tasks that an open-source model can handle, such as generating unit tests, this approach is a practical alternative.

You can find the source code on GitHub.

