Untangling Deadlocks Caused by Java’s "parallelStream"



Concurrency is both the boon and bane of software development. The promise of enhanced performance through parallel processing comes hand in hand with intricate challenges, such as the notorious deadlock. Deadlocks, those insidious hiccups in the world of multithreaded programming, can bring even the most robust application to its knees. A deadlock is a situation where two or more threads are blocked forever, each waiting for a lock that another one holds.
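To make the definition concrete, here is a minimal, self-contained sketch (not taken from the application discussed in this post) in which two threads take the same two locks in opposite order. The class, method, and thread names are hypothetical. The CountDownLatch only forces the unlucky interleaving so that the deadlock happens on every run, and the JDK's ThreadMXBean.findDeadlockedThreads() is used to confirm it.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

public class DeadlockDemo {
    /** Starts two threads that lock the same two monitors in opposite order and reports whether the JVM sees them deadlocked. */
    public static boolean runDemo() {
        Object lockA = new Object();
        Object lockB = new Object();
        // Each thread counts down once it holds its first lock, then waits for the other one.
        // This forces the unlucky interleaving, so the deadlock occurs on every run.
        CountDownLatch bothHoldFirstLock = new CountDownLatch(2);

        Thread t1 = new Thread(() -> lockInOrder(lockA, lockB, bothHoldFirstLock), "demo-worker-1");
        Thread t2 = new Thread(() -> lockInOrder(lockB, lockA, bothHoldFirstLock), "demo-worker-2");
        // Daemon threads let the JVM exit even though these two never finish.
        t1.setDaemon(true);
        t2.setDaemon(true);
        t1.start();
        t2.start();

        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        for (int attempt = 0; attempt < 100; attempt++) {
            try {
                Thread.sleep(50);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
            long[] ids = mx.findDeadlockedThreads(); // null while no deadlock exists
            if (ids != null && contains(ids, t1.getId()) && contains(ids, t2.getId())) {
                return true;
            }
        }
        return false;
    }

    private static void lockInOrder(Object first, Object second, CountDownLatch latch) {
        synchronized (first) {
            latch.countDown();
            try {
                latch.await(); // still holding `first` while waiting
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            synchronized (second) { // blocks forever: the other thread holds `second`
                System.out.println("unreachable");
            }
        }
    }

    private static boolean contains(long[] ids, long id) {
        for (long candidate : ids) {
            if (candidate == id) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("deadlock detected: " + runDemo());
    }
}
```

Neither thread ever gets past its inner synchronized block: each holds the lock the other needs, which is exactly the "waiting for each other" situation described above.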

In this blog post, we dive deep into a real-world deadlock saga triggered by the seemingly innocent use of Java’s parallelStream. We’ll dissect the root cause and scrutinize thread stack traces.

The Scenario

Imagine a serene codebase that uses Java’s parallelStream on a Collection to boost processing speed. However, as our application grew more complex, an unexpected and insidious problem emerged: a deadlock. Threads, once allies, were now trapped in a paradoxical embrace. Later in this post, we’ll take a closer look at the stack traces of the two protagonists: ForkJoinPool.commonPool-worker-0 and ForkJoinPool.commonPool-worker-1.

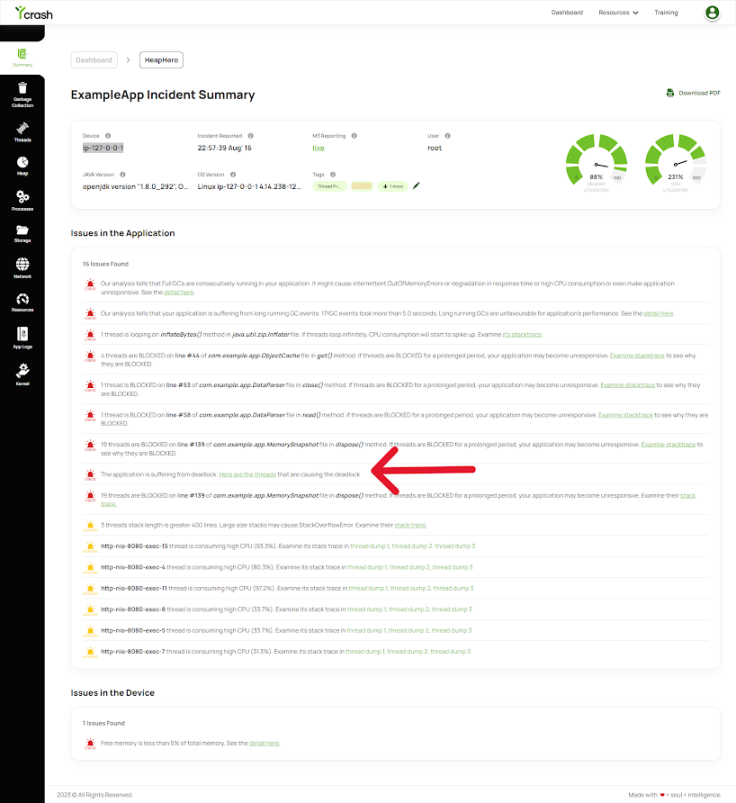

Leveraging yCrash for Resolution

In our journey to untangle this deadlock, we turned to a powerful troubleshooting tool, yCrash, which played the role of detective in unraveling our deadlock enigma. This tool specializes in analyzing complex Java applications and identifying performance bottlenecks, deadlocks, and other issues. yCrash lets us visualize thread interactions, analyze thread dumps, and pinpoint the exact source of contention. Armed with this insight, we were able to understand the deadlock’s origin and devise a solution. When we used yCrash to troubleshoot the problem, it produced a Root Cause Analysis (RCA) summary page, as depicted in the screenshot below:

fig: Image from RCA Report


Thread1: ForkJoinPool.commonPool-worker-0

```text
stackTrace:
java.lang.Thread.State: BLOCKED (on object monitor)
	at com.example.app.DataParser.read(DataParser.java:58)
	- waiting to lock <0x00000001d6ff09f0> (a com.example.app.DataParser)
	at com.example.app.ObjectLoader.read(ObjectLoader.java:196)
	at com.example.app.MemorySnapshot$ObjectCacheManager.load(MemorySnapshot.java:2152)
	at com.example.app.MemorySnapshot$ObjectCacheManager.load(MemorySnapshot.java:1)
	at com.example.app.ObjectCache.get(ObjectCache.java:52)
	- locked <0x00000001d6fafb00> (a com.example.app.MemorySnapshot$ObjectCacheManager)
	at com.example.app.MemorySnapshot.getObject(MemorySnapshot.java:1453)
	:
	:
	:
	at com.example.app.AnalyzerImpl.lambda$37(AnalyzerImpl.java:3248)
	at com.example.app.AnalyzerImpl$$Lambda$150/179364589.apply(Unknown Source)
	at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193)
	at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)
	at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)
	at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)
	at java.util.stream.ReduceOps$ReduceTask.doLeaf(ReduceOps.java:747)
	at java.util.stream.ReduceOps$ReduceTask.doLeaf(ReduceOps.java:721)
	at java.util.stream.AbstractTask.compute(AbstractTask.java:327)
	at java.util.concurrent.CountedCompleter.exec(CountedCompleter.java:731)
	at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)
	at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)
	at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)
	at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:175)

Locked ownable synchronizers:
	- None
```

Thread2: ForkJoinPool.commonPool-worker-1

```text
stackTrace:
java.lang.Thread.State: BLOCKED (on object monitor)
	at com.example.app.ObjectCache.get(ObjectCache.java:44)
	- waiting to lock <0x00000001d6fafb00> (a com.example.app.MemorySnapshot$ObjectCacheManager)
	at com.example.app.MemorySnapshot.getObject(MemorySnapshot.java:1453)
	at com.example.app.DataParser.readObjectArrayDump(DataParser.java:135)
	at com.example.app.DataParser.read(DataParser.java:65)
	- locked <0x00000001d6ff09f0> (a com.example.app.DataParser)
	at com.example.app.ObjectLoader.read(ObjectLoader.java:196)
	at com.example.app.ObjectInstance.read(ObjectInstance.java:135)
	- locked <0x00000001e5822bf8> (a com.example.app.ObjectInstance)
	at com.example.app.ObjectInstance.getAllFields(ObjectInstance.java:101)
	:
	:
	:
	at com.example.app.AnalyzerImpl.lambda$37(AnalyzerImpl.java:3248)
	at com.example.app.AnalyzerImpl$$Lambda$150/179364589.apply(Unknown Source)
	at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193)
	at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)
	at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)
	at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)
	at java.util.stream.ReduceOps$ReduceTask.doLeaf(ReduceOps.java:747)
	at java.util.stream.ReduceOps$ReduceTask.doLeaf(ReduceOps.java:721)
	at java.util.stream.AbstractTask.compute(AbstractTask.java:327)
	at java.util.concurrent.CountedCompleter.exec(CountedCompleter.java:731)
	at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)
	at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)
	at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)
	at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:175)

Locked ownable synchronizers:
	- None
```

The thread stack traces offer a glimpse into the heart of the deadlock. Both threads run the same stream lambda (AnalyzerImpl.lambda$37) and pass through MemorySnapshot.getObject, but they acquire two object monitors in opposite order. worker-0 holds the MemorySnapshot$ObjectCacheManager monitor <0x00000001d6fafb00> and is waiting to lock the DataParser monitor <0x00000001d6ff09f0>, while worker-1 holds that DataParser monitor and is waiting to lock the ObjectCacheManager monitor. Neither thread can proceed until the other releases its lock, so both stay BLOCKED forever.

Further analysis reveals that the threads are performing operations that require exclusive access to an object cache within the com.example.app package. This contention for the cache, with each thread waiting for the other to relinquish control, is the root cause of the deadlock. Surprisingly, the culprit that set the stage for this ordeal is the ever-familiar “parallelStream”…
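The circular wait in the two traces can be checked mechanically by building a small wait-for graph: each "locked" line records which thread holds a monitor, and each "waiting to lock" line records which monitor a thread is blocked on. The sketch below is a hypothetical illustration (WaitForGraph and its methods are not part of yCrash or the JDK). It feeds in the monitor addresses from the two traces above and follows the chain until it either revisits a thread or reaches one that is not blocked.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

public class WaitForGraph {
    // Monitor address -> thread holding it (from "- locked <...>" lines).
    private final Map<String, String> holderOf = new HashMap<>();
    // Thread -> monitor address it is blocked on (from "- waiting to lock <...>" lines).
    private final Map<String, String> waitingFor = new HashMap<>();

    public void locked(String thread, String monitor) {
        holderOf.put(monitor, thread);
    }

    public void waiting(String thread, String monitor) {
        waitingFor.put(thread, monitor);
    }

    /**
     * Follows thread -> awaited monitor -> holding thread. Returns true if the chain
     * revisits a thread (a cycle, so nobody in it can ever proceed) and false if it
     * reaches a thread that is not waiting on any recorded monitor.
     */
    public boolean leadsToCycle(String start) {
        Set<String> seen = new HashSet<>();
        String current = start;
        while (current != null && seen.add(current)) {
            String monitor = waitingFor.get(current);
            current = (monitor == null) ? null : holderOf.get(monitor);
        }
        return current != null;
    }

    /** Edges copied from the two stack traces in this post. */
    public static WaitForGraph fromTraces() {
        WaitForGraph g = new WaitForGraph();
        String w0 = "ForkJoinPool.commonPool-worker-0";
        String w1 = "ForkJoinPool.commonPool-worker-1";
        g.locked(w0, "0x00000001d6fafb00");  // MemorySnapshot$ObjectCacheManager
        g.waiting(w0, "0x00000001d6ff09f0"); // DataParser
        g.locked(w1, "0x00000001d6ff09f0");  // DataParser
        g.locked(w1, "0x00000001e5822bf8");  // ObjectInstance
        g.waiting(w1, "0x00000001d6fafb00"); // MemorySnapshot$ObjectCacheManager
        return g;
    }

    public static void main(String[] args) {
        WaitForGraph g = fromTraces();
        System.out.println("cycle from worker-0: " + g.leadsToCycle("ForkJoinPool.commonPool-worker-0"));
    }
}
```

Starting from worker-0, the walk goes to the DataParser monitor, then to its holder worker-1, then to the ObjectCacheManager monitor, and back to worker-0: a two-thread cycle.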

The Culprit: parallelStream

A deeper examination of the situation leads us to the use of Java’s parallelStream on a Collection. For those unfamiliar with it, Collection.parallelStream() returns a possibly parallel stream whose operations are split across multiple threads to speed up processing. That parallelism, however, can introduce complications, including deadlocks: code that previously ran on one thread at a time suddenly runs on several threads concurrently, so any locks it takes can now be contended.
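One way to see this for yourself is to record which threads execute a parallel pipeline. In this sketch (class and method names are illustrative), the names collected inside map typically include ForkJoinPool.commonPool-worker-N threads and may also include the calling thread, which participates in the work. isParallel() distinguishes the two kinds of stream.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

public class ParallelStreamThreads {
    /** Runs a parallel map over 10,000 elements and returns the names of the threads that executed it. */
    public static Set<String> threadsUsedByParallelStream() {
        // A concurrent set, because several threads add to it at the same time.
        Set<String> names = ConcurrentHashMap.newKeySet();
        List<Integer> elements = IntStream.range(0, 10_000).boxed().collect(Collectors.toList());
        elements.parallelStream()
                .map(e -> {
                    names.add(Thread.currentThread().getName()); // record who processed this element
                    return e * 2;
                })
                .collect(Collectors.toList());
        return names;
    }

    public static void main(String[] args) {
        List<Integer> sample = Arrays.asList(1, 2, 3);
        System.out.println("stream().isParallel(): " + sample.stream().isParallel());                 // false
        System.out.println("parallelStream().isParallel(): " + sample.parallelStream().isParallel()); // true
        System.out.println("threads: " + threadsUsedByParallelStream());
    }
}
```

The exact set of worker names varies with the machine's core count and with scheduling, which is also why a deadlock like the one above can appear intermittently.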

Both threads involved in the deadlock were workers of the common fork-join pool, ForkJoinPool.commonPool(), which parallel streams use by default and which is shared across the whole JVM. Because the pool ran the same lambda on different elements at the same time, two workers entered the shared object cache and data parser concurrently, and each ended up waiting for a lock the other held.
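To check which pool the workers belong to, the sketch below (hypothetical names again) asks each ForkJoinWorkerThread that runs part of a parallel pipeline for its pool via getPool(). Running two independent pipelines shows that both draw on the same ForkJoinPool.commonPool() instance rather than on a pool of their own.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.ForkJoinWorkerThread;
import java.util.stream.IntStream;

public class SharedCommonPool {
    /** Returns the distinct pools whose worker threads executed part of a parallel pipeline. */
    public static Set<ForkJoinPool> poolsUsed(int size) {
        Set<ForkJoinPool> pools = ConcurrentHashMap.newKeySet();
        IntStream.range(0, size).parallel().forEach(i -> {
            Thread t = Thread.currentThread();
            // The calling thread can also process chunks; only pool workers have a pool to report.
            if (t instanceof ForkJoinWorkerThread) {
                pools.add(((ForkJoinWorkerThread) t).getPool());
            }
        });
        return pools;
    }

    public static void main(String[] args) {
        // Two independent pipelines, each collecting the pools its workers belong to.
        Set<ForkJoinPool> first = poolsUsed(100_000);
        Set<ForkJoinPool> second = poolsUsed(100_000);
        ForkJoinPool common = ForkJoinPool.commonPool();
        boolean allCommon = first.stream().allMatch(p -> p == common)
                && second.stream().allMatch(p -> p == common);
        System.out.println("common pool parallelism: " + ForkJoinPool.getCommonPoolParallelism());
        System.out.println("every worker came from commonPool(): " + allCommon);
    }
}
```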

A Smoother Path: Choosing Stream Over ParallelStream

In our quest to untangle the deadlock puzzle, we made a discovery that changed the game: the use of parallelStream on our collection had inadvertently escalated resource contention.

Our use of parallelStream on our collection was like inviting multiple friends to play with the same toy at once, which led to a lot of pushing and shoving. This commotion caused our threads to clash and resulted in a deadlock. But we didn’t give up; we decided to play differently.

Instead of letting everyone play with the toy together, we asked them to take turns. We switched from parallelStream to a simpler approach: a plain stream. This change meant that only one friend played with the toy at a time. This friendly turn-taking reduced the chances of fights and let our threads work together without locking horns.
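A sequential stream runs every stage on the thread that invokes the terminal operation. This sketch (names are illustrative) records which threads execute a map step over stream() and ends up with exactly one name, so this pipeline never processes two elements concurrently.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

public class SequentialStreamThreads {
    /** Runs a sequential map over 10,000 elements and returns the names of the threads that executed it. */
    public static Set<String> threadsUsedByStream() {
        // A plain HashSet suffices: only the calling thread ever touches it.
        Set<String> names = new HashSet<>();
        List<Integer> elements = IntStream.range(0, 10_000).boxed().collect(Collectors.toList());
        elements.stream()
                .map(e -> {
                    names.add(Thread.currentThread().getName()); // record who processed this element
                    return e * 2;
                })
                .collect(Collectors.toList());
        return names;
    }

    public static void main(String[] args) {
        // Expected to contain a single name: the thread that invoked collect().
        System.out.println("threads: " + threadsUsedByStream());
    }
}
```

The trade-off is throughput: the pipeline no longer spreads work across cores, so any speedup parallelStream provided is given up along with the concurrent execution that exposed the deadlock.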

This switch wasn’t just about changing a word in our code; it was like choosing a new strategy in a game. And guess what? It worked! The threads, now playing one after the other, stopped bumping into each other. This meant no more deadlock, and our application could breathe a sigh of relief.

Below, you’ll find the code snippets that highlight this pivotal transition:

Original Code:

```java
import java.util.List;
import java.util.stream.Collectors;

List<?> elements = …;

elements.parallelStream().map(e -> {
    // Some code here that is causing deadlock…
}).collect(Collectors.toList());
```

Revised Code:

```java
import java.util.List;
import java.util.stream.Collectors;

List<?> elements = …;

elements.stream().map(e -> {
    // Some code here that was causing deadlock…
}).collect(Collectors.toList());
```

A Change That Makes a Difference

Our deadlock challenge revealed that small changes can yield substantial results. Switching from “parallelStream” to a regular stream, though simple, made a remarkable difference.

Moving forward, we’ll keep in mind that the best solution isn’t always the most complex. A prudent choice, supported by yCrash’s insightful analysis, turned a deadlock obstacle into a smoother path. Our journey in coding echoes the essence of exploration, learning, and making informed decisions.

Published at DZone with permission of Mahesh Devda. See the original article here.

