Unlocking Efficiency in Big Data: The Power of Data Deduplication Revealed!

In this article, let's understand why data deduplication is crucial in the context of Big Data and the various methods to achieve deduplication.


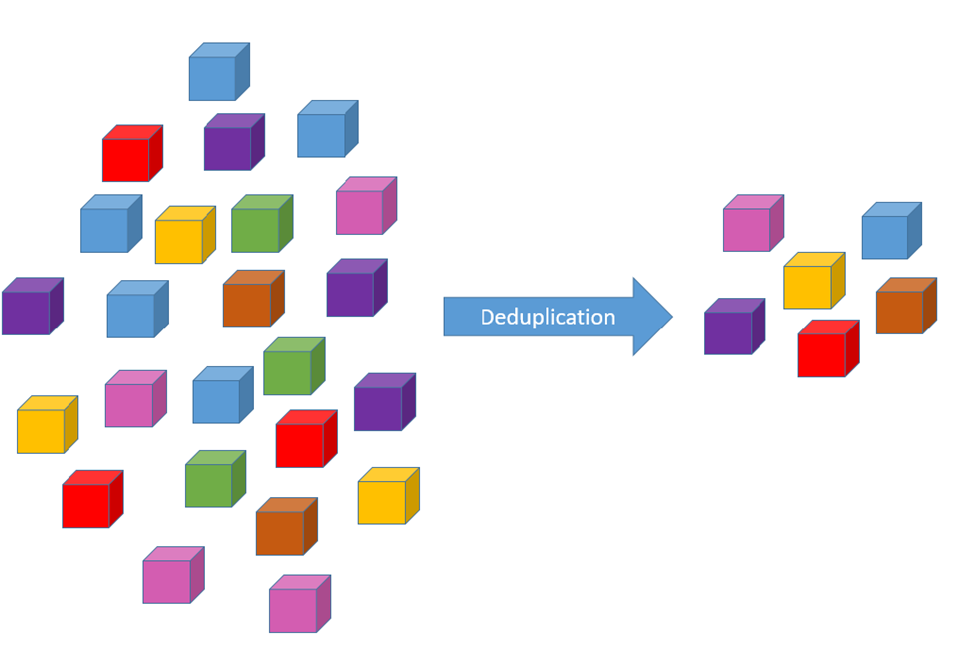

Data deduplication is a technique used to eliminate duplicate records or rows from a dataset. It matters especially in Big Data environments, where the scale and volume of the data magnify the cost of every redundant record.

Here are some key reasons why data deduplication is crucial in the context of Big Data:

- Storage efficiency: Big Data systems deal with massive amounts of data generated from various sources. Storing redundant data consumes a considerable amount of storage space. Data deduplication eliminates duplicate records, reducing storage requirements and optimizing storage efficiency.

- Cost savings: Storing and managing large volumes of data can be expensive. By deduplicating data, organizations can reduce their storage costs significantly, leading to cost savings in infrastructure and maintenance.

- Faster processing: When processing large datasets, data deduplication can improve data access times and query performance. With less redundant data to process, queries and analysis can be executed faster, enabling quicker insights and decision-making.

- Data quality and consistency: Duplicate data can lead to data inconsistency and errors in analysis. By removing duplicates, data quality improves, ensuring that analytics and business intelligence reports are accurate and reliable.

- Streamlining data workflows: Big Data workflows often involve data integration from multiple sources. Data deduplication simplifies the integration process by reducing the number of records to be processed.

- Enhanced data analytics: Big Data analytics and machine learning models can be more accurate when working with clean and deduplicated data. Eliminating duplicates ensures that algorithms aren't influenced by repeated data points.

- Backup and disaster recovery: Data deduplication can also be valuable in backup and disaster recovery scenarios. Storing unique data in backups reduces backup storage requirements and improves recovery times.

- Data privacy and compliance: In scenarios where sensitive data needs to be anonymized or pseudonymized for privacy and regulatory compliance, data deduplication can help maintain data privacy while minimizing the risk of reidentification through duplicates.

- Data governance: Maintaining clean and deduplicated data supports effective data governance practices. It ensures that data is consistent, well-maintained, and adheres to data governance policies.

- Scalability: Data deduplication techniques need to be scalable to handle the vast amount of data generated in Big Data environments. Efficient deduplication algorithms and distributed computing can ensure scalability and high-performance processing.
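The scalability point can be illustrated with a minimal sketch of hash partitioning, the idea that lets distributed engines deduplicate in parallel. This is not how Hive is implemented; the function names `partition_of` and `dedup_partitioned` and the sample rows are assumptions made up for illustration.

```python
import hashlib
from collections import defaultdict


def partition_of(row, num_partitions):
    """Map a row to a partition using a stable hash of its contents."""
    key = "|".join(map(str, row)).encode("utf-8")
    digest = hashlib.md5(key).hexdigest()
    return int(digest, 16) % num_partitions


def dedup_partitioned(rows, num_partitions=4):
    # Step 1 (the "shuffle"): identical rows always hash to the same partition.
    partitions = defaultdict(list)
    for row in rows:
        partitions[partition_of(row, num_partitions)].append(row)

    # Step 2: each partition removes its own duplicates independently,
    # so on a cluster this loop could run in parallel, one worker per partition.
    unique = []
    for part in partitions.values():
        seen = set()
        for row in part:
            if row not in seen:
                seen.add(row)
                unique.append(row)
    return unique


# Hypothetical sample rows: the third is an exact copy of the first.
rows = [
    (1, "ABC123", 100.0, "2023-07-01"),
    (2, "DEF456", 50.0, "2023-07-02"),
    (1, "ABC123", 100.0, "2023-07-01"),
]
result = dedup_partitioned(rows)
```

Because duplicates are guaranteed to land in the same partition, no partition ever needs to see another partition's data, which is what keeps the approach scalable.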


In Hive, data deduplication can be achieved in several ways: with the DISTINCT keyword, with GROUP BY, or with window functions such as ROW_NUMBER(). Let's explore these methods with code examples and a real-world scenario.

Suppose we have a Hive table called sales_data with the following structure:

| transaction_id | product_id | sale_amount | sale_date |
|---|---|---|---|

Created through the DDL:

    CREATE TABLE sales_data (
        transaction_id INT,
        product_id STRING,
        sale_amount DOUBLE,
        sale_date DATE
    );

Let's assume we have a dataset with sales data for an online store. The dataset may contain duplicate records due to various reasons, such as system glitches, data integration issues, or multiple entries for the same transaction.

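| transaction_id | product_id | sale_amount | sale_date |
|---|---|---|---|
| 1 | ABC123 | 100 | 2023-07-01 |
| 2 | DEF456 | 50 | 2023-07-02 |
| 3 | GHI789 | 75 | 2023-07-03 |
| 1 | ABC123 | 100 | 2023-07-01 |
| 5 | XYZ999 | 200 | 2023-07-04 |

The fourth row is an exact duplicate of the first: transaction 1 has been entered twice. The methods below compare all four columns, so they remove only rows that match in every column, including transaction_id.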

Method 1: Using DISTINCT Keyword

The DISTINCT keyword is used to eliminate duplicate rows from the result set.

    -- Create a new table with deduplicated records
    CREATE TABLE sales_data_dedup AS
    SELECT DISTINCT transaction_id, product_id, sale_amount, sale_date
    FROM sales_data;

| transaction_id | product_id | sale_amount | sale_date |
|---|---|---|---|
| 1 | ABC123 | 100 | 2023-07-01 |
| 2 | DEF456 | 50 | 2023-07-02 |
| 3 | GHI789 | 75 | 2023-07-03 |
| 5 | XYZ999 | 200 | 2023-07-04 |

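In Hive, DISTINCT is typically evaluated as an aggregation over every selected column (hash-based where possible), much like a GROUP BY on those columns, so rows have to be shuffled by their full contents. This can be resource-intensive for large datasets. Note also that DISTINCT removes only rows that match in every selected column.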
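To make the behavior of DISTINCT concrete, here is a small runnable sketch. It uses Python's built-in sqlite3 module as a stand-in for Hive (an assumption made purely so the example runs locally; the SELECT DISTINCT statement itself is the same as above), and the sample rows are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for Hive (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_data ("
    "transaction_id INT, product_id TEXT, sale_amount REAL, sale_date TEXT)"
)
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?, ?, ?)",
    [
        (1, "ABC123", 100.0, "2023-07-01"),
        (2, "DEF456", 50.0, "2023-07-02"),
        (1, "ABC123", 100.0, "2023-07-01"),  # exact copy of the first row
        (9, "DEF456", 50.0, "2023-07-02"),   # same sale as row 2, different id
    ],
)

# DISTINCT compares every selected column, so only the exact copy is dropped.
distinct_rows = conn.execute(
    "SELECT DISTINCT transaction_id, product_id, sale_amount, sale_date "
    "FROM sales_data ORDER BY transaction_id"
).fetchall()

for row in distinct_rows:
    print(row)
```

The exact copy of transaction 1 disappears, but the row with transaction_id 9 survives because it differs in one column. Handling that kind of near-duplicate requires grouping or partitioning on a subset of the columns.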

Method 2: Using GROUP BY

GROUP BY groups records on the specified columns and is usually paired with aggregate functions such as SUM or COUNT. It can also be used to remove duplicates.

Grouping on every column that defines uniqueness returns exactly one row per distinct combination of values. When the query selects only the grouping columns, no aggregate function is needed: all rows in a group are identical, so there is no question of which one is kept.

Here's the Hive query using GROUP BY to remove duplicates:

    -- Create a new table with deduplicated records using GROUP BY
    CREATE TABLE sales_data_dedup AS
    SELECT transaction_id, product_id, sale_amount, sale_date
    FROM sales_data
    GROUP BY transaction_id, product_id, sale_amount, sale_date;

| transaction_id | product_id | sale_amount | sale_date |
|---|---|---|---|
| 1 | ABC123 | 100 | 2023-07-01 |
| 2 | DEF456 | 50 | 2023-07-02 |
| 3 | GHI789 | 75 | 2023-07-03 |
| 5 | XYZ999 | 200 | 2023-07-04 |

In this example, we grouped the rows on transaction_id, product_id, sale_amount, and sale_date. Rows with the same values in all four columns fall into one group, and the query returns one row per group, effectively removing the duplicates.

This works without aggregate functions only because every selected column is also a grouping column. If uniqueness is defined by a subset of the columns, the remaining columns need an aggregate function such as MIN or MAX, and the result can then mix values from different rows. When you need to choose which row to keep, for example the most recent one, consider using the ROW_NUMBER() window function instead.
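GROUP BY has one practical advantage over DISTINCT: it can report how many copies of each record exist before anything is removed. The sketch below shows both that check and a deduplication on a subset of the columns. It again uses sqlite3 as a local stand-in for Hive, and it assumes, purely for illustration, that the repeated sale was recorded under a new transaction_id (4) and that product_id, sale_amount, and sale_date together define a unique sale.

```python
import sqlite3

# In-memory SQLite database standing in for Hive (assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_data ("
    "transaction_id INT, product_id TEXT, sale_amount REAL, sale_date TEXT)"
)
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?, ?, ?)",
    [
        (1, "ABC123", 100.0, "2023-07-01"),
        (2, "DEF456", 50.0, "2023-07-02"),
        (3, "GHI789", 75.0, "2023-07-03"),
        (4, "ABC123", 100.0, "2023-07-01"),  # same sale as id 1, new id
        (5, "XYZ999", 200.0, "2023-07-04"),
    ],
)

# Report which sales occur more than once, and how often.
duplicates = conn.execute(
    "SELECT product_id, sale_amount, sale_date, COUNT(*) AS copies "
    "FROM sales_data "
    "GROUP BY product_id, sale_amount, sale_date "
    "HAVING COUNT(*) > 1"
).fetchall()

# Keep one row per sale; MIN() picks the lowest transaction_id in each group.
deduped = conn.execute(
    "SELECT MIN(transaction_id) AS first_transaction_id, "
    "product_id, sale_amount, sale_date "
    "FROM sales_data "
    "GROUP BY product_id, sale_amount, sale_date "
    "ORDER BY first_transaction_id"
).fetchall()

print(duplicates)
print(deduped)
```

Running the duplicate report first is a cheap way to confirm that the chosen columns really do define uniqueness before writing the deduplicated table.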

Method 3: Using ROW_NUMBER() Window Function

The ROW_NUMBER() window function assigns a sequential integer, starting at 1, to each row within its partition, following the specified order. By partitioning on the columns that define uniqueness and keeping only the rows numbered 1, we can deduplicate the data.

    -- Create a new table with deduplicated records using ROW_NUMBER()
    CREATE TABLE sales_data_dedup AS
    SELECT transaction_id, product_id, sale_amount, sale_date
    FROM (
        SELECT
            transaction_id,
            product_id,
            sale_amount,
            sale_date,
            ROW_NUMBER() OVER (
                PARTITION BY transaction_id, product_id, sale_amount, sale_date
                ORDER BY transaction_id
            ) AS row_num
        FROM sales_data
    ) t
    WHERE row_num = 1;

In all the methods, we successfully deduplicated the sales data and created a new table sales_data_dedup containing unique records.
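The real strength of ROW_NUMBER() is that the ORDER BY clause decides which row in each group survives. The sketch below keeps the entry with the highest transaction_id for each sale. As before, sqlite3 stands in for Hive (window functions need SQLite 3.25 or newer), and the partitioning columns and the keep-the-latest rule are assumptions chosen for illustration.

```python
import sqlite3

# In-memory SQLite database standing in for Hive (assumption for illustration).
# Window functions require SQLite 3.25 or newer.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_data ("
    "transaction_id INT, product_id TEXT, sale_amount REAL, sale_date TEXT)"
)
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?, ?, ?)",
    [
        (1, "ABC123", 100.0, "2023-07-01"),
        (2, "DEF456", 50.0, "2023-07-02"),
        (3, "GHI789", 75.0, "2023-07-03"),
        (4, "ABC123", 100.0, "2023-07-01"),  # same sale as id 1, new id
        (5, "XYZ999", 200.0, "2023-07-04"),
    ],
)

# Number the rows of each sale from the highest transaction_id downwards,
# then keep only the first row of every partition.
latest_rows = conn.execute(
    """
    SELECT transaction_id, product_id, sale_amount, sale_date
    FROM (
        SELECT
            transaction_id, product_id, sale_amount, sale_date,
            ROW_NUMBER() OVER (
                PARTITION BY product_id, sale_amount, sale_date
                ORDER BY transaction_id DESC
            ) AS row_num
        FROM sales_data
    ) t
    WHERE row_num = 1
    ORDER BY transaction_id
    """
).fetchall()

for row in latest_rows:
    print(row)
```

Here transaction 4 is kept and transaction 1 is dropped. Switching the ORDER BY to ascending would keep transaction 1 instead, which is the kind of control that DISTINCT and a plain GROUP BY do not offer.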

Data deduplication is an essential step in data processing pipelines, as it helps maintain data quality, reduces storage costs, and improves query performance. In practice, it can be applied to many kinds of datasets, such as customer data, transaction data, and log files, to ensure data consistency and efficiency.

In conclusion, data deduplication plays a vital role in the Big Data world by optimizing storage, improving data quality, enhancing data processing efficiency, and facilitating accurate analytics and decision-making. As organizations continue to deal with ever-growing volumes of data, data deduplication remains a critical aspect of managing and utilizing Big Data effectively.


Published at DZone with permission of Roopa Kushtagi. See the original article here.
