Tribute to the Passing of Teradata Automation

On February 15, 2023, Teradata officially withdrew from China after 26 years. As a professional data company like Teradata, I feel so regretful about this.

Join the DZone community and get the full member experience.

Join For FreeOn February 15, 2023, Teradata officially withdrew from China after 26 years. As a professional data company like Teradata, I feel so regretful about this. As an editor of WhaleOps, I am also a fan of Teradata, and keep an eye on the development of Teradata’s various product lines. When everyone is thinking about the future of the Teradata data warehouse, they ignore that Teradata actually has a magic weapon, that is, the data warehouse scheduling suite Teradata Automation that comes with the Teradata data warehouse.

The rapid development of Teradata in the world, especially in Greater China, is inseparable from the assistance of Teradata Automation. Today, we are here to remember the history of Teradata Automation and the prospect of the future. We also hope that DolphinScheduler and WhaleScheduler, which have paid tribute to Automation since their birth, can take over the mantle and continue to benefit the next generation of schedulers.

Architecture Evolution of Teradata Automation

Teradata was different from other data warehouses (Oracle, DB2) at the beginning of its birth. It abandoned the commonly-used ETL architecture, but the ELT model that people feel delighted in talking about in the field of big data, that is, its overall solution does not need to use Informatica/DataStage/Talend extracts and transforms the data source, but convert the source system into an interface file, and then enters the data warehouse preparation layer through FastLoad/Multiload/TPump/Parallel Transporter (TPT) (Those who are interested in the alternative tools can refer to Apache SeaTunnel open source version or WhaleOps commercial version WhaleTunnel), and then execute TeradataSQL scripts through BTEQ, and execute all scripts effectively according to the triggers and dependencies (DAG) between tasks.

Does this architecture of Teradata Automation sound familiar? Yes, Oozie, Azkaban, and Airflow, which are popular later in the big data field, all have this logical architecture. Oozie, Azkaban, and Airflow pale into insignificance by comparison with Teradata Automation which was developed in 199x!

Although Automation has been there for ages, as the originator of ELT scheduling tools and the most used ELT scheduling tool in the enterprise industry, it is very advantageous in terms of comprehensive consideration of business cases. Therefore, considering the business case needs, the design of Apache DolphinScheduler, including the design of many functions of the commercial version of WhaleScheduler, can still see the shadow of tribute to Teradata Automation, but Teradata Automation is task-level scheduling control, while DolphinScheduler and WhaleScheduler are workflow + task Level scheduling, this design is to pay tribute to Informatica, another global ETL scheduling predecessor (I will tell its story later).

The first version of Automation was written by Teradata Taiwan employee Jet Wu using Perl. It is famous for its lightweight, simple structure and stable system. It also uses flag files to avoid the low performance of Teradata’s OLTP and quickly became popular in Teradata’s global projects. Later, it was modified by great engineers such as Wang Yongfu(Teradata) in China to further improve usability. The catalog of Automation in Greater China looks like this:

/ETL (Automation home directory)

| - -/APP stores ETL task scripts. In this directory, first, create the subsystem directory, and then create the ETL task directory

| - -/DATA

| - - - /complete Store the data that has been successfully executed. Create a subdirectory with the system name and date

| - - - /fail

| - - - - -/bypass Store files that do not need to be executed. Create subdirectories with system names and dates

| - - - - -/corrupt Store files that do not match the size. Create a subdirectory with the system name and date

| - - - - -/duplicate Store duplicate received files. Create subdirectories with system name and date

| - - - - -/error Store files that generate errors during operation. Create subdirectories with system names and dates

| - - - - -/unknown Store files not defined in the ETL Automation mechanism. Create subdirectories with dates

| - - - /message Store the control file to send message notification

| - - - /process stores the data files and control files used by the jobs being executed

| - - - /queue stores data files and control files used by jobs ready to be executed

| - - - /receive is used to receive data files and control files from various source systems

| - -/LOG Store the ETL Automation system program and the record files generated during the execution of each operation

| - -/bin stores the execution files of the ETL Automation system program

| - -/etc store some configuration files of the ETL Automation mechanism

| - -/lock store ETL Automation system programs and lock files generated during the execution of each job

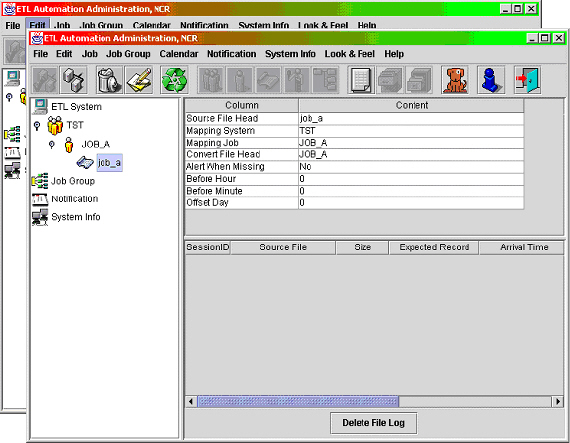

| - -/tmp temporary buffer directory, store temporary filesIn the beginning, the interface is like this:

This version of Automation has been enduring for more than 10 years. Later, due to the increasing number of tasks, the old version of Automation that relies on file + Teradata metadata storage is insufficient in performance, and the operation status management is also quite complicated, so it was updated, and a new version was generated in China, which added task parameters into memory and preloaded them to quickly improve task parallelism, reduce data latency, and added complex running state management. Until now, Teradata Automation is still used by many financial systems.

Hats off to Teradata Automation!

In the beginning, the open-source community of Apache DolphinScheduler integrated all the scheduling system concepts at that time, with many functions paying tribute to Teradata Automation. For example, the cross-project workflow/task dependency task (Dependent) is exactly the same as Teradata Automation’s dependency setting. At that time, Airflow, Azkaban, and Oozie did not have such a function. Apache DolphinScheduler relies on its excellent performance, excellent UI, and functional design, so Wang Yongfu, one of the core developers of Teradata Automation in China, migrated his company’s scheduling leadership from Teradata Automation tasks to Apache DolphinScheduler and showed great appreciation for it. Looking back, I still remember how excited the community was to be recognized by Yongfu, proving that DolphinScheduler has been a leading project in the world and it can only be worthy of the name if it becomes the top Apache project.

Now WhaleOps gathers talents from Internet companies + Informatica + IBM + Teradata, and there are many die-hard fans of Teradata, so we boldly put some concepts of Automation into WhaleScheduler. Teradata Automation Users are familiar with these functions, and external users pat their thighs and say that the design is so creative! But to be honest, WhaleScheduler is standing on the shoulders of giants:

- Dependency/Trigger Distributed Memory Engine Design

- Trigger mechanism (in addition to file trigger, also add Kafka, SQL detection trigger)

- Running state-weighted (TD slang-dictatorship mode, mainly for scenarios where data arrives late, but supervisory reports are guaranteed first)

- Running state-isolation (TD slang-anti-epidemic mode, a tribute to a certain big guy, mainly in the face of dirty data in the data, to avoid continuing to pollute the function of downstream tasks)

- blacklist

- whitelist

- A preheating mechanism, etc.

WhaleScheduler has absorbed the import and export function of Teradata Automation Excel so that business departments can easily maintain complex DAGs through Excel tables without configuring complex tasks through the interface. These are all tributes to Teradata Automation. Without the continuous innovation and exploration of Automation predecessors in business cases and without generations of Teradata predecessors who have continued to create data warehouse specifications, how can we create the world’s outstanding open-source communities and commercial products out of thin air?

Although Teradata has withdrawn from China, its innovative technical architecture and the spirit of Teradata professionals have always inspired our younger generations to keep forging ahead. Although Teradata Automation can no longer serve you, the TD fans of WhaleOps also sincerely hope that our WhaleScheduler, which integrates Internet cloud native scheduling + traditional scheduling, can take over the mantle of Teradata Automation and continue to contribute to the world.

Also, we hope that together with Apache DolphinScheduler, WhaleScheduler of WhaleOps will open up a more innovative way for future scheduling systems builders!

Finally, I would like to pay tribute to Teradata Automation and the users and practitioners who have worked tirelessly on the scheduling system with this article!

Published at DZone with permission of Jieguang Zhou. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments