Let's Unblock: Spark Setup (IntelliJ)

Starting a series, 'Let's Unblock', on the common blockers that developers face while building an application, specifically with Spark and Scala.

Before getting our hands dirty with code, let's set up our environment and prepare our IDE to understand the Scala language and SBT.

Prerequisites:

- Java (preferably JDK 8; Spark 2.x runs on Java 8, and Java 11 support arrived with Spark 3.0).

- IntelliJ IDEA (Community or Ultimate).

IDE Setup:

Let's start by installing the plugins required for the Scala and SBT environment:

- Click on Configure in the bottom right of the IDE.

- Then click Plugins.

- Click on Browse Repositories, search for Scala, and install the Scala plugin.

- Now, enter SBT in the search box and install the SBT plugin as well.

Woohoo, the IDE is now ready to understand the language you will be writing in.

The Project Setup:

Here we will set up a Scala Spark project with two build tools: first SBT, the Scala family's own build tool, and then our old favorite, Maven.

Let's Start With SBT

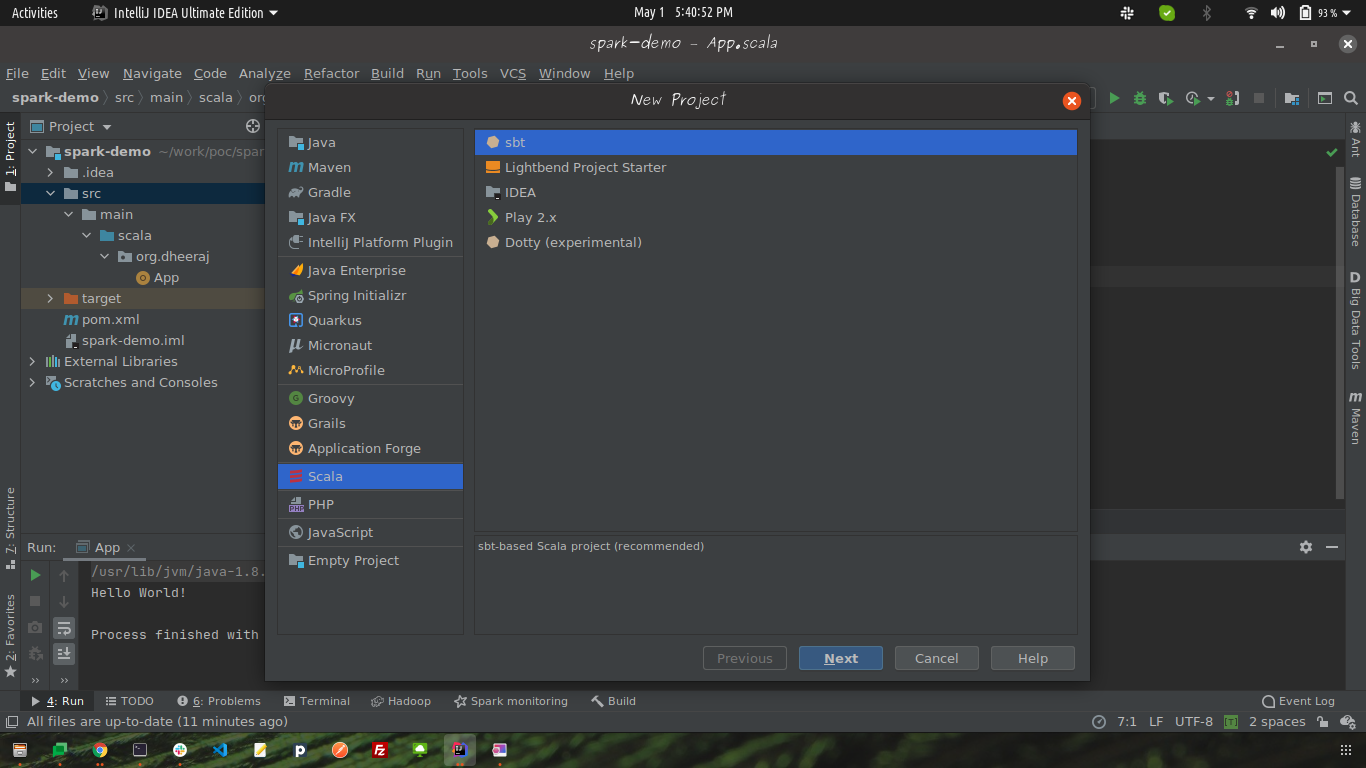

- Click on Create New Project.

- Select Scala and then choose SBT from the options shown in the right pane:

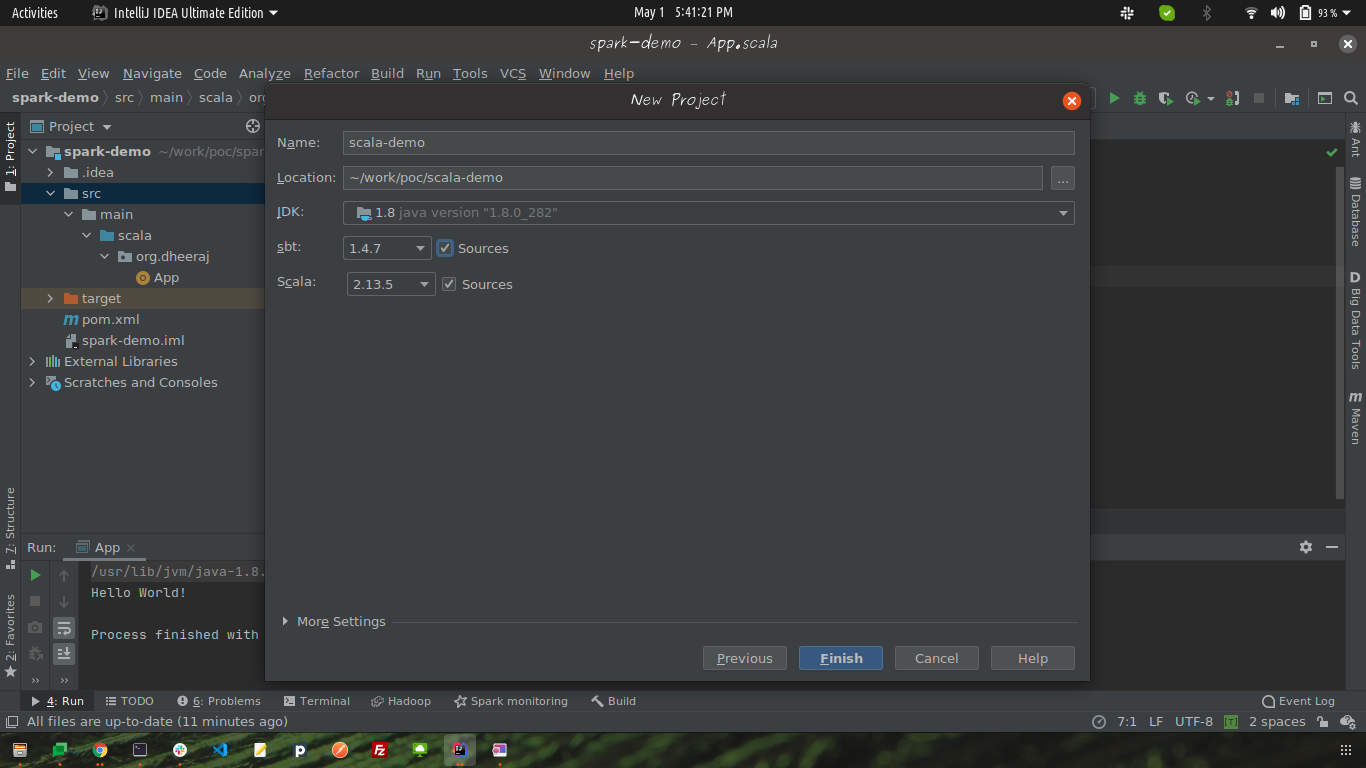

- Add a name to your project:

- Click on the Finish button.

- Congrats, your project structure is created and you will be shown a new window with its structure.

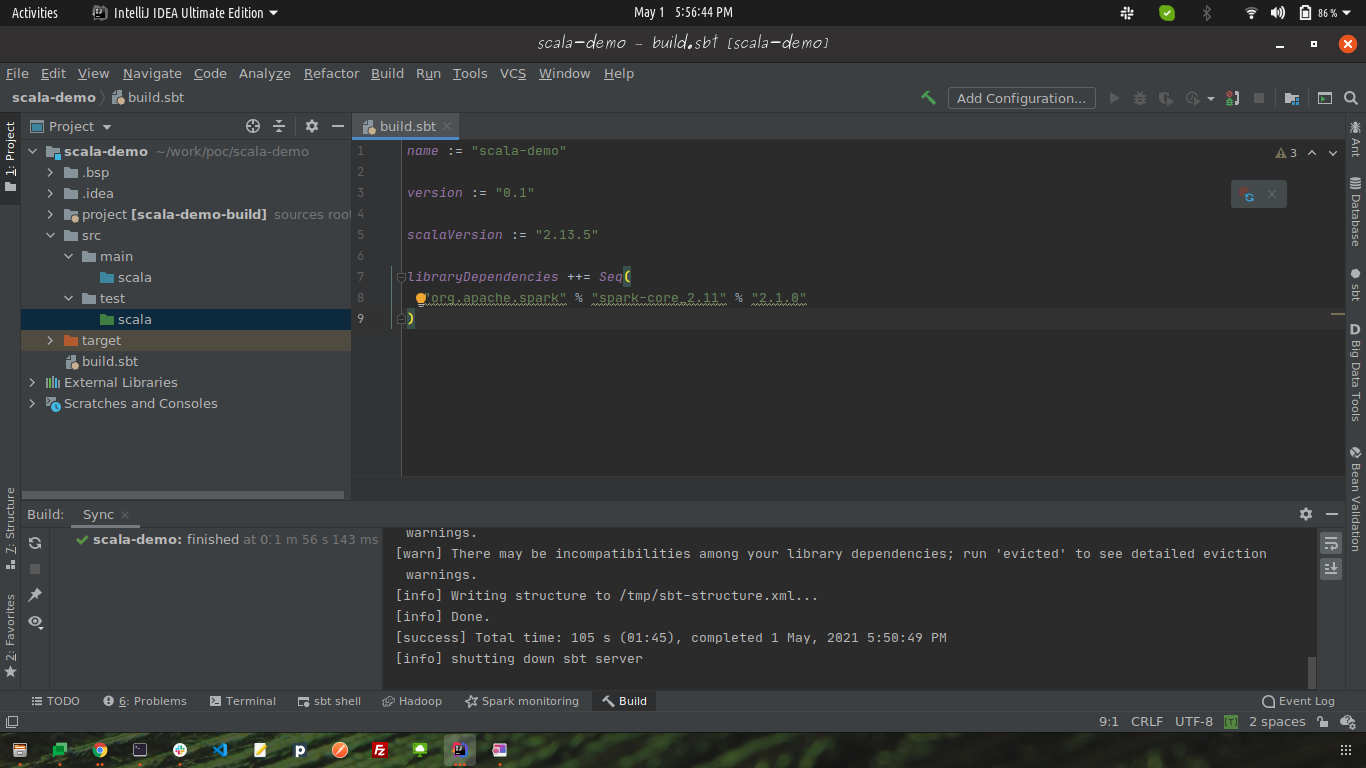

- Let's edit build.sbt to turn our Scala project into a Spark one.

- Add the Spark dependency to the project:

    libraryDependencies ++= Seq("org.apache.spark" % "spark-core_2.11" % "2.1.0")

- Click on the refresh icon that appears at the top right of the editor to download the dependency.

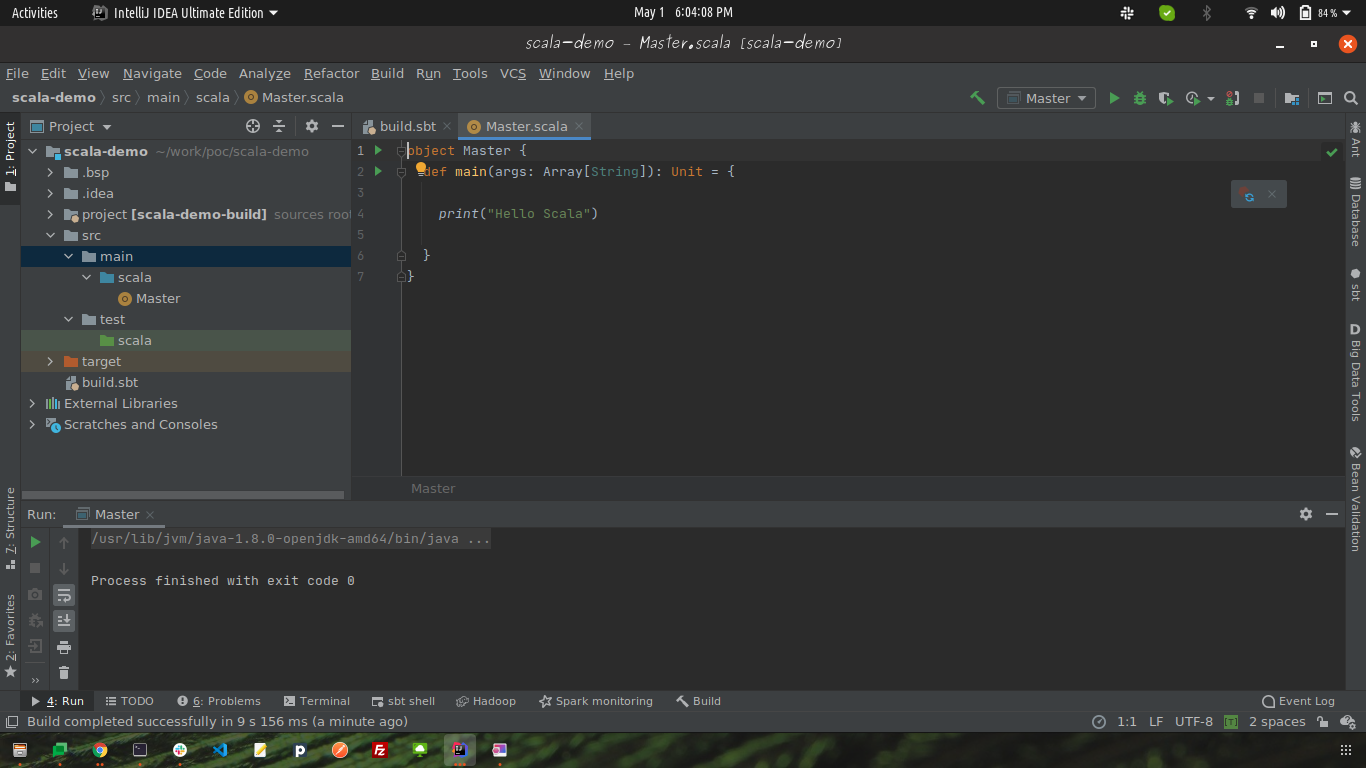
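For orientation, here is a minimal sketch of what the whole build.sbt might look like once the dependency is in place. The project name and version are placeholders, and the exact scalaVersion is an assumption: any 2.11.x release should work, because the spark-core_2.11 artifact is built for Scala 2.11.

```scala
// build.sbt: a minimal sketch; name and version are placeholder values
name := "spark-demo-sbt"
version := "0.1"

// Assumed patch release; it has to be a 2.11.x version to match spark-core_2.11
scalaVersion := "2.11.12"

libraryDependencies ++= Seq(
  // %% appends the Scala binary version (_2.11 here) to the artifact name,
  // so this resolves to the same spark-core_2.11 artifact as the line above
  "org.apache.spark" %% "spark-core" % "2.1.0"
)
```

Using %% instead of spelling out the _2.11 suffix keeps the dependency in step with scalaVersion if you change it later.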

- Create a Scala object (e.g., Master) by right-clicking on the scala folder (src/main/scala) in the project structure window.

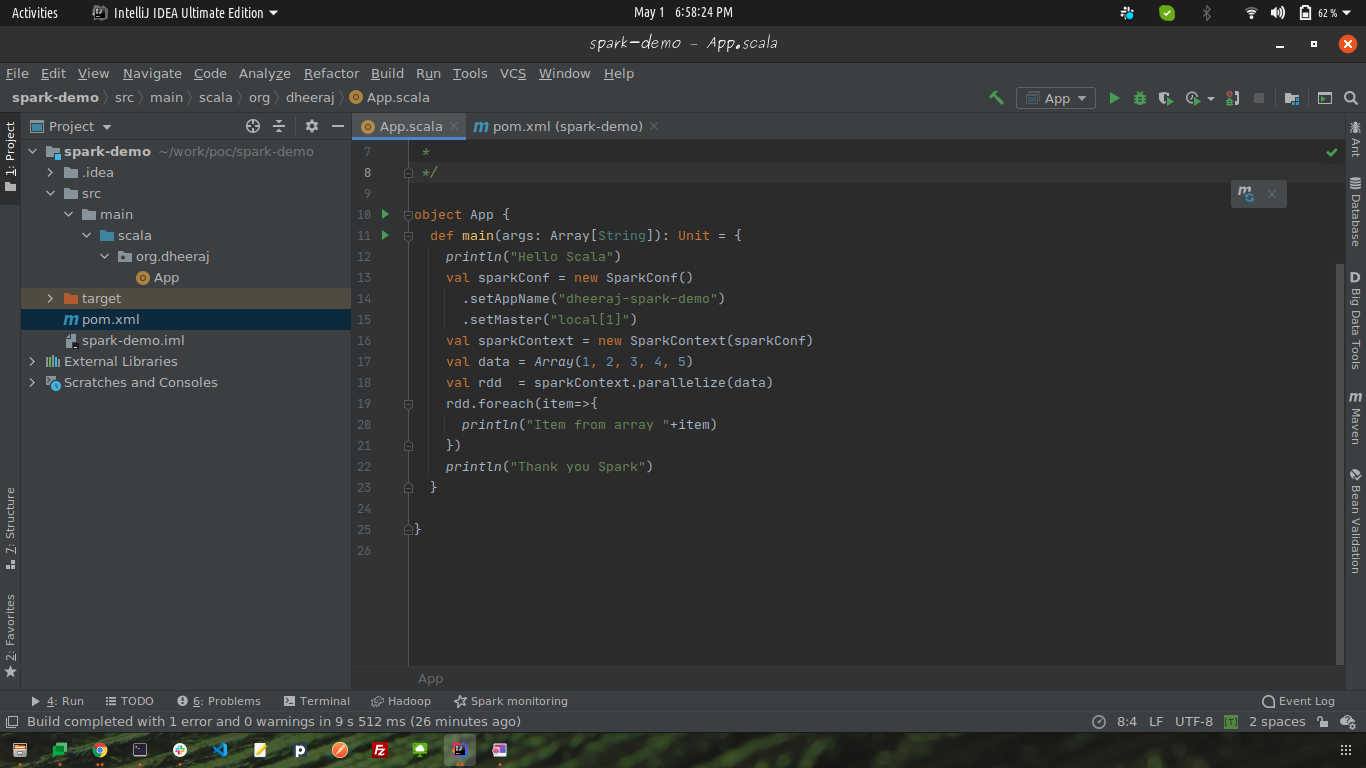

- To see your code running, paste this code into your Master object:

    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext

    object Master {
      def main(args: Array[String]): Unit = {
        println("Hello Scala")

        // Run Spark locally with a single worker thread
        val sparkConf = new SparkConf()
          .setAppName("dheeraj-spark-demo")
          .setMaster("local[1]")
        val sparkContext = new SparkContext(sparkConf)

        // Distribute a local array as an RDD and print each element
        val data = Array(1, 2, 3, 4, 5)
        val rdd = sparkContext.parallelize(data)
        rdd.foreach(item => println("Item from array " + item))

        println("Thank you Spark")

        // Release Spark resources before exiting
        sparkContext.stop()
      }
    }

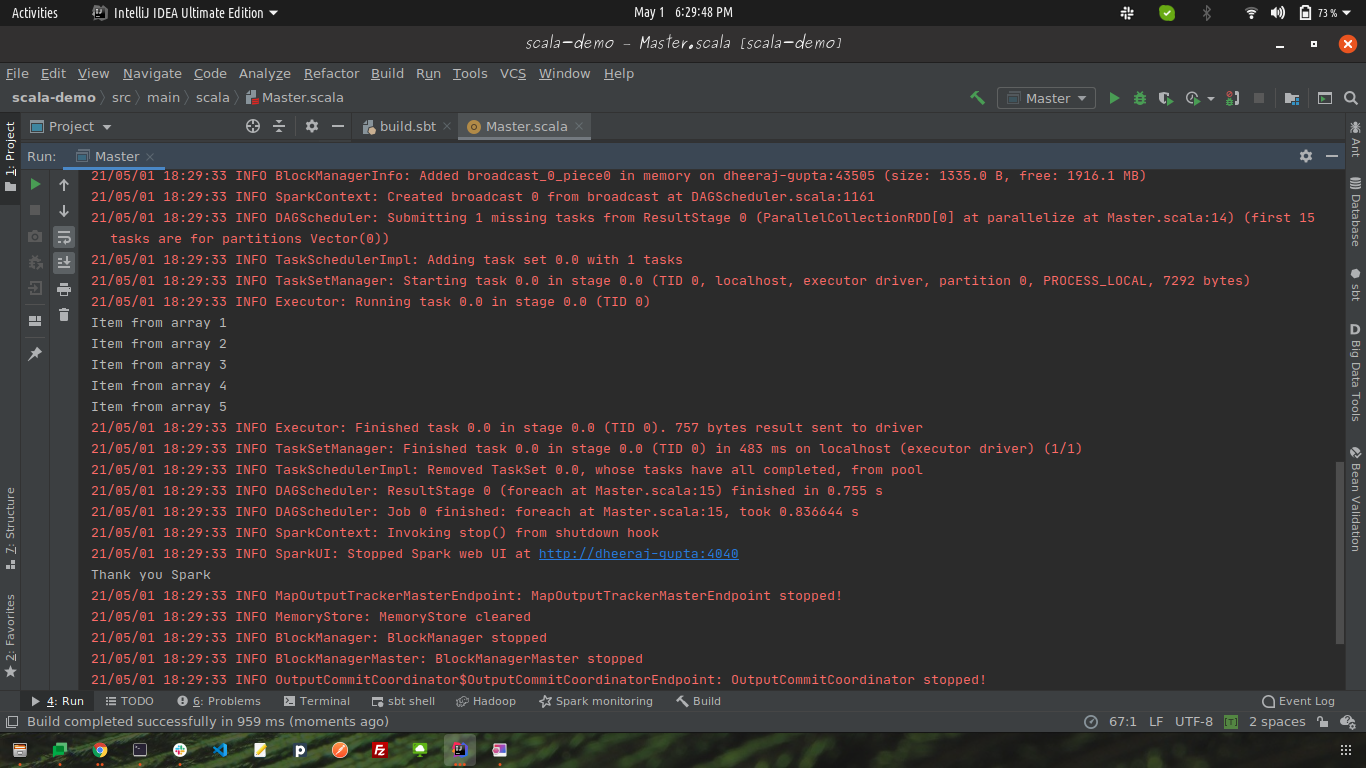

Click on the play button and you will be able to see your output in the Run window below.

Output

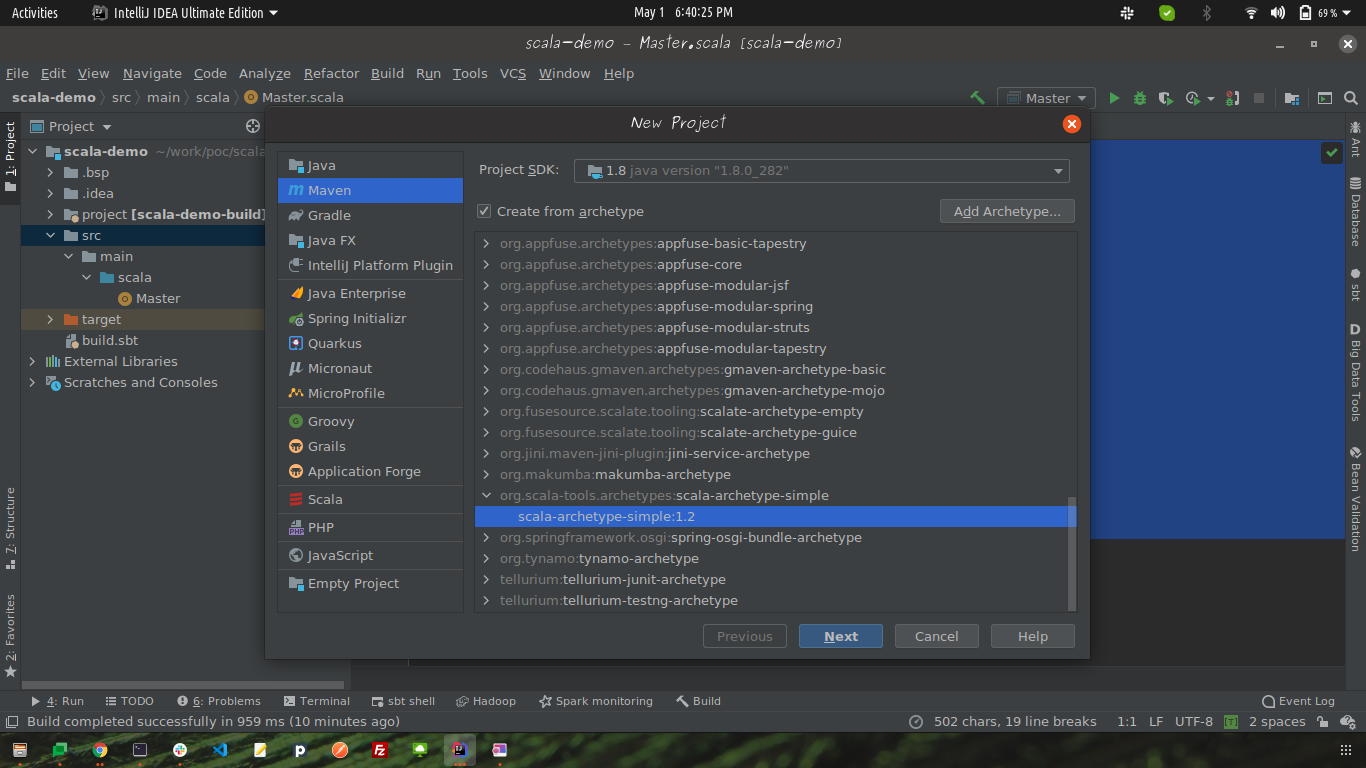
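One thing worth knowing about the snippet above: rdd.foreach runs on the executors, so its println output lands in the Run window only because we are in local mode. On a real cluster it would end up in the executor logs. The following is a small sketch of a variant that brings the results back to the driver with collect() before printing. The object name MasterCollect, the helper squares, and the app name are made up for illustration and are not part of the linked demo projects.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical variant of Master that prints from the driver instead of the executors
object MasterCollect {

  // Squares every element on the executors, then returns the results to the driver
  def squares(sc: SparkContext, data: Seq[Int]): Array[Int] =
    sc.parallelize(data).map(n => n * n).collect()

  def main(args: Array[String]): Unit = {
    val sparkConf = new SparkConf()
      .setAppName("spark-demo-collect") // placeholder app name
      .setMaster("local[1]")            // one local worker thread, as before
    val sparkContext = new SparkContext(sparkConf)

    // Hide most INFO log lines from here on so the println output is easier to spot
    sparkContext.setLogLevel("WARN")

    try {
      val result = squares(sparkContext, Seq(1, 2, 3, 4, 5))
      result.foreach(n => println("Squared item " + n)) // printed by the driver
      println("Sum of squares " + result.sum)
    } finally {
      sparkContext.stop() // release the local Spark resources
    }
  }
}
```

collect() is fine for small demo data like this; for large datasets it pulls everything into driver memory, so it is better kept for results you know are small.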

The Maven way (a favorite of our Java friends) of creating a project:

- Click on Create New Project from the File menu.

- Click on Maven and check the Create from archetype checkbox.

- Select the archetype scala-archetype-simple:1.2.

- Woohoo, no need to create the main class here; the archetype generates one for you.

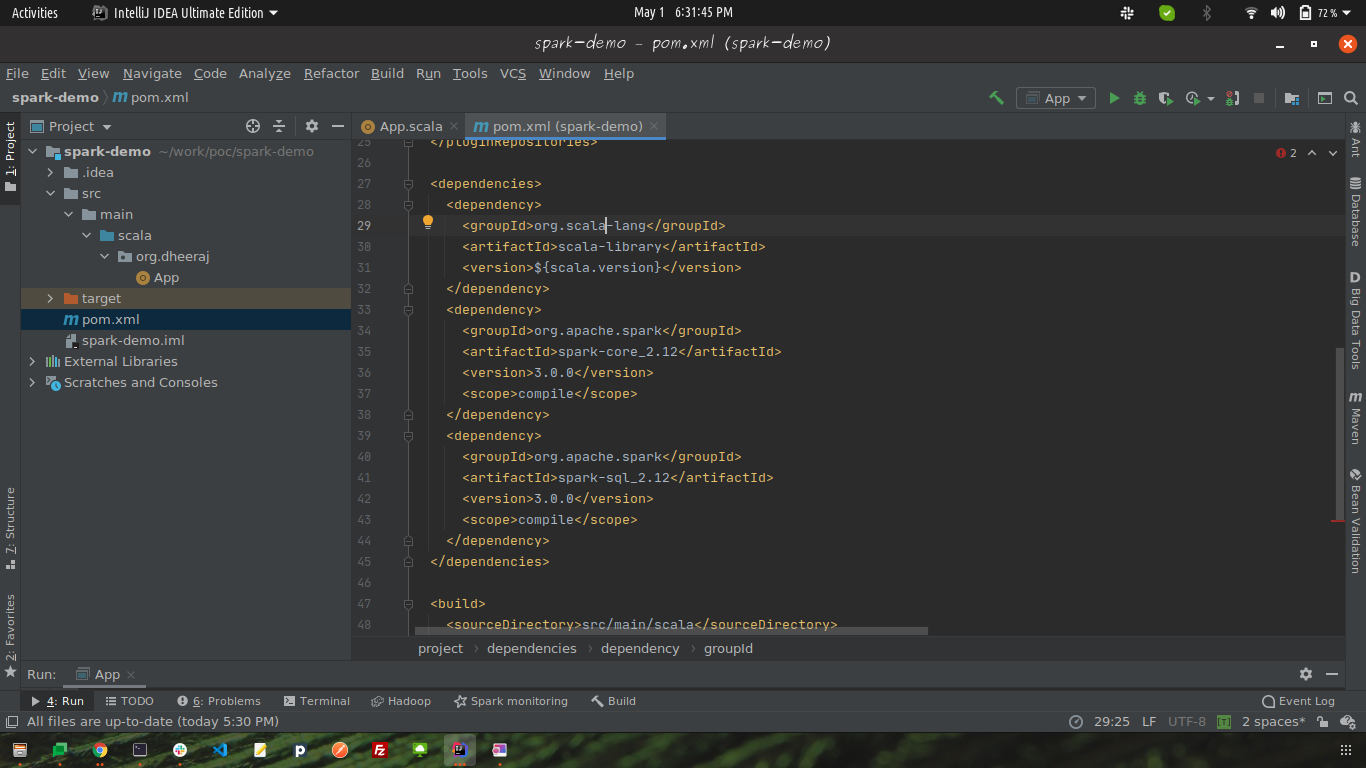

- Let's make our Scala project a Spark one by adding the following dependencies to pom.xml:

    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.12</artifactId>
      <version>3.0.0</version>
      <scope>compile</scope>
    </dependency>

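From here, follow the same steps as in the SBT project: paste the Master code from above into the generated main class and run it. Make sure the scala.version property in pom.xml is set to a 2.12.x release so that it matches the spark-core_2.12 artifact.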

GitHub Links:

SBT project: https://github.com/dheerajgupta217/spark-demo-sbt

Maven project: https://github.com/dheerajgupta217/spark-demo-maven
