Upgrading Spark Pipelines Code: A Comprehensive Guide

This guide discusses the strategic importance of Spark code upgrades and introduces Scalafix, a powerful toolkit designed to streamline the process.

In today's data-driven world, keeping your data processing pipelines up-to-date is crucial for maintaining efficiency and leveraging new features. Upgrading Spark versions can be a daunting task, but with the right tools and strategies, it can be streamlined and automated.

Upgrading Spark pipelines is essential for leveraging the latest features and improvements. This upgrade process not only ensures compatibility with newer versions but also aligns with the principles of modern data architectures like the Open Data Lakehouse (Apache Iceberg). In this guide, we will discuss the strategic importance of Spark code upgrades and introduce a powerful toolkit designed to streamline this process.

Toolkit Overview

Project Details

For our demonstration, we used a sample project named spark-refactor-demo, which covers an upgrade from Spark 2.4.8 to 3.3.1. The project is written in Scala and uses sbt and Gradle for builds. Key files to note include build.sbt, plugins.sbt, gradle.properties, and build.gradle.

Introducing Scalafix

What Is Scalafix?

Scalafix is a refactoring and linting tool for Scala, particularly useful for projects undergoing version upgrades. It helps automate the migration of code to newer versions, ensuring the codebase remains modern, efficient, and compatible with new features and improvements.

Features and Use Cases

Scalafix offers numerous benefits:

- Version upgrades: Updates deprecated syntax and APIs

- Coding standards enforcement: Ensures a consistent coding style

- Code quality assurance: Identifies and fixes common issues and anti-patterns

- Large-scale refactoring: Applies uniform transformations across the codebase

- Custom rule creation: Allows users to define specific rules tailored to their needs

- Automated code refactoring: Rewrites Scala-based Spark code based on custom rules

- Linting: Checks code for potential issues and ensures adherence to coding standards

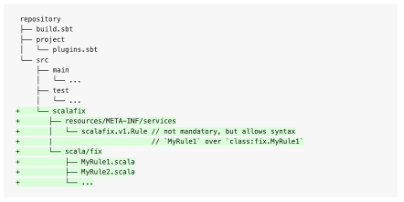
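To make the "version upgrades" use case concrete, here is a deliberately simplified sketch in plain Scala. It is a toy: Scalafix operates on syntax trees and (for semantic rules) compiler symbols, not on raw text, and the object and method names below are hypothetical. The single mapping mirrors the "unionAll" = "union" entry that appears in the .scalafix.conf later in this guide.

```scala
// Toy illustration only: real Scalafix rules patch syntax trees, not strings.
object DeprecatedCallRewrite {
  // Deprecated method name -> replacement (hypothetical table for this sketch).
  val replacements: Map[String, String] = Map("unionAll" -> "union")

  // Replace every `.oldName(` call site with `.newName(`.
  def rewrite(source: String): String =
    replacements.foldLeft(source) { case (code, (oldName, newName)) =>
      code.replace(s".$oldName(", s".$newName(")
    }
}
```

For example, `DeprecatedCallRewrite.rewrite("val all = df1.unionAll(df2)")` yields `"val all = df1.union(df2)"`, while code that already calls `union` is left unchanged. A text-based approach like this cannot tell which class a method belongs to, which is one reason a tree-aware tool is preferable for real upgrades.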

Developing Custom Scalafix Rules

- Custom rules can be defined to target specific codebases or repositories.

- The rules are written in Scala and can be integrated into the project's build process.

    package examplefix

    import scala.meta._
    import scalafix.v1._

    // Syntactic rule: flags wildcard imports and rewrites them to mypackage._
    class MyCustomRule extends SyntacticRule("MyCustomRule") {
      override def fix(implicit doc: SyntacticDocument): Patch = {
        doc.tree.collect {
          case t @ Importer(_, importees) if importees.exists(_.is[Importee.Wildcard]) =>
            // An Importer excludes the `import` keyword, so replace only the path.
            Patch.replaceTree(t, "mypackage._") +
              Patch.lint(Diagnostic("Rule", "Avoid wildcard imports", t.pos))
        }.asPatch
      }
    }

Best Practices for Scalafix Rule Development

- Align the Scala binary version with your build.

- Use the scalafixDependencies setting key for any external Scalafix rule.
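As a rough sketch of the first practice, the build.sbt fragment below pins one Scala version and tells Scalafix to use the matching binary version. Scala 2.12 is chosen because both Spark 2.4.8 and Spark 3.3.1 publish 2.12 artifacts; the exact patch version is an assumption. The scalafixScalaBinaryVersion key is assumed to be available, as it is in sbt-scalafix 0.10.x; other releases may handle this differently, so check the plugin version you use.

```scala
// build.sbt (sketch). The Scala patch version here is an assumed example.
ThisBuild / scalaVersion := "2.12.17"

// Assumes an sbt-scalafix release that provides this key (for example 0.10.x).
// It keeps Scalafix rules resolved for the same binary version as the build.
ThisBuild / scalafixScalaBinaryVersion :=
  CrossVersion.binaryScalaVersion(scalaVersion.value)
```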

    // build.sbt for a single-project build
    libraryDependencies +=
      "ch.epfl.scala" %% "scalafix-core" % _root_.scalafix.sbt.BuildInfo.scalafixVersion % ScalafixConfig

Command to run Scalafix with your custom rule:

    sbt "scalafix MyCustomRule"

Upgrade and Refactoring Process

Step-By-Step Guide

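- Define versions: Set the initial and target versions of Spark.

    INITIAL_VERSION=${INITIAL_VERSION:-2.4.8}
    TARGET_VERSION=${TARGET_VERSION:-3.3.1}
    # Referenced by the build.sbt snippet in a later step. 0.1.9 is the rules
    # version used in this guide's final build; adjust it if yours differs.
    SCALAFIX_RULES_VERSION=${SCALAFIX_RULES_VERSION:-0.1.9}

- Build current project: Clean, compile, test, and package the current project using sbt.

    sbt clean compile test package

- Add Scalafix dependencies: Update build.sbt and plugins.sbt to include Scalafix dependencies.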

    cat >> build.sbt <<- EOM
    scalafixDependencies in ThisBuild +=
      "com.holdenkarau" %% "spark-scalafix-rules-${INITIAL_VERSION}" % "${SCALAFIX_RULES_VERSION}"
    semanticdbEnabled in ThisBuild := true
    EOM

    cat >> project/plugins.sbt <<- EOM
    addSbtPlugin("ch.epfl.scala" % "sbt-scalafix" % "0.10.4")
    EOM

- Define Scalafix rules: Specify the rules for refactoring in .scalafix.conf.

    rules = [
      UnionRewrite,
      AccumulatorUpgrade,
      ScalaTestImportChange,
      ………………………………… more

- Run Scalafix rules:

    sbt scalafix

- Identify potential issues: Define Scalafix warn rules in .scalafix.conf, then run them to identify any anti-patterns or potential issues.

    rules = [
      GroupByKeyWarn,
      MetadataWarnQQ
    ]
    UnionRewrite.deprecatedMethod {
      "unionAll" = "union"
    }
    OrganizeImports {
      ……………… more

- Run Scalafix warn rules:

    sbt scalafix || (echo "Linter warnings were found"; prompt)

Code Review and Final Build

Review Changes

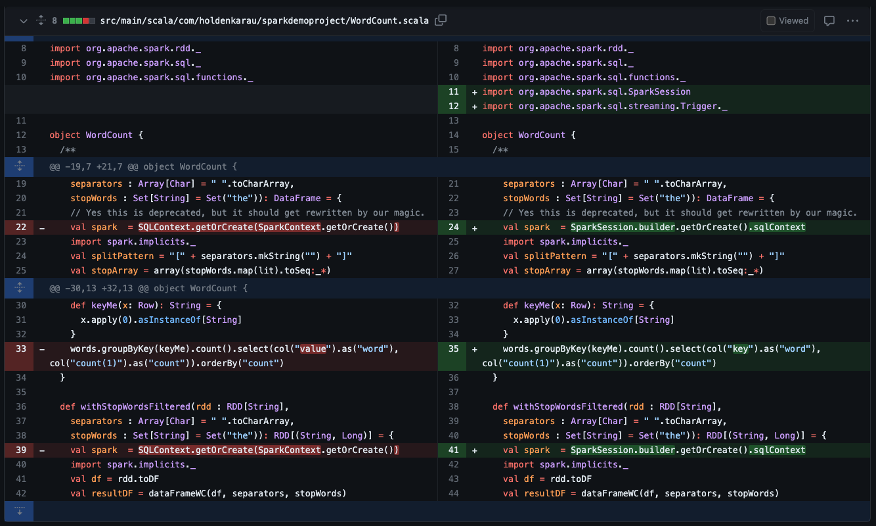

Use tools like Git diff or VS Code to review the changes made by Scalafix. Verify that the refactored code aligns with your expectations and coding standards.

Two example PRs were created as part of this spark-refactor-demo project.

Update Dependencies for Final Build

Update the library dependencies in build.sbt to reflect the target Spark version, and build the new codebase.

    sparkVersion := "3.3.1"

    libraryDependencies ++= Seq(
      "org.apache.spark" %% "spark-streaming" % "3.3.1" % "provided",
      "org.apache.spark" %% "spark-sql" % "3.3.1" % "provided",
      "org.scalatest" %% "scalatest" % "3.2.2" % "test",
      "org.scalacheck" %% "scalacheck" % "1.15.2" % "test",
      "com.holdenkarau" %% "spark-testing-base" % "3.3.1_1.3.0" % "test"
    )

    scalafixDependencies in ThisBuild +=
      "com.holdenkarau" %% "spark-scalafix-rules-3.3.1" % "0.1.9"

    semanticdbEnabled in ThisBuild := true
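The target version is repeated in several dependency strings above, which makes it easy to miss one during the next upgrade. One possible variation, shown here as a sketch rather than as the demo project's actual build, keeps the version in a single value. The names targetSparkVersion and testingBaseVersion are hypothetical, and the remaining dependencies and settings would stay as listed above.

```scala
// build.sbt (sketch): define the Spark version once and reuse it.
// `targetSparkVersion` and `testingBaseVersion` are hypothetical names.
val targetSparkVersion = "3.3.1"
val testingBaseVersion = "1.3.0"

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-streaming" % targetSparkVersion % "provided",
  "org.apache.spark" %% "spark-sql" % targetSparkVersion % "provided",
  // Builds "3.3.1_1.3.0", the spark-testing-base version string used above.
  "com.holdenkarau" %% "spark-testing-base" % s"${targetSparkVersion}_$testingBaseVersion" % "test"
)
```

The braces in `${targetSparkVersion}_` matter: without them, Scala would read the underscore as part of the identifier.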

Build new codebase:

    sbt clean compile test package

The codebase is now upgraded from Spark 2.4.8 to Spark 3.3.1.

Conclusion

Upgrading Spark pipelines doesn't have to be a challenging task. With tools like Scalafix, you can automate and streamline the process, ensuring your codebase is modern, efficient, and ready to leverage the latest features of Spark. Follow this guide to facilitate a smooth upgrade and enjoy the benefits of a more robust and powerful Spark environment.
