Run Hundreds of Experiments with OpenCV and Hydra

In this tutorial, we'll cover how to run multiple OpenCV feature matching tasks using Hydra

Join the DZone community and get the full member experience.

Join For FreeFeature Matching Problem

Image matching is an important task in computer vision. Real-world objects may be captured on different photos from any angle with any lightning conditions and may be occluded. But while images contain the same objects they must be categorized accordingly. For this purpose, computer vision gives us invariant feature extractors that help to match objects on different images

Detectors, Descriptors, and Matchers

Image matching is a three-step algorithm. Fortunately, they are all covered in the OpenCV library

The first step is to find distinctive key points for the query image (that contains the object we are looking for) and the target image (in OpenCV it is called training image).

The second is to compute local descriptors around these key points. Each key point is assigned with a vector of numbers that describe the appearance of that key point. Two latter steps are facilitated by detector and descriptor objects that might be of the same class or might be different.

def feature_extractor_factory(detector, descriptor):

def extractor(image):

keypoints = detector.detect(image, None)

keypoints, descriptors = descriptor.compute(image, keypoints)

return keypoints, descriptors

return extractorIn the code above, for example, we parameterize feature extractor with two objects whose types are unknown in runtime. They both might be instantiated from SIFT or one of them may be SIFT while the other would be SURF. There are plenty of options to choose a detector and a descriptor

- SIFT --- Scale Invariant Feature Transform (OpenCV Reference)

- SURF --- Speeded Up Robust Features (OpenCV Reference)

The third step is to match obtained descriptors against each other, i.e. to find similar regions on two different images. Possible ways to match descriptors is to run brute-force search over all pairs or to run the FLANN based matcher (Fast Library for Approximate Nearest Neighbors). These two options provide different results and thus generate plenty of experiment configurations

Hydra Config Manager

There are plenty of problems that arise when you try to run data science experiments with different parameters. I'll list some of them:

- How to control experiment configuration between runs?

- How to maintain the results saved after each experiment?

- How to run multiple experiments with different parameters simultaneously?

To this extent, one could use Hydra, a framework for configuring applications (and experiments)

Configuring Experiments

Using Hydra is convenient as using vanilla YAML configurations, one can refer to a config object within a Python script with ease. It is needed to add the following modifications.

Add configuration file to your project:

# conf/config.yaml

model:

detector_type: SIFT

descriptor_type: SIFT

matcher_type: FLANNAdd Hydra decorator above your main function:

import hydra

@hydra.main(config_path="conf", config_name="config")

def my_app(cfg):

# now contents of config file inside `cfg`But it also provides some advanced functionality. Each experiment you run will be stored under "date/time" directory with full configuration used for this experiment. It enables users to find results of previously performed experiments and track differences between them.

Hydra detaches the code from a directory when it was executed, which means that os.getcwd() will output different paths each run. But one could refer to the original project directory using ${hydra:runtime.cwd} in configuration file. For example, to specify a data source directory that shares the same root as the code one could type data_path: ${hydra:runtime.cwd}/data within config.yaml.

Running Multiple Experiments

The possibility to run multiple experiments with different parameters is also a great feature of Hydra. In our feature matching case for example we have a set of possible methods for each of three steps. Hydra multirun is exactly the tool that allows you to perform a search of the best method in a cartesian product of possible methods. We can run our Python experiments with:

python my_app.py --multirun model.detector_type=SIFT,SURF,KAZE,AKAZE,ORB,BRISK model.descriptor_type=SIFT,SURF,KAZE,AKAZE,ORB,BRISK,BRIEF,FREAK model.matcher_type=BF,FLANN

That will result of nearly a hundred different experiments run (precisely 95). Some of them will end unsuccessfully but it's not a big deal if they properly handle exceptions and log problems. The ones that end successfully give us results for a comparison

Results Section

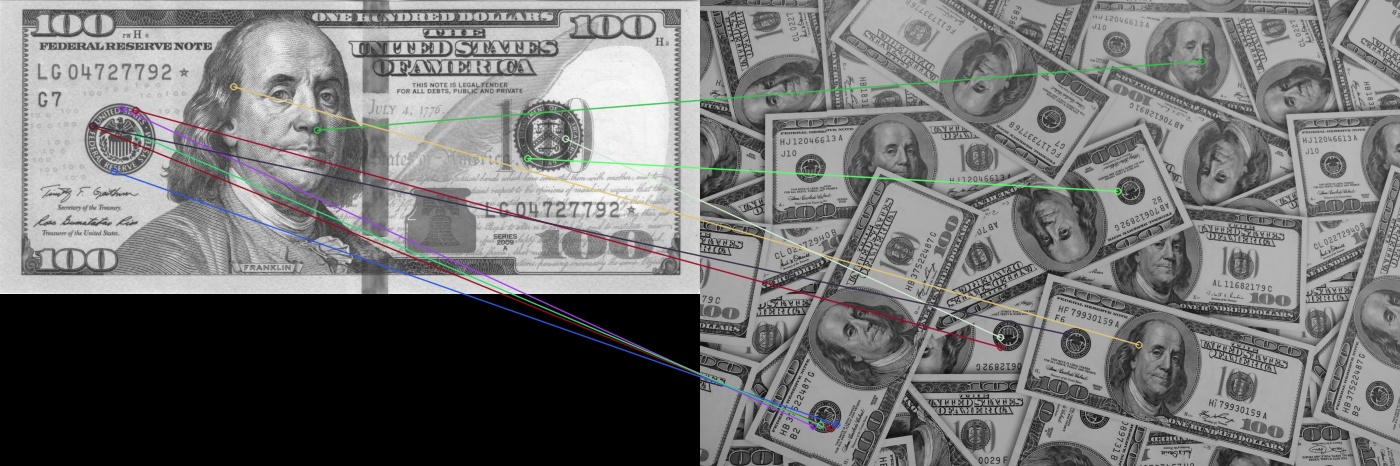

In this section, I'll show you the results of different method combinations. I've chosen to work with One Hundred Dollar Bill as a reference image, consonant with the hundred experiments that we are conducting. I match features of a downsampled dollar bill from Wikipedia to some downsampled images taken from Unsplash image stock. The purpose of experimenting is to find qualitatively the best combination of feature matching steps.

Code is available in my GitHub repo.

Implementation

To instantiate different types of detectors, descriptors and matchers I decided to go with factory methods.

def detector_factory(cfg):

type = cfg.model.detector_type

if type == "SIFT":

detector = cv.xfeatures2d.SIFT_create()

elif type == "SURF":

detector = cv.xfeatures2d.SURF_create()

....

detector = cv.ORB_create()

else:

raise NotImplementedError(f"Detector of type {type} is not supported")

return detectorMatcher factory is also a clause

def matcher_factory(cfg):

matcher_type = cfg.model.matcher_type

descriptor_type = cfg.model.descriptor_type

if matcher_type == "BF":

def matcher(queryDescriptors, trainDescriptors):

....

return matches

elif matcher_type == "FLANN":

def matcher(queryDescriptors, trainDescriptors):

....

return good_matches

else:

raise NotImplementedError(f"Matcher of type {descriptor_type} is not supported")

return matcherEverything inside the main script is parameterized with a cfg variable that contain information from a config file config.yaml.

model:

# one of SIFT, SURF, KAZE, AKAZE, ORB, BRISK

detector_type: SIFT

# one of SIFT, SURF, KAZE, AKAZE, ORB, BRISK, BRIEF, FREAK

descriptor_type: SIFT

# one of BF, FLANN

matcher_type: FLANN

data:

query_image_path: ${hydra:runtime.cwd}/data/query/

train_set_path: ${hydra:runtime.cwd}/data/train_set/

num_matches_show: 10

output_dir_path: results/

image_target_width: 700

hydra:

sweep:

subdir: ${hydra.job.override_dirname}Here you can see all parameters including possible alterations in model parts types.

The main function of the script looks like this

@hydra.main(config_path="conf", config_name="config")

def my_app(cfg):

# get detector, descriptor, extractor and matcher using factory methods

try:

detector = factories.detector_factory(cfg)

descriptor = factories.descriptor_factory(cfg)

extractor = factories.feature_extractor_factory(detector, descriptor)

matcher = factories.matcher_factory(cfg)

except Exception as e:

logging.critical(e, exc_info=True)

logging.info(f"Failed on model initialization")

return None

# load query image

path = os.path.join(

cfg.data.query_image_path,

os.listdir(cfg.data.query_image_path)[0]

)

query_image = cv.imread(filename = path,

flags = cv.IMREAD_GRAYSCALE)

query_image = image_resize(query_image, width=cfg.data.image_target_width)

try:

query_keypoints, query_descriptors = extractor(query_image)

except Exception as e:

logging.critical(e, exc_info=True)

logging.info(f"Broke on query image feature extraction")

return None

# check output directory

if not os.path.exists(cfg.data.output_dir_path):

os.mkdir(cfg.data.output_dir_path)

# for each image in training set directory find matches and draw results

for item in os.listdir(cfg.data.train_set_path):

train_image_path = os.path.join(cfg.data.train_set_path, item)

train_image = cv.imread(filename = train_image_path,

flags = cv.IMREAD_GRAYSCALE)

train_image = image_resize(train_image, width=cfg.data.image_target_width)

try:

train_keypoints, train_descriptors = extractor(train_image)

except Exception as e:

logging.critical(e, exc_info=True)

logging.info(f"Broke on {train_image_path} image feature extraction")

return None

try:

matches = matcher(query_descriptors, train_descriptors)

logging.info(f"Found {len(matches)} matches between query and {item}")

except Exception as e:

logging.critical(e, exc_info=True)

logging.info(f"Broke on {train_image_path} image feature matching")

return None

output = cv.drawMatches(img1 = query_image,

keypoints1 = query_keypoints,

img2 = train_image,

keypoints2 = train_keypoints,

matches1to2 = matches[:cfg.data.num_matches_show],

outImg = None,

flags = cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

cv.imwrite(os.path.join(cfg.data.output_dir_path, item), output)

logging.info("Execution succeeded!")

Regarding model parameters it decides what to instantiate and run matching loop looking for a query features among target images.

Results Comparison

Let's have a look at the resulting pictures.

C1. Compare two <detector,descriptor> pairs

model.descriptor_type=BRIEF,model.detector_type=AKAZE,model.matcher_type=FLANN

Not even close

The same picture pair with model.descriptor_type=AKAZE,model.detector_type=AKAZE,model.matcher_type=FLANN

Much better!

C2. Match objects captured under different angles

model.descriptor_type=AKAZE,model.detector_type=AKAZE,model.matcher_type=FLANN

FLANN won't give any valid match

While brute-force do

C3. BRISK features vs SIFT

model.descriptor_type=BRISK,model.detector_type=BRISK,model.matcher_type=FLANN

It is seen on the first image BRISK rely heavily on the horizontal line near the border of the bill

model.descriptor_type=SIFT,model.detector_type=SIFT,model.matcher_type=FLANN

While SIFT relies on the vertical border on the first image and maps one point of the second to different features of the query.

Conclusion

Computer Vision and other fields that work with data in order to find insights are heavily relied on for experiments. It is crucial to have a possibility to prototype and run them fast, the faster you can check a hypothesis the faster you can find a direction for the new hypothesis. And hopefully, it'll lead to a desirable result... But anyway, when you do a lot of experiments progress tracking is also important. It is very great that Hydra provides a framework that adequately stores results on disk. Hydra is often used in experiments with neural network frameworks such as PyTorch and TensorFlow. But OpenCV has repeatedly shown usefulness in different CV tasks and this time isn't an exception. I hope that this tutorial gives you a fresh glance at how to maintain your CV experiments even with OpenCV not only with neural networks frameworks.

Acknowledgments

Some parts in my GitHub project are inspired by https://github.com/whoisraibolt/Feature-Detection-and-Matching repository

Opinions expressed by DZone contributors are their own.

Comments