Navigating API Challenges in Kubernetes

Traditional API management solutions struggle to keep pace with the distributed nature of Kubernetes. Dive into some solutions for efficient Kubernetes-native API management.

Kubernetes has become the standard for container orchestration. Although APIs are a key part of most architectures, integrating API management directly into this ecosystem requires careful consideration and significant effort. Traditional API management solutions often struggle to cope with the dynamic, distributed nature of Kubernetes. This article explores these challenges, discusses solution paths, shares best practices, and proposes a reference architecture for Kubernetes-native API management.

The Complexities of API Management in Kubernetes

Kubernetes is a robust platform for managing containerized applications, offering self-healing, load balancing, and seamless scaling across distributed environments. This makes it ideal for microservices, especially in large, complex infrastructures where declarative configurations and automation are key. According to a 2023 CNCF survey, 84% of organizations are adopting or evaluating Kubernetes, highlighting the growing demand for Kubernetes-native API management to improve scalability and control in cloud native environments.

However, API management within Kubernetes brings its own complexities. Key tasks like routing, rate limiting, authentication, authorization, and monitoring must align with the Kubernetes architecture, often involving multiple components like ingress controllers (for external traffic) and service meshes (for internal communications). The overlap between these components raises questions about when and how to use them effectively in API management. While service meshes handle internal traffic security well, additional layers of API management may be needed to manage external access, such as authentication, rate limiting, and partner access controls.

Traditional API management solutions, designed for static environments, struggle to scale in Kubernetes’ dynamic, distributed environment. They often face challenges in integrating with native Kubernetes components like ingress controllers and service meshes, leading to inefficiencies, performance bottlenecks, and operational complexities. Kubernetes-native API management platforms are better suited to handle these demands, offering seamless integration and scalability.

Beyond these points of confusion, there are other key challenges that make API management in Kubernetes a complex task:

- Configuration management: Managing API configurations across multiple Kubernetes environments is complex because API configurations often exist outside Kubernetes-native resources (kinds), requiring additional tools and processes to integrate them effectively into Kubernetes workflows.

- Security: Securing API communication and maintaining consistent security policies across multiple Kubernetes clusters is a complex task that requires automation. Additionally, some API-specific security policies and enforcement mechanisms are not natively supported in Kubernetes.

- Observability: Achieving comprehensive observability for APIs in distributed Kubernetes environments is difficult, requiring users to separately configure tools that can trace calls, monitor performance, and detect issues.

- Scalability: API management must scale alongside growing applications, balancing performance and resource constraints, especially in large Kubernetes deployments.

Embracing Kubernetes-Native API Management

As organizations modernize, many are shifting from traditional API management to Kubernetes-native solutions, which are designed to work with Kubernetes' built-in features and ecosystem components such as ingress controllers, service meshes, and automated scaling. Unlike traditional API management, which often requires manual configuration across clusters, Kubernetes-native platforms express API management as part of the Kubernetes configuration itself, which provides seamless integration, consistent security policies, and better resource efficiency.

Here are a few ways that you can embrace Kubernetes-native API management:

- Represent APIs and related artifacts the Kubernetes way: Custom Resource Definitions (CRDs) allow developers to define their own Kubernetes-native resources, including custom objects that represent APIs and their associated policies. This approach enables developers to manage APIs declaratively, using Kubernetes manifests, which are version-controlled and auditable. For example, a CRD could be used to define an API's rate-limiting policy or access controls, ensuring that these configurations are consistently applied across all environments.

- Select the right gateway for Kubernetes integration: Traditional Kubernetes ingress controllers primarily handle basic HTTP traffic management but lack the advanced features necessary for comprehensive API management, such as fine-grained security, traffic shaping, and rate limiting. Kubernetes-native API gateways, built on the Kubernetes Gateway API Specification, offer these advanced capabilities while seamlessly integrating with Kubernetes environments. These API-specific gateways can complement or replace traditional ingress controllers, providing enhanced API management features.

  It's important to note that the Gateway API Specification focuses mainly on routing capabilities and doesn't inherently cover all API management functionalities like business plans, subscriptions, or fine-grained permission validation. API management platforms often extend these capabilities to support features like monetization and access control. Therefore, selecting the right gateway that aligns with both the Gateway API Specification and the organization's API management needs is critical. In API management within an organization, a cell-based architecture may be needed to isolate components or domains. API gateways can be deployed within or across cells to manage communication, ensuring efficient routing and enforcement of policies between isolated components.

- Control plane and portals for different user personas: While gateways manage API traffic, API developers, product managers, and consumers expect more than basic traffic handling. They need features like API discovery, self-service tools, and subscription management to drive adoption and business growth. Building a robust control plane that lets users control these capabilities is crucial. This ensures a seamless experience that meets both technical and business needs.

- GitOps for configuration management: GitOps with CRDs extends to API management by using Git repositories for version control of configurations, policies, and security settings. This ensures that API changes are tracked, auditable, and revertible, which is essential for scaling. CI/CD tools automatically sync the desired state from Git to Kubernetes, ensuring consistent API configuration across environments. This approach integrates well with CI/CD pipelines, automating testing, reviewing, and deployment of API-related changes to maintain the desired state.

- Observability with OpenTelemetry: In Kubernetes environments, traditional observability tools struggle to monitor APIs interacting with distributed microservices. OpenTelemetry solves this by providing a vendor-neutral way to collect traces, metrics, and logs, offering essential end-to-end visibility. Its integration helps teams monitor API performance, identify bottlenecks, and respond to issues in real-time, addressing the unique observability challenges of Kubernetes-native environments.

- Scalability with Kubernetes' built-in features: Kubernetes' horizontal pod autoscaling (HPA) adjusts API gateway pods based on load, but API management platforms must integrate with metrics like CPU usage or request rates for effective scaling. API management tools should ensure that rate limiting and security policies scale with traffic and apply policies specific to each namespace, supporting multi-environment setups. This integration allows API management solutions to fully leverage Kubernetes' scalability and isolation features.
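The scaling behavior described in the last item can be made concrete. The following is a minimal Python sketch of the replica calculation documented for the Horizontal Pod Autoscaler, where the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the default bounds, and the sample numbers are assumptions for illustration. A real HPA also handles stabilization windows, missing metrics, and pod readiness, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Estimate how many gateway pods an HPA would ask for.

    current_metric and target_metric are per-pod averages of the same
    quantity, for example requests per second or CPU utilization.
    The bounds and tolerance defaults here are illustrative assumptions.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    ratio = current_metric / target_metric

    # Skip scaling when the observed value is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)

    # Always stay within the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))
```

For example, with 4 gateway pods each seeing 200 requests per second against a target of 100, `desired_replicas(4, 200, 100)` returns 8. Rate limits that are enforced per pod would need to be revisited whenever the replica count changes like this, which is one reason the policies have to scale with the gateway.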

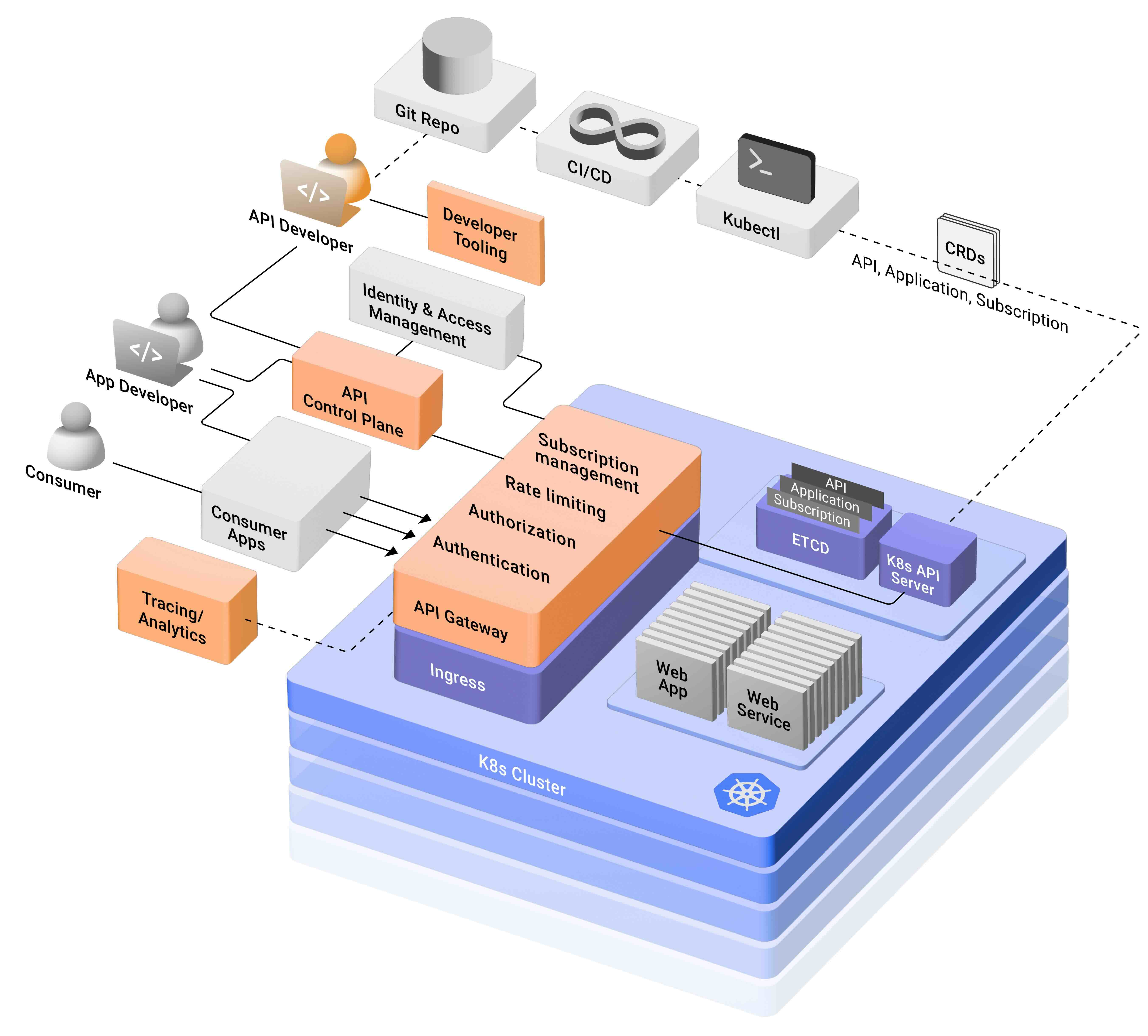

Reference Architecture for Kubernetes-Native API Management

To design a reference architecture for API management in a Kubernetes environment, we must first understand the key components of the Kubernetes ecosystem and their interactions. If you're already familiar with Kubernetes, feel free to move directly to the architecture details.

Below is a list of the key components of the Kubernetes ecosystem and the corresponding interactions.

- API gateway: Deploy an API gateway as ingress or as another gateway that supports the Kubernetes Gateway API Specification.

- CRDs: Use CRDs to define APIs, security policies, rate limits, and observability configurations.

- GitOps for lifecycle management: Implement GitOps workflows to manage API configurations and policies.

- Observability with OpenTelemetry: Integrate OpenTelemetry to collect distributed traces, metrics, and logs.

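- Metadata storage with etcd: Rely on etcd, Kubernetes’ distributed key-value store, to persist metadata such as API definitions, configuration states, and security policies. Custom resources created through the Kubernetes API server are stored there, so components read and write this metadata through the API server rather than accessing etcd directly.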

- Security policies and RBAC: In Kubernetes, RBAC provides consistent access control over the resources that define APIs and gateways, while network policies ensure traffic isolation between namespaces, securing API communications.
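To illustrate how the gateway, CRD, and GitOps items in this list fit together, here is a minimal Python sketch. It builds a Gateway API `HTTPRoute` manifest and a custom rate-limit resource as plain dictionaries, then compares the desired state (as it would be stored in Git) with the live state to decide what a sync would do. The `RateLimitPolicy` kind, its `apim.example.com` group, its fields, and all resource names are hypothetical and not part of any real product. Real GitOps tools also ignore server-populated fields such as `status` when comparing, which this sketch does not attempt.

```python
import json


def http_route(name, namespace, gateway, path_prefix, service, port):
    """Build a Gateway API HTTPRoute that sends a path prefix to a Service."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "parentRefs": [{"name": gateway}],
            "rules": [{
                "matches": [{"path": {"type": "PathPrefix", "value": path_prefix}}],
                "backendRefs": [{"name": service, "port": port}],
            }],
        },
    }


def rate_limit_policy(name, namespace, route, requests_per_minute):
    """Build a HYPOTHETICAL custom resource attaching a rate limit to a route."""
    return {
        "apiVersion": "apim.example.com/v1alpha1",  # invented group/version
        "kind": "RateLimitPolicy",                  # invented kind
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "targetRef": {"kind": "HTTPRoute", "name": route},
            "requestsPerMinute": requests_per_minute,
        },
    }


def resource_key(manifest):
    """Identify a resource by kind, namespace, and name."""
    meta = manifest["metadata"]
    return (manifest["kind"], meta["namespace"], meta["name"])


def plan(desired, live):
    """Return sorted (action, key) pairs that would move live to desired."""
    desired_by_key = {resource_key(m): m for m in desired}
    live_by_key = {resource_key(m): m for m in live}
    actions = []
    for key, manifest in desired_by_key.items():
        if key not in live_by_key:
            actions.append(("create", key))
        elif live_by_key[key] != manifest:
            actions.append(("update", key))
    for key in live_by_key:
        if key not in desired_by_key:
            actions.append(("delete", key))
    return sorted(actions)


if __name__ == "__main__":
    route = http_route("orders", "prod", "public-gw", "/orders", "orders-svc", 8080)
    # kubectl accepts JSON as well as YAML, so this output could be applied as-is.
    print(json.dumps(route, indent=2))
```

Because both resources are ordinary manifests, they can be version-controlled, reviewed, and rolled back like any other Kubernetes configuration, and the same comparison works for routes and policies alike.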

Beyond the components listed above, this reference architecture also depends on a control plane with consumer and producer portals, on rate limiting and key and token management services, and on developer tools for API and configuration design.

Figure: Reference architecture for Kubernetes-native API management

Conclusion: Embracing Kubernetes-Native API Management for Operational Excellence

Managing APIs in Kubernetes introduces unique challenges that traditional API management solutions are not equipped to handle. Kubernetes-native, declarative approaches are essential to fully leverage features like autoscaling, namespaces, and GitOps for managing API configurations and security. By adopting these native solutions, organizations can ensure efficient API management that aligns with Kubernetes' dynamic, distributed architecture. As Kubernetes adoption grows, embracing these native tools becomes critical for modern API-driven architectures.

This article was shared as part of DZone's media partnership with KubeCon + CloudNativeCon.
