Retrieval-Augmented Generation (RAG): Enhancing AI-Language Models With Real-World Knowledge

In this post, we'll take a closer look at RAG, how it works, where it's being used, and how it might change our interactions with AI in the future.


In recent years, AI has made big leaps forward, mainly because of large language models (LLMs). LLMs are really good at understanding and generating human-like text, and they have led to the creation of several new tools, such as advanced chatbots and AI writers.

While LLMs are great at generating fluent, human-like text, they sometimes struggle to get facts right. This can be a huge problem when accuracy is really important.

So what’s the solution? The answer is Retrieval-Augmented Generation (RAG).

RAG keeps the text-generation strengths of models like GPT and adds the ability to look up information from outside sources, such as proprietary databases, articles, and other content. This helps the AI produce text that's not only well-written but also more factually and contextually correct.

By combining the ability to generate text with the power to find and use accurate and relevant information, RAG opens up a lot of new possibilities. It helps to bridge the gap between AI that just writes text and AI that can use actual knowledge.


What Is Retrieval Augmented Generation (RAG)?

Let's start with a formal definition of RAG:

Retrieval-Augmented Generation (RAG) is an AI framework that enhances large language models (LLMs) by connecting them with external knowledge bases. This gives the model access to up-to-date, accurate information, improving the relevance and factual accuracy of its responses.

Now, let's break this down into simple language so that it's easy to understand.

We’ve all used AI chatbots like ChatGPT in the last 2 years that can answer our questions. These are powered by Large Language Models (LLMs), which were trained on huge amounts of internet content and data. They're great at producing human-like text on almost any topic. It looks like they are perfectly capable of answering all our questions, but that’s not always true. They sometimes share information that is inaccurate or factually wrong.

This is where RAG comes into play. Here's how it works (at a very high level):

- You ask a question.

- RAG searches a curated knowledge base of reliable info.

- It retrieves relevant information.

- It passes this to the LLM.

- The LLM uses this accurate info to answer you.

The result of this process is responses that are backed by accurate information.
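The five steps above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the knowledge base is three invented sentences, retrieval is a toy word-overlap score rather than a real search engine, and `echo_llm` is a hypothetical placeholder that returns its prompt instead of calling a real model.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical knowledge base, invented for this example.
KNOWLEDGE_BASE = [
    "Economy passengers may check one bag of up to 50 pounds.",
    "Business class passengers may check two bags of up to 70 pounds each.",
    "Carry-on bags are limited to 17 pounds.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its words as a set, without punctuation."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Steps 2-3: rank documents by words shared with the question (toy scoring)."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def answer(question: str, llm: Callable[[str], str]) -> str:
    """Steps 4-5: pass the retrieved text to the LLM and return its reply."""
    passages = retrieve(question, KNOWLEDGE_BASE)
    prompt = "Context:\n" + "\n".join(passages) + f"\n\nQuestion: {question}"
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Placeholder for a real model call: it simply returns the prompt it was given."""
    return prompt


if __name__ == "__main__":
    print(answer("How many bags can business class passengers check?", echo_llm))
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM provider; in practice, `echo_llm` would be replaced by a function that calls whichever model you use.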

Let's understand this with an example: Imagine you want to know about the baggage allowance for an international flight. A traditional LLM like ChatGPT might say: "Typically, you get one checked bag up to 50 pounds and one carry-on. But check with your airline for specifics." A RAG-enhanced system would say: "For X airline, economy passengers get one 50-pound checked bag and a 17-pound carry-on. Business class gets two 70-pound bags. Watch out for special rules on items like sports gear, and always verify at check-in."

Did you notice the difference? RAG provides specific, more accurate information tailored to the actual airline policies. In summary, RAG makes these systems more reliable and trustworthy. It's very important in developing AI systems that are more dependable for real-world applications.

How RAG Works

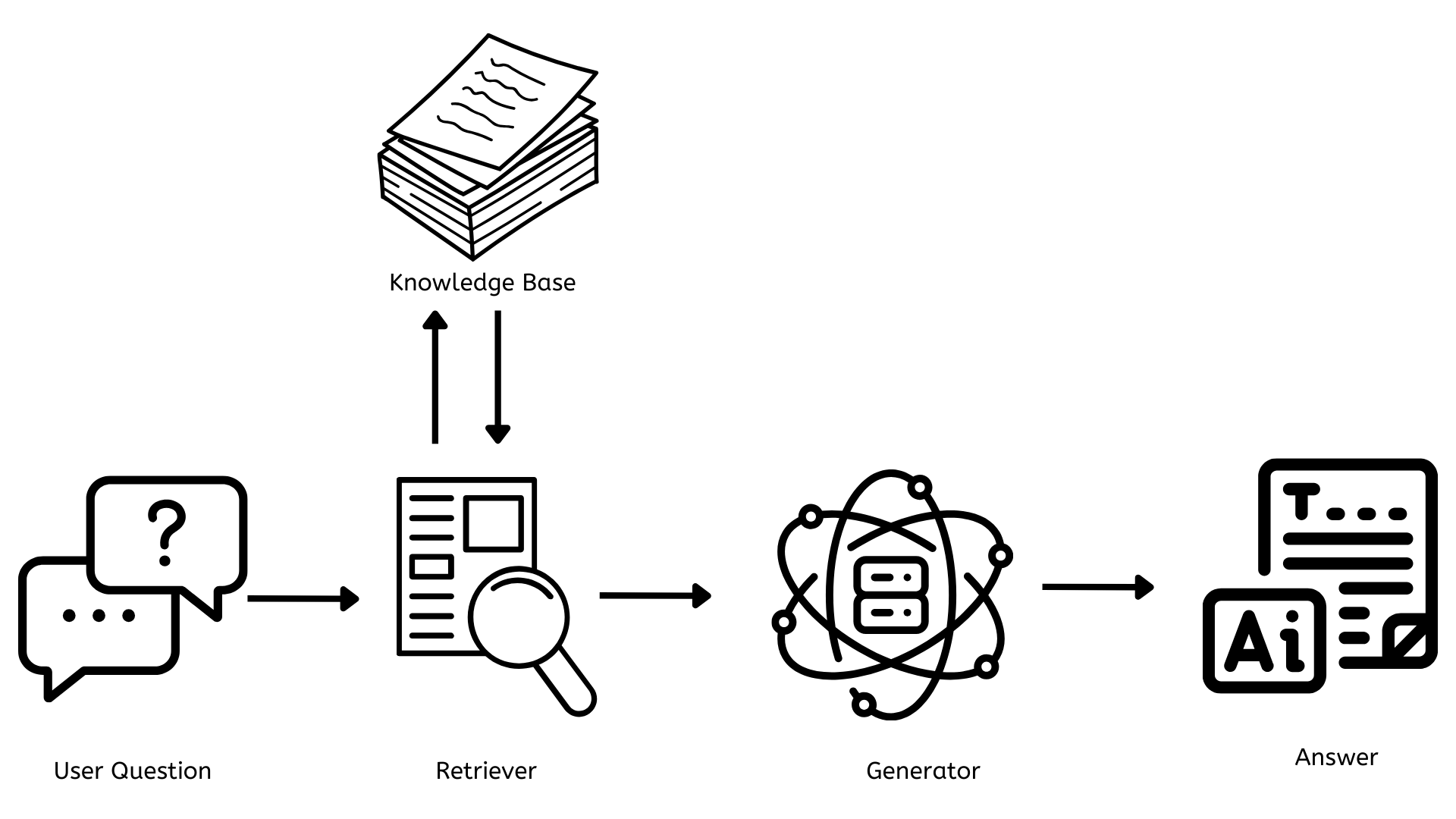

Now that we have a good idea of what RAG is, let’s understand how it works. First, let’s start with a simple architecture diagram.

The Key Components of RAG

As the architecture diagram above shows, three key components sit between the user's question and the final answer, and each is crucial for RAG to work.

- Knowledge base

- Retriever

- Generator

Now, let’s look at each one in turn.

The Knowledge Base

This is the repository that contains all the documents, articles, or data that can be referenced to answer questions. It needs to be updated regularly with new and relevant information so that responses stay accurate and users get the most relevant and up-to-date information.

From a technology standpoint, this typically uses a vector database such as Pinecone, or a vector search library such as FAISS, to store text as numerical representations (embeddings), thus allowing for quick and efficient searches.
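To make the idea of storing text as embeddings concrete, here is a minimal sketch. It is not how Pinecone or FAISS work internally: `embed` is a toy function that hashes words into a fixed-size vector (a real system would call a trained embedding model), and `InMemoryVectorStore` is a hypothetical stand-in that keeps everything in Python lists.

```python
import hashlib
import math

DIM = 64  # Arbitrary small dimension chosen for this toy embedding.


def embed(text):
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalize.

    A real system would call a trained embedding model here instead.
    """
    vec = [0.0] * DIM
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: parallel lists of texts and vectors."""

    def __init__(self):
        self.texts = []
        self.vectors = []

    def add(self, text):
        """Store the original text alongside its embedding."""
        self.texts.append(text)
        self.vectors.append(embed(text))

    def __len__(self):
        return len(self.texts)


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("Carry-on bags are limited to 17 pounds.")
    store.add("Refunds are processed within 7 days.")
    print(len(store), "documents stored, each as a", DIM, "dimensional vector")
```

The point of the sketch is the shape of the data: every document is kept twice, once as readable text and once as a fixed-length vector that can be compared numerically.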

The Retriever

This is responsible for finding relevant documents or data that are related to the user question. When a question is asked, the retriever quickly searches through the knowledge base to find the most relevant information.

From a technology standpoint, this often uses dense retrieval methods such as Dense Passage Retrieval (DPR), sometimes combined with sparse keyword-based methods such as BM25. Dense methods convert the user question into the same type of numerical representation used in the knowledge base and match it with relevant information, while BM25 scores documents by the terms they share with the question.
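The matching step can be sketched as ranking documents by cosine similarity between a question vector and each document vector. As a loudly stated assumption, the vectors here are plain word counts, used as a stand-in for the learned embeddings a dense retriever would produce; the function names are hypothetical.

```python
import math
from collections import Counter


def to_vector(text):
    """Stand-in for an embedding model: a sparse vector of word counts."""
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k (score, document) pairs, highest similarity first."""
    question_vec = to_vector(question)
    scored = [(cosine(question_vec, to_vector(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    docs = [
        "Carry-on bags are limited to 17 pounds.",
        "Refunds are processed within 7 days.",
    ]
    for score, doc in retrieve("What is the carry-on limit in pounds?", docs):
        print(round(score, 3), doc)
```

Swapping `to_vector` for a real embedding model would change the quality of the matches but not the overall ranking logic.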

The Generator

This is responsible for generating content that’s coherent and contextually relevant to the user question. It takes the information from the retriever and uses it to craft a response that answers the question.

From a technology standpoint, this is powered by a Large Language Model (LLM) such as GPT-4 or openly available alternatives like Llama. (Encoder models such as BERT are more commonly used on the retrieval side, to produce embeddings, than for generating text.) These models are trained on massive datasets and can generate human-like text based on the input they receive.
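One common way to hand retrieved information to the generator is to place it in the prompt ahead of the question. The sketch below assumes a hypothetical `llm` callable that takes a prompt string and returns text; the prompt wording is illustrative, not a recommended template.

```python
def build_prompt(question, passages):
    """Combine numbered passages and the question into a single prompt string."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def generate(question, passages, llm):
    """Call the (hypothetical) llm callable with the assembled prompt."""
    return llm(build_prompt(question, passages))


if __name__ == "__main__":
    passages = ["Carry-on bags are limited to 17 pounds."]
    # Print the prompt itself, since no real model is attached in this sketch.
    print(build_prompt("What is the carry-on limit?", passages))
```

Numbering the passages is a design choice that makes it easier to ask the model to refer back to a specific passage in its answer.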

Benefits and Applications of RAG

Now that we know what RAG is and how it works, let’s explore some of the benefits that it offers as well as applications of RAG.

Benefits of RAG

Up-To-Date Knowledge

Unlike standalone LLMs (such as the model behind ChatGPT), which are limited to their training data, RAG systems can access and use the most current information available in their knowledge base.

Enhanced Accuracy and Reduced Hallucinations

RAG improves the accuracy of responses by using factual, up-to-date information in the knowledge base. This largely reduces the problem of "hallucinations": instances where the AI generates plausible-sounding but incorrect information.

Customization and Specialization

Companies can tailor RAG systems to their specific needs by using specialized knowledge bases, creating AI assistants that are experts in specific domains.

Transparency and Explainability

RAG systems can often cite the sources of their information, making it easier for users to verify claims and understand the reasoning behind responses.
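A simple way to support this is to return the sources alongside the generated text. The following is a minimal sketch, assuming each retrieved passage carries a `source` label; the `Passage` and `SourcedAnswer` types, the file names, and the `llm` callable are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # Hypothetical label, such as a document title or file name.
    text: str


@dataclass
class SourcedAnswer:
    text: str
    sources: list[str]


def answer_with_sources(question, passages, llm):
    """Generate an answer and attach the sources of the passages that were used."""
    context = "\n".join(p.text for p in passages)
    text = llm(f"Context:\n{context}\n\nQuestion: {question}")
    # Deduplicate sources while preserving retrieval order.
    sources = list(dict.fromkeys(p.source for p in passages))
    return SourcedAnswer(text=text, sources=sources)


if __name__ == "__main__":
    passages = [
        Passage("baggage-policy.md", "Economy passengers may check one 50-pound bag."),
        Passage("baggage-policy.md", "Carry-on bags are limited to 17 pounds."),
    ]
    result = answer_with_sources("What can I bring?", passages, lambda prompt: "(model reply)")
    print(result.text, "| sources:", result.sources)
```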

Scalability and Efficiency

RAG allows for the efficient use of computational resources. Instead of constantly retraining large models or building new ones, organizations can update their knowledge bases, making it easier to scale and maintain AI systems.
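The sketch below illustrates why updating a knowledge base is cheaper than retraining: replacing one document immediately changes what can be retrieved, and the model itself is never touched. The `KnowledgeBase` class, its `upsert` and `search` methods, and the policy text are hypothetical, and the keyword search is a deliberate simplification.

```python
class KnowledgeBase:
    """Hypothetical document store keyed by document ID."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc_id, text):
        """Insert a new document, or replace the existing one with the same ID."""
        self._docs[doc_id] = text

    def search(self, keyword):
        """Return every document containing the keyword (case-insensitive)."""
        return [t for t in self._docs.values() if keyword.lower() in t.lower()]


if __name__ == "__main__":
    kb = KnowledgeBase()
    kb.upsert("baggage", "Checked bag limit: 50 pounds.")
    print(kb.search("bag"))

    # The policy changes: only the stored document is updated, not the model.
    kb.upsert("baggage", "Checked bag limit: 44 pounds.")
    print(kb.search("bag"))
```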

Applications of RAG

Customer Service

RAG makes customer support chatbots smarter and more helpful. These chatbots can access the most up-to-date information from the knowledge base and provide precise and contextual answers.

Personalized Assistants

Companies can create customized AI assistants that tap into their unique and proprietary data. By leveraging the organization’s internal documents on policies, procedures, and other data, these assistants can answer employee queries quickly and efficiently.

Voice of Customer

Organizations can use RAG to analyze a wide array of customer feedback channels and derive actionable insights from them, building a comprehensive understanding of customer experiences, sentiments, and needs. This enables them to quickly identify and address critical issues, make data-driven decisions, and continuously improve their products based on a complete picture of customer feedback across all touchpoints.

The Future of RAG

RAG has emerged as a game-changing technology in the field of artificial intelligence, combining the power of large language models with dynamic information retrieval. Many organizations are already taking advantage of this and building custom solutions for their needs.

As we look to the future, RAG is going to transform how we interact with information and make decisions. Future RAG systems will:

- Have greater contextual understanding and enhanced personalization

- Be multi-modal, going beyond text to incorporate images, audio, and video

- Have real-time knowledge base updates

- Have seamless integration with many workflows to improve productivity and enhance collaboration

Conclusion

In conclusion, RAG is going to revolutionize how we interact with AI and information. By closing the gap between AI-generated content and factual accuracy, RAG sets the stage for AI systems that are not only more capable but also more accurate and trustworthy. As the technology continues to evolve, our engagement with information will become more efficient and accurate than ever before.

