Exploring Foundations of Large Language Models (LLMs): Tokenization and Embeddings

Learn more about tokenization and embeddings, which play a vital role in understanding human queries and converting knowledge bases to generate responses.

Have you ever wondered how various Gen AI tools like ChatGPT or Bard efficiently answer all our complicated questions? What goes on behind the scenes to process our question and generate a human-like response from such a large volume of data? Let’s dive deep.

In the era of Generative AI, natural language processing plays a crucial role in how machines understand and generate human language. Its applications include smart chatbots, translation, sentiment analysis, building knowledge bases, and many more. The central theme in implementing these Gen AI applications is to store data from various sources and query it to generate human-language responses. But how does this work internally? In this article, we will explore the concepts of tokenization and embeddings, which play a vital role in understanding human queries and converting knowledge bases to generate responses.

What Is Tokenization?

For LLMs, it is necessary to convert human language into a format that the model can process. Tokenization is the process of breaking down human text into smaller units known as "tokens." These tokens can be sentences, words, subwords, or characters, depending on the tokenizer. Each token is then assigned a token ID, an integer index into the model's vocabulary, for further processing.

Let's dive into understanding how each works:

1. Sentence-Level Tokenizers

In this method, we split large texts into individual sentences. These are often used for summarization and translation. Examples are:

- spaCy sentence tokenizer

- NLTK Punkt sentence tokenizer
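As a rough illustration of the idea, the sketch below splits text into sentences with a single regular expression. It is a deliberately naive stand-in, not how spaCy or NLTK Punkt work internally; the `split_sentences` helper is a hypothetical name, and the rule will mis-split abbreviations such as "Dr." that the real libraries handle.

```python
import re

def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' when whitespace follows."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]

text = "Tokenization comes first. Embeddings come next! Is that all?"
sentences = split_sentences(text)
for sentence in sentences:
    print(sentence)
```

Each printed line is one sentence-level token that could then be passed to a summarization or translation step.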

2. Word-Level Tokenization

In this method, sentences are broken into words. For example, "The weather is unbelievable today" is broken down into the tokens ["The", "weather", "is", "unbelievable", "today"]. This method works well for words that are already in the vocabulary; however, it struggles with rare, unknown, or complex words. If "unbelievable" was never seen during training, a word-level tokenizer typically maps it to a generic "unknown" token, so its meaning is lost. Examples are:

- NLTK tokenizer: Part of the Natural Language Toolkit (Python)

- spaCy word tokenizer: Part of spaCy’s NLP pipeline and known for its speed and accuracy
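The limitation with unknown words can be seen in a small sketch. The code below is an assumption-laden toy, not the NLTK or spaCy implementation: the `word_tokenize` and `encode` helpers, the `<unk>` token name, and the tiny training text are all made up for illustration.

```python
import re

def word_tokenize(text):
    # Lowercase the text and keep runs of letters, digits, or apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())

# Build a vocabulary (word -> token ID) from a tiny, made-up training text.
training_text = "The weather is nice today. The weather is cold today."
vocab = {"<unk>": 0}
for word in word_tokenize(training_text):
    vocab.setdefault(word, len(vocab))

def encode(text):
    # Words missing from the vocabulary all collapse to the <unk> ID.
    return [vocab.get(word, vocab["<unk>"]) for word in word_tokenize(text)]

tokens = word_tokenize("The weather is unbelievable today")
ids = encode("The weather is unbelievable today")
print(tokens)  # ['the', 'weather', 'is', 'unbelievable', 'today']
print(ids)     # [1, 2, 3, 0, 5]
```

Because "unbelievable" never appeared in the training text, it receives ID 0, the same ID every other unseen word would get.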

3. Subword Tokenization

This technique addresses the limitation of word-level tokenization by breaking rare or complex words into smaller units. For example, it can break down "unbelievable" into ["un", "believ", "able"]. This helps with processing compound and unfamiliar words. Examples are:

- Byte pair encoding (BPE): Widely used subword tokenizer

- WordPiece tokenizer: Used in BERT
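To make byte pair encoding more concrete, here is a minimal sketch of its core loop: repeatedly find the most frequent adjacent pair of symbols and merge it into a new symbol. The word frequencies and the `learn_bpe` and `apply_bpe` helper names are hypothetical, and production tokenizers add details (byte-level handling, end-of-word markers, pre-tokenization) that are omitted here.

```python
from collections import Counter

def get_pair_counts(words):
    # Count adjacent symbol pairs, weighted by how often each word occurs.
    counts = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            counts[pair] += freq
    return counts

def merge_pair(words, pair):
    # Replace every occurrence of `pair` with a single merged symbol.
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    # Start from single characters and learn an ordered list of merges.
    words = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = get_pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)
        merges.append(best)
        words = merge_pair(words, best)
    return merges

def apply_bpe(word, merges):
    # Tokenize a (possibly unseen) word by replaying the merges in order.
    symbols = tuple(word)
    for pair in merges:
        symbols = next(iter(merge_pair({symbols: 1}, pair)))
    return list(symbols)

# Made-up word frequencies standing in for a training corpus.
word_freqs = {"unbelievable": 3, "believable": 4, "unable": 5, "able": 6, "believe": 4}
merges = learn_bpe(word_freqs, num_merges=8)
print(apply_bpe("unbelievably", merges))
```

The word "unbelievably" is not in the training data, yet it can still be tokenized because any piece that was never merged simply stays as individual characters.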

4. Character-Level Tokenization

Some models tokenize text into individual characters. This method is highly granular: the vocabulary is tiny and no word is ever unknown, but sequences become much longer and a single character carries little meaning on its own. Examples:

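- CANINE: Operates directly on Unicode characters

- ByT5: Operates on raw UTF-8 bytes (GPT-2, by contrast, uses byte-level BPE, which is a subword method)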
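The trade-off of character-level tokenization can be sketched in a few lines. This is only an illustration under simple assumptions: the vocabulary is built from a single word, and the variable names are made up for the example.

```python
text = "unbelievable"

# Every character becomes its own token.
chars = list(text)

# The vocabulary only needs one entry per distinct character.
vocab = {ch: idx for idx, ch in enumerate(sorted(set(text)))}
ids = [vocab[ch] for ch in chars]

# Decoding is a plain reverse lookup, so nothing is ever "unknown".
id_to_char = {idx: ch for ch, idx in vocab.items()}
decoded = "".join(id_to_char[i] for i in ids)

print(len(vocab), "distinct symbols,", len(ids), "tokens for one word")
print(decoded)
```

One word turns into twelve tokens drawn from only eight symbols, which shows why sequences get long while the vocabulary stays small.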

5. Hybrid Tokenizer

In this method, different tokenizers are combined, such as word and subword tokenizations, to optimize efficiency and flexibility.

- GPT-3 tokenizer: Uses byte-level BPE, which breaks words down into subwords and can fall back to individual bytes for unknown or unusual strings

To summarize, large bodies of text (also known as a corpus) are broken down into smaller units that can be further processed to generate human-like responses. Once tokenization is complete, the next step is embedding.

What Are Embeddings?

After tokenization is complete, the tokens need to be converted into a numerical format that the model can process. This process is called embedding. Embeddings are dense, fixed-size vectors representing each token. These vectors allow models to capture the semantic and syntactic relationships between tokens.

There are three common types of embeddings.

1. Traditional Word Embeddings

These are traditional embedding methods, such as Word2Vec and GloVe, in which each word has a fixed representation regardless of context. This leads to issues because words can have different meanings depending on context. For example, "bank" can mean a financial institution or the land alongside a river.
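A fixed representation is essentially a lookup table from word to vector. The sketch below uses made-up three-dimensional vectors (real embeddings have hundreds of dimensions and are learned from data) and a hypothetical `embed` helper to show that "bank" receives the same vector in both senses.

```python
# Hypothetical static embedding table; the numbers are invented for illustration.
embedding_table = {
    "bank": [0.8, 0.1, 0.3],
    "river": [0.1, 0.9, 0.2],
    "money": [0.9, 0.0, 0.4],
    "<unk>": [0.0, 0.0, 0.0],
}

def embed(sentence):
    # Each word is looked up independently; its neighbors are never consulted.
    words = sentence.lower().split()
    return [embedding_table.get(word, embedding_table["<unk>"]) for word in words]

finance_sentence = embed("money in the bank")
river_sentence = embed("river bank")

# "bank" is the last word in both sentences and gets an identical vector.
print(finance_sentence[-1] == river_sentence[-1])  # True
```

Because the lookup ignores the surrounding words, the table has no way to tell the two meanings of "bank" apart.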

2. Contextual Embeddings

To overcome this deficiency, modern transformer-based models generate contextual embeddings in which the vector values vary depending on the surrounding words.

3. Positional Embeddings

In this method, numerical values are added to token embeddings to encode the position of each token in the sequence. For example, "the car was in front of the truck" and "the truck was in front of the car" contain the same words but would have different embeddings, because the word order, and therefore the meaning, differs.
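One well-known way to compute such position values is the sinusoidal scheme from the original Transformer paper; the article does not say which scheme it has in mind, and many models learn their positional embeddings instead. The sketch below assumes that sinusoidal scheme, a toy dimension of 4, and invented token vectors for "car" and "truck".

```python
import math

DIM = 4  # toy embedding size; real models use far larger vectors

def positional_encoding(position, dim=DIM):
    # Even indices use sine, odd indices use cosine, at shrinking frequencies.
    vec = []
    for i in range(dim):
        angle = position / (10000 ** ((i - i % 2) / dim))
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec

# Invented token embeddings; any word not listed falls back to zeros.
token_embeddings = {
    "car": [0.5, 0.1, 0.3, 0.7],
    "truck": [0.2, 0.9, 0.4, 0.1],
}

def embed_with_position(sentence):
    unknown = [0.0] * DIM
    result = []
    for pos, token in enumerate(sentence.lower().split()):
        base = token_embeddings.get(token, unknown)
        pos_vec = positional_encoding(pos)
        # The position vector is added element-wise to the token embedding.
        result.append([b + p for b, p in zip(base, pos_vec)])
    return result

first = embed_with_position("the car was in front of the truck")
second = embed_with_position("the truck was in front of the car")

car_first = first[1]    # "car" is the second token of the first sentence
car_second = second[7]  # "car" is the last token of the second sentence
print(car_first != car_second)  # True
```

The same word ends up with a different vector in each sentence purely because of where it appears.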

How Do Tokenization and Embedding Actually Work in LLMs?

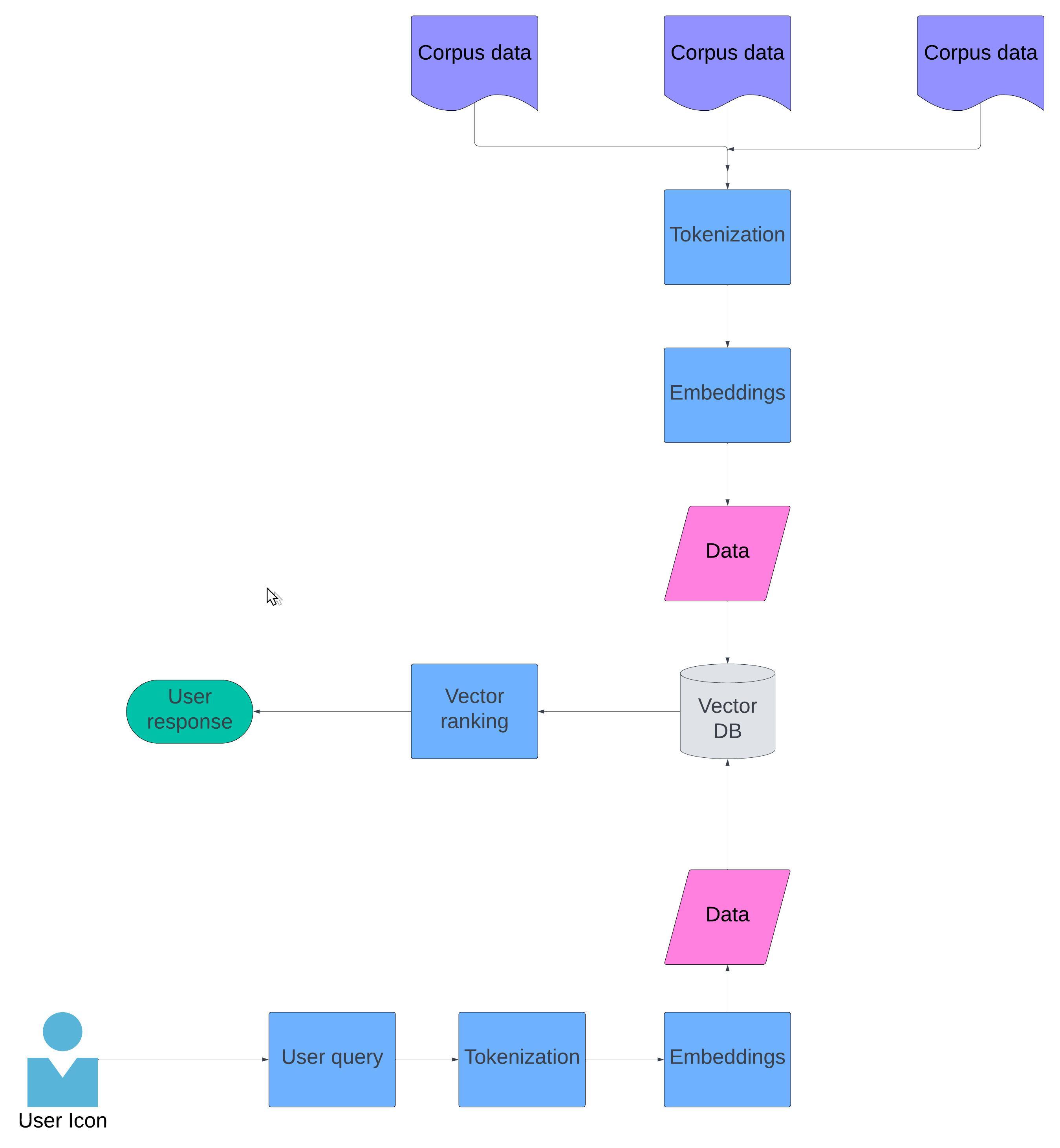

- First, the corpus data from various data sources is fed to the model, which tokenizes it and converts it into embedding vectors.

- These embeddings are then stored in a vector database.

- When the user enters a query, the query goes through the same tokenization and embedding process.

- The embedded query is then compared with the vectors stored in the vector database using a similarity measure such as:

  - Cosine similarity

  - Euclidean distance

- Once the similarity scores between the query vector and the corpus vectors are calculated, the system sorts and ranks the stored data by score.

- Based on the ranking, the most relevant data is retrieved and used to generate the response shown to the user.
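The comparison and ranking steps above can be sketched as follows. This is a simplified illustration, not how any particular vector database works: the in-memory `vector_db` dictionary, the document IDs, the three-dimensional vectors, and the `rank` helper are all assumptions made for the example.

```python
import math

def cosine_similarity(a, b):
    # Cosine of the angle between two vectors; 1.0 means same direction.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    # Straight-line distance between two vectors; smaller means more similar.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Stand-in for a vector database: document ID -> made-up embedding.
vector_db = {
    "doc_weather": [0.9, 0.1, 0.0],
    "doc_finance": [0.1, 0.8, 0.3],
    "doc_sports": [0.0, 0.2, 0.9],
}

def rank(query, db, top_k=2):
    # Score every stored vector against the query, then sort best-first.
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in db.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]

query_vector = [0.8, 0.2, 0.1]  # pretend embedding of the user's query
ranked = rank(query_vector, vector_db)
for doc_id, score in ranked:
    print(doc_id, round(score, 3))
```

With cosine similarity, higher scores rank first; if Euclidean distance were used instead, the sort order would be reversed so that the smallest distance comes first.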

Conclusion

Tokenization and embeddings are two critical processes that enable LLMs to process and generate human language. By breaking down huge amounts of data into tokens and translating them into vectors, LLMs can interpret and produce text efficiently and accurately. As GenAI evolves, improving tokenization methods and embeddings will be key to building more efficient and powerful language models.
