Publish Keycloak Events to Kafka With a Custom SPI

Learn how to write a Keycloak custom extension and publish events to Apache Kafka. Use the consumer application to analyze the service account usage.


In this post, you will build a custom Keycloak extension using its Service Provider Interfaces (SPI). The purpose of this extension is to listen to Keycloak events and publish them to an Apache Kafka cluster, with one topic per event type. A Quarkus consumer application consumes these events, stores them, and exposes API endpoints for analysis, such as login counts per client or when client X was created. For the demo, I am limiting the event types to two, CLIENT and CLIENT_LOGIN, but any event type can be analyzed the same way.

Event types:

- CLIENT: When a client is created in Keycloak.

- CLIENT_LOGIN: When a client_credentials grant request is made to the token endpoint.

Using these two events, we can determine when a client was created and when it requests a login. This information helps us identify which clients are active and supports further analysis.
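Under the hood, the two event types arrive through different channels: creating a client is an admin event with resource type CLIENT and operation CREATE, while a client_credentials grant is a regular event of type CLIENT_LOGIN. The stdlib-only Java sketch below shows one way a listener could route each to the topic names used in this post. `EventRouter` and its methods are hypothetical, take the type names as plain strings instead of Keycloak's enums, and are not the code from the repository.

```java
import java.util.Optional;

/** Hypothetical router: decides which Kafka topic, if any, an event belongs to. */
final class EventRouter {
    static final String CLIENT_TOPIC = "CLIENT";
    static final String CLIENT_LOGIN_TOPIC = "CLIENT_LOGIN";

    private EventRouter() {}

    /** Admin events: only client creation is published in this demo. */
    static Optional<String> topicForAdminEvent(String resourceType, String operationType) {
        if ("CLIENT".equals(resourceType) && "CREATE".equals(operationType)) {
            return Optional.of(CLIENT_TOPIC);
        }
        return Optional.empty();
    }

    /** Regular (login) events: only the client_credentials login is published. */
    static Optional<String> topicForEvent(String eventType) {
        return "CLIENT_LOGIN".equals(eventType)
            ? Optional.of(CLIENT_LOGIN_TOPIC)
            : Optional.empty();
    }
}
```

Returning `Optional.empty()` for everything else keeps the filtering decision in one place, so analyzing another event type means adding one mapping and one topic.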

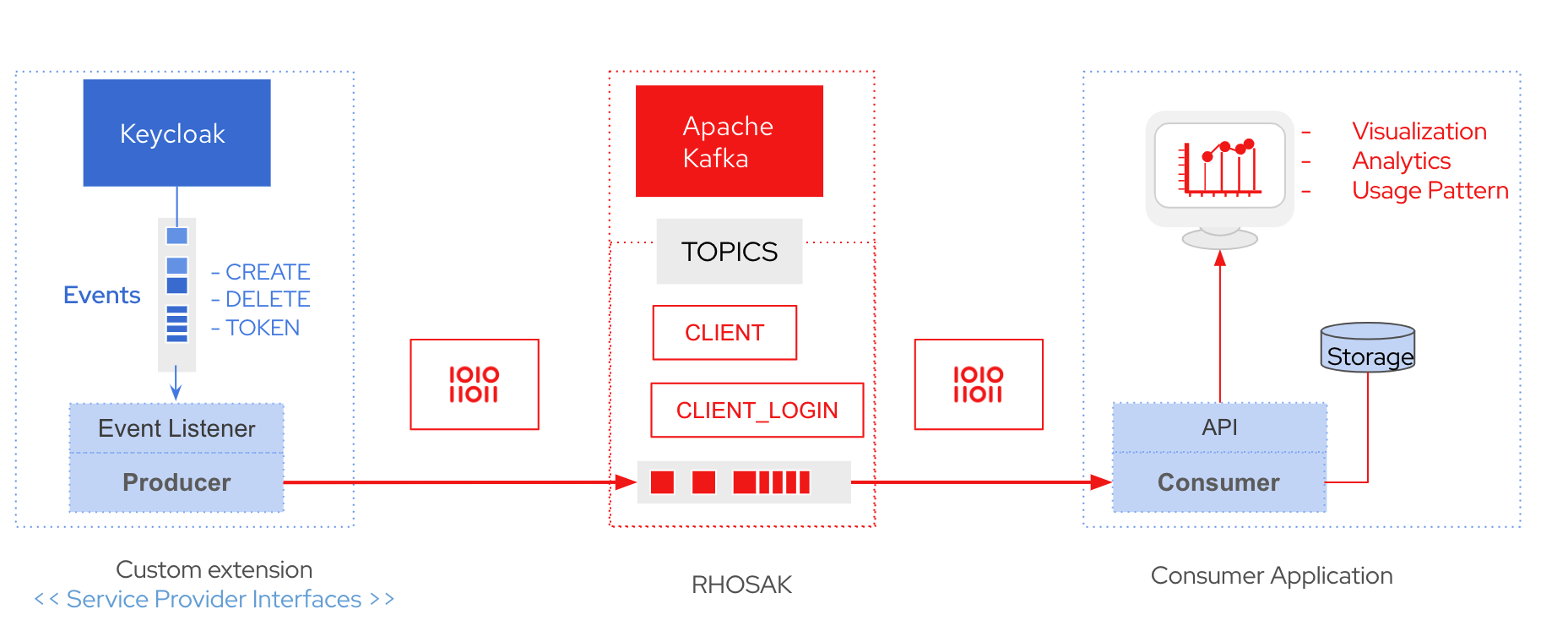

Architecture Overview

Custom Extension: The SPI Involves Two Components

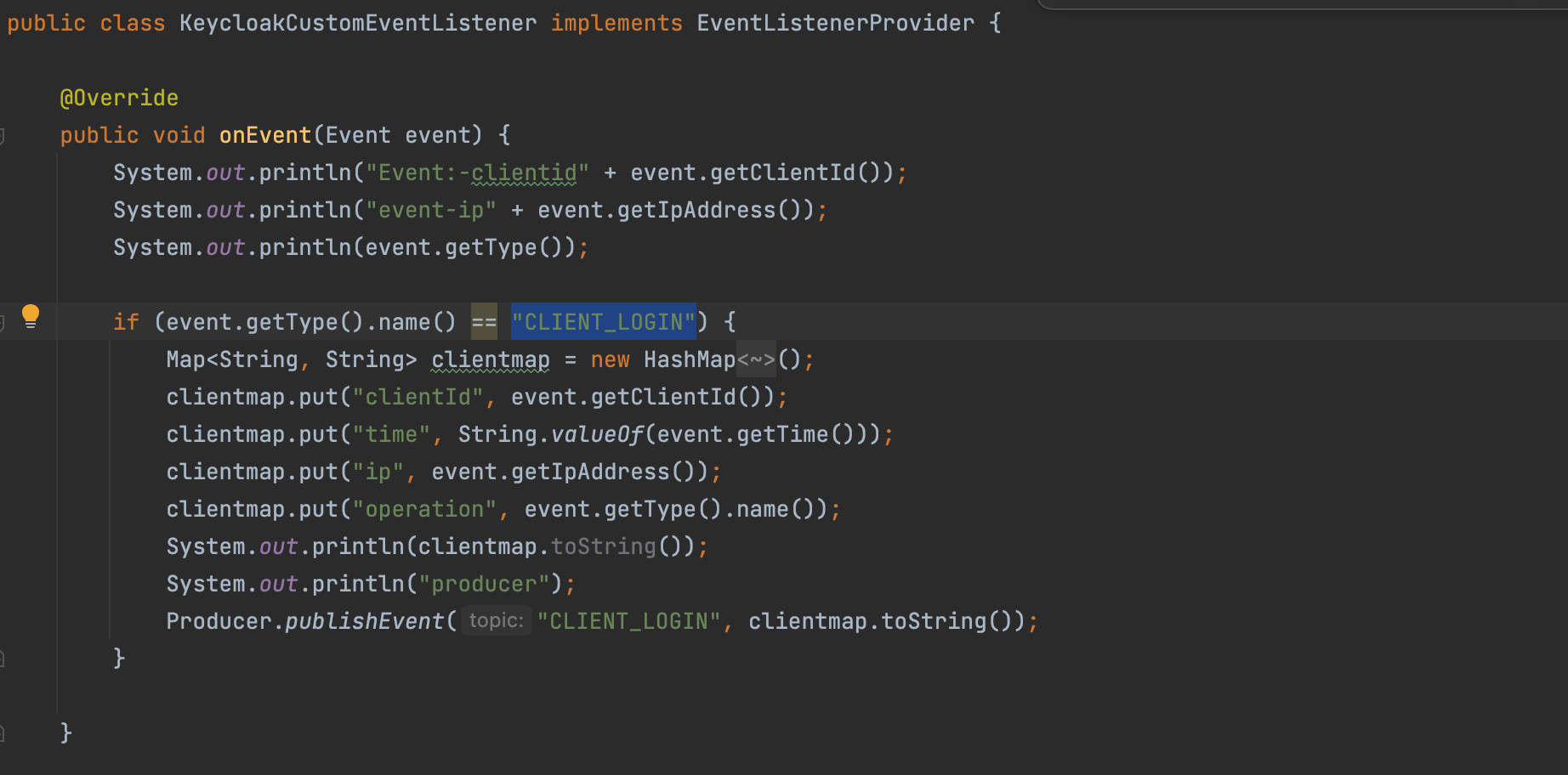

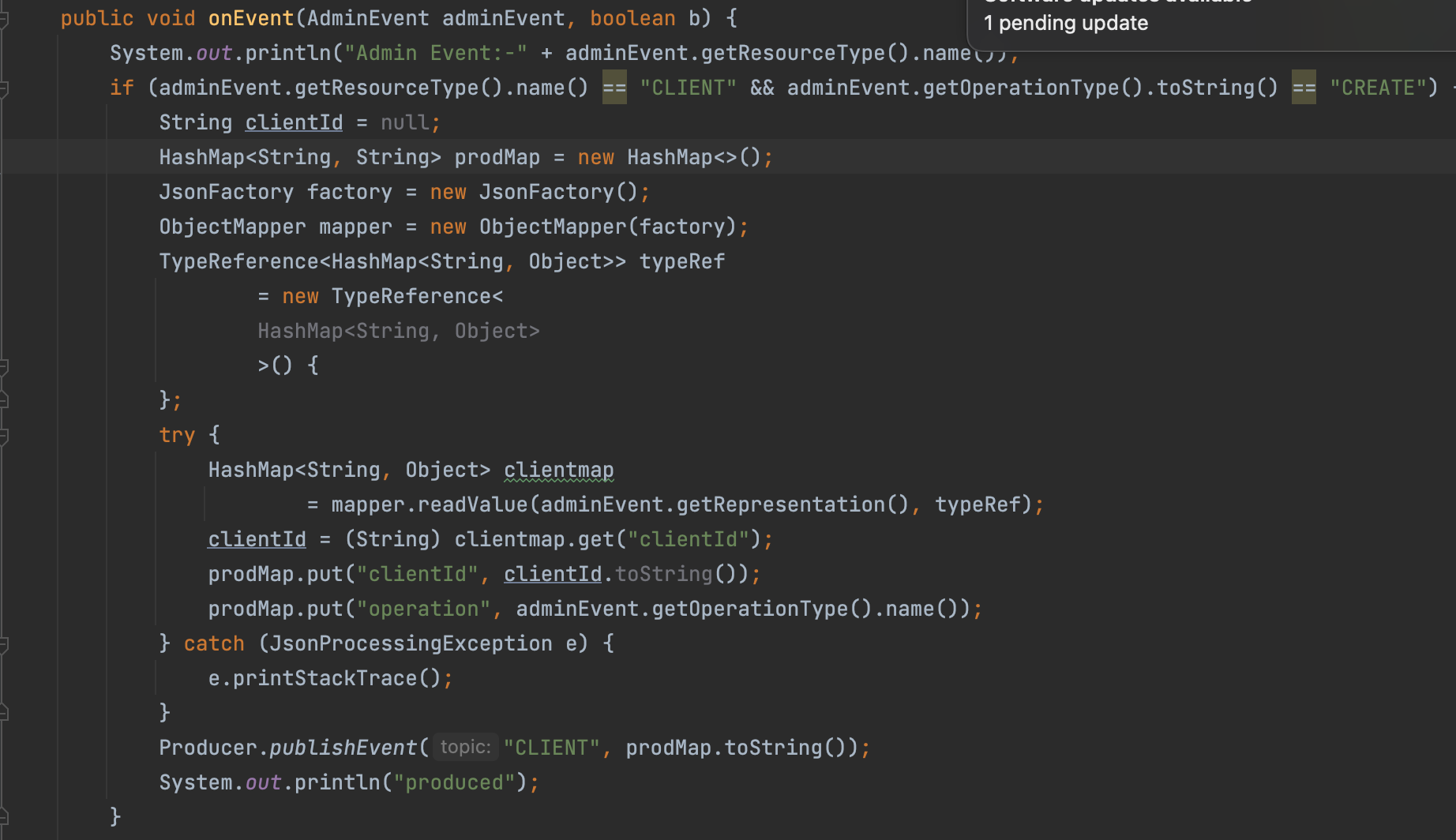

- Custom Event Listener: The event listener provider receives Keycloak events, such as login events and admin events, and passes them to the producer component.

- Producer: The producer publishes the Keycloak events to the configured Kafka broker.
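In code, this split can be as simple as the listener depending on a small publisher interface. The sketch below uses hypothetical `EventPublisher`, `RecordingPublisher`, and `ForwardingListener` types with an in-memory publisher so it runs without Keycloak or Kafka; in the real SPI the listener would implement Keycloak's `EventListenerProvider`, and the publisher would presumably wrap a Kafka producer.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical abstraction over the producer component. */
interface EventPublisher {
    void publish(String topic, String key, String payload);
}

/** In-memory stand-in for a Kafka producer: records what would be sent. */
final class RecordingPublisher implements EventPublisher {
    final List<String> sent = new ArrayList<>();

    @Override
    public void publish(String topic, String key, String payload) {
        sent.add(topic + "|" + key + "|" + payload);
    }
}

/** Hypothetical listener: filters events and hands relevant ones to the publisher. */
final class ForwardingListener {
    private final EventPublisher publisher;

    ForwardingListener(EventPublisher publisher) {
        this.publisher = publisher;
    }

    /** Forwards only CLIENT_LOGIN events, keyed by client ID. */
    void onEvent(String type, String clientId, String json) {
        if ("CLIENT_LOGIN".equals(type)) {
            publisher.publish("CLIENT_LOGIN", clientId, json);
        }
    }
}
```

Keying records by client ID is one design choice worth considering: Kafka preserves ordering only within a partition, and records with the same key go to the same partition, so each client's events are consumed in order.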

Apache Kafka

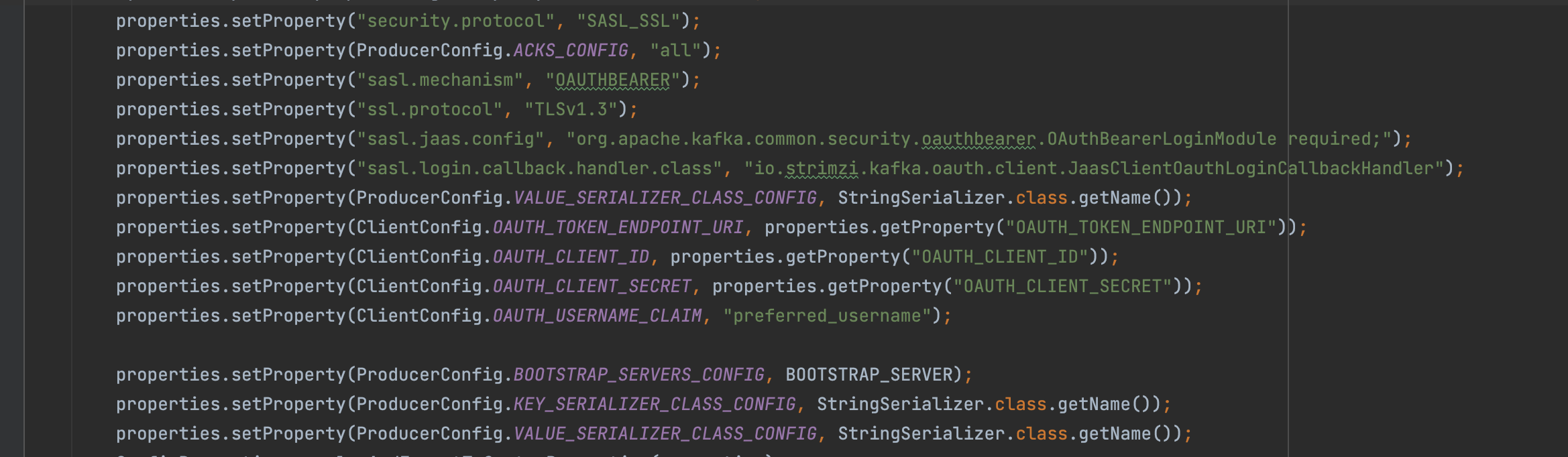

- I will be using Red Hat OpenShift Streams for Apache Kafka, where you can create a free developer instance in a few clicks. It supports secure protocols such as SASL/OAUTHBEARER. You can also use a local Kafka instance, but it will require additional configuration.

- Options: Red Hat OpenShift Streams for Apache Kafka (a fully hosted and managed Apache Kafka service), or download Apache Kafka and run it yourself.


Quarkus Consumer Application

- A custom consumer application built with Quarkus, which stores the events in a PostgreSQL database.

- Exposes a REST endpoint for further analysis, such as client login events.

Setup

- Java 11 / Maven

- Git/GitHub

- Keycloak 17 (Quarkus based)

- Apache Kafka or Red Hat OpenShift Streams for Apache Kafka: A fully hosted and managed Apache Kafka service

- Docker/Podman

Code Repository

$ git clone https://github.com/akoserwal/keycloak-integrations.git

$ cd keycloak-integrations/keycloak-spi-rhosak

$ ls

-> keycloak-event-listener-spi-and-kafka-producer

-> mk-consumer-app

Let's Get Started

- Set up a Kafka cluster in Red Hat OpenShift Streams for Apache Kafka.

- In a few minutes, the instance will be up and running.

- Create two Kafka topics: CLIENT and CLIENT_LOGIN.

- Under Connection Details, click Create service account.

- Configure access management so that the service account created in the previous step has the correct ACLs, for example permission to produce to and consume from the CLIENT and CLIENT_LOGIN topics.

Configure Custom SPI: keycloak-event-listener-spi-and-kafka-producer

Configure: application.properties

bootstrap=<bootstrap url>

OAUTH_CLIENT_ID=<scrv-acc>

OAUTH_CLIENT_SECRET=<client-secret>

These settings enable secure communication using the SASL OAUTHBEARER security mechanism, which Red Hat OpenShift Streams for Apache Kafka supports out of the box.
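These three values ultimately become Kafka producer settings. The sketch below builds a `java.util.Properties` object with standard Kafka client keys (`bootstrap.servers`, `security.protocol`, `sasl.mechanism`, `sasl.jaas.config`). It assumes the Strimzi OAuth client library supplies the login callback handler and its `oauth.*` JAAS options; `KafkaClientConfig` is a hypothetical name, the token endpoint is a placeholder you must supply, and the repository may wire these values differently.

```java
import java.util.Properties;

/** Hypothetical helper: Kafka producer settings for SASL/OAUTHBEARER. */
final class KafkaClientConfig {
    private KafkaClientConfig() {}

    static Properties producerProps(String bootstrap, String clientId,
                                    String clientSecret, String tokenEndpoint) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrap);
        // TLS transport with SASL authentication on top
        props.put("security.protocol", "SASL_SSL");
        props.put("sasl.mechanism", "OAUTHBEARER");
        // Service-account credentials passed as JAAS options (Strimzi OAuth naming, assumed)
        props.put("sasl.jaas.config",
            "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required"
            + " oauth.client.id=\"" + clientId + "\""
            + " oauth.client.secret=\"" + clientSecret + "\""
            + " oauth.token.endpoint.uri=\"" + tokenEndpoint + "\";");
        // Login callback handler from the Strimzi OAuth client library (assumption)
        props.put("sasl.login.callback.handler.class",
            "io.strimzi.kafka.oauth.client.JaasClientOauthLoginCallbackHandler");
        // Events are sent as string key/value pairs
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }
}
```

Passing the result to `new KafkaProducer<String, String>(props)` would then authenticate with the service account; that step needs the kafka-clients and Strimzi OAuth jars on the classpath, so it is left out here.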

Build the JAR

mvn clean package

Deploy SPI to Keycloak 17+ (Quarkus)

Copy the bundled jar to `keycloak/providers`

cp keycloak-event-listener-spi-and-kafka-producer.jar /keycloak-x.x.x/providers

Start the Keycloak Server

bin/kc.sh start-dev --http-port 8181 --spi-event-listener-keycloak-custom-event-listener-enabled=true --spi-event-listener-keycloak-custom-event-listener=keycloak-custom

Arguments for enabling the SPI:

--spi-event-listener-keycloak-custom-event-listener-enabled=true

--spi-event-listener-keycloak-custom-event-listener=keycloak-custom

These values are derived from io.github.akoserwal.KeycloakCustomEventListenerProviderFactory.

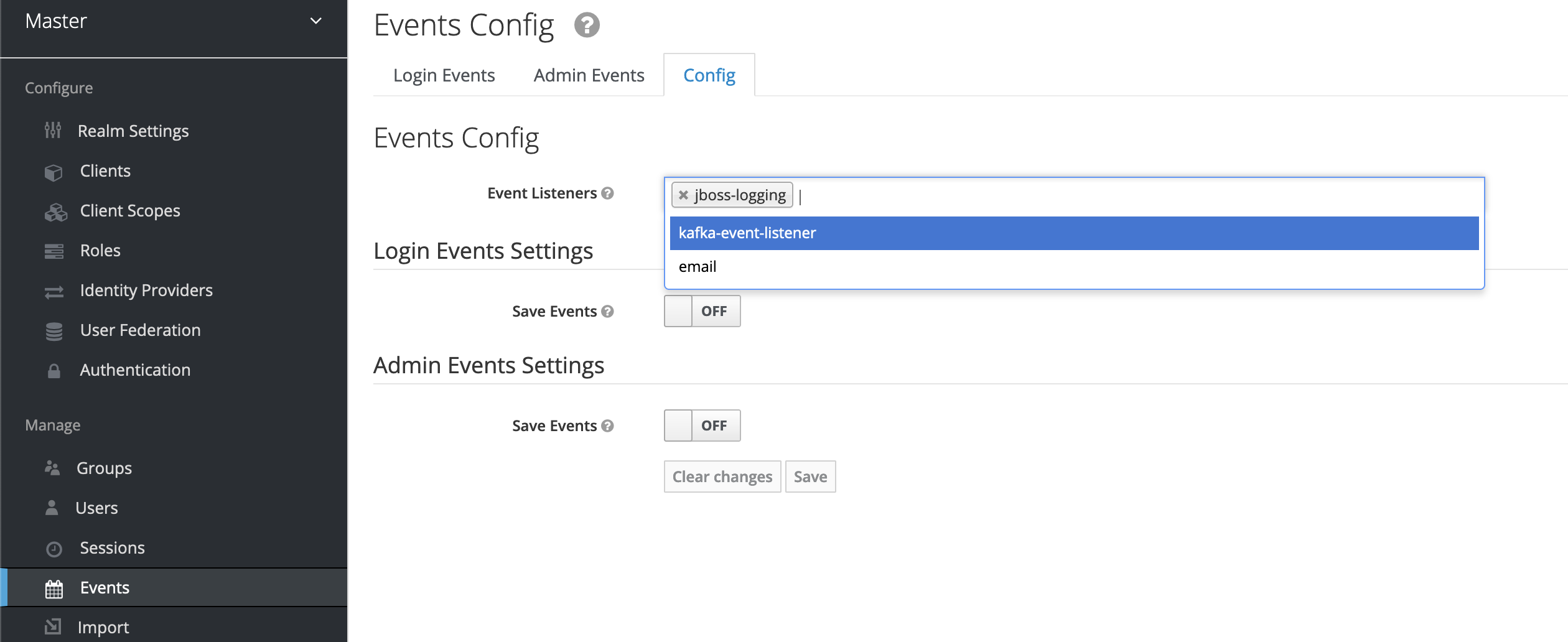

Enable the SPI in the Event Config Section

- Log in to Keycloak: http://localhost:8181

- Under Events Config, enable the event listener `kafka-event-listener`.

- Turn on "Save Events" in both the Login Events and Admin Events settings.

- Click Save.

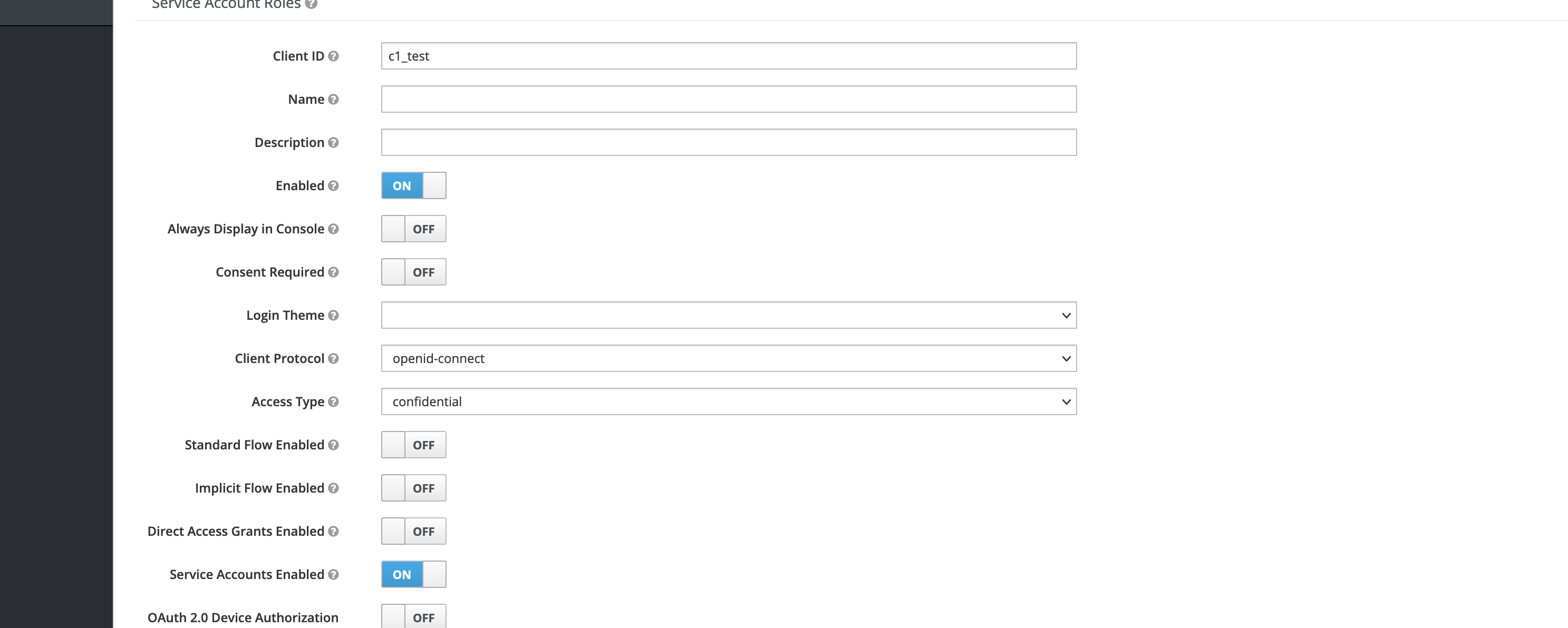

- Create a client with access type confidential and service accounts enabled.

- Creating the client raises an admin event, which the SPI publishes to the CLIENT topic.
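A CLIENT_LOGIN event only requires a client_credentials request against the realm's token endpoint, which is what the curl command below sends. For readers who prefer to script it, the same request can be built with the JDK's `java.net.http` client (Java 11+). `ClientCredentialsLogin` is a hypothetical name; the URL and `c2_test` client ID mirror the curl command, and the secret is a placeholder.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: sends an OAuth 2.0 client_credentials grant to Keycloak. */
final class ClientCredentialsLogin {
    private ClientCredentialsLogin() {}

    /** URL-encodes the same three form fields that the curl --data string carries. */
    static String formBody(String clientId, String clientSecret) {
        return "grant_type=client_credentials"
            + "&client_id=" + URLEncoder.encode(clientId, StandardCharsets.UTF_8)
            + "&client_secret=" + URLEncoder.encode(clientSecret, StandardCharsets.UTF_8);
    }

    /** Builds the POST request without sending it. */
    static HttpRequest tokenRequest(String tokenUrl, String clientId, String clientSecret) {
        return HttpRequest.newBuilder(URI.create(tokenUrl))
            .header("Content-Type", "application/x-www-form-urlencoded")
            .POST(HttpRequest.BodyPublishers.ofString(formBody(clientId, clientSecret)))
            .build();
    }

    /** Sends the request; with the event listener enabled, each success should yield a CLIENT_LOGIN event. */
    static String requestToken(String tokenUrl, String clientId, String clientSecret) throws Exception {
        HttpResponse<String> response = HttpClient.newHttpClient()
            .send(tokenRequest(tokenUrl, clientId, clientSecret), HttpResponse.BodyHandlers.ofString());
        return response.body();
    }
}
```

Calling `requestToken("http://127.0.0.1:8181/realms/master/protocol/openid-connect/token", "c2_test", "<client-secret>")` twice should produce two login events, matching the counts discussed later.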

Create a "CLIENT_LOGIN" event using the service account:

curl -k --data "grant_type=client_credentials&client_id=c2_test&client_secret=<client-secret>" http://127.0.0.1:8181/realms/master/protocol/openid-connect/token

Quarkus Consumer Application

Follow the instructions to configure the consumer app (mk-consumer-app).

- Run the consumer application:

./mvnw quarkus:dev

The consumer app logs the CLIENT event whenever a client is created in Keycloak.

Exposed REST API:

- Endpoint: http://localhost:8081/service_accounts/

- Response: shows that the 'c2_test' client made two client credentials requests and that the 'c7' client was created.
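That response combines both topics: creation time from CLIENT and a count of CLIENT_LOGIN events. A minimal in-memory version of the aggregation could look like the sketch below. `ServiceAccountStats`, `Summary`, and the method names are hypothetical; the actual consumer persists events to PostgreSQL rather than keeping a map.

```java
import java.time.Instant;
import java.util.Collections;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical in-memory aggregation of CLIENT and CLIENT_LOGIN events per client. */
final class ServiceAccountStats {
    /** Per-client summary: creation time (null if not seen) and login count. */
    static final class Summary {
        Instant createdAt;
        int loginCount;
    }

    private final Map<String, Summary> byClient = new TreeMap<>();

    /** Handles a record from the CLIENT topic. */
    void onClientCreated(String clientId, Instant at) {
        byClient.computeIfAbsent(clientId, id -> new Summary()).createdAt = at;
    }

    /** Handles a record from the CLIENT_LOGIN topic. */
    void onClientLogin(String clientId) {
        byClient.computeIfAbsent(clientId, id -> new Summary()).loginCount++;
    }

    /** Read-only view, sorted by client ID. */
    Map<String, Summary> snapshot() {
        return Collections.unmodifiableMap(byClient);
    }
}
```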

Analysis

The goal is to identify which clients/service accounts have been created and whether they are active during a given timeframe. The consumer stores both the login events and the client creation times, so further analysis can use either the exposed API or third-party tools.
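Determining whether a client was active in a timeframe reduces to filtering login timestamps against a window. The sketch below takes hypothetical `(clientId, timestamp)` rows, as they might be read back from the consumer's database, and returns the distinct clients with at least one login in the half-open window `[from, to)`. `ActiveClients` and `Login` are illustrative names only.

```java
import java.time.Instant;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical helper: which clients logged in within a time window. */
final class ActiveClients {
    /** One stored CLIENT_LOGIN row. */
    static final class Login {
        final String clientId;
        final Instant at;

        Login(String clientId, Instant at) {
            this.clientId = clientId;
            this.at = at;
        }
    }

    private ActiveClients() {}

    static Set<String> activeBetween(List<Login> logins, Instant from, Instant to) {
        Set<String> active = new TreeSet<>();
        for (Login login : logins) {
            // Include 'from', exclude 'to', so adjacent windows never double-count
            if (!login.at.isBefore(from) && login.at.isBefore(to)) {
                active.add(login.clientId);
            }
        }
        return active;
    }
}
```

Subtracting this set from the set of created clients gives the inactive service accounts, which are natural candidates for review or cleanup.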

- I am using cube.js for building a dashboard from the data source (PostgreSQL).

- Run it with Docker:

docker run -p 4000:4000 \
  -v ${PWD}:/cube/conf \
  -e CUBEJS_DEV_MODE=true \
  cubejs/cube

- Configure the schema using the PostgreSQL database.

- Build a query dashboard: select the schema fields and execute the query. The result shows how many client login attempts were made, grouped by week or by hour.
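The hourly view the dashboard produces amounts to truncating each login timestamp to the hour and counting per bucket. The plain-Java sketch below illustrates that bucketing idea with a hypothetical `HourlyLogins` helper; it is not how cube.js computes its queries internally.

```java
import java.time.Instant;
import java.time.temporal.ChronoUnit;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: counts login timestamps per UTC hour. */
final class HourlyLogins {
    private HourlyLogins() {}

    static Map<Instant, Long> countByHour(List<Instant> loginTimes) {
        Map<Instant, Long> counts = new TreeMap<>();
        for (Instant t : loginTimes) {
            // Instant carries no time zone, so this truncates to the UTC hour
            counts.merge(t.truncatedTo(ChronoUnit.HOURS), 1L, Long::sum);
        }
        return counts;
    }
}
```

A `TreeMap` keeps the buckets in chronological order, which is convenient when feeding them to a chart.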

Conclusion

In this post, you created a custom Keycloak extension that publishes specific events to a Kafka cluster, authenticating securely with SASL OAUTHBEARER. A consumer application listens to two event types, CLIENT and CLIENT_LOGIN, and stores them in a database, where they can be used to analyze usage patterns and identify which clients are actively used during a given timeframe. Thank you for reading.

