Introduction to Generative AI: Empowering Enterprises Through Disruptive Innovation

Leveraging deep learning models, generative AI exhibits a unique ability to interpret diverse input types and seamlessly generate novel content across various modalities.

Editor's Note: The following is an article written for and published in DZone's 2024 Trend Report, Enterprise AI: The Emerging Landscape of Knowledge Engineering.

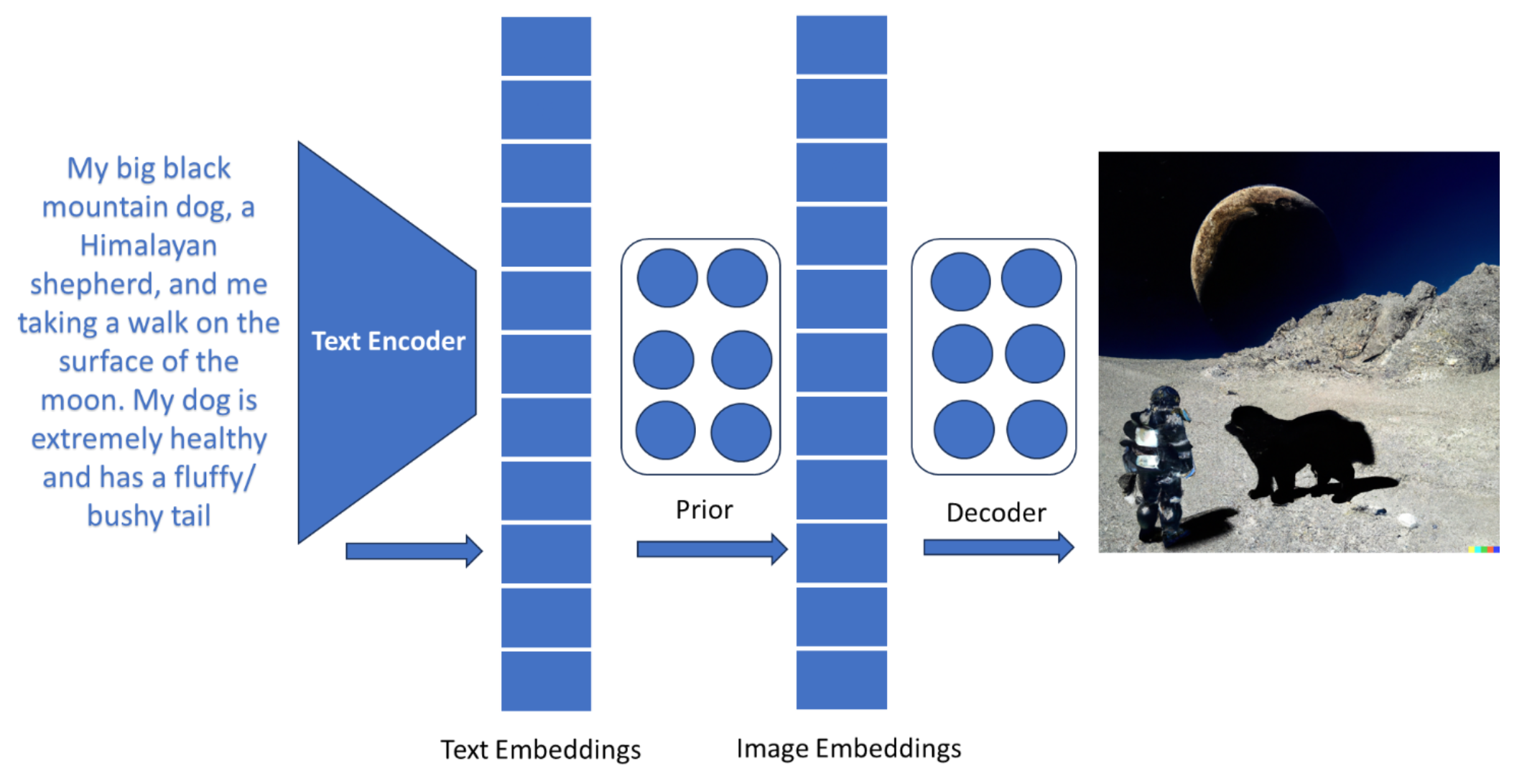

Generative AI, a subset of artificial intelligence (AI), stands as a transformative technology. Leveraging deep learning models, it exhibits a unique ability to interpret inputs spanning text, image, audio, video, or code and seamlessly generate novel content across various modalities. This innovation has broad applications, ranging from turning textual inputs into visual representations to transforming videos into textual narratives. Its proficiency lies in its capacity to generate high-quality and contextually relevant outputs, a testament to its potential in reshaping content creation. An example of this is found in Figure 1, which shows an application of generative AI where text prompts have been converted to an image.

Figure 1. DALL·E 2 generates an image from a text prompt

Journey of Generative AI

The journey of AI started a couple of centuries ago. Table 1 highlights the key milestones in the evolution of generative AI, covering significant launches and advancements over the years:

Table 1. Key milestones in the evolution of generative AI

| Major Launches | |
|---|---|
| 1805: First neural network (NN)/linear regression | 1997: Introduction of LSTM |
| 1925: First recurrent neural network (RNN) architecture | 2014: Variational autoencoder, GAN, GRU |
| 1958: Multi-layer perceptron (no deep learning) | 2017: Transformers |
| 1965: First deep learning | 2018: GPT, BERT |
| 1972: Published artificial RNNs | 2021: DALL·E |
| 1980: Release of autoencoders | 2022: Latent diffusion, DALL·E 2, Midjourney, Stable Diffusion, ChatGPT, AudioLM |
| 1986: Invention of backpropagation | 2023: GPT-4, Falcon, Bard, MusicGen, AutoGPT, LongNet, Voicebox, LLaMA |
| 1990: Introduction of GAN/Curiosity | 2024: Sora, Stable Cascade |
| 1995: Release of LeNet-5 | |

Generative AI Across Modalities

Generative AI spans various modalities, as listed in Table 2, showcasing its versatile capabilities:

Table 2. Generative AI modalities and major open-source tools

| Modality | Tools |
|---|---|
| Text | OpenAI GPT, Transformer models (TensorFlow, PyTorch), BERT (Google) |
| Code | CodeT5, PolyCoder |
| Image | StyleGAN (NVlabs), DALL·E (OpenAI), CycleGAN (junyanz), BigGAN (Google), Stable Diffusion, StableStudio, Waifu Diffusion |
| Audio | WaveNet (DeepMind), Tacotron 2 (Google), MelGAN (descriptinc) |
| 3D object | 3D-GANs, PyTorch3D |
| Video | Video Generation with GANs, Temporal Generative Adversarial Nets (TGANs) |

How Does Generative AI Work?

Generative AI draws on pathbreaking architectures such as transformer models, generative adversarial networks, and variational autoencoders to realize its full potential.

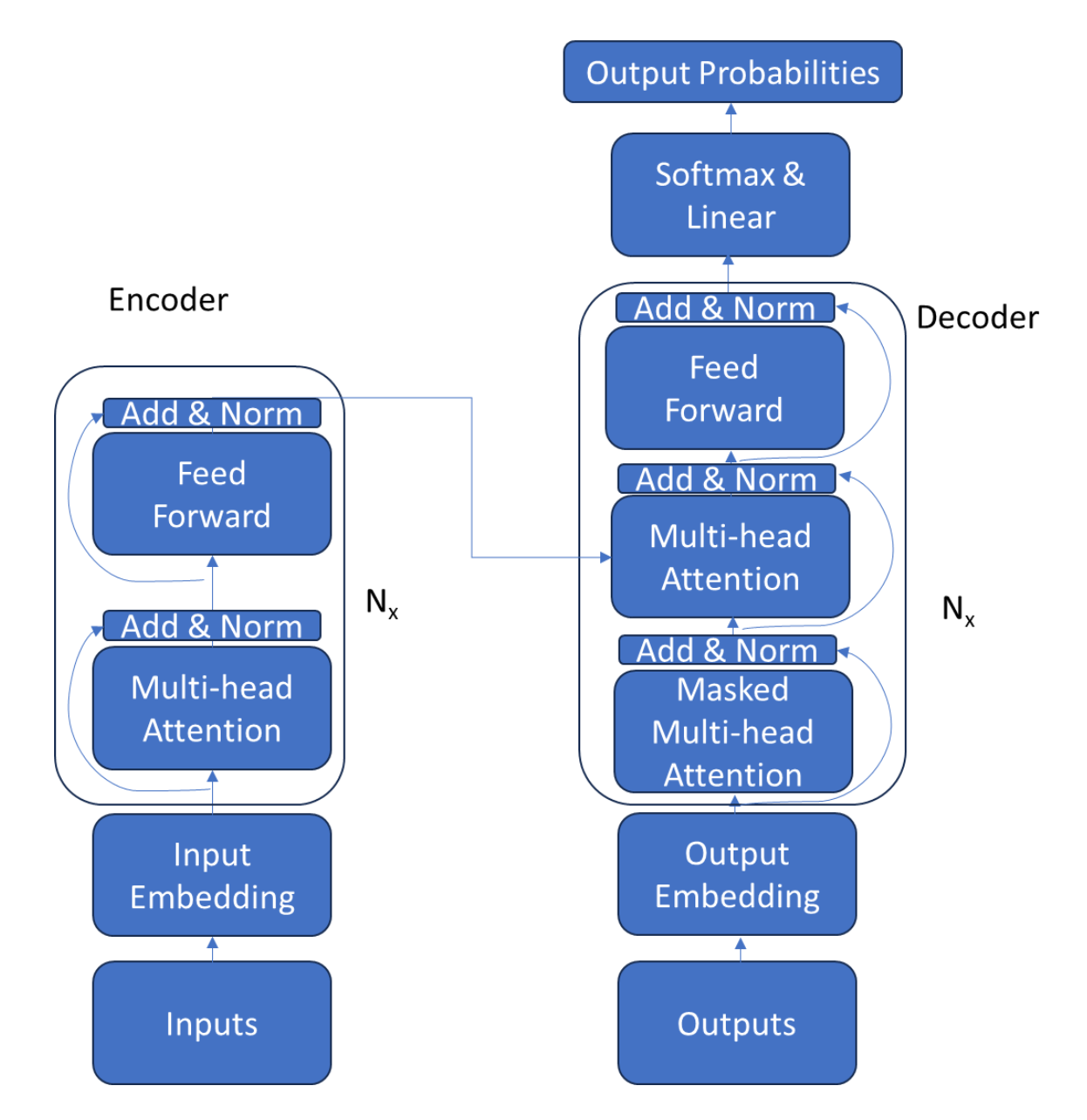

The Transformer Model

The transformer architecture relies on a self-attention mechanism, discarding the sequential processing constraints found in recurrent neural networks. The model's attention mechanism allows it to weigh input tokens differently, enabling the capture of long-range dependencies and improving parallelization during training. The original transformer consists of an encoder-decoder structure, with multiple layers of self-attention and feedforward sub-layers. Models like OpenAI's GPT series use decoder-only transformer architectures for autoregressive language modeling, where each token is generated based on the preceding context.

Self-attention can draw on context in both directions, as in encoder models like BERT, or only on preceding tokens, as in autoregressive models like GPT. Coupled with the ability to handle context dependencies effectively, this results in coherent and contextually relevant sequences, making transformers a cornerstone in the development of large language models (LLMs) for diverse generative applications like machine translation, text summarization, question answering, and text generation.
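To make the attention mechanism more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions: the toy embeddings are made up, and the raw embeddings are used directly as queries, keys, and values, whereas a real transformer applies learned projections and multiple attention heads. The `causal` flag restricts each position to the preceding context, as in autoregressive generation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over lists of equal-length vectors.

    With causal=True, position i may only attend to positions <= i.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # When causal, each position only sees itself and earlier positions.
        limit = i + 1 if causal else len(keys)
        scores = [
            sum(qc * kc for qc, kc in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(limit)
        ]
        # Masked (future) positions receive zero attention weight.
        weights = softmax(scores) + [0.0] * (len(keys) - limit)
        # Each output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy token embeddings (hypothetical values, for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens, causal=True)
```

Each row of `weights` shows how strongly one token attends to the others. Because every row is computed independently of the rest, the rows can be processed in parallel, which is the property that sets transformers apart from recurrent models.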

Figure 2. Transformer architecture

Generative Adversarial Networks

Comprising two neural networks, namely the discriminator and the generator, generative adversarial networks (GANs) operate through adversarial training to achieve unparalleled results in unsupervised learning. The generator, driven by random noise, endeavors to deceive the discriminator, which, in turn, aims to accurately distinguish between genuine and artificially produced data. This competitive interaction propels both networks toward continuous improvement, generating realistic and high-quality samples. GANs find versatility in a myriad of applications, notably in image synthesis, style transfer, and text-to-image synthesis.
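The adversarial objective can be sketched with two small loss functions. This is a simplified illustration, not a full training loop: the discriminator outputs below are hypothetical probabilities, and the generator loss shown is the commonly used non-saturating variant, which is an assumption since the article does not name a specific formulation.

```python
import math

def bce(prediction, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    eps = 1e-12
    p = min(max(prediction, eps), 1.0 - eps)  # avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and generated ones scored 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that a sample is real.
d_real = [0.9, 0.8]
fooled = [0.7, 0.6]  # discriminator mostly believes the generated samples
caught = [0.1, 0.2]  # discriminator mostly rejects the generated samples

print(generator_loss(fooled), generator_loss(caught))
print(discriminator_loss(d_real, fooled), discriminator_loss(d_real, caught))
```

The two losses pull in opposite directions: outputs that lower the generator's loss raise the discriminator's, which is the competitive interaction described above.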

Variational Autoencoders

Variational autoencoders (VAEs) are designed to capture and learn the underlying probability distribution of input data, enabling them to generate new samples that share similar characteristics. The architecture of a VAE consists of an encoder network, responsible for mapping input data to a latent space, and a decoder network, which reconstructs the input data from the latent space representation.

A key feature of VAEs lies in their ability to model the uncertainty inherent in the data by learning a probabilistic distribution in the latent space. This is achieved through the introduction of a variational inference framework, which incorporates a probabilistic sampling process during training. Their applications span various domains, including image and text generation, and data representation learning in complex high-dimensional spaces.
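The probabilistic sampling step is usually implemented with the reparameterization trick, paired with a KL-divergence term that keeps the latent distribution close to a standard normal prior. The sketch below assumes a diagonal Gaussian latent space and uses made-up encoder outputs. It omits the encoder and decoder networks and the reconstruction loss.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mu, log_var)
    ]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(
        m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var)
    )

rng = random.Random(0)
# Hypothetical encoder outputs for one input: mean and log-variance.
mu, log_var = [0.5, -1.0], [0.0, -2.0]
z = reparameterize(mu, log_var, rng)   # latent sample passed to the decoder
kl = kl_to_standard_normal(mu, log_var)
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, so gradients can flow through `mu` and `log_var` during training.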

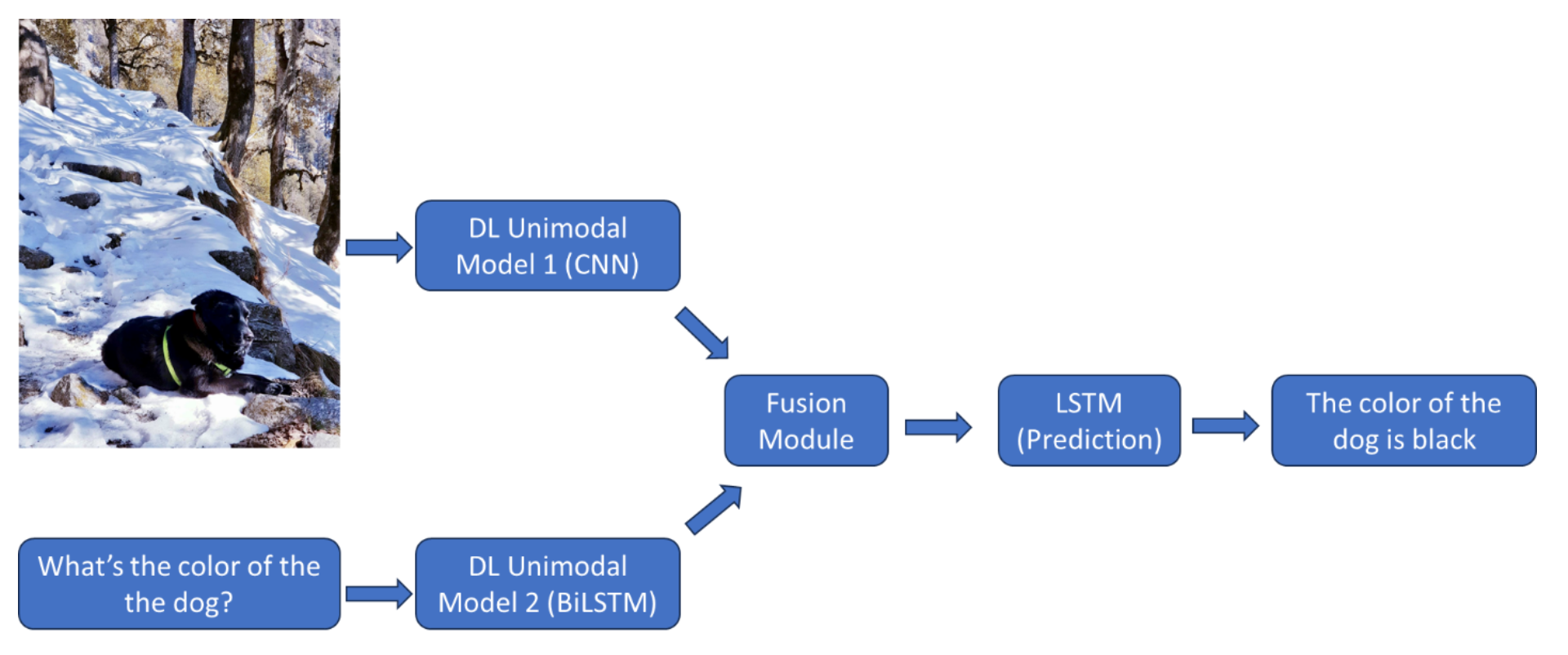

Figure 3. Q/A generation from image

The State of the Art

Generative AI, with its disruptive innovation, leaves a profound impact across the industry.

Generative Use Cases and Applications

Generative AI exhibits a broad range of applications across various industries, revolutionizing processes and fostering innovation. Table 3 showcases how it is reshaping various industries:

Table 3. Applications of generative AI across industries

| Sector | Applications |
|---|---|
| Healthcare | Medical image generation and analysis, drug discovery, personalized treatment plans |
| Finance | Personalized risk assessment and financial advice, compliance monitoring |
| Marketing | Content creation, ad copy generation, personalized marketing campaigns |
| Manufacturing | 3D model generation for product design |
| Retail | Personalized product recommendations, virtual try-on experiences |
| Education | Adaptive learning materials, content generation for e-learning platforms |
| Legal | Document summarization, contract drafting, legal research assistance |
| Entertainment | Scriptwriting assistance, video game content generation, music composition |
| Human resources | Employee training content generation |

The Business Benefits

Generative AI offers a myriad of business benefits, including the amplification of creative capabilities, empowering enterprises to autonomously produce expansive and innovative content. It creates significant time and cost efficiencies by automating tasks that previously required human intervention. Hyper-personalized experiences are achieved through customer data, generating recommendations and offers tailored to individual preferences.

Furthermore, generative AI enhances operational efficiency by automating intricate processes, optimizing workflows, and facilitating realistic simulations for training and entertainment. The technology's adaptive learning capabilities allow continuous improvement based on feedback and new data, culminating in refined performance over time. Lastly, generative AI elevates customer interaction with dynamic AI agents capable of providing responses that mimic human conversation, contributing to an enhanced customer experience.

Managing the Risks of Generative AI

Effectively managing the risks associated with the widespread adoption of generative AI is crucial as this technology transforms various business aspects. Ethical guidelines focused on accuracy, safety, honesty, empowerment, and sustainability provide a framework for responsible AI development. Integrating generative AI requires using reliable data, ensuring transparency, and maintaining a human-in-the-loop approach. Ongoing testing, oversight, and feedback mechanisms are essential to prevent unintended consequences.

Generative AI for Enterprises

This section delves into the key methodologies for enterprises to make a transformative leap in innovation and productivity.

Build Foundation Models

Foundation models (FMs) like BERT and GPT are trained on extensive, generalized, and unlabeled datasets, enabling them to excel in diverse tasks, including language understanding, text and image generation, and natural language conversation. These FMs serve as base models for specialized downstream applications and have evolved over the past decade to handle increasingly complex tasks. Their ability to adapt to the data supplied at inference time, for example through prompts, enhances their effectiveness, supporting tasks like language processing, visual comprehension, code generation, human-centered engagement, and speech-to-text applications.

Figure 4. Foundation model

Bring your own model (BYOM) is a commitment to amplifying the platform's versatility, fostering a collaborative environment, and propelling a new era of AI innovation. BYOM's promise lies in the freedom to innovate, offering a personalized approach to AI solutions that align with individual visions. Improving an existing model involves a multifaceted approach, encompassing fine-tuning, dataset augmentation, and architectural enhancements.

Fine-Tuning

While pre-trained language models offer the advantage of being trained on massive datasets and generating text akin to human language, they may not always deliver optimal performance in specific applications or domains. Fine-tuning involves updating pre-trained models with new information or data, allowing them to adapt to tasks or domains. Fine-tuning pre-trained models is crucial for achieving high accuracy and relevance in generating outputs, especially when dealing with specific and nuanced tasks within various domains.
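At its core, fine-tuning means continuing training from existing weights on new, task-specific data rather than starting from scratch. The toy sketch below illustrates this with a two-parameter linear model. The "pre-trained" weights and the domain data are hypothetical, and real fine-tuning of a language model involves far larger networks and dedicated tooling.

```python
def mse(w, b, data):
    """Mean squared error of the model y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, data, lr=0.05, steps=500):
    """Continue gradient descent from existing weights on new data."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical "pre-trained" weights, learned on general data where y = 2x.
pretrained_w, pretrained_b = 2.0, 0.0
# Hypothetical domain data that follows a shifted relationship, y = 2x + 1.
domain_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

loss_before = mse(pretrained_w, pretrained_b, domain_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
loss_after = mse(tuned_w, tuned_b, domain_data)
```

The model keeps what it already knew (the slope) and adjusts only what the new domain requires (the offset), which mirrors why fine-tuning typically needs much less data than training from scratch.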

Reinforcement Learning From Human Feedback

The primary objective of reinforcement learning from human feedback (RLHF) is to leverage human feedback to enhance the efficiency and accuracy of ML models, specifically those employing reinforcement learning methodologies to maximize rewards. The RLHF process involves stages such as data collection, supervised fine-tuning of a language model, building a separate reward model, and optimizing the language model against that reward model.
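The reward-model stage can be illustrated with a pairwise preference loss. The sketch below assumes a Bradley-Terry style objective, a common choice for reward models that the article does not itself specify. The reward scores are hypothetical numbers standing in for a reward model's outputs on a human-preferred response and a rejected one.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Return -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the reward model scores the human-preferred
    response well above the rejected one, and large when it does not.
    """
    margin = reward_chosen - reward_rejected
    return math.log(1.0 + math.exp(-margin))

# Hypothetical reward scores for two responses to the same prompt.
agrees_with_humans = pairwise_preference_loss(2.0, 0.0)
disagrees_with_humans = pairwise_preference_loss(0.0, 2.0)
print(agrees_with_humans, disagrees_with_humans)
```

Minimizing this loss over many labeled comparisons trains the reward model to rank responses the way human annotators do. The language model is then optimized to produce responses that score highly under it.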

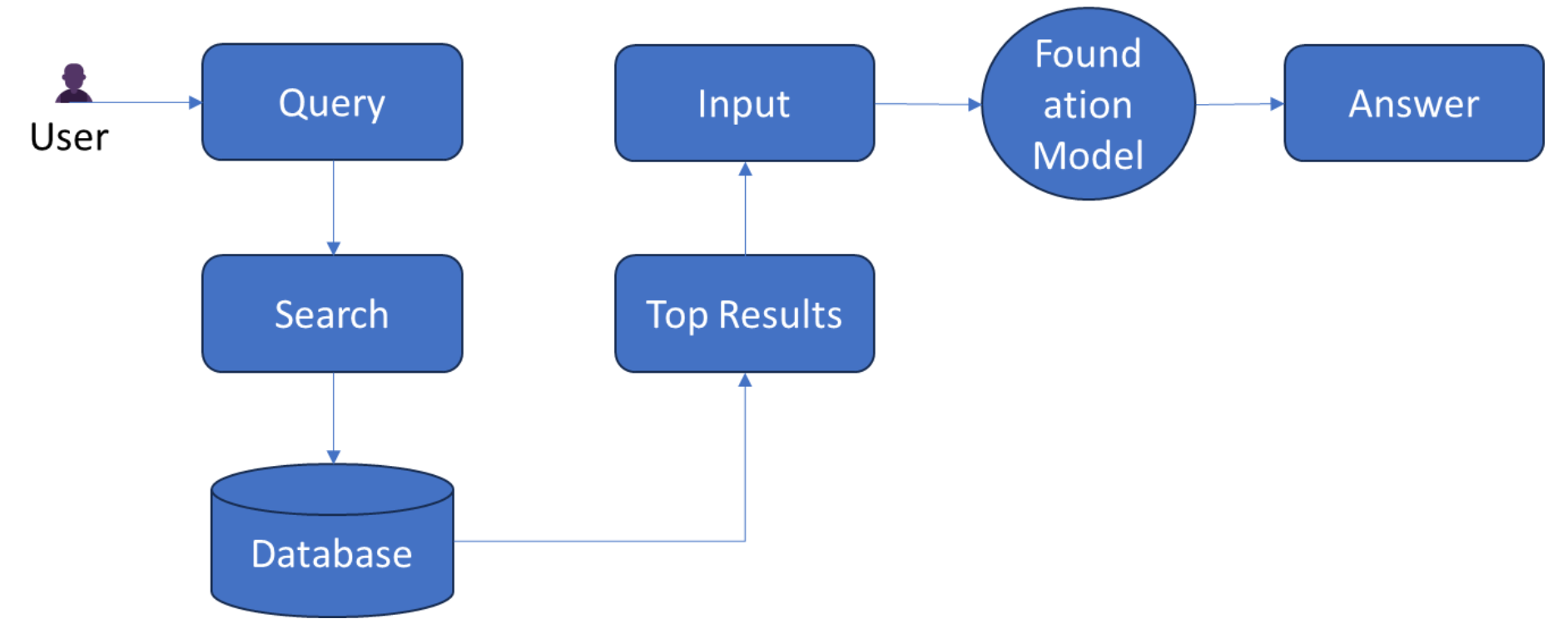

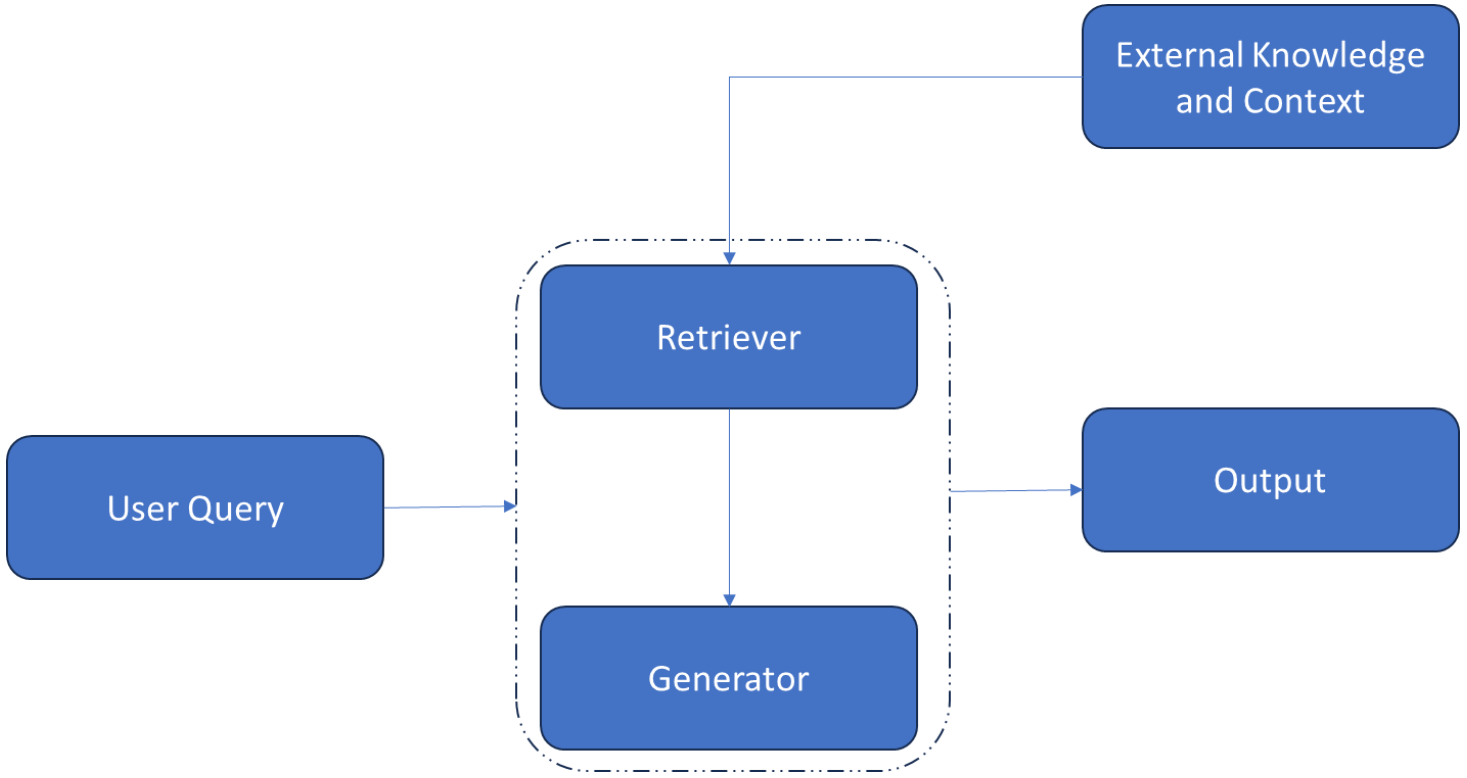

Retrieval-Augmented Generation

LLMs are instrumental in tasks like question-answering and language translation. However, inherent challenges, such as potential inaccuracies and the static nature of training data, can impact reliability and user trust. Retrieval-augmented generation (RAG) addresses these issues by seamlessly integrating domain-specific or organizational knowledge into LLMs, enhancing their relevance, accuracy, and utility without necessitating retraining.
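The retrieval step can be sketched without any external services. The example below is a deliberately simplified illustration: it uses bag-of-words counts and cosine similarity in place of learned embeddings and a vector database, the knowledge base entries are invented, and the final call to an LLM is left out. All function names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': bag-of-words token counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    """Prepend retrieved context to the question before it goes to an LLM."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Invented organizational knowledge, for illustration only.
knowledge_base = [
    "Expense reports must be filed within 30 days of travel.",
    "The VPN requires multi-factor authentication for all employees.",
    "Annual leave requests need manager approval two weeks ahead.",
]
question = "How many days do I have to file an expense report?"
prompt = build_prompt(question, knowledge_base)
print(prompt)
```

Because the knowledge lives in the document store rather than in model weights, updating it requires only adding or editing documents, not retraining.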

Figure 5. Retrieval-augmented generation

The Tech Stack

The LLMOps tech stack encompasses five key areas. Table 4 lists the key components of each area:

Table 4. LLMOps tech stack components

| Stack Area | Key Components |
|---|---|
| Data management | |
| Model management | |
| Model deployment | |
| Prompt engineering and optimization | |
| Monitoring and logging | |

Performance Evaluation

Quantitative methods offer objective metrics, using scores like inception score, Fréchet inception distance, or precision and recall for distributions to measure the alignment between generated and real data distributions. Qualitative methods rely on human judgment, employing techniques like visual inspection, pairwise comparison, or preference ranking to gauge the realism, coherence, and appeal of generated data. Hybrid methods integrate both quantitative and qualitative approaches, such as human-in-the-loop evaluation, adversarial evaluation, or Turing tests.
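As an illustration of a distribution-level metric, the sketch below computes the Fréchet distance between two Gaussians fitted to one-dimensional samples. This is a simplification under stated assumptions: the actual Fréchet inception distance applies the same idea to high-dimensional features extracted by an Inception network and uses full covariance matrices. The sample values here are made up.

```python
import statistics

def frechet_distance_1d(real, generated):
    """Squared Fréchet distance between Gaussians fitted to two 1-D samples.

    For univariate Gaussians this reduces to
    (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2.
    """
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2

# Made-up scalar "features" of real and generated samples.
real_features = [1.0, 2.0, 3.0]
close_match = [1.0, 2.0, 3.0]
shifted = [2.0, 3.0, 4.0]

print(frechet_distance_1d(real_features, close_match))
print(frechet_distance_1d(real_features, shifted))
```

Lower values indicate that the generated distribution is closer to the real one, and identical samples give a distance of zero.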

What's Next? The Future of Generative AI

Looking at the future of generative AI, three transformative avenues stand prominently on the horizon.

The Genesis of Artificial General Intelligence

The advent of artificial general intelligence (AGI) heralds a transformative era. AGI aims to surpass current AI limitations, allowing systems to excel in tasks beyond predefined domains. It distinguishes itself through autonomous self-control, self-understanding, and the ability to acquire new skills akin to human problem-solving capacities. This juncture marks a critical moment in the pursuit of AGI, envisioning a future where AI systems possess generalized human cognitive abilities and transcend current technological limitations.

Integrating Perceptual Systems Through Human Senses

Sensory AI stands at the forefront of generative AI evolution. Beyond computer vision, sensory AI encompasses touch, smell, and taste, aiming for a nuanced, human-like understanding of the world. The emphasis on diverse sensory inputs, including tactile sensing, olfactory, and gustatory AI, signifies a move toward human-like interaction and recognition capabilities.

Computational Consciousness Modeling

Focused on attributes like fairness, empathy, and transparency, computational consciousness modeling (CoCoMo) employs consciousness modeling, reinforcement learning, and prompt template formulation to instill knowledge and compassion in AI agents. CoCoMo guides generative AI toward a future where ethical and emotional dimensions seamlessly coexist with computational capabilities, fostering responsible and empathetic AI agents.

Parting Thoughts

This article covered generative AI from its foundational concepts to its diverse applications across modalities and delved into the underlying mechanisms, highlighting the power of the transformer model and the creativity of GANs and VAEs. The journey encompassed business benefits, risk management, and a forward-looking perspective on unprecedented advancements and the potential emergence of AGI, sensory AI, and artificial consciousness. Finally, readers are encouraged to contemplate the future implications and ethical dimensions of generative AI, acknowledging a transformative journey that presents both opportunities and responsibilities in integrating generative AI into our daily lives.

Repositories:

- A curated list of modern Generative Artificial Intelligence projects and services

- Home of CodeT5: Open Code LLMs for Code Understanding and Generation

- StableStudio

- GAN-based Mel-Spectrogram Inversion Network for Text-to-Speech Synthesis
