IBM Cloud: Deploying Payara Services on OpenShift

This article demonstrates how to deploy Payara Applications on OpenShift using S2I (Source to Image) Builders.


In order to deploy highly available and scalable applications and services, an organization needs to be able to manage container runtimes across multiple servers. This is the role of a container orchestration engine like Kubernetes. But building and scaling Kubernetes clusters to run containerized services can be hard, hence the rise of container platforms like OpenShift.

Originally launched by Red Hat in 2011 as a hybrid cloud application platform, OpenShift is now part of the IBM portfolio, following IBM's acquisition of Red Hat, announced in October 2018 and completed in July 2019. Built on the RHEL (Red Hat Enterprise Linux) operating system and the Kubernetes container orchestration engine, OpenShift supports a very large selection of programming languages and frameworks, such as Java, JavaScript, Python, Ruby, PHP, and many others.

Jakarta EE, as the most adopted Java enterprise-grade framework, is of course supported by OpenShift in the form of WildFly, EAP (Enterprise Application Platform, formerly JBoss), OpenLiberty, or Quarkus-based pre-built platforms. Despite the wealth of supported programming languages and frameworks, not all Jakarta EE vendor solutions are included in the out-of-the-box OpenShift services catalog. Payara is one of those missing.

While the Java enterprise developer can deploy Jakarta EE applications on OpenShift in a couple of clicks through integrations like WildFly, EAP, OpenLiberty, and Quarkus, this isn't the case for Payara Platform applications, whose deployment requires a bit more work. This article demonstrates how.

The OpenShift Developer's Sandbox

The OpenShift Developer's Sandbox is a free and very convenient online platform that gives immediate access to the full set of OpenShift features. Once an account is created, the developer can access the OpenShift environment to create projects and applications, and to deploy and scale them in or out at will, by means of either the web console or the CLI (command-line interface) client. It's this second approach that we'll be using in this post.

Installing the CLI client, named oc, is simple:

- At this link, select the version of the CLI client associated with your operating system.

- Unzip the downloaded archive and make sure that you update the PATH environment variable so that it includes the location of the oc command.
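The PATH update from the last step can be sketched as follows. This is only an illustration: add_to_path is a hypothetical helper, and the directory $HOME/bin/oc-client is an assumed location for the unpacked archive, so adapt it to wherever you extracted the oc binary.

```shell
# Prepend a directory to PATH unless it is already listed there.
# add_to_path is a hypothetical helper, not part of the oc distribution.
add_to_path() {
  case ":${PATH}:" in
    *":$1:"*) ;;                 # already present, nothing to do
    *) PATH="$1:${PATH}" ;;      # otherwise put it in front
  esac
  export PATH
}

# Assumed layout: the archive was unpacked into $HOME/bin/oc-client.
add_to_path "${HOME}/bin/oc-client"
```

To make the change permanent, the same call can be placed in your shell's startup file.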

Once the oc command is installed, you can use it to log in. Proceed as follows:

- In the upper-right corner of your OpenShift web console, click on your user name and select the option "Copy login command."

- A new dialog box labeled "Log in with ..." will be displayed. Click on the button "Dev sandbox."

- A new HTML page, with a link labeled "Display Token" in its upper-left corner, will be shown. Click this link.

- An HTML page similar to the one below will be presented to you.

Use the oc login command displayed on that page to log in to the OpenShift Developer's Sandbox:

$ oc login --token=sha256~RUV3GD6k18GULtRHIHzE-81TWBxIkA2ckigyUg_wlF4 --server=https://api.sandbox.x8i5.p1.openshiftapps.com:6443

Logged into "https://api.sandbox.x8i5.p1.openshiftapps.com:6443" as "nicolasduminil" using the token provided.

You have one project on this server: "nicolasduminil-dev"

Using project "nicolasduminil-dev".

The token shown above is short-lived, and, consequently, displaying it here doesn't have any security impact. From now on, you're logged in to the OpenShift Developer's Sandbox and you can use any other oc command against your account.

Building Applications on OpenShift

The key to building applications on OpenShift is to use builder images (BI). A BI is a special container image that includes all the binaries and libraries for a given framework or programming language. For example, the OpenShift Developer's Sandbox contains BIs for application servers like WildFly and EAP or for Java stacks like OpenLiberty and Quarkus.

The build process consists of combining an application's source code with a dedicated BI and creating a so-called custom application image (CAI). Once created, a CAI is stored in a registry, from which it can be quickly deployed and served to users.

Accordingly, in order to deploy Payara applications on OpenShift, we need to have a dedicated Payara BI. OpenShift provides out-of-the-box BIs for WildFly, EAP, OpenLiberty, or Quarkus. As for the Payara Platform, we need to provide our own, and to do that, we need to understand the components of an application deployed on OpenShift, as follows:

- Custom container images (CCI)

- Image streams (IS)

- Pods

- Build configs (BC)

- Deployment configs (DC)

- Deployments

- Services

Let's briefly clarify these concepts.

Custom Container Images (CCI)

As explained previously, in order to deploy a Payara Platform application on OpenShift, we need to construct a Payara CCI. This operation consists of building a Docker image that contains our application code together with all the Payara Platform binaries and libraries. For that purpose, the OpenShift platform provides a dedicated tool, named S2I (Source to Image), which is able to construct a custom image from the following two components:

- A dedicated BI, in our case a specific one for Payara

- The CAI

The whole process is under the control of a component called build config (BC).

Build Config (BC)

A BC contains all the information needed to build the CAI from the application's source code, for example:

- Application URL

- Name of the BI to be used

- Name of the CAI to be created

- Events that might trigger new builds

The information included in the build config is used by the deployment process, described by the deployment config (DC).

Deployment Config (DC)

The DC component handles operations like deploying and updating an application. It contains the following elements:

- The version of the currently deployed application

- Number of replicas

- Events triggering a redeployment

- Upgrade strategy

The information provided by the DC is further used by the deployment components.

Deployments

A deployment represents a unique version of an application. It is created by the deployment config and the build config components presented above.

Image Streams (IS)

An IS is a pointer to one or more container images. Image streams serve to automate actions in OpenShift by tracking these images and triggering new deployments whenever they are updated.

Deploying Payara Applications on OpenShift

Having briefly defined the concepts we need in order to deploy Payara applications on OpenShift, let's see how they can be used to implement a workflow that produces CCIs.

We briefly mentioned S2I as a framework that helps create new CCIs by combining BIs with CAIs. As the documentation explains, the S2I process relies on scripts that support its lifecycle phases. The most important are:

- assemble: This script builds the application artifacts from the source and places them in the appropriate directories inside the image.

- run: This script executes the application.

- usage: This script informs the user how to properly use the image.

- Other optional scripts like save-artifacts and test/run: These scripts won't be used in our example.

Following the structure described above, we can imagine a Docker image defined by the following Dockerfile:

FROM nicolasduminil/payara6-micro-s2i-builder:latest

LABEL maintainer="Nicolas DUMINIL, nicolas.duminil@simplex-software.fr"

ENV BUILDER_VERSION 0.1

LABEL io.k8s.description="Payara 6 Micro S2I Image" \

io.k8s.display-name="Payara 6 Micro S2I Builder" \

io.openshift.expose-services="8080:http" \

io.openshift.tags="builder,nicolasduminil,payara,jakarta ee,microprofile"

COPY ./s2i/bin/ /usr/libexec/s2i

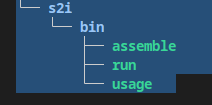

USER 1001The Docker image obtained by building the Dockerfile above may be found on Dockerhub, as well as here on GitHub. It doesn't do much, as you may see, besides extending the base image nicolasduminil/payara6-micro-s2i-builder, defining some useful labels, and copying to /usr/libexec/s2i the content of the directory s2i/bin. The content of this directory is shown below:


Here we find our scripts assemble, run and usage, as described. For example, let's have a look at the assemble script:

#!/bin/bash -e

#

cp -f /tmp/src/*.war ${DEPLOYMENT_DIR}

echo "java -jar ${INSTALL_DIR}/${PAYARA_JAR} --deploymentDir ${DEPLOYMENT_DIR}" > ${INSTALL_DIR}/start.sh

chmod a+rwx ${INSTALL_DIR}/start.sh

As an opinionated tool, S2I leverages the "convention over configuration" principle, and one of these conventions is that the application source is provided in the /tmp/src directory. Hence, our script copies the application WAR from the source directory to the deployment directory and creates a shell file, named start.sh, which runs the Payara server against the deployment directory containing the application WAR.
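To see what assemble produces without building an image, its steps can be replayed in a scratch directory. The following is a minimal sketch under stated assumptions: SRC_DIR stands in for /tmp/src, WORK_DIR is a temporary directory, payara-micro.jar is a placeholder file name, and the WAR is an empty stand-in file. Only the three commands in the middle mirror the script above.

```shell
# Hypothetical dry run of the assemble convention in a scratch directory.
# SRC_DIR stands in for /tmp/src; the other names mirror the variables
# used by the scripts above. payara-micro.jar is a placeholder name.
WORK_DIR="$(mktemp -d)"
SRC_DIR="${WORK_DIR}/src"
INSTALL_DIR="${WORK_DIR}/opt"
DEPLOYMENT_DIR="${INSTALL_DIR}/deploy"
PAYARA_JAR="payara-micro.jar"

mkdir -p "${SRC_DIR}" "${DEPLOYMENT_DIR}"
: > "${SRC_DIR}/test.war"        # empty stand-in for the real WAR

# Same steps as assemble: copy the WAR, then generate the launcher.
cp -f "${SRC_DIR}"/*.war "${DEPLOYMENT_DIR}"
echo "java -jar ${INSTALL_DIR}/${PAYARA_JAR} --deploymentDir ${DEPLOYMENT_DIR}" > "${INSTALL_DIR}/start.sh"
chmod a+rwx "${INSTALL_DIR}/start.sh"

# Inspect the result: the deployed WAR and the generated launcher.
ls "${DEPLOYMENT_DIR}"
cat "${INSTALL_DIR}/start.sh"
```

The generated start.sh is not executed here, since no Payara JAR exists in the scratch directory. Remove WORK_DIR once you have inspected the result.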

Once the assemble script has copied the application WAR from the source to the deployment directory, the run script can start the application server against this same WAR. Here is the code:

#!/bin/bash -e

#

echo "### Starting Payara Micro 6.2022.1 with user id: $(id) and group $(id -G)"

echo "### Starting at $(date)"

exec ${INSTALL_DIR}/start.sh

The Docker image that we have just seen is our Payara CAI. Now, let's see the BI which is, in fact, the Docker base image of our CAI:

FROM nicolasduminil/s2i-base-java11:latest
LABEL maintainer="Nicolas DUMINIL, nicolas.duminil@simplex-software.fr" description="Payara 6 Micro S2I Builder for OpenShift v3"
ENV VERSION 6.2022.1
ENV PAYARA_JAR payara-micro-${VERSION}.jar
ENV INSTALL_DIR /opt
ENV LIB_DIR ${INSTALL_DIR}/lib
ENV DEPLOYMENT_DIR ${INSTALL_DIR}/deploy
RUN curl -L -0 -o ${INSTALL_DIR}/${PAYARA_JAR} https://s3.eu-west-1.amazonaws.com/payara.fish/Payara+Downloads/6.2022.1/payara-micro-6.2022.1.jar
RUN mkdir -p ${DEPLOYMENT_DIR} \
 && mkdir -p ${LIB_DIR} \
 && chown -R 1001:0 ${INSTALL_DIR} \
 && chmod -R a+rw ${INSTALL_DIR}
USER 1001
EXPOSE 8080 8181

This Dockerfile defines an image that contains the Payara Micro 6.2022.1 server, downloaded from an AWS bucket. It also defines a couple of useful environment variables and creates the deployment directory with the ownership and permissions required for a generic user whose ID is 1001.
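Note that the download URL repeats the version number already held by VERSION. As a small illustration of how the two could be kept in sync, the URL can be composed from the version string. This is only a sketch: payara_micro_url is a hypothetical helper, and the URL layout is copied from the RUN instruction above and merely assumed to hold for other versions.

```shell
# Compose the Payara Micro download URL from a version string.
# payara_micro_url is a hypothetical helper; the URL layout is copied
# from the RUN instruction above and is assumed to hold for other versions.
payara_micro_url() {
  version="$1"
  echo "https://s3.eu-west-1.amazonaws.com/payara.fish/Payara+Downloads/${version}/payara-micro-${version}.jar"
}

# Print the URL for the version used in this article.
payara_micro_url "6.2022.1"
```

In the Dockerfile itself, the same effect could be obtained by using ${VERSION} inside the URL of the curl command.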

Let's now see how we can combine the BI and the CAI defined above to create a CCI and deploy it on OpenShift. The first thing to do is to implement and package an application (for example, a web application) to deploy on the Payara platform. One of the simplest ways to do that is by running the Maven archetype jakartaee10-basic-archetype, available at this GitHub repo.

$ mvn -B archetype:generate \
    -DarchetypeGroupId=fr.simplex-software.archetypes \
    -DarchetypeArtifactId=jakartaee10-basic-archetype \
    -DarchetypeVersion=1.0-SNAPSHOT \
    -DgroupId=com.exemple \
    -DartifactId=test

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------< org.apache.maven:standalone-pom >-------------------

[INFO] Building Maven Stub Project (No POM) 1

[INFO] --------------------------------[ pom ]---------------------------------

[INFO]

[INFO] >>> maven-archetype-plugin:3.2.1:generate (default-cli) > generate-sources @ standalone-pom >>>

[INFO]

[INFO] <<< maven-archetype-plugin:3.2.1:generate (default-cli) < generate-sources @ standalone-pom <<<

[INFO]

[INFO]

[INFO] --- maven-archetype-plugin:3.2.1:generate (default-cli) @ standalone-pom ---

[INFO] Generating project in Batch mode

[INFO] Archetype repository not defined. Using the one from [fr.simplex-software.archetypes:jakartaee10-basic-archetype:1.0-SNAPSHOT] found in catalog local

[INFO] ----------------------------------------------------------------------------

[INFO] Using following parameters for creating project from Archetype: jakartaee10-basic-archetype:1.0-SNAPSHOT

[INFO] ----------------------------------------------------------------------------

[INFO] Parameter: groupId, Value: com.exemple

[INFO] Parameter: artifactId, Value: test

[INFO] Parameter: version, Value: 1.0-SNAPSHOT

[INFO] Parameter: package, Value: com.exemple

[INFO] Parameter: packageInPathFormat, Value: com/exemple

[INFO] Parameter: package, Value: com.exemple

[INFO] Parameter: groupId, Value: com.exemple

[INFO] Parameter: artifactId, Value: test

[INFO] Parameter: version, Value: 1.0-SNAPSHOT

[INFO] Project created from Archetype in dir: /home/nicolas/test

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 2.462 s

[INFO] Finished at: 2022-12-30T16:41:56+01:00

[INFO] ------------------------------------------------------------------------

The Maven archetype is available in Maven Central but, if you prefer, you can clone it from the Git repository and use it locally. Once you've generated a simple Jakarta EE 10 application, you need to package it as a WAR:

~$ cd test

~/test$ mvn package

[INFO] Scanning for projects...

[INFO]

[INFO] --------------------------< com.exemple:test >--------------------------

[INFO] Building test 1.0-SNAPSHOT

[INFO] --------------------------------[ war ]---------------------------------

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ test ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 1 resource

[INFO]

[INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ test ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 2 source files to /home/nicolas/test/target/classes

[INFO]

[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ test ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] skip non existing resourceDirectory /home/nicolas/test/src/test/resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ test ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 1 source file to /home/nicolas/test/target/test-classes

[INFO]

[INFO] --- maven-surefire-plugin:2.12.4:test (default-test) @ test ---

[INFO]

[INFO] --- maven-war-plugin:3.3.1:war (default-war) @ test ---

[INFO] Packaging webapp

[INFO] Assembling webapp [test] in [/home/nicolas/test/target/test]

[INFO] Processing war project

[INFO] Copying webapp resources [/home/nicolas/test/src/main/webapp]

[INFO] Building war: /home/nicolas/test/target/test.war

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 2.848 s

[INFO] Finished at: 2022-12-30T16:45:22+01:00

[INFO] ------------------------------------------------------------------------

We have just built our WAR and, before deploying it, we need to run the integration tests with Testcontainers, as follows:

~/test$ mvn verify

[INFO] Scanning for projects...

[INFO]

[INFO] --------------------------< com.exemple:test >--------------------------

[INFO] Building test 1.0-SNAPSHOT

[INFO] --------------------------------[ war ]---------------------------------

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ test ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 1 resource

[INFO]

[INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ test ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 2 source files to /home/nicolas/test/target/classes

[INFO]

[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ test ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] skip non existing resourceDirectory /home/nicolas/test/src/test/resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ test ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 1 source file to /home/nicolas/test/target/test-classes

[INFO]

[INFO] --- maven-surefire-plugin:2.12.4:test (default-test) @ test ---

[INFO]

[INFO] --- maven-war-plugin:3.3.1:war (default-war) @ test ---

[INFO] Packaging webapp

[INFO] Assembling webapp [test] in [/home/nicolas/test/target/test]

[INFO] Processing war project

[INFO] Copying webapp resources [/home/nicolas/test/src/main/webapp]

[INFO] Building war: /home/nicolas/test/target/test.war

[INFO]

[INFO] --- maven-failsafe-plugin:3.0.0-M5:integration-test (default) @ test ---

[INFO]

[INFO] -------------------------------------------------------

[INFO] T E S T S

[INFO] -------------------------------------------------------

[INFO] Running com.exemple.MyResourceIT

[main] INFO org.testcontainers.utility.ImageNameSubstitutor - Image name substitution will be performed by: DefaultImageNameSubstitutor (composite of 'ConfigurationFileImageNameSubstitutor' and 'PrefixingImageNameSubstitutor')

[main] INFO org.testcontainers.dockerclient.DockerClientProviderStrategy - Loaded org.testcontainers.dockerclient.UnixSocketClientProviderStrategy from ~/.testcontainers.properties, will try it first

[main] INFO org.testcontainers.dockerclient.DockerClientProviderStrategy - Found Docker environment with local Unix socket (unix:///var/run/docker.sock)

[main] INFO org.testcontainers.DockerClientFactory - Docker host IP address is localhost

[main] INFO org.testcontainers.DockerClientFactory - Connected to docker:

Server Version: 20.10.12

API Version: 1.41

Operating System: Ubuntu 22.04.1 LTS

Total Memory: 15888 MB

[main] INFO org.testcontainers.DockerClientFactory - Checking the system...

[main] INFO org.testcontainers.DockerClientFactory - ✔︎ Docker server version should be at least 1.6.0

[main] INFO [payara/server-full:6.2022.1] - Creating container for image: payara/server-full:6.2022.1

[main] INFO [testcontainers/ryuk:0.3.3] - Creating container for image: testcontainers/ryuk:0.3.3

[main] INFO [testcontainers/ryuk:0.3.3] - Container testcontainers/ryuk:0.3.3 is starting: 8cae4abce8aa41105117dc4873eccfeadfa46db9f10e16e43b9e6c758b20b31f

[main] INFO [testcontainers/ryuk:0.3.3] - Container testcontainers/ryuk:0.3.3 started in PT0.84169S

[main] INFO [payara/server-full:6.2022.1] - Container payara/server-full:6.2022.1 is starting: 719b3e17f5fd1a14cf5fe6b6be1cfbe5d2d3236783d5b3813b4290d9433f882d

This integration test deploys our WAR on a Payara 6.2022.1 server run by Testcontainers (the log above shows the payara/server-full:6.2022.1 image) and invokes the endpoint exposed by its REST API.

At this point in our exercise, we have a Jakarta EE 10 web application that we have deployed and run against a Payara platform. Let's deploy it on OpenShift now.

The first thing to do, after having logged in to the OpenShift Developer's Sandbox, as shown above, is to create a new application:

~$ cd test/target/

~/test/target$ oc new-app nicolasduminil/s2i-payara6-micro:latest~/. --name test

--> Found container image 22e16f9 (7 days old) from Docker Hub for "nicolasduminil/s2i-payara6-micro:latest"

Payara 6 Micro S2I Builder

--------------------------

Payara 6 Micro S2I Image

Tags: builder, nicolasduminil, payara, jakarta ee, microprofile

* An image stream tag will be created as "s2i-payara6-micro:latest" that will track the source image

* A source build using binary input will be created

* The resulting image will be pushed to image stream tag "test:latest"

* A binary build was created, use 'oc start-build --from-dir' to trigger a new build

--> Creating resources ...

imagestream.image.openshift.io "s2i-payara6-micro" created

imagestream.image.openshift.io "test" created

buildconfig.build.openshift.io "test" created

deployment.apps "test" created

service "test" created

--> Success

Build scheduled, use 'oc logs -f buildconfig/test' to track its progress.

Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:

'oc expose service/test'

Run 'oc status' to view your app.

The command oc new-app, as its name says, creates a new OpenShift application. By passing the CAI identifier as an argument to this command, we create an IS that tracks this image, as the output above confirms. We also need to indicate where the source is located. In the oc new-app syntax, the tilde (~) separates the image from the source location that follows it, here /. As the output shows, the result is a binary build, whose input is uploaded in the next step.

Now, let's use the BI defined previously in order to build the CCI.

~/test/target$ oc start-build --from-dir . test

Uploading directory "." as binary input for the build ...

.

Uploading finished

build.build.openshift.io/test-2 started

The command oc start-build above builds our CCI by combining the BI defined by the Docker image nicolasduminil/payara6-micro-s2i-builder:latest and the CAI defined by the Docker image nicolasduminil/s2i-payara6-micro:latest.

Now, looking in the OpenShift Sandbox console, you'll see something similar to this:

The next operation that we need to do is to expose the TCP port associated with our REST API endpoint:

~/test/target$ oc expose svc test --port 8080

route.route.openshift.io/test exposed

Now we can test our endpoint as follows:


nicolas@nicolas-XPS-13-9360:~/test/target$ oc describe route test

Name: test

Namespace: nicolasduminil-dev

Created: 2 minutes ago

Labels: app=test

app.kubernetes.io/component=test

app.kubernetes.io/instance=test

Annotations: openshift.io/host.generated=true

Requested Host: test-nicolasduminil-dev.apps.sandbox.x8i5.p1.openshiftapps.com

exposed on router default (host router-default.apps.sandbox.x8i5.p1.openshiftapps.com) 2 minutes ago

Path: <none>

TLS Termination: <none>

Insecure Policy: <none>

Endpoint Port: 8080

Service: test

Weight: 100 (100%)

Endpoints: 10.129.4.30:8080

nicolas@nicolas-XPS-13-9360:~/test/target$ curl test-nicolasduminil-dev.apps.sandbox.x8i5.p1.openshiftapps.com/test/api/myresource

Got it !

Here, we first need to get the URL of our REST API by means of the command oc describe. Then, using this URL, we can test our REST endpoint with the curl utility. Last but not least, we can scale in or out the pods running our application:

~/test/target$ oc scale deploy test --replicas=2

deployment.apps/test scaled

The OpenShift Sandbox console reflects the new number of replicas, as shown below:

Once you've finished playing, don't forget to clean up the workspace. You can do it, of course, using the OpenShift console or as shown below:

~/test/target$ oc delete all -l app=test

service "test" deleted

deployment.apps "test" deleted

buildconfig.build.openshift.io "test" deleted

imagestream.image.openshift.io "s2i-payara6-micro" deleted

imagestream.image.openshift.io "test" deleted

route.route.openshift.io "test" deleted

Enjoy!
