Event Mesh: Point-to-Point EDA

This article recounts a recent presentation from the 2022 EDA Summit and provides additional commentary on the presenter's thoughts.


While watching the 2022 EDA Summit presentation "Powering Your Real-Time, Event-Driven Enterprise with PubSub + Platform" by Shawn McAllister, CTO of Solace (sponsor of the Summit), I learned about the origins of his company. This article recounts his presentation along with my personal synopsis. As he put it, let’s “start with capital markets…where Solace started.”

Presentation Highlights and Additional Commentary

Shawn began by stating: "Capital markets became digital…over 20 years ago…They had, of course, on-prem applications…but the magic really happened in these 'colocation centers,' ... data centers that are not owned by the bank or the buy-side firm... They’re shared, so they’re kind of like the clouds that you have today… and those colocation centers were then located close to the execution venues, where a lot of the trading happened."

Shawn continued by stating that the “various systems inside the colocation center would work together in a real-time, event-driven manner… and would then work with systems…between these data centers. And most of the participants…also have businesses in different financial centers, and need to share information... so they would create what we now call an ‘event mesh:' a network of event brokers all connected together that allows information in New York to be shared in London, in Hong Kong, and Tokyo, in real-time. And this is what we helped our clients do,…to create this ‘event mesh,' which gave them the ability to share information in real-time, in a low-latency, high-performance, highly-resilient...secure manner, as they moved to becoming real-time and distributed."

Shortly afterward, Shawn informed us: “We very oftentimes see Kafka Clusters around the edges of the ‘event mesh,' and those can be event sources, and event sinks." I found this statement extremely revealing, given that we had just been told that “capital markets… over 20 years ago… would create what we now call an ‘event mesh’… as they moved to becoming real-time and distributed.” The juxtaposition is interesting because Kafka, which has existed for over 11 years, already makes you exactly that: “real-time and distributed.”

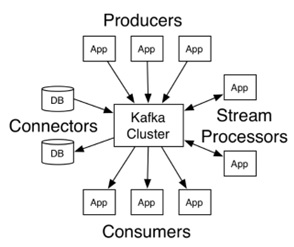

The Kafka Cluster that you see in the stock image above is called a cluster precisely because it consists of a network of fault-tolerant, distributed "event brokers," all working together in real time. What’s more, I doubt anyone had even heard the term “event mesh” in the first five years of Kafka’s existence, which suggests that an “event mesh” is in no way necessary to become “real-time and distributed.”

Returning to the presentation, Shawn went on to say: “A chart from Gartner…released in 2019, where they said… if you used to do messaging… in your traditional platform... what you should be doing is building an event mesh, because Gartner understood that having an event broker, or a single cluster, is just not enough." What Shawn fails to explain is why a single cluster distributed across the world, whose event brokers are little more than IP addresses at the end of the day, “is just not enough.” I watched his presentation to the end, as well as several other presentations, and I still don’t have an answer to that question.

Shawn continued: “Event mesh is a layer in your architecture that allows… an application anywhere in your architecture to produce an event, and applications anywhere else in your architecture to consume those events… You can connect to your event mesh, produce an event, don’t worry about it any more, and consumers can consume it wherever they’re deployed.” In reality, you could replace the words "event mesh" in that quote with "Kafka Cluster."
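To make that substitution concrete, here is a toy, in-memory sketch of the behavior described in the quote, modeled loosely on a Kafka topic: producers append to one shared log without knowing who will read it, and each consumer group tracks its own read offset, so consumers anywhere read independently. This is not Kafka's API or implementation; the names `TopicLog`, `produce`, and `poll`, and the region-named groups, are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class TopicLog:
    """Toy append-only topic: one shared log, independent read offsets per consumer group."""
    events: list = field(default_factory=list)
    # Hypothetical per-group offsets, loosely mirroring Kafka consumer-group offsets.
    offsets: dict = field(default_factory=lambda: defaultdict(int))

    def produce(self, event: dict) -> int:
        """Append an event and return its offset; the producer does not know who will read it."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, group: str, max_events: int = 10) -> list:
        """Return unread events for a consumer group and advance that group's offset."""
        start = self.offsets[group]
        batch = self.events[start:start + max_events]
        self.offsets[group] = start + len(batch)
        return batch


trades = TopicLog()
trades.produce({"symbol": "ACME", "qty": 100, "origin": "new-york"})
trades.produce({"symbol": "ACME", "qty": -40, "origin": "london"})

# Two consumer groups in different "regions" read the same events independently.
print(trades.poll("tokyo-risk"))
print(trades.poll("hong-kong-audit", max_events=1))
print(trades.poll("tokyo-risk"))  # empty: this group has already read everything
```

A real Kafka topic is partitioned and replicated across brokers; the sketch deliberately ignores partitions, replication, and networking to show only the "produce once, consume anywhere" contract.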

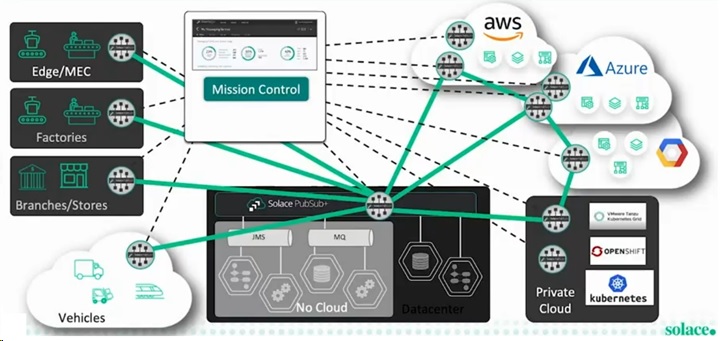

Shawn proceeded by saying: “So how does an event mesh work? Well, in the Solace case, what you do is – and let’s say you want to connect your multiple cloud... your on-prem and non-cloud environment – so you deploy your event brokers in all these locations where you have applications, close to your applications… because it’s a more reliable, faster communication between the apps and your infrastructure. Then you connect the event brokers together, and that forms a network." This sounds just like deploying individual Kafka brokers “close to your applications,” where they work together in real time to form a single cluster, right?
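For readers who want to picture the topology Shawn describes (brokers deployed near applications, then linked together), the following is a minimal sketch under loud assumptions: it is a generic flooding model, not how Solace PubSub+ actually routes events. An event published at one broker is forwarded breadth-first across broker-to-broker links, each broker is visited once so cycles do not cause duplicates, and local subscribers receive it. The `Broker` class, its methods, and the city and consumer names are all hypothetical.

```python
from collections import deque


class Broker:
    """Toy event broker: local subscribers plus links to peer brokers (hypothetical model)."""

    def __init__(self, name: str):
        self.name = name
        self.peers: list["Broker"] = []
        self.subscribers: dict[str, list[str]] = {}  # topic -> local consumer names

    def link(self, other: "Broker") -> None:
        """Connect two brokers in both directions; the set of links forms the mesh."""
        self.peers.append(other)
        other.peers.append(self)

    def subscribe(self, topic: str, consumer: str) -> None:
        self.subscribers.setdefault(topic, []).append(consumer)

    def publish(self, topic: str, event: dict) -> list[tuple[str, str, dict]]:
        """Flood the event breadth-first across the mesh, visiting each broker once,
        and return (broker, consumer, event) for every local subscriber reached."""
        deliveries = []
        seen = {self.name}
        queue = deque([self])
        while queue:
            broker = queue.popleft()
            for consumer in broker.subscribers.get(topic, []):
                deliveries.append((broker.name, consumer, event))
            for peer in broker.peers:
                if peer.name not in seen:
                    seen.add(peer.name)
                    queue.append(peer)
        return deliveries


ny, ldn, hk, tyo = (Broker(n) for n in ("new-york", "london", "hong-kong", "tokyo"))
ny.link(ldn)
ldn.link(hk)
hk.link(tyo)
tyo.subscribe("trades/ACME", "risk-engine")
ldn.subscribe("trades/ACME", "compliance")

deliveries = ny.publish("trades/ACME", {"qty": 100})
for broker_name, consumer, _ in deliveries:
    print(broker_name, consumer)
```

Production brokers typically propagate subscriptions so that events are forwarded only toward brokers with interested consumers; flooding is used here only to keep the sketch short.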

Next, Shawn stated: “Now let’s talk about the… events flowing over your event mesh. As you add more and more applications to your EDA environment… if you don’t have some kind of event management tool, the business value/the ROI tails-off… Producing more and more events, if you can’t find them and reuse them, means you can’t monetize them as much. People reinvent the wheel, or they use the wrong data sources… because you have no way to manage these events… Certainly, reuse is the number one factor: being able to find the event that I want… is key”.

Looking at the “event mesh” slide associated with this statement, I have great difficulty understanding how the concept of an event mesh in any way facilitates the discovery of events. Indeed, I wish you luck in ever making sense of the endless combinations of event flows that might potentially be discovered in the EDA landscape it illustrates. Additionally, I ask: are all of the events, even those representing exactly the same entity type, likely to use exactly the same payload format or exactly the same JSON tags? Probably not (I invite you to take another look at the Kafka Cluster diagram above).
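To illustrate the payload question, consider two hypothetical producers publishing the same entity type, a trade, with different JSON tags. Discovering both event streams is only the first step: a consumer still has to reconcile them. The payloads, the `FIELD_ALIASES` mapping, and the `normalize` helper below are invented for illustration, a minimal sketch rather than any product's schema-management feature.

```python
import json

# Two hypothetical producers describing the same entity type with different JSON tags.
ny_event = '{"tradeId": "T-1", "ticker": "ACME", "quantity": 100}'
ldn_event = '{"trade_id": "T-2", "symbol": "ACME", "qty": 250}'

# Hypothetical mapping from each producer's tags to one canonical schema.
FIELD_ALIASES = {
    "trade_id": ("tradeId", "trade_id"),
    "symbol": ("ticker", "symbol"),
    "quantity": ("quantity", "qty"),
}


def normalize(raw: str) -> dict:
    """Parse a JSON event and rename known aliases to canonical field names.

    Raises KeyError if a canonical field has no matching alias in the payload.
    """
    payload = json.loads(raw)
    canonical = {}
    for name, aliases in FIELD_ALIASES.items():
        match = next((a for a in aliases if a in payload), None)
        if match is None:
            raise KeyError(f"no alias for {name!r} in {sorted(payload)}")
        canonical[name] = payload[match]
    return canonical


for raw in (ny_event, ldn_event):
    print(normalize(raw))
```

Every new variant of the payload means another alias to maintain, regardless of how the events are transported between brokers.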

In concluding his presentation, Shawn stated: “In a typical EDA environment, you have Solace ‘event mesh’ in the middle, and we very very typically have Kafka Clusters at the edges, as event sources or sinks, and our customers that we talk to, they want a single tool. They don’t want multiple tools to manage their events, they want just one." So, they probably don’t want an event mesh at all, eh? Perhaps they would prefer a single Kafka Cluster “in the middle,” which of course becomes essential, for certain events, if the topic of GDPR is ever broached: the true "event mesh killer."

James Ellwood, a principal engineer at Solace, declared in a separate EDA Summit presentation: “One of the main features of Solace Cloud is the creation and management of [multiple] PubSub+ Brokers. This operation is performed in many different… regions. This model introduces a whole bunch of interesting problems." So I say: don’t do it.

Opinions expressed by DZone contributors are their own.
