Building Hybrid Multi-Cloud Event Mesh With Apache Camel and Kubernetes

A full installation guide for building an event mesh with Apache Camel. We will use microservices, functions, and connectors as the connector nodes in the mesh.


This blog is part two of my three-part series on how to build a hybrid multi-cloud event mesh with Camel. In this part, I go over the more technical aspects and walk through how I set up the event mesh demo.

Recap, the Demo:

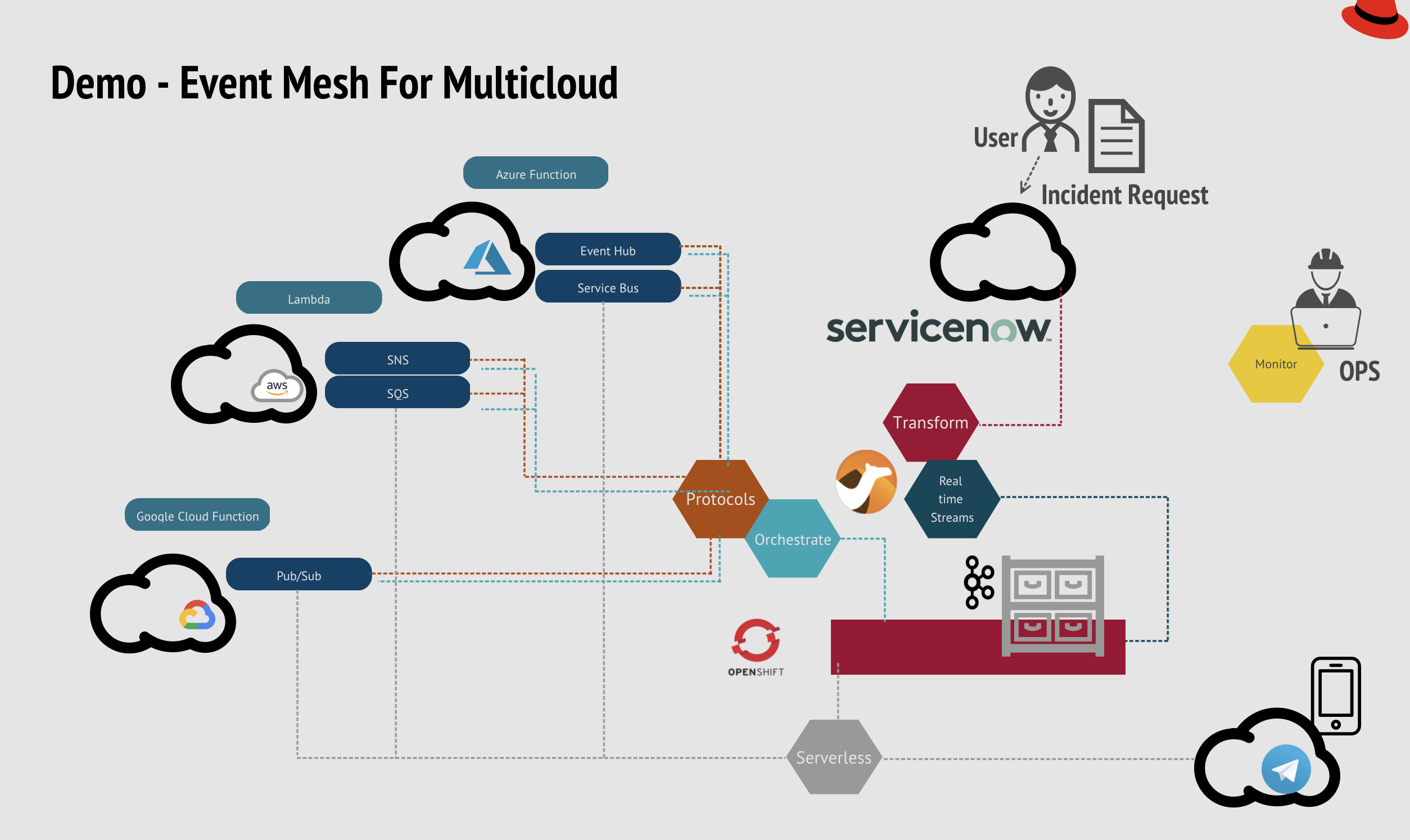

The demo starts by collecting incident tasks from ServiceNow and streaming the events to the Kafka cluster in the mesh. Egress connectors then route the events to AWS, Azure, or GCP, depending on the request type. Ingress connectors are also created for task result updates. I have also set up a couple of serverless connectors in the mesh that handle notifications to Telegram. For more on the architectural design, please refer to my previous blog.

Prerequisites

For Operations, you will need to have the following ready:

- OpenShift Container Platform 4.7 (Red Hat's Kubernetes platform) with administrator rights - Needed to set up the operators that support self-service and life-cycle management of the functions running on top.

- OpenShift CLI tool - Manage and install components on OpenShift Container Platform projects from the local terminal.

For Developers, you will need to have the following ready:

- OpenShift CLI tool - Manage and install components on OpenShift Container Platform projects from the local terminal.

- IDE - VS Code is my choice here

- Camel K CLI tool - Manage and run the functions and connectors on OpenShift.

- Maven - For building the Camel Quarkus microservice applications

- Java 11 - For building the Camel Quarkus microservice applications

Environment Setup (Operations)

Log in to your cluster with the CLI:

oc login

To get a login token, click your username in the top-right corner of the OpenShift web console and select Copy login command.

Create a project named 'demo':

oc new-project demo

You also need to grant the developer the appropriate roles in the namespace.
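As a minimal sketch of that step, the snippet below binds the built-in edit role to a developer in the demo namespace. The user name developer is a placeholder assumption, not something from the original setup, and edit is only one reasonable choice of role; substitute whatever matches your own access policy.

```shell
# Hypothetical values: adjust both to your environment.
NAMESPACE="demo"
DEV_USER="developer"   # placeholder user name (assumption)

# The built-in 'edit' role lets the user manage most resources in the
# namespace without being able to change roles or role bindings.
GRANT_CMD="oc adm policy add-role-to-user edit $DEV_USER -n $NAMESPACE"

# Run the command only when the oc CLI is installed; otherwise print it.
if command -v oc >/dev/null 2>&1; then
  $GRANT_CMD
else
  echo "would run: $GRANT_CMD"
fi
```

Run this as a cluster administrator, after the project has been created.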

Install the following cluster-wide operators:

- Camel K Operator:

![Camel K Operator GIF]()

- Kafka Operator:

![Kafka Operator GIF]()

- Serverless Operator:

![Serverless Operator GIF]()

Install the following operator in the namespace:

- Grafana Operator:

![Grafana Operator GIF]()

Configure and Set Up Serverless

Set up Knative Serving in the knative-serving namespace:

apiVersion: operator.knative.dev/v1alpha1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec: {}

Set up Knative Eventing in the knative-eventing namespace:

apiVersion: operator.knative.dev/v1alpha1
kind: KnativeEventing
metadata:
  name: knative-eventing
  namespace: knative-eventing
spec: {}

Set up cluster monitoring and add monitoring for all applications labeled app-with-metrics=camel-app:

git clone https://github.com/weimeilin79/cameleventmesh.git

cd cameleventmesh/monitoring

oc apply -f cluster-monitoring-config.yaml -n openshift-monitoring

oc apply -f service-monitor.yaml
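For orientation, here is a rough sketch of what two such manifests typically contain. It is not a copy of the files in the repository, which may differ. The ConfigMap is the standard way to enable monitoring for user-defined projects on OpenShift 4.6 and later. The ServiceMonitor name, the port name http, and the /q/metrics path are assumptions for illustration.

```shell
# Write the examples to a scratch directory so the files from the
# cloned repository are not overwritten.
WORKDIR="$(mktemp -d)"

# Enables monitoring for user-defined projects.
cat > "$WORKDIR/cluster-monitoring-config.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    enableUserWorkload: true
EOF

# Scrapes every Service that carries the app-with-metrics=camel-app label.
# The port name and metrics path below are assumptions.
cat > "$WORKDIR/service-monitor.yaml" <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: camel-app-monitor
spec:
  selector:
    matchLabels:
      app-with-metrics: camel-app
  endpoints:
  - port: http
    path: /q/metrics
EOF

echo "Example manifests written to $WORKDIR"
```

The label selector is what ties this to the connectors deployed later with the app-with-metrics=camel-app label.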

You can check if user monitoring is turned on by using:

oc -n openshift-user-workload-monitoring get pod

That is all the work needed from the Operations team.

Building the Mesh with Connectors (Developers)

A streaming cluster is the foundation of the mesh. In the project where you will be building the mesh, developers can start by creating their own Kafka instance and topics. There is no need for the Ops team to help with setup; developers can self-serve.

In the Developer view of the OpenShift console:

- Create a default Kafka Cluster:

![Creating Default Kafka Cluster]()

- Create two topics named incident-all and gcp-result:

![Creating 2 Topic Names]()
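If you prefer the CLI to the console, the two topics can also be declared as KafkaTopic resources. The sketch below assumes the Kafka cluster is named my-cluster (consistent with the bootstrap address used later in this post) and that the operator serves the kafka.strimzi.io/v1beta2 API; older operator versions may need v1beta1. The partition and replica counts are illustrative.

```shell
# Generate one KafkaTopic manifest per topic in a scratch directory.
WORKDIR="$(mktemp -d)"

for TOPIC in incident-all gcp-result; do
  cat > "$WORKDIR/$TOPIC.yaml" <<EOF
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaTopic
metadata:
  name: $TOPIC
  namespace: demo
  labels:
    strimzi.io/cluster: my-cluster
spec:
  partitions: 1
  replicas: 1
EOF
done

ls "$WORKDIR"

# On a logged-in cluster, apply both manifests with:
#   oc apply -f "$WORKDIR"
```

The strimzi.io/cluster label is what tells the topic operator which Kafka cluster owns the topic.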

Log in to your cluster with the CLI:

oc login

Switch to the namespace where you will be building the mesh:

oc project demo

Clone the connector code from the GitHub repository:

git clone https://github.com/weimeilin79/cameleventmesh.git

Connector to ServiceNow

If you are not familiar with ServiceNow, check out this document to obtain the credentials for the connector.

Now, let's get started. In the folder you cloned, replace the placeholders in the following command with your ServiceNow credentials and run it. It creates the secret configuration for you on the OpenShift platform (Kubernetes). The second command sets up the destination location in the streaming foundation.

oc create secret generic servicenow-credentials \
  --from-literal=SERVICENOW_INSTANCE=REPLACE_ME \
  --from-literal=SERVICENOW_OAUTH2_CLIENT_ID=REPLACE_ME \
  --from-literal=SERVICENOW_OAUTH2_CLIENT_SECRET=REPLACE_ME \
  --from-literal=SERVICENOW_PASSWORD=REPLACE_ME \
  --from-literal=SERVICENOW_USERNAME=REPLACE_ME

oc create -f kafka-config.yaml

The connector to ServiceNow will use what we have just configured, which means you can change these settings for each environment, such as staging, UAT, or production. Now it’s time to deploy the connector.

cd servicenow

mvn clean package -Dquarkus.kubernetes.deploy=true -Dquarkus.openshift.expose=true -Dquarkus.openshift.labels.app-with-metrics=camel-app

You should be able to see an application deployed in the Developer’s topology view.

Connector to Google Cloud Platform (GCP)

Obtain your Google Cloud service account key from GCP. Create a topic named gcp-topic under Google Pub/Sub and make sure to grant permissions to your service account. Download your service account key into the gcp folder and name it google-service-acc-key.json.

In our mesh, we need to add the Google key to the platform so the connector can use it to authenticate with GCP. Then we can deploy the connector to OpenShift and have it push events to GCP.

cd gcp

oc create configmap gcp-configmap --from-file=google-service-acc-key.json

mvn clean package -Dquarkus.kubernetes.deploy=true -Dquarkus.openshift.expose=true -Dquarkus.openshift.labels.app-with-metrics=camel-app

OPTIONAL: You can create a Google Cloud function that is triggered by gcp-topic and does whatever you want it to do. In my case, it just does some simple logging.

Next up, we will use Camel K to listen to result events from GCP. Events are streamed back to the Kafka topic gcp-result. The oc patch command enables Prometheus monitoring for all Camel K routes.

cd gcp/camelk

kamel run Gcpreader.java

oc patch ip camel-k --type=merge -p '{"spec":{"traits":{"prometheus":{"configuration":{"enabled":true}}}}}'

oc create -f kafka-source.kamelet.yaml

Two instances should appear in the developer topology.

Serverless Connector to Telegram

I also want to introduce the Kamelet, which can be used as a Kafka connector through the GUI on OpenShift. It creates a connector from Kafka to a serverless Knative channel. (If you are not familiar with serverless architecture, check out this blog.)

Let’s create a channel that takes in CloudEvents containing notifications for Telegram. In the developer console, click Add +, and then click Channel.

Once the channel is available, you will see it appear in the topology. Next, we will create the connector from Kafka to the serverless channel. Select Kafka source and set up the source and sink of the connector:

kind: Channel
name: notify
brokers: my-cluster-kafka-bootstrap.demo.svc:9092
topic: gcp-result

You will see the connector appear in the Developer topology.
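The console form produces a binding between the Kafka source Kamelet and the channel. As a hedged sketch, an equivalent manifest could look like the one below. The binding name kafka-to-notify is hypothetical, the property names brokers and topic are taken from the form values above, and the v1alpha1 API version is an assumption that depends on your Camel K release.

```shell
# Write an example KameletBinding to a scratch directory.
WORKDIR="$(mktemp -d)"

cat > "$WORKDIR/kafka-to-notify.yaml" <<'EOF'
apiVersion: camel.apache.org/v1alpha1
kind: KameletBinding
metadata:
  name: kafka-to-notify
spec:
  # Source: the kafka-source Kamelet reading the gcp-result topic.
  source:
    ref:
      kind: Kamelet
      apiVersion: camel.apache.org/v1alpha1
      name: kafka-source
    properties:
      brokers: my-cluster-kafka-bootstrap.demo.svc:9092
      topic: gcp-result
  # Sink: the Knative channel created in the previous step.
  sink:
    ref:
      kind: Channel
      apiVersion: messaging.knative.dev/v1
      name: notify
EOF

cat "$WORKDIR/kafka-to-notify.yaml"

# On a logged-in cluster, create it with:
#   oc apply -f "$WORKDIR/kafka-to-notify.yaml"
```

Keeping the binding in a file makes it easier to version and to recreate in another namespace than clicking through the form again.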

Connector to Azure

The next part of the mesh is connecting to Azure. In Azure, set up your access control (IAM) with the appropriate role and access.

In Azure, go to Service Bus and create a queue called azure-bus, then go to Event Hubs and create an event hub called azure-eventhub. Obtain the SAS policy connection string (primary or secondary).

OPTIONAL: Create an Azure function that is triggered by azure-bus and does whatever you want it to do. In my case, it just does some simple logging.

Deploy the egress Azure connector as before. Don't forget to create a secret to store your Azure credentials.

cd azure

oc create secret generic azure-credentials \
  --from-literal=eventhub.endpoint=REPLACE_ME \
  --from-literal=quarkus.qpid-jms.password=REPLACE_ME \
  --from-literal=quarkus.qpid-jms.url=REPLACE_ME \
  --from-literal=quarkus.qpid-jms.username=REPLACE_ME

mvn clean package -Dquarkus.kubernetes.deploy=true -Dquarkus.openshift.expose=true -Dquarkus.openshift.labels.app-with-metrics=camel-app

Deploy the ingress Azure connector.

cd azure/camelk

kamel run Azurereader.java

Connector to AWS

For the last part of the mesh, we will set up a connector to AWS. In AWS, set up your user in IAM, grant the permissions, and set up the policies accordingly. Under SNS, create a topic named sns-topic and set up the access policy for your IAM user. Under SQS, create a queue named sqs-queue, set up the access policy for your IAM user, and subscribe the queue to the sns-topic created earlier.

OPTIONAL: Create a Lambda function that subscribes to sns-topic and does whatever you want it to do. In my case, it just does some simple logging.

In aws.properties, replace the placeholders with your AWS credentials:

camel.component.kafka.brokers=my-cluster-kafka-bootstrap.demo.svc:9092

accessKey=RAW(REPLACE_ME)

secretKey=RAW(REPLACE_ME)

region=REPLACE_ME

To show you how Camel can help keep your event mesh agile and lightweight, I am going to use just Camel K and Kamelets.

cd aws/camelk

kamel run AwsCamel.java

cd aws

oc create -f https://raw.githubusercontent.com/apache/camel-kamelets/main/aws-sqs-source.kamelet.yaml

Next, create the serverless connector from AWS SQS to the serverless channel. In the Developer Console, select the SQS source and set up the source and sink of the connector.

Bonus: Adding an API Endpoint for Incident Ticket Creation

Sometimes, we want to allow automation in the enterprise to avoid human errors. Having an API endpoint makes it very easy for other systems to connect to the event mesh.

cd serverless/api

oc create secret generic kafka-credential --from-file=incidentapi.properties

kamel run IncidentApi.java

Once you see the new serverless API pod start up, you will be able to access it via the route.

Monitor Event Mesh in Grafana

Knowing the status of the mesh and making sure its events are streaming smoothly is important. We want to make sure there is no clog in the mesh. The following steps create a dashboard in Grafana that monitors all the connectors in the mesh, plus memory and CPU usage, so any irregular behavior can be caught quickly.

oc create -f grafana.yaml

oc adm policy add-cluster-role-to-user cluster-monitoring-view -z grafana-serviceaccount

oc serviceaccounts get-token grafana-serviceaccount

sed "s/REPLACEME/$(oc serviceaccounts get-token grafana-serviceaccount)/" grafana-datasource.yaml.bak > grafana-datasource.yaml

oc create -f grafana-datasource.yaml
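The sed step above is a plain text substitution: it copies the .bak template and swaps the REPLACEME marker for the service account token. The sketch below shows the same substitution on a stand-in template with a made-up token. The template contents and the token value are assumptions for illustration, not the repository's actual file.

```shell
WORKDIR="$(mktemp -d)"

# Stand-in template containing the REPLACEME marker (assumed layout).
cat > "$WORKDIR/grafana-datasource.yaml.bak" <<'EOF'
secureJsonData:
  httpHeaderValue1: 'Bearer REPLACEME'
EOF

# Placeholder token; on a real cluster this comes from the oc command.
TOKEN="example.token-value_123"

# Same substitution as above, with the token supplied from a variable.
sed "s/REPLACEME/$TOKEN/" "$WORKDIR/grafana-datasource.yaml.bak" \
  > "$WORKDIR/grafana-datasource.yaml"

cat "$WORKDIR/grafana-datasource.yaml"
```

Service account tokens do not contain the / character, so using it as the sed delimiter is safe here. On newer oc releases, where oc serviceaccounts get-token is deprecated, oc create token grafana-serviceaccount is the usual replacement, although the token it issues is time-limited.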

Once installed, log in to the Grafana dashboard with ID/PWD root/secret and import grafana-dashboard.json.

Congratulations! You have successfully set up the mesh and are ready to go!
