Building Product to Learn AI, Part 2: Shake and Bake

In part 1, we gathered the crucial "ingredients" for our AI creation — the data. Now, transform that data into a fully functioning Large Language Model (LLM).

If you haven't already, be sure to read Part 1, where we covered data collection and prepared a dataset for our model to train on.

In the previous section, we gathered the crucial "ingredients" for our AI creation — the data. This forms the foundation of our model. Remember, the quality of the ingredients (your data) directly impacts the quality of the final dish (your model's performance).

Now, we'll transform that data into a fully functioning Large Language Model (LLM). By the end of this section, you'll be interacting with your very own AI!

Choosing Your Base Layer

Before we dive into training, we’ll explore the different approaches to training your LLM. This is like choosing the right flour for your bread recipe — it significantly influences the capabilities and limitations of your final creation.

There are many ways to train an ML model, and because this is an active area of research, new methods appear regularly. Let’s look at the main, well-established approaches to model development. (Note: These approaches are not necessarily mutually exclusive.)

Key Approaches

1. Start From Scratch (Pretraining Your Own Model)

This offers the most flexibility, but it's the most resource-intensive path. Pretraining requires vast amounts of data and compute, so in practice only the most well-resourced organizations can train a new model from scratch.

2. Fine-Tuning (Building on a Pre-trained Model)

This involves starting with a powerful, existing LLM and adapting it to our specific meal-planning task. It's like using pre-made dough — you don't have to start from zero, but you can still customize it.

3. Leveraging Open-Source Models

Explore the growing number of open-source models, many of them already pre-trained on common tasks, to experiment without doing extensive pre-training yourself.

4. Using Commercial Off-the-Shelf Models

For production-ready applications, consider commercial LLMs (e.g., from Google, OpenAI, Microsoft) for optimized performance, but with potential customization limits.

5. Cloud Services

Managed cloud platforms (such as Vertex AI on Google Cloud, which this tutorial uses) streamline training and deployment by providing the tooling and infrastructure for you.

Choosing the Right Approach

The best foundation for your LLM depends on your specific needs:

- Time and resources: Do you have the capacity for pretraining, or do you need a faster solution?

- Customization: How much control over the model's behavior do you require?

- Cost: What's your budget? Can you invest in commercial solutions?

- Performance: What level of accuracy and performance do you need?

- Capabilities: What level of technical skills and/or compute resources do you have access to?

Moving Forward

We'll focus on fine-tuning Gemini Pro in this tutorial, striking a balance between effort and functionality for our meal-planning model.

Getting Ready to Train: Export Your Dataset

Now that we've chosen our base layer, let's get our data ready for training. Since we're using Google Cloud Platform (GCP), we need our data in JSONL format.

Note: Each model might have specific data format requirements, so always consult the documentation before proceeding.

Luckily, converting data from Google Sheets to JSONL is straightforward with a little Python.

- Export to CSV: First, export your data from Google Sheets as a CSV file.

- Convert CSV to JSONL: Run the following Python script, replacing your_recipes.csv with your actual filename. The script assumes your CSV has columns named Prompt and Response.

    import csv
    import json

    csv_file = 'your_recipes.csv'  # Replace with your CSV filename
    jsonl_file = 'recipes.jsonl'

    # newline='' lets the csv module handle line breaks inside quoted cells
    with open(csv_file, 'r', encoding='utf-8', newline='') as infile, \
            open(jsonl_file, 'w', encoding='utf-8') as outfile:
        reader = csv.DictReader(infile)
        for row in reader:
            # Split multi-line cells into lists of lines
            row['Prompt'] = row['Prompt'].splitlines()
            row['Response'] = row['Response'].splitlines()
            # Write one JSON object per line
            json.dump(row, outfile)
            outfile.write('\n')

This will create a recipes.jsonl file where each line is a JSON object representing a meal plan.
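Before uploading, it can help to check that the exported file really is valid JSONL. The following is a minimal sketch, not part of the original workflow: the function name validate_jsonl is hypothetical, and the required keys Prompt and Response are assumed from the column names used in the conversion script.

```python
import json


def validate_jsonl(path, required_keys=("Prompt", "Response")):
    """Return a list of (line_number, problem) tuples; an empty list means no problems were found."""
    problems = []
    with open(path, "r", encoding="utf-8") as f:
        for line_number, line in enumerate(f, start=1):
            if not line.strip():
                problems.append((line_number, "blank line"))
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append((line_number, f"invalid JSON: {exc.msg}"))
                continue
            if not isinstance(record, dict):
                problems.append((line_number, "not a JSON object"))
                continue
            # Assumed schema: the keys come from the CSV header row
            missing = [key for key in required_keys if key not in record]
            if missing:
                problems.append((line_number, f"missing keys: {missing}"))
    return problems
```

Calling validate_jsonl('recipes.jsonl') should return an empty list when every line is a JSON object containing both keys. This only checks the file's shape. It does not confirm that the records match the schema a particular tuning service expects, so the model documentation remains the authority there.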

Training Your Model

We’re finally ready to start training our LLM. Let’s dive in!

1. Project Setup

- Google Cloud Project: Create a new Google Cloud project if you don't have one already (free tier available).

- Enable APIs: Search for "Vertex AI" in your console, and on the Vertex AI page, click Enable All Recommended APIs.

- Authentication: Search for "Service Accounts," and on that page, click Create Service Account. Use the walkthrough to set up a service account and download the required credentials for secure access.

- Cloud Storage Bucket: Find the "Cloud Storage" page and create a storage bucket.

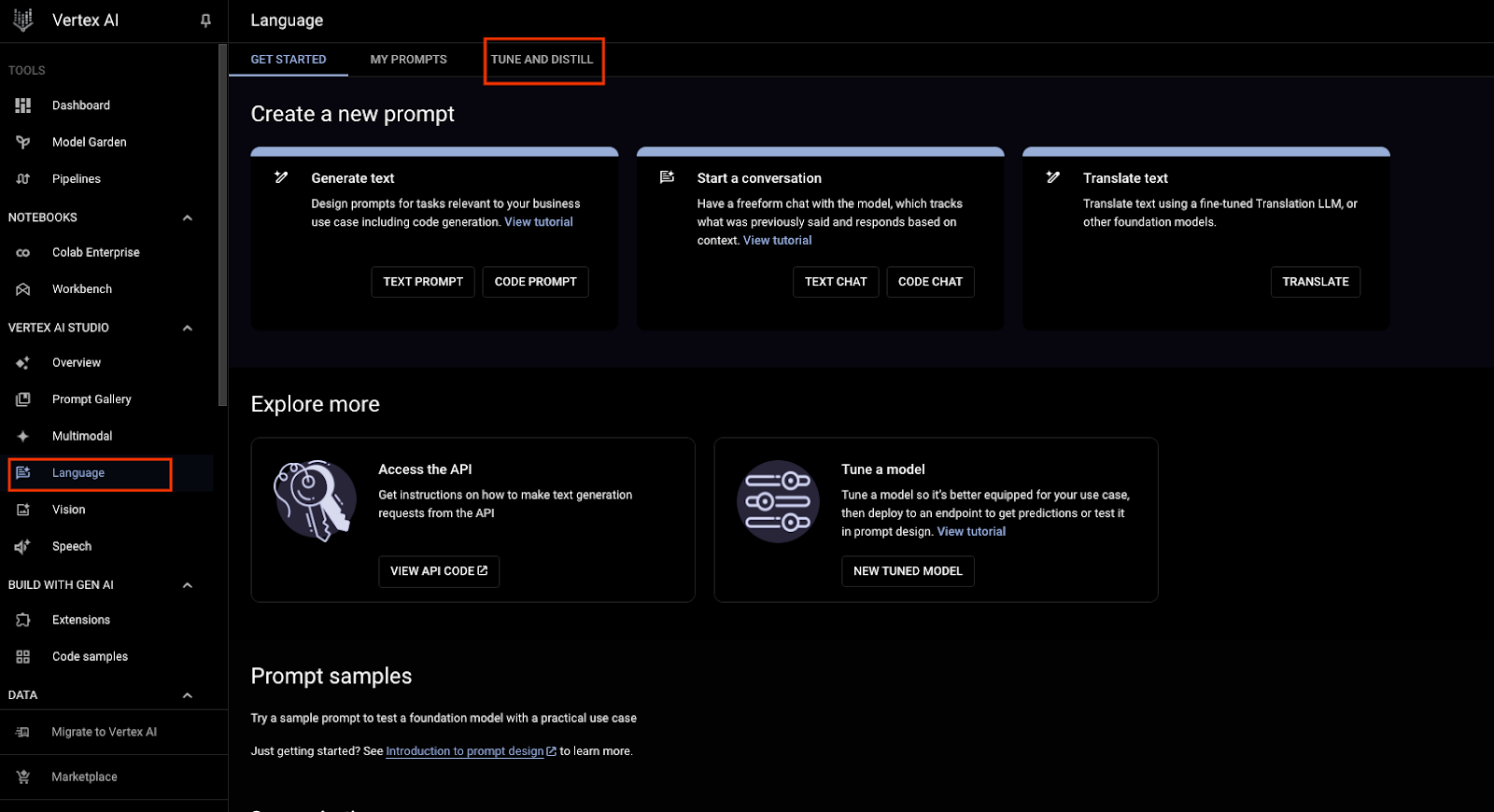
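The console steps above are all you need, but the dataset upload can also be scripted. Here is a rough sketch using the google-cloud-storage client library. It assumes that package is installed and that the service account key you downloaded is exposed through the GOOGLE_APPLICATION_CREDENTIALS environment variable. The helper names and any bucket name you pass in are placeholders, not values from this tutorial.

```python
def gcs_uri(bucket_name, blob_name):
    """Build the gs:// URI that identifies an object in a Cloud Storage bucket."""
    return f"gs://{bucket_name}/{blob_name}"


def upload_dataset(local_path, bucket_name, blob_name):
    """Upload a local file to an existing bucket and return its gs:// URI."""
    # Imported lazily so gcs_uri() works even without the library installed
    from google.cloud import storage  # pip install google-cloud-storage

    # Assumption: credentials are picked up from the environment
    client = storage.Client()
    bucket = client.bucket(bucket_name)
    blob = bucket.blob(blob_name)
    blob.upload_from_filename(local_path)
    return gcs_uri(bucket_name, blob_name)
```

For example, upload_dataset('recipes.jsonl', 'my-meal-plan-bucket', 'data/recipes.jsonl') would return gs://my-meal-plan-bucket/data/recipes.jsonl, where the bucket name is hypothetical and must already exist.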

2. Vertex AI Setup

- Navigate to Vertex AI Studio (free tier available).

- Click Try it in Console in a browser where you are already logged in to your Google Cloud Account.

- In the left-hand pane find and click Language.

- Navigate to the “Tune and Distill” tab.

3. Model Training

- Click Create Tuned Model.

- For this example, we’ll do a basic fine-tuning task, so select “Supervised Tuning” (should be selected by default).

- Give your model a name.

- Select a base model: We’ll use Gemini 1.0 Pro 002 for this example.

- Click Continue.

- Upload the JSONL file that you generated in the "Export Your Dataset" step above.

- You’ll be asked for a “dataset location.” This is just where your JSONL file is going to be located in the cloud. You can use the UI to very easily create a "bucket" to store this data.

Click start and wait for the model to be trained! With this step, you have now entered the LLM AI arena. The quality of the model you produce is only limited by your imagination and the quality of the data you can find, prepare, and/or generate for your use case.

For our use case, we used the data we generated earlier, which included prompts about how individuals could achieve their specific health goals, and meal plans that matched those constraints.
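The same tuning job can, in principle, be started from code instead of the console. The sketch below relies on the supervised tuning module of the Vertex AI Python SDK (google-cloud-aiplatform). Treat the details as assumptions: the module path may be vertexai.preview.tuning in older SDK versions, the model ID gemini-1.0-pro-002 is assumed to correspond to the base model chosen in the console, and the helper names and arguments are illustrative. The tuning service may also expect a specific record schema in the JSONL file, so check the documentation before relying on this.

```python
import time


def tuning_config(bucket_name, blob_name, display_name):
    """Collect the arguments for a supervised tuning job (all values are placeholders)."""
    return {
        "source_model": "gemini-1.0-pro-002",  # assumed ID of the base model
        "train_dataset": f"gs://{bucket_name}/{blob_name}",
        "tuned_model_display_name": display_name,
    }


def start_tuning(project_id, location, config, poll_seconds=60):
    """Start a supervised tuning job, wait for it to finish, and return the endpoint name."""
    # Imported lazily so tuning_config() works without the SDK installed
    import vertexai
    from vertexai.tuning import sft  # older SDKs: vertexai.preview.tuning

    vertexai.init(project=project_id, location=location)
    job = sft.train(**config)
    # Poll until the job reaches a terminal state
    while not job.has_ended:
        time.sleep(poll_seconds)
        job.refresh()
    return job.tuned_model_endpoint_name
```

Keeping the configuration in a plain dictionary makes it easy to inspect or log before a (potentially billable) job is launched.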

4. Test Your Model

Once your model is trained, you can test it by navigating to it on the Tune and Distill main page. In that interface, you can interact with the newly created model the same way you would with any other chatbot.
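If you would rather test from a script than from the console chat, something like the following sketch may work. It assumes the Vertex AI Python SDK is installed and that your tuned model is served from an endpoint whose numeric ID you can find in the console. The function names and parameters are hypothetical.

```python
def endpoint_resource_name(project_id, location, endpoint_id):
    """Build the full resource name of a Vertex AI endpoint."""
    return f"projects/{project_id}/locations/{location}/endpoints/{endpoint_id}"


def ask_meal_planner(project_id, location, endpoint_id, prompt):
    """Send one prompt to the tuned model and return the text of its reply."""
    # Imported lazily so endpoint_resource_name() works without the SDK installed
    import vertexai
    from vertexai.generative_models import GenerativeModel

    vertexai.init(project=project_id, location=location)
    # Assumption: a tuned model is addressed by its endpoint resource name
    model = GenerativeModel(endpoint_resource_name(project_id, location, endpoint_id))
    response = model.generate_content(prompt)
    return response.text
```

A handful of fixed prompts run through ask_meal_planner gives you a repeatable smoke test, which is easier to compare across tuning runs than ad hoc chatting.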

In the next section, we will show you how to host your newly created model to run evaluations and wire it up for an actual application!

Deploying Your Model

You've trained your meal-planning LLM on Vertex AI, and it's ready to start generating personalized culinary masterpieces. Now it's time to make your AI chef accessible to the world! This section will guide you through deploying your model on Vertex AI.

- Create an endpoint:

  - Navigate to the Vertex AI section in the Google Cloud Console.

  - Select "Endpoints" from the left-hand menu and click "Create Endpoint."

  - Give your endpoint a descriptive name (e.g., "meal-planning-endpoint").

- Deploy your model:

  - Within your endpoint, click "Deploy model."

  - Select your trained model from the Cloud Storage bucket where you saved it.

  - Specify a machine type suitable for serving predictions (consider traffic expectations).

  - Choose a deployment scale (e.g., "Manual Scaling" for initial testing, "Auto Scaling" for handling variable demand).

  - Deploy the model.

Congratulations! You've now trained and tested your very own LLM on Google's Vertex AI. You are now an AI engineer! In the next and final installment of this series, we'll take you through wiring your model into an actual application, creating a user-friendly interface, and unleashing your meal-planning AI upon the world! Stay tuned for the grand finale of our LLM adventure.
