Building an Interactive Chatbot With Streamlit, LangChain, and Bedrock

This article shows how to create a chatbot using a low-code frontend (Streamlit), LangChain for conversation management, and an LLM on Amazon Bedrock for generating responses.


In the ever-evolving landscape of AI, chatbots have become indispensable tools for enhancing user engagement and streamlining information delivery. This article walks you through building an interactive chatbot using Streamlit for the frontend, LangChain for orchestrating interactions, and Anthropic's Claude model, accessed through Amazon Bedrock, as the large language model (LLM) backend. We'll go through the code for both the backend and the frontend and explain the key components that make this chatbot work.

Core Components

- Streamlit frontend: Streamlit's intuitive API lets us build a low-code, user-friendly chat interface with minimal effort. We'll explore how the code sets up the chat window, handles user input, and displays the chatbot's responses.

- LangChain orchestration: LangChain manages the conversation flow and memory so that the chatbot keeps context across turns and provides relevant responses. We'll discuss how LangChain's ConversationSummaryBufferMemory and ConversationChain are integrated.

- Bedrock/Claude LLM backend: The LLM backend is what actually generates the replies. We'll look at how to call Anthropic's Claude foundation model through Amazon Bedrock to generate intelligent, contextually aware responses.
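To make the Bedrock/Claude component more concrete, here is a minimal sketch of the JSON body that Bedrock's InvokeModel API expects for Claude 3 models in the Anthropic Messages format. In this article, LangChain's ChatBedrock assembles a request like this for you. The helper names `build_claude_request` and `invoke_claude` are hypothetical, and the parameter values are illustrative. Only `invoke_model`, a real method on boto3's `bedrock-runtime` client, and the documented body fields come from the actual API. Calling Bedrock requires AWS credentials with model access, so the runnable part only builds and prints the body.

```python
import json
from typing import Any, Optional


def build_claude_request(
    user_text: str,
    max_tokens: int = 1024,
    temperature: float = 0.3,
    top_p: float = 0.3,
    system: Optional[str] = None,
) -> str:
    """Build a JSON request body in the Anthropic Messages format used by Bedrock."""
    body: dict = {
        "anthropic_version": "bedrock-2023-05-31",  # required for Claude on Bedrock
        "max_tokens": max_tokens,                   # upper bound on generated tokens
        "temperature": temperature,
        "top_p": top_p,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": user_text}]},
        ],
    }
    if system is not None:
        body["system"] = system  # optional system prompt
    return json.dumps(body)


def invoke_claude(client: Any, model_id: str, user_text: str) -> str:
    """Call InvokeModel on a boto3 'bedrock-runtime' client and return the reply text."""
    response = client.invoke_model(
        modelId=model_id,
        body=build_claude_request(user_text),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Claude returns a list of content blocks; keep only the text blocks.
    return "".join(block["text"] for block in payload["content"] if block["type"] == "text")


if __name__ == "__main__":
    # Building the body needs no AWS access, so this part runs anywhere.
    print(build_claude_request("What is Amazon Bedrock?"))
    # With credentials and Bedrock model access, you could instead run:
    # import boto3
    # client = boto3.Session(region_name="us-east-1").client("bedrock-runtime")
    # print(invoke_claude(client, "anthropic.claude-3-sonnet-20240229-v1:0", "Hi"))
```

Keeping the body builder separate from the network call makes the request format easy to inspect and test without touching AWS.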

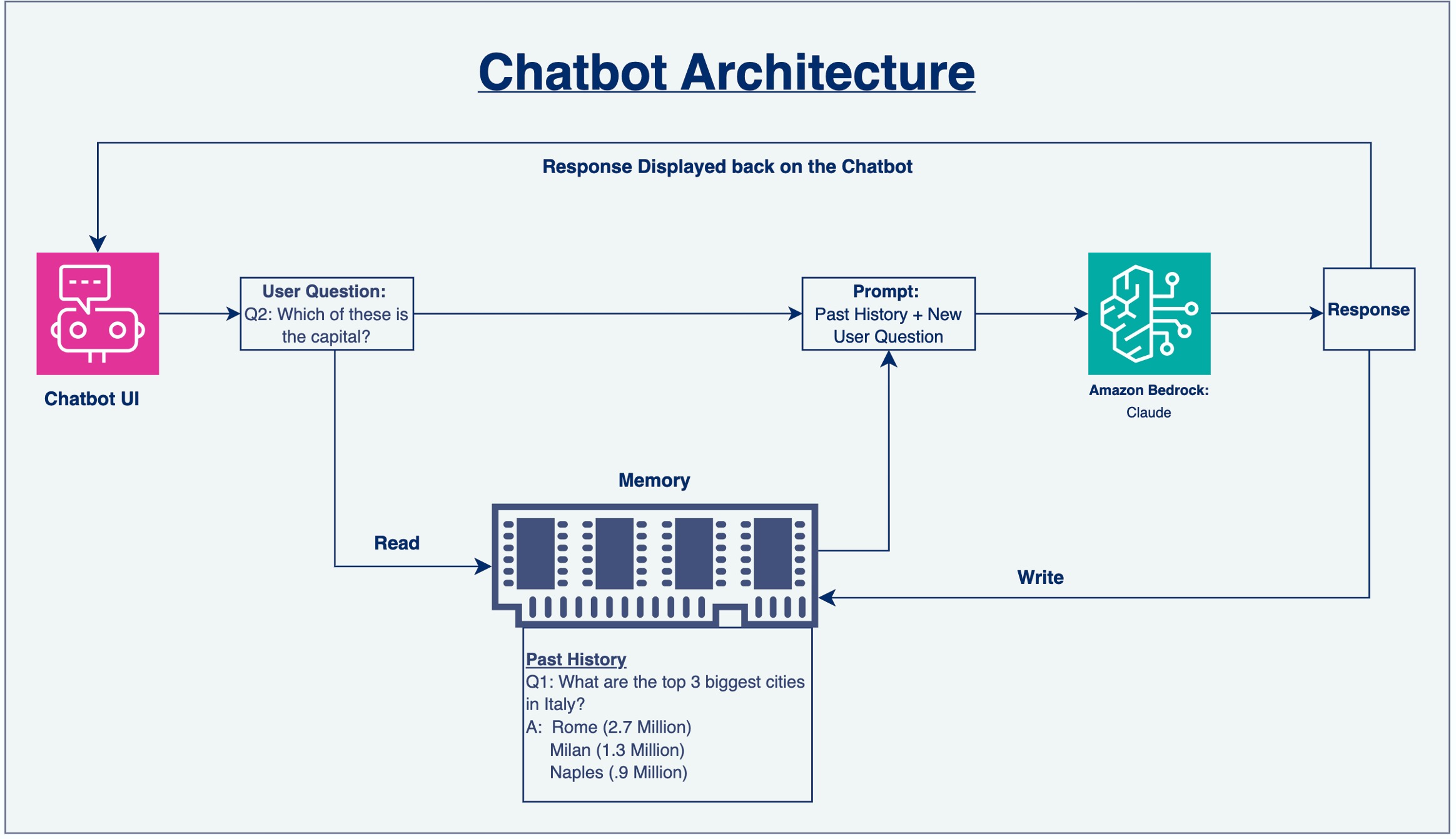

Chatbot Architecture

![chatbot architecture]()

Conceptual Walkthrough of the Architecture

- User interaction: The user initiates the conversation by typing a message into the chat interface created by Streamlit. This message can be a question, a request, or any other form of input the user wishes to provide.

- Input capture and processing: Streamlit's chat input component captures the user's message and passes it on to the LangChain framework for further processing.

- Contextualization with LangChain memory: LangChain maintains the context of the conversation. It combines the user's latest input with the conversation history stored in its memory. With ConversationSummaryBufferMemory, recent turns are kept verbatim and older turns are condensed into a running summary once a token limit is exceeded. This gives the chatbot the information it needs to generate a meaningful, contextually appropriate response.

- Leveraging the LLM: The combined context is then sent to the Claude model on Amazon Bedrock, which interprets the prompt and generates a response that addresses the user's input.

- Response retrieval: LangChain receives the generated response from the LLM and prepares it for presentation to the user.

- Response display: Finally, Streamlit takes the chatbot's response and displays it in the chat window, making it appear as if the chatbot is engaging in a natural conversation with the user. This creates an intuitive and user-friendly experience, encouraging further interaction.
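The steps above can be condensed into a small, dependency-free simulation. This is a toy sketch, not LangChain's implementation. `SummaryBufferMemory`, `chat_turn`, the word-count tokenizer, and the stub LLM and summarizer are all hypothetical stand-ins. The sketch mirrors the idea behind ConversationSummaryBufferMemory (recent turns kept verbatim, the oldest lines folded into a running summary once a token budget is exceeded). It also uses a shortened prompt layout modeled on ConversationChain's default template, which already frames each turn as `Human: ...` followed by `AI:`.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical prompt layout, modeled on (but shorter than) ConversationChain's default template.
PROMPT_TEMPLATE = (
    "The following is a friendly conversation between a human and an AI.\n\n"
    "Current conversation:\n{history}\nHuman: {input}\nAI:"
)


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


@dataclass
class SummaryBufferMemory:
    """Toy summary-buffer memory: recent lines verbatim, older lines folded into a summary."""
    summarize: Callable[[str, List[str]], str]
    max_token_limit: int = 50
    summary: str = ""
    buffer: List[str] = field(default_factory=list)

    def load_history(self) -> str:
        lines = ([f"System: {self.summary}"] if self.summary else []) + self.buffer
        return "\n".join(lines)

    def save_context(self, user_text: str, ai_text: str) -> None:
        self.buffer += [f"Human: {user_text}", f"AI: {ai_text}"]
        pruned: List[str] = []
        # Evict the oldest lines until the verbatim buffer fits the token budget.
        while self.buffer and count_tokens("\n".join(self.buffer)) > self.max_token_limit:
            pruned.append(self.buffer.pop(0))
        if pruned:
            self.summary = self.summarize(self.summary, pruned)


def chat_turn(user_text: str, memory: SummaryBufferMemory, llm: Callable[[str], str]) -> str:
    """One pass through the architecture: contextualize, call the LLM, update memory."""
    # Step 3: combine the stored history with the new input.
    prompt = PROMPT_TEMPLATE.format(history=memory.load_history(), input=user_text)
    reply = llm(prompt)                    # steps 4-5: LLM call and response retrieval
    memory.save_context(user_text, reply)  # keep the turn for the next prompt
    return reply                           # step 6: hand the reply to the UI


def fake_llm(prompt: str) -> str:
    """Stub standing in for Claude on Bedrock."""
    return f"(reply to a {count_tokens(prompt)}-word prompt)"


def fake_summarize(previous: str, lines: List[str]) -> str:
    """Stub standing in for the LLM-written summary."""
    return (previous + " " if previous else "") + f"[{len(lines)} older lines summarized]"


if __name__ == "__main__":
    memory = SummaryBufferMemory(summarize=fake_summarize, max_token_limit=20)
    for question in ["Hi there", "What is Amazon Bedrock?", "And what does LangChain do?"]:
        print(chat_turn(question, memory, fake_llm))
    print(memory.load_history())
```

The trade-off the real memory class makes is the same as here: a larger token limit keeps more exact wording but makes every prompt longer, while a smaller one relies more on the summary.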

Code Snippets

Frontend (Streamlit)

```python
# 1 Imports: the backend module in the next section is assumed to be saved as chatbot_backend.py
import streamlit

import chatbot_backend as demo

# 2 Set the title for the chatbot
streamlit.title("Hi, This is your Chatbot")

# 3 Store the LangChain memory in the session cache (Session State) so it survives reruns
if 'memory' not in streamlit.session_state:
    streamlit.session_state.memory = demo.demo_memory()

# 4 Add the UI chat history to the session cache (Session State)
if 'chat_history' not in streamlit.session_state:
    streamlit.session_state.chat_history = []

# 5 Re-render the chat history
for message in streamlit.session_state.chat_history:
    with streamlit.chat_message(message["role"]):
        streamlit.markdown(message["text"])

# 6 Chatbot input box
input_text = streamlit.chat_input("Powered by Bedrock")

if input_text:
    # Show and store the user's message
    with streamlit.chat_message("user"):
        streamlit.markdown(input_text)
    streamlit.session_state.chat_history.append({"role": "user", "text": input_text})

    # Get the model's reply from the backend (LangChain + Bedrock)
    chat_response = demo.demo_conversation(input_text=input_text,
                                           memory=streamlit.session_state.memory)

    # Show and store the assistant's reply
    with streamlit.chat_message("assistant"):
        streamlit.markdown(chat_response)
    streamlit.session_state.chat_history.append({"role": "assistant", "text": chat_response})
```
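Streamlit reruns the entire script from top to bottom on every interaction, and `st.session_state` is what survives between reruns within a browser session. The dependency-free sketch below mimics that model to show why the `if ... not in session_state` guards matter: without them, the memory and chat history would be reset on every message. It is an illustration only. `run_app_script` is a hypothetical name, a plain dict stands in for `st.session_state`, and an echo reply stands in for `demo.demo_conversation`.

```python
from typing import Any, Dict, List, Optional


def run_app_script(session_state: Dict[str, Any], input_text: Optional[str]) -> List[str]:
    """Mimic one Streamlit rerun of the frontend script; return what would be rendered."""
    rendered: List[str] = []
    # Guarded initialization: only takes effect on the first run of a session.
    if "chat_history" not in session_state:
        session_state["chat_history"] = []
    # Replay the stored history, as the real script does with st.chat_message.
    for message in session_state["chat_history"]:
        rendered.append(f'{message["role"]}: {message["text"]}')
    if input_text:
        reply = f"echo: {input_text}"  # stand-in for demo.demo_conversation(...)
        for role, text in (("user", input_text), ("assistant", reply)):
            rendered.append(f"{role}: {text}")
            session_state["chat_history"].append({"role": role, "text": text})
    return rendered


if __name__ == "__main__":
    state: Dict[str, Any] = {}        # plays the role of st.session_state
    run_app_script(state, None)       # first page load: nothing to show yet
    run_app_script(state, "Hello")    # user sends a message
    print(run_app_script(state, "How are you?"))
```

To run the real app instead, keep chatbot_backend.py in the same directory as the frontend script and launch the frontend with `streamlit run` followed by the script's filename.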

Backend (LangChain and LLM)

```python
import time

import boto3
from langchain.chains import ConversationChain
from langchain.memory import ConversationSummaryBufferMemory
from langchain_aws import ChatBedrock


# 2a Write a function for invoking the model: client connection with Bedrock (region, model_id)
def demo_chatbot():
    # With no explicit keys, boto3 uses its default credential chain
    # (environment variables, ~/.aws config/profile, or an attached IAM role).
    boto3_session = boto3.Session(
        # aws_access_key_id=...,
        # aws_secret_access_key=...,
        region_name='us-east-1'
    )

    llm = ChatBedrock(
        model_id="anthropic.claude-3-sonnet-20240229-v1:0",
        client=boto3_session.client('bedrock-runtime'),
        model_kwargs={
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": 4096,  # Claude 3 Sonnet allows at most 4,096 output tokens
            "temperature": 0.3,
            "top_p": 0.3,
            "stop_sequences": ["\n\nHuman:"]
        }
    )
    return llm


# 3 Create a function for ConversationSummaryBufferMemory (llm and max token limit)
def demo_memory():
    llm_data = demo_chatbot()
    # Recent turns are kept verbatim; once the buffer exceeds max_token_limit,
    # the oldest turns are summarized by the LLM.
    memory = ConversationSummaryBufferMemory(llm=llm_data, max_token_limit=20000)
    return memory


# 4 Create a function for the conversation chain: input text + memory
def demo_conversation(input_text, memory):
    llm_chain_data = demo_chatbot()

    # Initialize ConversationChain with the LLM and the session's memory
    llm_conversation = ConversationChain(llm=llm_chain_data, memory=memory, verbose=True)

    # The default ConversationChain prompt already adds the "Human:"/"AI:" framing,
    # so pass the raw user text.
    llm_start_time = time.time()
    chat_reply = llm_conversation.invoke({"input": input_text})
    llm_elapsed_time = time.time() - llm_start_time
    print(f"LLM call took {llm_elapsed_time:.2f} s")

    # invoke() already saves this turn to memory; saving it again would duplicate it.
    return chat_reply.get('response', 'No Response')
```

Conclusion

We've explored the fundamental building blocks of an interactive chatbot powered by Streamlit, LangChain, and Anthropic's Claude on Amazon Bedrock. The same foundation can be extended to use cases ranging from customer support automation to personalized learning experiences. Feel free to experiment with, enhance, and deploy this chatbot for your specific needs and use cases.

Opinions expressed by DZone contributors are their own.
