Snowflake Cortex Analyst: Unleashing the Power of Conversational AI for Text-to-SQL

Find out more about how conversational AI for text-to-SQL using Snowflake Cortex Analyst unlocks the potential for data-driven decision-making.

Join the DZone community and get the full member experience.

Join For FreeConversational AI

Conversational AI refers to technologies that enable humans to interact with machines using natural language, either through text or voice. This includes chatbots, voice assistants, and other types of conversational interfaces.

Conversational AI for SQL refers to natural language interfaces that enable users to interact with databases using everyday language instead of writing SQL code. This technology allows non-technical users to query and analyze data without requiring extensive SQL knowledge.

Some key aspects of conversational AI for SQL include:

- Natural Language Processing (NLP): Understanding user queries and converting them into SQL

- Intent identification: Recognizing the user's intent behind the query

- Entity recognition: Identifying specific data entities mentioned in the query

- Query generation: Generating accurate SQL queries based on user input

What Is Snowflake Cortex Analyst?

Snowflake Cortex is a suite of machine learning (ML) and artificial intelligence (AI) tools integrated directly into the Snowflake Data Cloud. Cortex enables users to build, deploy, and manage ML models using Snowflake's scalable and secure infrastructure.

The era of conversational AI has revolutionized the way businesses interact with data. Snowflake Cortex Analyst, a cutting-edge platform, has taken this revolution to the next level by enabling text-to-SQL capabilities. In this article, we'll delve into the potential of Conversational AI for text-to-SQL using Snowflake Cortex Analyst and explore its benefits, applications, and implementation strategies.

Benefits of Conversational AI for Text-to-SQL

- Democratization of data: Empowers non-technical users to access and analyze data

- Increased productivity: Reduces time spent on manual querying and data analysis

- Improved accuracy: Eliminates errors associated with manual SQL coding

- Enhanced user experience: Provides an intuitive and conversational interface

Implementation Guide

Now that we have discussed the Conversational AI and Snowflake Cortex capabilities, let's delve into the implementation strategy. For the rest of this article, I will focus on creating a chatbot to answer business questions based on the data available in the Snowflake table as well as write the SQL queries on the go for the business users.

Data Exploration

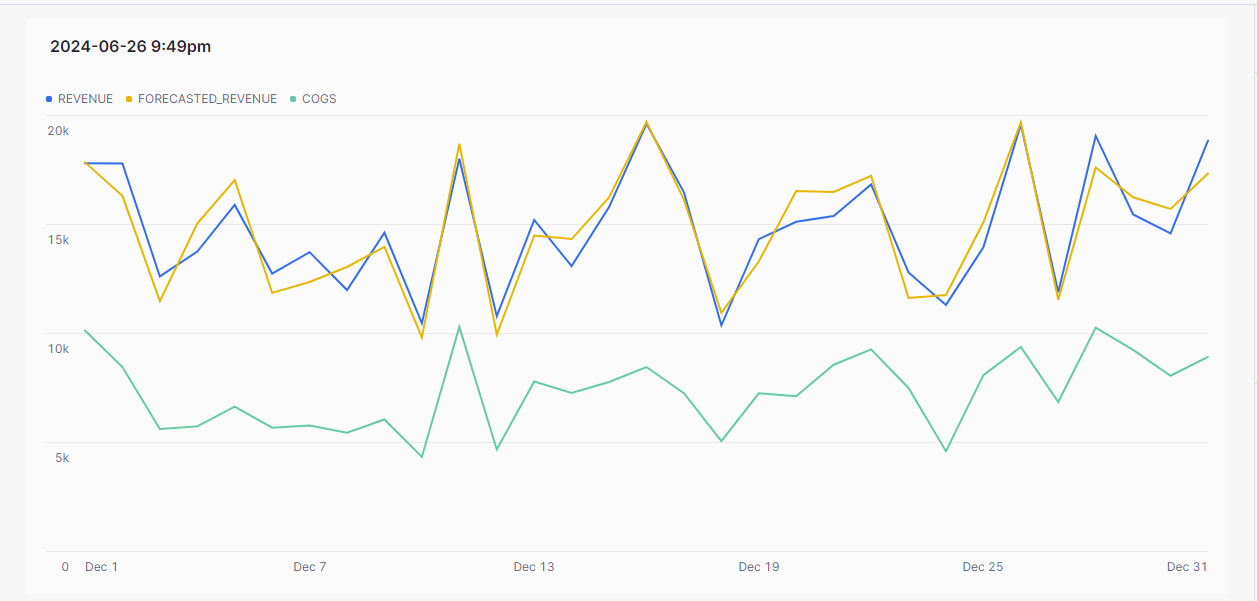

In this section, let's quickly make ourselves familiar with the data we will be using for the demo. I am focusing on daily revenue, cost of goods, and forecasted revenue for a sales organization.

The following query lets you explore the data table.

select * from DAILY_REVENUE WHERE date BETWEEN '2023-12-01' AND '2023-12-31'

Train the AI Model

Having explored the data tables, in this section, we will be focusing on training the model with the existing data and sample queries. Cortex Analyst achieves this part with the help of a semantic file called YAML file. YAML files focus primarily focuses on the data tables, measures, dimensions, time dimensions, and verified queries mapped to the business questions.

For this demo, we would train the model to answer the following questions.

- For each month, what was the lowest daily revenue and on what date did that lowest revenue occur?

- What were daily cumulative expenses in Dec 2023?

The following YAML code block would train the model to answer the above questions as well as write SQLs for the business users.

name: Revenue

tables:

- name: daily_revenue

description: Daily total revenue, aligned with daily "Cost of Goods Sold" (COGS), and forecasted revenue.

base_table:

database: SUPERSTORE

schema: SUPERSTORE_ML_FUNCTIONS

table: daily_revenue

time_dimensions:

- name: date

expr: date

description: date with measures of revenue, COGS, and forecasted revenue.

unique: true

data_type: date

measures:

- name: daily_revenue

expr: revenue

description: total revenue for the given day

synonyms: ["sales", "income"]

default_aggregation: sum

data_type: number

- name: daily_cogs

expr: cogs

description: total cost of goods sold for the given day

synonyms: ["cost", "expenditures"]

default_aggregation: sum

data_type: number

- name: daily_forecasted_revenue

expr: forecasted_revenue

description: total forecasted revenue for a given day

synonyms: ["forecasted sales", "forecasted income"]

default_aggregation: sum

data_type: number

- name: daily_profit

description: profit is the difference between revenue and expenses.

expr: revenue - cogs

data_type: number

- name: daily_forecast_abs_error

synonyms:

- absolute error

- L1

description: absolute error between forecasted and actual revenue

expr: abs(forecasted_revenue - revenue)

data_type: number

default_aggregation: avg

verified_queries:

- name: "daily cumulative expenses in 2023 dec"

question: "daily cumulative expenses in 2023 dec"

verified_at: 1714752498

verified_by: kapils

sql: "

SELECT

date,

SUM(daily_cogs) OVER (

ORDER BY

date ROWS BETWEEN UNBOUNDED PRECEDING

AND CURRENT ROW

) AS cumulative_cogs

FROM

__daily_revenue

WHERE

date BETWEEN '2023-12-01'

AND '2023-12-31'

ORDER BY

date DESC;

"

- name: "lowest revenue each month"

question: For each month, what was the lowest daily revenue and on what date did

that lowest revenue occur?

sql: "WITH monthly_min_revenue AS (

SELECT

DATE_TRUNC('MONTH', date) AS month,

MIN(daily_revenue) AS min_revenue

FROM __daily_revenue

GROUP BY

DATE_TRUNC('MONTH', date)

)

SELECT

mmr.month,

mmr.min_revenue,

dr.date AS min_revenue_date

FROM monthly_min_revenue AS mmr JOIN __daily_revenue AS dr

ON mmr.month = DATE_TRUNC('MONTH', dr.date) AND mmr.min_revenue = dr.daily_revenue

ORDER BY mmr.month DESC NULLS LAST"

verified_at: 1715187400

verified_by: kapilsOnce we have the YAML file ready, the next step is to upload the file into Snowflake STAGE using Snowflake's out-of-the-box features.

Develop Conversational Interface

In this section, I will focus on designing a quick chatbot application using Python Streamlit. This application will act as an interface between the business users and the trained data models to answer critical business questions with simple English statements.

The following Python code would help design the chatbot.

from typing import Any, Dict, List, Optional

import pandas as pd

import requests

import snowflake.connector

import streamlit as st

DATABASE = "SUPERSTORE"

SCHEMA = "SUPERSTORE_ML_FUNCTIONS"

STAGE = "RAW_DATA"

FILE = "sales_timeseries.yaml"

WAREHOUSE = "COMPUTE_WH"

# replace values below with your Snowflake connection information

HOST = "XXXXXXXXX.snowflakecomputing.com" #Snowlfake host details

ACCOUNT = "XXXXXXXXXX" #Snowflake Account Name

USER = "XXXXXXXXX" #Enter your username here

PASSWORD = "XXXXXXXX" #Enter your password here

ROLE = "ACCOUNTADMIN"

if 'CONN' not in st.session_state or st.session_state.CONN is None:

st.session_state.CONN = snowflake.connector.connect(

user=USER,

password=PASSWORD,

account=ACCOUNT,

host=HOST,

port=443,

warehouse=WAREHOUSE,

role=ROLE,

)

def send_message(prompt: str) -> Dict[str, Any]:

"""Calls the REST API and returns the response."""

request_body = {

"messages": [{"role": "user", "content": [{"type": "text", "text": prompt}]}],

"semantic_model_file": f"@{DATABASE}.{SCHEMA}.{STAGE}/{FILE}",

}

resp = requests.post(

url=f"https://{HOST}/api/v2/cortex/analyst/message",

json=request_body,

headers={

"Authorization": f'Snowflake Token="{st.session_state.CONN.rest.token}"',

"Content-Type": "application/json",

},

)

request_id = resp.headers.get("X-Snowflake-Request-Id")

if resp.status_code < 400:

return {**resp.json(), "request_id": request_id} # type: ignore[arg-type]

else:

raise Exception(

f"Failed request (id: {request_id}) with status {resp.status_code}: {resp.text}"

)

def process_message(prompt: str) -> None:

"""Processes a message and adds the response to the chat."""

st.session_state.messages.append(

{"role": "user", "content": [{"type": "text", "text": prompt}]}

)

with st.chat_message("user"):

st.markdown(prompt)

with st.chat_message("assistant"):

with st.spinner("Generating response..."):

response = send_message(prompt=prompt)

request_id = response["request_id"]

content = response["message"]["content"]

display_content(content=content, request_id=request_id) # type: ignore[arg-type]

st.session_state.messages.append(

{"role": "assistant", "content": content, "request_id": request_id}

)

def display_content(

content: List[Dict[str, str]],

request_id: Optional[str] = None,

message_index: Optional[int] = None,

) -> None:

"""Displays a content item for a message."""

message_index = message_index or len(st.session_state.messages)

if request_id:

with st.expander("Request ID", expanded=False):

st.markdown(request_id)

for item in content:

if item["type"] == "text":

st.markdown(item["text"])

elif item["type"] == "suggestions":

with st.expander("Suggestions", expanded=True):

for suggestion_index, suggestion in enumerate(item["suggestions"]):

if st.button(suggestion, key=f"{message_index}_{suggestion_index}"):

st.session_state.active_suggestion = suggestion

elif item["type"] == "sql":

with st.expander("SQL Query", expanded=False):

st.code(item["statement"], language="sql")

with st.expander("Results", expanded=True):

with st.spinner("Running SQL..."):

df = pd.read_sql(item["statement"], st.session_state.CONN)

if len(df.index) > 1:

data_tab, line_tab, bar_tab = st.tabs(

["Data", "Line Chart", "Bar Chart"]

)

data_tab.dataframe(df)

if len(df.columns) > 1:

df = df.set_index(df.columns[0])

with line_tab:

st.line_chart(df)

with bar_tab:

st.bar_chart(df)

else:

st.dataframe(df)

st.title("Cortex Analyst")

st.markdown(f"Semantic Model: `{FILE}`")

if "messages" not in st.session_state:

st.session_state.messages = []

st.session_state.suggestions = []

st.session_state.active_suggestion = None

for message_index, message in enumerate(st.session_state.messages):

with st.chat_message(message["role"]):

display_content(

content=message["content"],

request_id=message.get("request_id"),

message_index=message_index,

)

if user_input := st.chat_input("What is your question?"):

process_message(prompt=user_input)

if st.session_state.active_suggestion:

process_message(prompt=st.session_state.active_suggestion)

st.session_state.active_suggestion = NoneMonitor and Refine

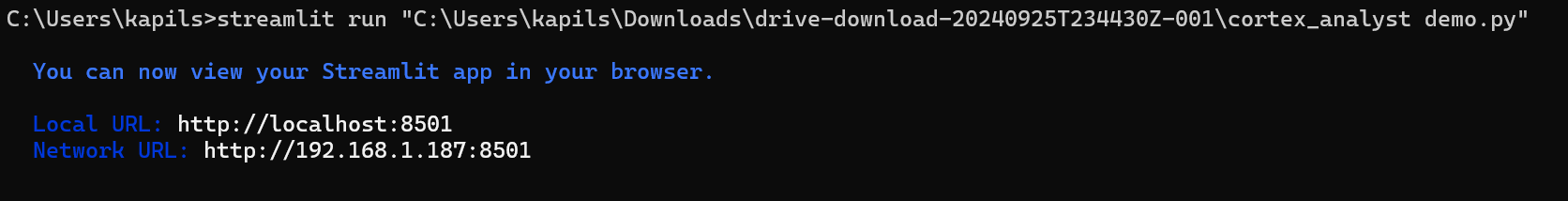

Now that we have the Data Model and Chatbot designed, let's try and monitor the results. If you are running this on your local machine, please install all the necessary Python libraries.

The following command would launch the Streamlit Chatbot application.

streamlit run "C:\demo\cortex_analyst demo.py"

Conclusion

Conversational AI for text-to-SQL using Snowflake Cortex Analyst unlocks unprecedented potential for data-driven decision-making. By bridging the data gap and empowering non-technical users, businesses can:

- Enhance productivity

- Improve accuracy

- Foster data-driven culture

Opinions expressed by DZone contributors are their own.

Comments