LLMOps: The Future of AI Model Management

LLMOps extends MLOps for generative AI, focusing on prompt and RAG management to boost efficiency and scalability and to streamline deployment while tackling resource and complexity challenges.

Before we dive into LLMOps, let's first understand what MLOps is. MLOps (Machine Learning Operations) provides a comprehensive platform for all aspects of developing, training, evaluating, and deploying machine learning products, covering management areas such as infrastructure, data, workflows and pipelines, models, experiments, and interactive processes.

Integrating Large Language Models (LLMs) into MLOps has the potential to revolutionize the management of generative artificial intelligence (GenAI) models. LLMOps extends MLOps by focusing specifically on GenAI tasks, including prompt management, agent management, and Retrieval-Augmented Generation Operations (RAGOps). RAGOps, an extension of LLMOps, covers the management of both documents and databases so that GenAI models can draw on external information.
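To make prompt management a little more concrete, here is a minimal sketch of a versioned prompt store. The `PromptRegistry` class, its method names, and the example prompts are all hypothetical and do not come from any particular LLMOps product; the sketch only illustrates keeping every version of a prompt so that a deployment can pin or roll back to a known one.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store that keeps every version of each prompt."""

    _versions: dict[str, list[str]] = field(default_factory=dict)

    def register(self, name: str, template: str) -> int:
        """Store a new version of a prompt and return its 1-based version number."""
        self._versions.setdefault(name, []).append(template)
        return len(self._versions[name])

    def render(self, name: str, version: Optional[int] = None, **variables: str) -> str:
        """Fill in a stored template; uses the latest version unless one is pinned."""
        history = self._versions[name]
        template = history[-1] if version is None else history[version - 1]
        return Template(template).substitute(**variables)


registry = PromptRegistry()
v1 = registry.register("summarize", "Summarize this text: $text")
v2 = registry.register("summarize", "Summarize in one sentence for a $audience reader: $text")

# The latest version is used by default; an older one can be pinned for rollback.
latest = registry.render("summarize", audience="non-technical", text="LLMOps extends MLOps.")
pinned = registry.render("summarize", version=1, text="LLMOps extends MLOps.")
```

In practice the versions would live in a database or in source control rather than in memory, but the idea of addressing prompts by name and version stays the same.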

LLMOps Benefits

LLMOps can substantially reduce the workload of running GenAI and raise its efficiency, and it makes GenAI more scalable and easier to implement over time. From an operational viewpoint, LLMOps can also support sustainability efforts by ensuring that data is documented and that costs and processing are monitored.

LLMOps also helps boost model performance: accuracy, speed, and resource utilization are among the areas that benefit when LLMOps is applied to GenAI. It helps with fine-tuning on domain-specific data, applying different inference techniques to improve memory use and load times, making good use of hardware and software capabilities during training, and streamlining processing. RAGOps can supply LLMs with higher-quality data, which expands the range of questions LLMs can answer and handle. LLM chaining is another LLMOps capability: it lets GenAI handle complex, multi-turn tasks by portioning off sub-tasks to appropriate models, such as models for language or response generation.

These improvements can lead to directly measurable results. One case saw a 25% increase in click-through rate (CTR) and a 15% boost in sales; another saw a 20% decrease in operational time and a 95% delivery success rate.
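The LLM chaining idea described above can be sketched in a few lines. This is an illustration under stated assumptions, not a real framework: `detect_language` and `generate_response` are hypothetical stand-ins for deployed models, and `run_chain` simply passes the output of one sub-task into the next.

```python
from typing import Callable

# A "model" here is any callable from text to text. The two functions below are
# hypothetical stand-ins; a real chain would call deployed models instead.
Model = Callable[[str], str]


def detect_language(text: str) -> str:
    """Stand-in for a small language-identification model."""
    return "es" if "hola" in text.lower() else "en"


def generate_response(prompt: str) -> str:
    """Stand-in for a larger response-generation model."""
    return f"[response to: {prompt}]"


def run_chain(user_message: str, language_model: Model, response_model: Model) -> str:
    """Route each sub-task to its own model and pass results along the chain."""
    language = language_model(user_message)            # sub-task 1: identify the language
    prompt = f"Reply in '{language}': {user_message}"  # build the next model's input
    return response_model(prompt)                      # sub-task 2: generate the reply


reply = run_chain("Hola, necesito ayuda", detect_language, generate_response)
print(reply)
```

Because each step is just a callable, a cheaper model can be swapped in for one sub-task without touching the rest of the chain.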

LLMOps Processes and Use

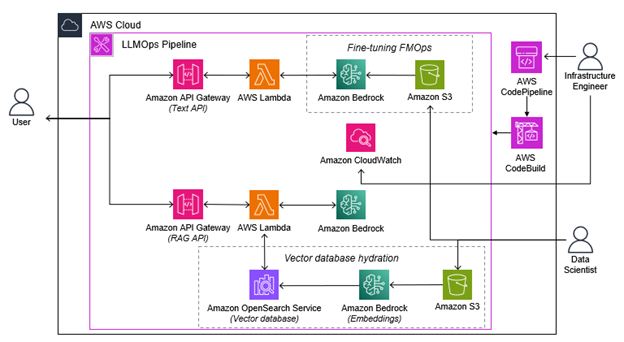

LLMOps can be broken down into a typical series of three steps, as shown in the AWS diagram above.

These three steps are integration, deployment, and tuning. Integration combines all versions of an application’s code into a single, tested version. Deployment moves the infrastructure and model into quality assurance and production environments, where performance is evaluated. Finally, tuning provides additional data to optimize the model through pre-processing, tuning, evaluation, and registration.

A model can be customized through a variety of methods, including fine-tuning, pretraining, and RAG. Fine-tuning uses your own data to adjust the model; pretraining trains the model on a repository of unlabeled data; and RAG indexes data in a vector database, then uses search to find the relevant information to send to the model at generation time.
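The retrieval half of RAG can be illustrated with a toy example. The sketch below is deliberately simplified: word counts stand in for a learned embedding model, a Python list stands in for the vector database, and the documents and function names are hypothetical. It shows only the flow of index, search, and prompt assembly.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical documents, indexed once up front (standing in for the vector database).
documents = [
    "Fine-tuning adjusts a model using your own data.",
    "Pretraining trains a model on a repository of unlabeled data.",
    "RAG retrieves indexed documents and sends them to the model.",
]
index = [(doc, embed(doc)) for doc in documents]


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k indexed documents most similar to the question."""
    query = embed(question)
    ranked = sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(question: str) -> str:
    """Prepend the retrieved context to the question before calling the model."""
    context = "\n".join(retrieve(question))
    return f"Context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("What does pretraining use unlabeled data for?")
print(prompt)
```

The model itself is untouched here, which is the practical difference from fine-tuning and pretraining: only the text sent to the model changes.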

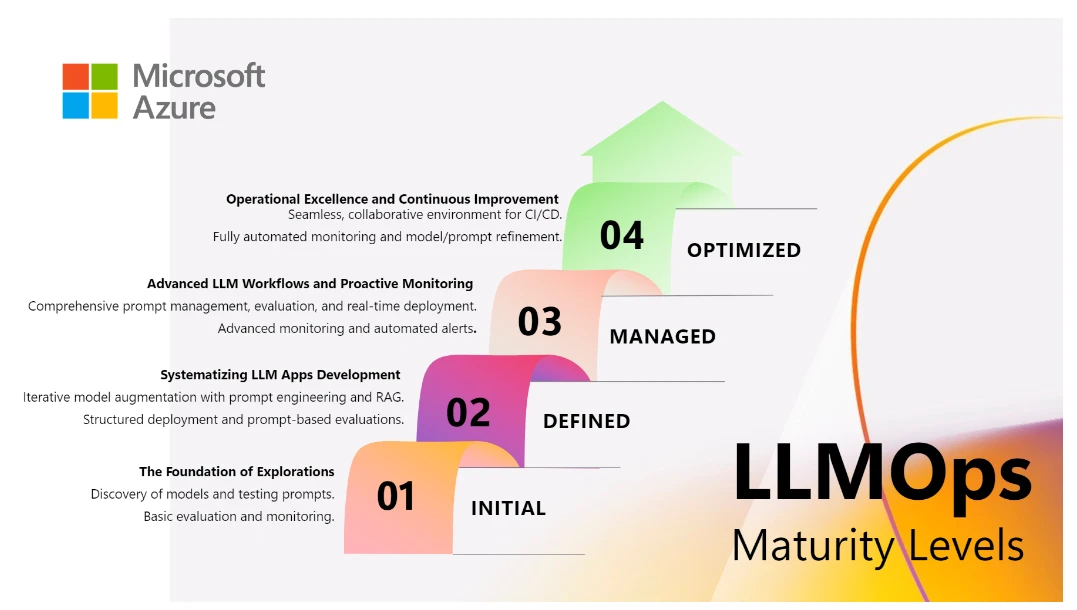

Azure breaks the process down in a similar way but uses four stages: initialization, experimentation, evaluation and refinement, and deployment. The main difference is that initialization explicitly defines an objective, along with structure and flow. Azure’s LLMOps prompt flow allows for centralized code hosting, lifecycle management, multiple deployment targets, A/B deployment, conditional data and model registration, and comprehensive reporting.
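A/B deployment, one of the capabilities listed above, comes down to splitting traffic between two deployed variants in a stable way. The following is a generic sketch and not Azure's prompt flow API: the `assign_variant` function, the 10% share, and the user IDs are assumptions chosen for illustration.

```python
import hashlib


def assign_variant(user_id: str, share_b: float = 0.1) -> str:
    """Deterministically route a user to deployment 'A' or 'B'.

    Hashing the user ID keeps each user on the same variant across requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform value in [0, 1)
    return "B" if bucket < share_b else "A"


# Hypothetical traffic: 10,000 users with about 10% targeted at variant B.
assignments = [assign_variant(f"user-{i}") for i in range(10_000)]
observed_share_b = assignments.count("B") / len(assignments)
print(f"share routed to B: {observed_share_b:.3f}")
```

Hashing instead of random sampling means no per-user state has to be stored, and raising `share_b` gradually moves more users to the new variant without reshuffling those already on it.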

As Red Hat explains, LLMOps can greatly simplify the model deployment stage, and automated deployment is a significant part of LLMOps. Data collection and monitoring also become much easier. Exploratory data analysis runs from collection through cleaning to exploration, before the data is prepared and fed into prompt engineering. Machine learning learns patterns in the data, and a separate set of data is used to evaluate the LLM. Fine-tuning sometimes goes through several rounds to improve performance. Review procedures help remove bias and security risks, and performance continues to be tracked for the rest of the LLM’s operational life. REST APIs can make the LLM available to applications for functions such as text generation and question answering. Human feedback can help improve performance over the long term.

Best Practices to Follow to Help Minimize Struggle Points for LLMOps

Some common struggle points emerge when using LLMOps, but there are best practices that help minimize them. These struggles may include intensive resource requirements, the large amounts of data required for training, difficulty interpreting complex models, and privacy and ethical considerations.

Building a resilient infrastructure for the LLM to run on is crucial. It can be public cloud, multi-cloud, or internally managed, but a hybrid-cloud approach could help with the financial burden. Because of the complexity and data volumes involved, choosing dedicated rather than universal solutions is advised to allow for more contained scalability. Well-maintained documentation greatly assists with any governance issues that arise, because it ensures that the training data, training procedures, and monitoring protocols are recorded and readily available when needed. Logging capabilities, in conjunction with monitoring, are also advisable to assist with governance and debugging. Logs can help trace issues back to their root cause, and an evaluation dataset can be used to observe the outputs produced for particular inputs. Essentially, as long as high-quality data is used, models are trained with appropriate algorithms, deployed with security in mind, and monitored in real time, many struggle points can be overcome.
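As a rough illustration of the logging and evaluation-dataset practices above, the sketch below wraps each model call in a structured log record and replays a small evaluation set to collect mismatches. Everything in it is assumed for the example: `fake_model` stands in for a deployed LLM, and the logger name, record fields, and evaluation pairs are hypothetical.

```python
import json
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("llmops.audit")  # hypothetical logger name


def fake_model(prompt: str) -> str:
    """Stand-in for a deployed LLM so the sketch runs without a model."""
    return prompt.upper()


def logged_call(prompt: str) -> dict:
    """Call the model and emit one structured log record per request."""
    start = time.perf_counter()
    output = fake_model(prompt)
    record = {
        "prompt": prompt,
        "output": output,
        "latency_ms": round((time.perf_counter() - start) * 1000, 3),
    }
    logger.info(json.dumps(record))  # one JSON object per line is easy to search later
    return record


# Hypothetical evaluation dataset: inputs paired with the outputs we expect.
eval_set = [("hello", "HELLO"), ("llmops", "LLMOPS"), ("rag", "Rag")]

failures = []
for prompt, expected in eval_set:
    record = logged_call(prompt)
    if record["output"] != expected:
        failures.append((prompt, expected, record["output"]))

print(f"{len(failures)} of {len(eval_set)} evaluation cases failed: {failures}")
```

Because every request leaves a record with its input, output, and latency, a failed evaluation case can be traced back to the exact call that produced it.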

The Verdict: LLMOps Can Largely Affect the Future of AI Model Management

LLMOps is an extension of MLOps that can greatly benefit the future of GenAI by easing model deployment, enhancing scalability, which is at a critical juncture, and improving resource management to increase efficiency. Based on different usage types, there are specific profiles of LLMOps users that help explain their involvement with GenAI. GenAI developers, for example, are involved with evaluation and testing as well as prompt engineering and results management.

The LLMOps maturity model homes in on the fact that developing and operationally deploying such models involves significant complexity. Level one covers simple understanding and basic prompts. Level two extends to systematic methods and comprehensive prompt flow, including RAG. Level three moves into prompt engineering that is more tailored to needs and more automated; monitoring and maintenance also come in at this stage. The final level involves iterative changes that bring the model to peak performance, along with serious fine-tuning and evaluation. This model helps scale LLMOps, which in turn helps scale GenAI applications.

Opinions expressed by DZone contributors are their own.
