AWS Fargate: Deploying Jakarta EE Applications on Serverless Infrastructures

This article demonstrates how to make Jakarta EE run-times, application servers, and applications lighter and cheaper to operate by deploying them on AWS serverless infrastructure.


Jakarta EE is a widely adopted, and arguably the most popular, Java framework for enterprise-grade software development. With the industry-wide adoption of microservices-based architectures, its popularity keeps growing, and in recent years it has become a preferred framework for developing professional enterprise applications and services in Java.

Jakarta EE applications have traditionally been deployed on run-times or application servers such as WildFly, GlassFish, Payara, JBoss EAP, WebLogic, WebSphere, and others, which have been criticized for their apparent heaviness and high costs. With the advent and ubiquity of the cloud, these constraints are becoming less restrictive, especially thanks to serverless technology, which offers greater flexibility at standard, low costs.


Overview of AWS Fargate

As documented in the AWS Fargate User Guide, AWS Fargate is a serverless technology used in conjunction with AWS ECS (Elastic Container Service) to run containerized applications without managing servers. In a nutshell, it allows us to:

- Package applications in containers

- Specify the operating system, the CPU architecture and capacity, the memory requirements, the networking, and the security policies

- Run the whole resulting stack in the cloud.

Running containers with AWS ECS involves choosing a so-called launch type (i.e., an abstraction layer) that defines how standalone tasks and services are executed. AWS ECS supports several launch types, and Fargate is one of them: it is the serverless way to host AWS ECS workloads and relies on components such as clusters, tasks, and services, as explained in the AWS Fargate User Guide.

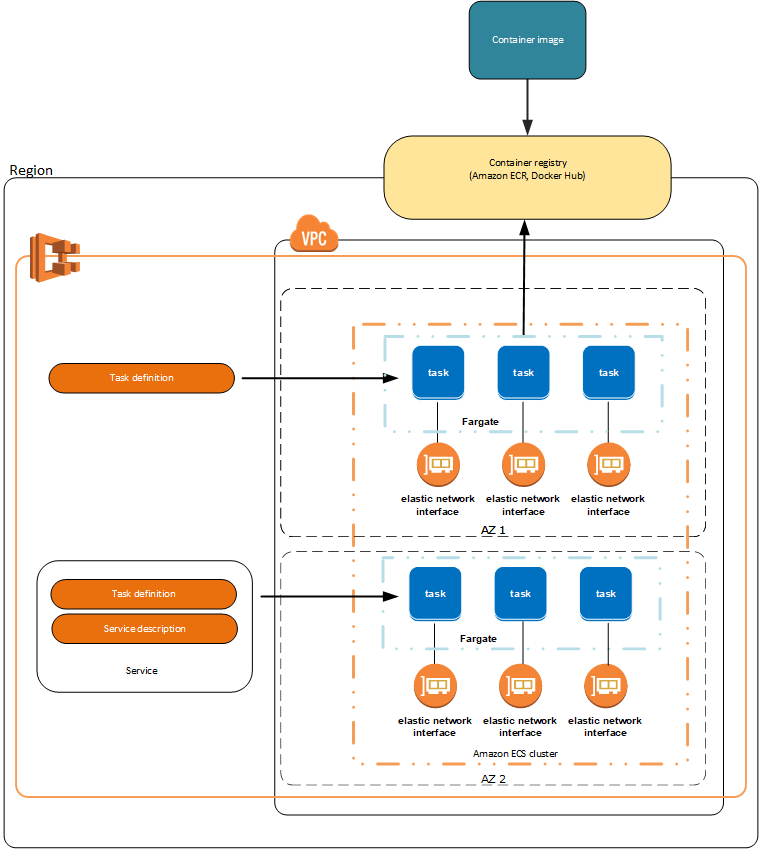

The figure below, extracted from the AWS Fargate documentation, is emphasizing its general architecture:

As the figure above shows, deploying serverless applications running as ECS containers requires a fairly complex infrastructure consisting of:

- A VPC (Virtual Private Cloud)

- An ECR (Elastic Container Registry)

- An ECS cluster

- A Fargate launch type per ECS cluster node

- One or more tasks per Fargate launch type

- An ENI (Elastic Network Interface) per task
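To relate these components to a running deployment, the AWS CLI can list the tasks that an ECS service runs with the Fargate launch type and show the ENI attached to each of them. The following is a minimal sketch, assuming the AWS CLI v2 is installed and configured; the cluster and service names are placeholders passed as arguments, and the `run`/`DRY_RUN` helper is a convention of this sketch that prints commands instead of executing them.

```shell
#!/usr/bin/env bash
# Sketch: show the Fargate tasks of an ECS service and the ENI of each task.
# Usage: ./fargate-tasks.sh <cluster> <service>   (placeholder names)
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (sketch convention).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Task ARNs started by the service with the Fargate launch type.
list_fargate_tasks() {
  local cluster="$1" service="$2"
  run aws ecs list-tasks --cluster "$cluster" --service-name "$service" \
    --launch-type FARGATE --query 'taskArns[]' --output text
}

# Attachment details of the tasks: with awsvpc networking, each task gets its own ENI.
describe_task_enis() {
  local cluster="$1"
  shift
  run aws ecs describe-tasks --cluster "$cluster" --tasks "$@" \
    --query 'tasks[].attachments[].details' --output table
}

if [ "$#" -eq 2 ]; then
  tasks="$(list_fargate_tasks "$1" "$2")"
  if [ -n "$tasks" ] && [ "$tasks" != "None" ]; then
    # Intentional word splitting: one argument per task ARN.
    # shellcheck disable=SC2086
    describe_task_enis "$1" $tasks
  else
    echo "No Fargate task found for service $2" >&2
  fi
fi
```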

Now, if we want to deploy Jakarta EE applications in the AWS serverless cloud as ECS-based containers, we need to:

- Package the application as a WAR.

- Create a Docker image containing the Jakarta EE-compliant run-time or application server with the WAR deployed.

- Push this Docker image to an ECR repository.

- Define a task to run the Docker container built from the previously defined image.

The AWS console allows us to perform all these operations in a user-friendly way; nevertheless, the process is quite time-consuming and laborious. We could, of course, automate it using AWS CloudFormation or even the AWS CLI, but the good news is that we have a much better alternative, as explained below.
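For comparison, the image build, registration, and task definition steps listed above could look roughly like this with the AWS CLI and Docker. This is a minimal sketch, not a complete deployment: it assumes the AWS CLI v2 and Docker are installed, that it runs from the directory containing the generated `test` project, and that a task execution role allowing ECR pulls already exists. The account ID, region, repository, task family, role ARN, and container name `payara` are placeholders; the cluster, VPC, subnets, and ECS service would still have to be created.

```shell
#!/usr/bin/env bash
# Sketch of the manual route: push the Payara image to ECR, then register a Fargate task definition.
# Usage: ./manual.sh <account-id> <region> <repository> <task-family> <execution-role-arn>
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (sketch convention).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Registry host, e.g. 123456789012.dkr.ecr.eu-west-3.amazonaws.com
ecr_registry() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

push_image() {
  local account="$1" region="$2" repo="$3" tag="$4" registry
  registry="$(ecr_registry "$account" "$region")"
  run aws ecr create-repository --repository-name "$repo" --region "$region"
  if [ "${DRY_RUN:-0}" != "1" ]; then
    # Log Docker in to the private registry; the token is passed on stdin.
    aws ecr get-login-password --region "$region" |
      docker login --username AWS --password-stdin "$registry"
  fi
  # Build context: the generated project, whose Dockerfile copies ./target/test.war.
  run docker build -t "$registry/$repo:$tag" test
  run docker push "$registry/$repo:$tag"
}

register_task() {
  local family="$1" image="$2" role_arn="$3"
  # 0.5 vCPU and 1 GB on Linux/x86_64; awsvpc is the network mode Fargate requires.
  run aws ecs register-task-definition --family "$family" \
    --requires-compatibilities FARGATE --network-mode awsvpc \
    --cpu 512 --memory 1024 \
    --runtime-platform operatingSystemFamily=LINUX,cpuArchitecture=X86_64 \
    --execution-role-arn "$role_arn" \
    --container-definitions "[{\"name\":\"payara\",\"image\":\"$image\",\"portMappings\":[{\"containerPort\":8080}]}]"
}

if [ "$#" -eq 5 ]; then
  push_image "$1" "$2" "$3" latest
  register_task "$4" "$(ecr_registry "$1" "$2")/$3:latest" "$5"
fi
```

Even in this reduced form, the networking and service creation are still missing, which is exactly the work AWS Copilot takes over.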

Overview of AWS Copilot

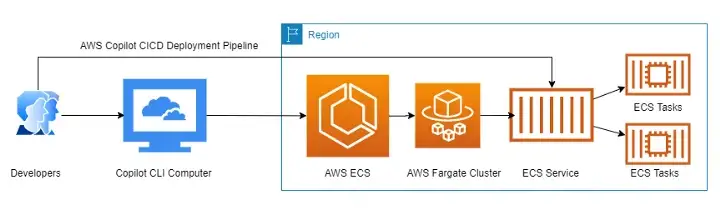

AWS Copilot is a CLI (Command Line Interface) tool that provides application-first, high-level commands to simplify modeling, creating, releasing, and managing production-ready containerized applications on Amazon ECS from a local development environment. The figure below shows its software architecture:

Using AWS Copilot, developers can easily manage the required AWS infrastructure from their local machine by running simple commands that create the deployment pipelines and provision all the resources listed above. In addition, AWS Copilot can also create extra resources such as subnets, security groups, load balancers, and others. Here is how.

Deploying Payara 6 Applications on AWS Fargate

Installing AWS Copilot is as easy as downloading and unzipping an archive, as described in the documentation. Once it is installed, run the command below to check that everything works:

~$ copilot --version

copilot version: v1.24.0
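Copilot builds images with Docker and needs AWS credentials, and this walkthrough also relies on Maven. A short preflight check can save a failed run later. The sketch below assumes the AWS CLI is installed; it is used here only to confirm which account the default credentials point to.

```shell
#!/usr/bin/env bash
# Preflight sketch: verify the tools used in this walkthrough before starting.
# Usage: ./preflight.sh check
set -euo pipefail

# Succeed only if every named command is on the PATH; report the missing ones.
require_cmds() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

if [ "${1:-}" = "check" ]; then
  require_cmds copilot docker mvn aws
  copilot --version
  docker info >/dev/null   # fails if the Docker daemon is not running
  # Prints the account ID the default credentials resolve to.
  aws sts get-caller-identity --query Account --output text
fi
```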

The first thing to do in order to deploy a Jakarta EE application is to develop and package it. For test purposes, a very simple way to do that is to use the Maven archetype jakartaee10-basic-archetype, as shown below:

mvn -B archetype:generate \
-DarchetypeGroupId=fr.simplex-software.archetypes \
-DarchetypeArtifactId=jakartaee10-basic-archetype \
-DarchetypeVersion=1.0-SNAPSHOT \
-DgroupId=com.exemple \
-DartifactId=test

This Maven archetype generates a simple but complete Jakarta EE 10 project, with all the dependencies and artifacts required to deploy it on Payara 6. It also generates all the components required to run integration tests against the exposed JAX-RS API (for more information on this archetype, please see its documentation). Among the generated artifacts, the following Dockerfile will be of real help in our AWS Fargate cluster setup:

FROM payara/server-full:6.2022.1
COPY ./target/test.war $DEPLOY_DIR

Now that we have our test Jakarta EE application, as well as the Dockerfile required to run Payara Server 6 with this application deployed, let's use AWS Copilot to start creating the serverless infrastructure. Simply run the following command:

$ copilot init

Note: It's best to run this command in the root of your Git repository.

Welcome to the Copilot CLI! We're going to walk you through some questions

to help you get set up with a containerized application on AWS. An application is a collection of

containerized services that operate together.

Application name: jakarta-ee-10-app

Workload type: Load Balanced Web Service

Service name: lb-ws

Dockerfile: test/Dockerfile

parse EXPOSE: no EXPOSE statements in Dockerfile test/Dockerfile

Port: 8080

Ok great, we'll set up a Load Balanced Web Service named lb-ws in application jakarta-ee-10-app listening on port 8080.

* Proposing infrastructure changes for stack jakarta-ee-10-app-infrastructure-roles

- Creating the infrastructure for stack jakarta-ee-10-app-infrastructure-roles [create complete] [76.2s]

- A StackSet admin role assumed by CloudFormation to manage regional stacks [create complete] [34.0s]

- An IAM role assumed by the admin role to create ECR repositories, KMS keys, and S3 buckets [create complete] [33.3s]

* The directory copilot will hold service manifests for application jakarta-ee-10-app.

* Wrote the manifest for service lb-ws at copilot/lb-ws/manifest.yml

Your manifest contains configurations like your container size and port (:8080).

- Update regional resources with stack set "jakarta-ee-10-app-infrastructure" [succeeded] [0.0s]

All right, you're all set for local development.

Deploy: No

No problem, you can deploy your service later:

- Run `copilot env init` to create your environment.

- Run `copilot deploy` to deploy your service.

- Be a part of the Copilot community !

Ask or answer a question, submit a feature request...

Visit https://aws.github.io/copilot-cli/community/get-involved/ to see how!

The infrastructure creation process driven by AWS Copilot is based on a dialog in which the tool asks questions and records your answers.

The first question concerns the name of the serverless application to be deployed; we name it jakarta-ee-10-app. Next, AWS Copilot asks for the workload type of the new service and proposes a list of workload types, from which we select Load Balanced Web Service. We name this new service lb-ws.

Then, AWS Copilot looks for Dockerfiles in the local workspace and displays a list from which you can choose one, create a new one, or use an existing image, in which case you need to provide its location (e.g., a Docker Hub URL). We choose the Dockerfile generated earlier by the Maven archetype.

It only remains to define the TCP port number that the newly created service will use for HTTP communication. Since the Dockerfile has no EXPOSE instruction (hence the "parse EXPOSE" message in the output), AWS Copilot cannot infer the port: it proposes TCP port 80 by default, which we override with 8080.
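The same answers can also be passed as flags, which makes this step repeatable, for instance in a CI job. A minimal sketch reusing the names chosen above; any answer not given as a flag (such as whether to deploy a test environment) may still be prompted for, and `DRY_RUN` is a convention of this sketch.

```shell
#!/usr/bin/env bash
# Sketch: the copilot init dialog above, expressed as command-line flags.
# Usage: ./init.sh init   (run from the directory that contains test/Dockerfile)
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (sketch convention).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

APP=jakarta-ee-10-app
SVC=lb-ws

init_app() {
  # The port is given explicitly: the Dockerfile has no EXPOSE instruction to infer it from.
  run copilot init --app "$APP" --name "$SVC" \
    --type "Load Balanced Web Service" \
    --dockerfile test/Dockerfile --port 8080
}

if [ "${1:-}" = "init" ]; then
  init_app
fi
```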

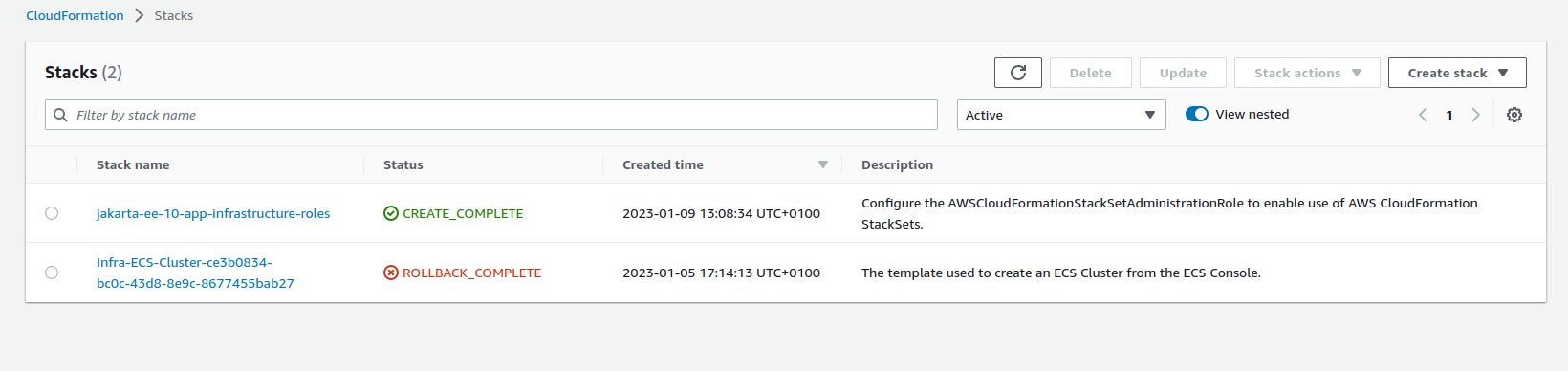

Now, all the required information is collected and the process of infrastructure generation may start. This process consists in creating two CloudFormation stacks, as follows:

- A first CloudFormation stack containing the definition of the required IAM security roles;

- A second CloudFormation stack containing the definition of a template whose execution creates a new ECS cluster.

In order to check the result of the execution of the AWS Copilot initialization phase, you can connect to your AWS console, go to the CloudFormation service, and you will see something similar to this:

As you can see, the two CloudFormation stacks mentioned above appear in the screenshot, and you can click on them to inspect their details.
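Nothing is running yet at this point. Since the image that `copilot deploy` will build later copies `./target/test.war`, it may be worth building the WAR and trying the image locally first. This sketch assumes Docker and Maven are installed and that port 8080 is free on your machine; skipping the generated tests and the image tag `jakarta-ee-10-app-local` are assumptions of this sketch.

```shell
#!/usr/bin/env bash
# Sketch: build the WAR, run the Payara image locally and call the sample resource.
# Usage: ./local-check.sh check   (run from the directory that contains test/)
set -euo pipefail

PROJECT_DIR=test              # project generated by the Maven archetype
IMAGE=jakarta-ee-10-app-local # hypothetical local image tag

# URL of the sample JAX-RS resource under a given base URL.
resource_url() {
  printf '%s/test/api/myresource\n' "${1%/}"
}

local_check() {
  # -DskipTests (assumption): the generated integration tests may need a running server.
  mvn -f "$PROJECT_DIR/pom.xml" -DskipTests package
  test -f "$PROJECT_DIR/target/test.war"   # the Dockerfile copies this file
  docker build -t "$IMAGE" "$PROJECT_DIR"
  local cid ok=1
  cid="$(docker run -d -p 8080:8080 "$IMAGE")"
  # Payara needs some time to start and deploy the WAR; poll for up to a minute.
  for _ in $(seq 1 30); do
    if curl -fsS "$(resource_url http://localhost:8080)"; then
      ok=0
      break
    fi
    sleep 2
  done
  docker rm -f "$cid" >/dev/null
  return "$ok"
}

if [ "${1:-}" = "check" ]; then
  local_check
fi
```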

We have just finished the initialization phase of the serverless infrastructure creation driven by AWS Copilot. Now, let's create our development environment:

$ copilot env init

Environment name: dev

Credential source: [profile default]

Default environment configuration? Yes, use default.

* Manifest file for environment dev already exists at copilot/environments/dev/manifest.yml, skipping writing it.

- Update regional resources with stack set "jakarta-ee-10-app-infrastructure" [succeeded] [0.0s]

- Update regional resources with stack set "jakarta-ee-10-app-infrastructure" [succeeded] [128.3s]

- Update resources in region "eu-west-3" [create complete] [128.2s]

- ECR container image repository for "lb-ws" [create complete] [2.2s]

- KMS key to encrypt pipeline artifacts between stages [create complete] [121.6s]

- S3 Bucket to store local artifacts [create in progress] [99.9s]

* Proposing infrastructure changes for the jakarta-ee-10-app-dev environment.

- Creating the infrastructure for the jakarta-ee-10-app-dev environment. [create complete] [65.8s]

- An IAM Role for AWS CloudFormation to manage resources [create complete] [25.8s]

- An IAM Role to describe resources in your environment [create complete] [27.0s]

* Provisioned bootstrap resources for environment dev in region eu-west-3 under application jakarta-ee-10-app.

Recommended follow-up actions:

- Update your manifest copilot/environments/dev/manifest.yml to change the defaults.

- Run `copilot env deploy --name dev` to deploy your environment.

AWS Copilot starts by asking for the name of our development environment and then offers to use either the current user's default credentials or temporary credentials created for the purpose. We choose the first option and accept the default environment configuration.

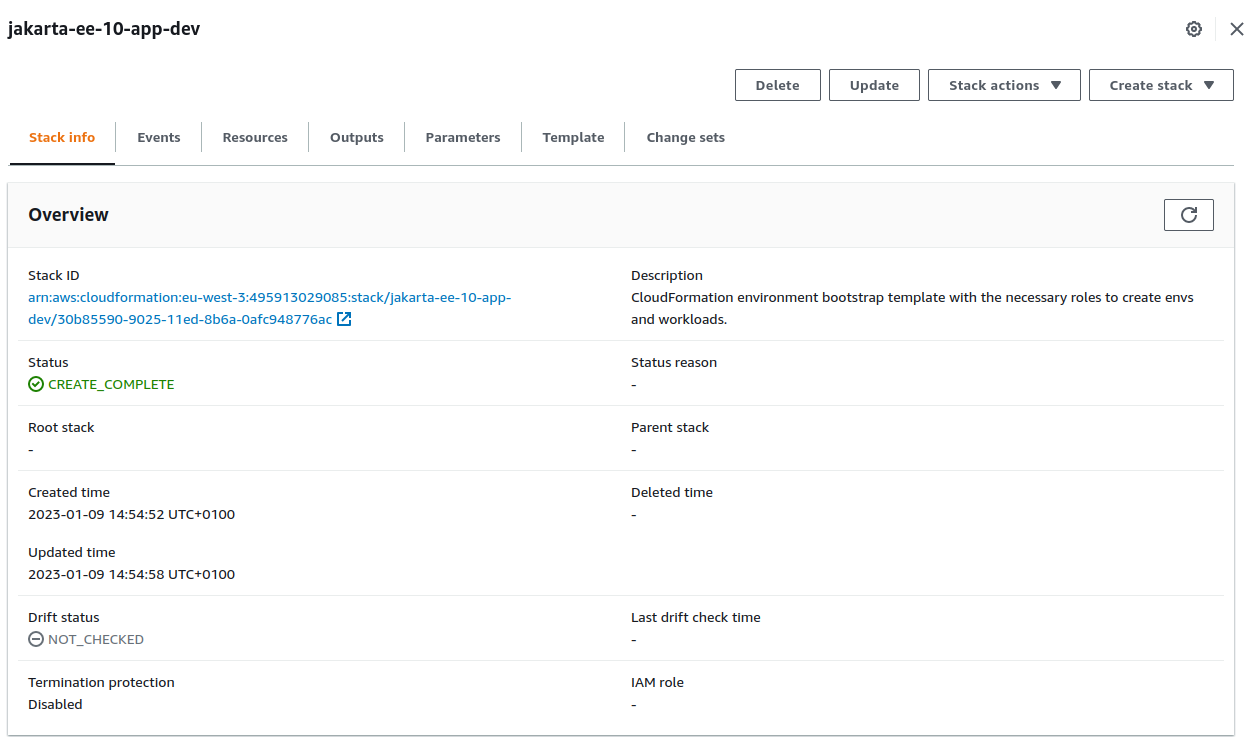

Then, AWS Copilot creates a new stack set, named jakarta-ee-10-app-infrastructure containing the following infrastructure elements:

- An ECR container to register the Docker image resulted further in the execution of the build operation on the Dockerfile selected during the previous step

- A new KMS (Key Management Service) key, to be used for encrypting the artifacts belonging to our development environment

- An S3 (Simple Storage Service) bucket, to be used in order to store inside the artifacts belonging to our development environment

- A new dedicated CloudFormation IAM role which aims at managing resources

- A new dedicated IAM role to describe the resources

This operation may take a significant time, depending on your bandwidth, and, once finished, the development environment, named jakarta-ee-10-app-dev, is created. You can see its details in the AWS console, as shown below:

Note that the environment can also be created during the initial step: the copilot init command shown above ends by asking whether you want to deploy a test environment (the "Deploy" prompt, to which we answered No). Answering yes creates and initializes a test environment immediately. For pedagogical reasons, we preferred to keep these two actions separate here.
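Like `copilot init`, the environment steps can be scripted. The sketch below passes the answers given in the dialog above as flags (environment name, the `default` credentials profile, default configuration) and then deploys the environment, which is what the next step does interactively. `DRY_RUN` is a convention of this sketch.

```shell
#!/usr/bin/env bash
# Sketch: create and deploy the "dev" environment without interactive prompts.
# Usage: ./env.sh create   (run from the Copilot workspace directory)
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (sketch convention).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

ENV_NAME=dev
PROFILE=default

create_env() {
  # Same answers as in the dialog: name, credentials profile, default configuration.
  run copilot env init --name "$ENV_NAME" --profile "$PROFILE" --default-config
  # Provisions the networking and the ECS cluster of the environment.
  run copilot env deploy --name "$ENV_NAME"
}

if [ "${1:-}" = "create" ]; then
  create_env
fi
```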

The next phase is the deployment of our development environment:

$ copilot env deploy

Only found one environment, defaulting to: dev

* Proposing infrastructure changes for the jakarta-ee-10-app-dev environment.

- Creating the infrastructure for the jakarta-ee-10-app-dev environment. [update complete] [74.2s]

- An ECS cluster to group your services [create complete] [2.3s]

- A security group to allow your containers to talk to each other [create complete] [0.0s]

- An Internet Gateway to connect to the public internet [create complete] [15.5s]

- Private subnet 1 for resources with no internet access [create complete] [5.4s]

- Private subnet 2 for resources with no internet access [create complete] [2.6s]

- A custom route table that directs network traffic for the public subnets [create complete] [11.5s]

- Public subnet 1 for resources that can access the internet [create complete] [2.6s]

- Public subnet 2 for resources that can access the internet [create complete] [2.6s]

- A private DNS namespace for discovering services within the environment [create complete] [44.7s]

- A Virtual Private Cloud to control networking of your AWS resources [create complete] [12.7s]

The CloudFormation template created during the previous step is now executed, creating and initializing the following infrastructure elements:

- The new ECS cluster, which groups the services

- A security group allowing the containers to communicate with each other

- An Internet Gateway connecting the environment to the public internet, so that the new service can be publicly accessible

- Two private and two public subnets

- A custom route table directing the network traffic of the public subnets

- A private DNS namespace (Route 53) for discovering services within the environment

- A new VPC (Virtual Private Cloud) that controls the networking of all the AWS resources created during this step

Take some time to navigate through the AWS console and inspect the infrastructure that AWS Copilot has created for you. As you can see, it is a substantial one, and it would have been laborious and time-consuming to create it manually.
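The same inspection can be done from the terminal. A minimal sketch, assuming the AWS CLI v2 is configured for the same account and region; it relies on the environment stack being named `<app>-<env>`, as `jakarta-ee-10-app-dev` in the output above, and `DRY_RUN` is a convention of this sketch.

```shell
#!/usr/bin/env bash
# Sketch: inspect what Copilot created for the environment, from the terminal.
# Usage: ./inspect.sh inspect   (run from the Copilot workspace directory)
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (sketch convention).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

APP=jakarta-ee-10-app
ENV_NAME=dev

# CloudFormation stack name of an environment: <app>-<env>.
env_stack() {
  printf '%s-%s\n' "$1" "$2"
}

inspect_env() {
  run copilot env show --name "$ENV_NAME"
  # One row per resource: logical ID, resource type and status.
  run aws cloudformation list-stack-resources \
    --stack-name "$(env_stack "$APP" "$ENV_NAME")" \
    --query 'StackResourceSummaries[].[LogicalResourceId,ResourceType,ResourceStatus]' \
    --output table
}

if [ "${1:-}" = "inspect" ]; then
  inspect_env
fi
```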

The sharp-eyed reader has certainly noticed that creating and deploying an environment, like our development one, doesn't deploy any service to it. To do that, we need to proceed with our last step: the service deployment. Simply run the command below:

$ copilot deploy

Only found one workload, defaulting to: lb-ws

Only found one environment, defaulting to: dev

Sending build context to Docker daemon 13.67MB

Step 1/2 : FROM payara/server-full:6.2022.1

---> ada23f507bd2

Step 2/2 : COPY ./target/test.war $DEPLOY_DIR

---> Using cache

---> f1b0fe950252

Successfully built f1b0fe950252

Successfully tagged 495913029085.dkr.ecr.eu-west-3.amazonaws.com/jakarta-ee-10-app/lb-ws:latest

WARNING! Your password will be stored unencrypted in /home/nicolas/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

Using default tag: latest

The push refers to repository [495913029085.dkr.ecr.eu-west-3.amazonaws.com/jakarta-ee-10-app/lb-ws]

d163b73cdee1: Pushed

a9c744ad76a8: Pushed

4b2bb262595b: Pushed

b1ed0705067c: Pushed

b9e6d039a9a4: Pushed

99413601f258: Pushed

d864802c5436: Pushed

c3f11d77a5de: Pushed

latest: digest: sha256:cf8a116279780e963e134d991ee252c5399df041e2ef7fc51b5d876bc5c3dc51 size: 2004

* Proposing infrastructure changes for stack jakarta-ee-10-app-dev-lb-ws

- Creating the infrastructure for stack jakarta-ee-10-app-dev-lb-ws [create complete] [327.9s]

- Service discovery for your services to communicate within the VPC [create complete] [2.5s]

- Update your environment's shared resources [update complete] [144.9s]

- A security group for your load balancer allowing HTTP traffic [create complete] [3.8s]

- An Application Load Balancer to distribute public traffic to your services [create complete] [124.5s]

- A load balancer listener to route HTTP traffic [create complete] [1.3s]

- An IAM role to update your environment stack [create complete] [25.3s]

- An IAM Role for the Fargate agent to make AWS API calls on your behalf [create complete] [25.3s]

- A HTTP listener rule for forwarding HTTP traffic [create complete] [3.8s]

- A custom resource assigning priority for HTTP listener rules [create complete] [3.5s]

- A CloudWatch log group to hold your service logs [create complete] [0.0s]

- An IAM Role to describe load balancer rules for assigning a priority [create complete] [25.3s]

- An ECS service to run and maintain your tasks in the environment cluster [create complete] [119.7s]

Deployments

Revision Rollout Desired Running Failed Pending

PRIMARY 1 [completed] 1 1 0 0

- A target group to connect the load balancer to your service [create complete] [0.0s]

- An ECS task definition to group your containers and run them on ECS [create complete] [0.0s]

- An IAM role to control permissions for the containers in your tasks [create complete] [25.3s]

* Deployed service lb-ws.

Recommended follow-up action:

- You can access your service at http://jakar-Publi-H9B68022ZC03-1756944902.eu-west-3.elb.amazonaws.com over the internet.

The listing above shows the creation of all the resources required to produce our serverless infrastructure, running Payara Server 6 with the test Jakarta EE 10 application deployed into it. This infrastructure consists of a CloudFormation stack named jakarta-ee-10-app-dev-lb-ws containing, among others, security groups, listeners, IAM roles, a dedicated CloudWatch log group and, most importantly, an ECS service that runs, with the Fargate launch type, the task definition starting the Payara Server 6 platform. This makes our test application, and the JAX-RS API it exposes, available at the public URL displayed above.
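Once the service is up, Copilot can also report its status and fetch the container logs from the CloudWatch log group it created. The sketch below runs the deployment non-interactively with the names used above and then queries the service; `DRY_RUN` is a convention of this sketch, and the ten-minute log window is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: deploy lb-ws to dev without prompts, then check the service and its logs.
# Usage: ./deploy.sh deploy   (run from the Copilot workspace directory)
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (sketch convention).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi
}

SVC=lb-ws
ENV_NAME=dev

deploy_and_check() {
  run copilot deploy --name "$SVC" --env "$ENV_NAME"
  # Status of the ECS service and of its running tasks.
  run copilot svc status --name "$SVC" --env "$ENV_NAME"
  # Recent container logs, e.g. Payara startup and WAR deployment messages.
  run copilot svc logs --name "$SVC" --env "$ENV_NAME" --since 10m
}

if [ "${1:-}" = "deploy" ]; then
  deploy_and_check
fi
```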

You can test the deployed application by simply running the curl utility:

curl http://jakar-Publi-H9B68022ZC03-1756944902.eu-west-3.elb.amazonaws.com/test/api/myresource

Got it !

Here we appended the relative path of our JAX-RS API (/test/api/myresource) to the public URL displayed by AWS Copilot. You can perform the same test using your preferred browser. Alternatively, you can run the generated integration test after changing the service URL it targets.
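The curl check can also be scripted so that it works right after a deployment, when the load balancer may not yet route traffic to a healthy task. A minimal sketch: the base URL is the one printed by `copilot deploy` (yours will differ), and the number of attempts and the delay between them are arbitrary.

```shell
#!/usr/bin/env bash
# Sketch: scripted version of the curl check, retrying while the service warms up.
# Usage: ./smoke.sh <base-url>   e.g. the load balancer URL printed by copilot deploy
set -euo pipefail

# Join a base URL and a path without doubling or dropping the slash.
join_url() {
  printf '%s/%s\n' "${1%/}" "${2#/}"
}

# Call URL up to ATTEMPTS times; succeed as soon as the body contains EXPECTED.
smoke_test() {
  local url="$1" expected="$2" attempts="${3:-10}" body i
  for ((i = 1; i <= attempts; i++)); do
    if body="$(curl -fsS --max-time 5 "$url")" && [[ "$body" == *"$expected"* ]]; then
      echo "OK: $body"
      return 0
    fi
    sleep 3
  done
  echo "FAILED: $url" >&2
  return 1
}

if [ "$#" -eq 1 ]; then
  smoke_test "$(join_url "$1" /test/api/myresource)" "Got it"
fi
```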

Don't hesitate to inspect in detail, in the AWS console, the serverless infrastructure created by AWS Copilot. Once you have finished, don't forget to clean up by running the command below, which deletes the CloudFormation stack jakarta-ee-10-app-dev-lb-ws together with the dev environment and most of the other resources created by Copilot:

$ copilot app delete

Sure? Yes

* Delete stack jakarta-ee-10-app-dev-lb-ws

- Update regional resources with stack set "jakarta-ee-10-app-infrastructure" [succeeded] [12.4s]

- Update resources in region "eu-west-3" [update complete] [9.8s]

* Deleted service lb-ws from application jakarta-ee-10-app.

* Retained IAM roles for the "dev" environment

* Delete environment stack jakarta-ee-10-app-dev

* Deleted environment "dev" from application "jakarta-ee-10-app".

* Cleaned up deployment resources.

* Deleted regional resources for application "jakarta-ee-10-app"

* Delete application roles stack jakarta-ee-10-app-infrastructure-roles

* Deleted application configuration.

* Deleted local .workspace file.

Enjoy!
