Apache Solr: Get Started, Get Excited!

we've all seen them on various websites: crappy search utilities. they are a constant reminder that search is not something you should take lightly when building a website or application. search is not just google's game anymore. when a java library called lucene was introduced into the apache ecosystem, and then solr was built on top of that, open source developers began to wield some serious power when it came to customizing search features.

in this article you'll be introduced to apache solr and a wealth of applications that have been built with it. the content is divided as follows:

- introduction

- setup solr

- applications

- summary

1. introduction

apache solr is an open source search server. it is based on the full text search engine apache lucene, so basically solr is an http wrapper around an inverted index provided by lucene. an inverted index can be seen as a list of words where each word entry links to the documents that contain it. that way, getting all documents for the search query "dzone" is a simple 'get' operation.
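
to make the idea of an inverted index concrete, here is a minimal sketch in plain python. it is not how lucene stores its index; the sample documents and the function name are made up for illustration, and tokenizing is reduced to splitting on whitespace.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map every lower-cased term to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index


# hypothetical sample documents, keyed by document id
docs = {
    1: "DZone publishes Solr articles",
    2: "Lucene powers Solr",
    3: "DZone covers Lucene too",
}

index = build_inverted_index(docs)
dzone_hits = sorted(index["dzone"])  # one dictionary lookup, no scan over all documents
print(dzone_hits)
```

the lookup cost does not depend on the number of documents, which is why the query for "dzone" is described as a simple 'get'.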

one advantage of solr in enterprise projects is that you don't need any java code, although java itself has to be installed. if you are unsure when to use solr and when to use lucene, these answers could help. if you need to build your solr index from websites, you should take a look at the open source crawler called apache nutch before creating your own solution.

to be convinced that solr is actually used in a lot of enterprise projects, take a look at this amazing list of public projects powered by solr. if you encounter problems then the mailing list or stackoverflow will help you. to make the introduction complete i would like to mention my personal link list and the resources page, which lists books, articles and more interesting material.

2. setup solr

2.1. installation

as the very first step, you should follow the official tutorial which covers the basic aspects of any search use case:

- indexing - get the data of any form into solr. examples: json, xml, csv and sql-database. this step creates the inverted index - i.e. it links every term to its documents.

- querying - ask solr to return the most relevant documents for the users' query

to follow the official tutorial you'll have to download java and the latest version of solr here. more information about installation is available at the official description.

next you'll have to decide which servlet container to use for solr. in the official tutorial, jetty is used, but you can also use tomcat. if you choose tomcat, be sure to set the utf-8 encoding in the server.xml. i would also research the different versions of solr, which can be quite confusing for beginners:

- the current stable version is 1.4.1. use this if you need a stable search and don't need one of the latest features.

- the next stable version of solr will be 3.x

- the versions 1.5 and 2.x will be skipped in order to reach the same versioning as lucene.

- version 4.x is the latest development branch. solr 4.x handles advanced features like language detection via tika, spatial search, results grouping (group by field / collapsing), a new "user-facing" query parser (edismax handler), near real time indexing, huge fuzzy search performance improvements, a sql-join-like feature and more.

2.2. indexing

if you've followed the official tutorial you have pushed some xml files into the solr index. this process is called indexing or feeding. there are a lot more possibilities to get data into solr:

- using the data import handler (dih) is a really powerful language neutral option. it allows you to read from a sql database, from csv, xml files, rss feeds, emails, etc. without any java knowledge. dih handles full-imports and delta-imports. the latter are necessary when only a small number of documents were added, updated or deleted.

- the http interface is used by the post tool, which you have already used in the official tutorial to index xml files.

- client libraries in different languages also exist (e.g. for java (solrj) or python).

before indexing you'll have to decide which data fields should be searchable and how the fields should get indexed. for example, when you have a field with html in it, you can strip irrelevant characters, tokenize the text into 'searchable terms', lower case the terms and finally stem the terms. in contrast, if you have a field with text in it that should not be interpreted (e.g. urls), you shouldn't tokenize it; use the default field type string instead. please refer to the official documentation about field and field type definitions in the schema.xml file.
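
the order of those analysis steps can be sketched in a few lines of python. this only illustrates the idea; in solr the chain is configured in schema.xml, not written by hand. the regex-based tag stripping and the toy stemmer (it merely drops a trailing "s") are assumptions made for this example and far cruder than solr's analyzers.

```python
import re


def analyze(html):
    """Strip tags, tokenize, lower-case, then apply a naive plural stemmer."""
    text = re.sub(r"<[^>]+>", " ", html)        # strip html tags
    tokens = re.findall(r"[A-Za-z0-9]+", text)  # tokenize on non-alphanumerics
    tokens = [t.lower() for t in tokens]        # lower case the terms
    # toy stemmer: drop a trailing "s" from longer words (not a real stemmer)
    return [t[:-1] if len(t) > 3 and t.endswith("s") else t for t in tokens]


terms = analyze("<p>Fast <b>Engines</b> for Books</p>")
print(terms)

# a url field would skip this chain entirely and be stored as one string term
url_term = "http://example.org/Some/Path"
```

the html field ends up as several normalized terms, while the url stays one untouched value, which is the contrast the paragraph above describes.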

when designing an index keep in mind the advice from mauricio: "the document is what you will search for." for example, if you have tweets and you want to search for similar users, you'll need to set up a user index - created from the tweets. then every document is a user. if you want to search for tweets, then set up a tweet index; then every document is a tweet. of course, you can set up both indices with the multi index options of solr.
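
the tweet example can be sketched as two ways of turning the same raw data into documents. the field names ("id", "user", "text", "tweets") and the sample tweets are hypothetical; a real schema would define its own fields.

```python
from collections import defaultdict

# hypothetical raw data
tweets = [
    {"user": "alice", "text": "solr facets are great"},
    {"user": "bob", "text": "trying lucene today"},
    {"user": "alice", "text": "indexing tweets with solr"},
]


def tweet_documents(tweets):
    """Tweet index: every document is one tweet."""
    return [{"id": i, "user": t["user"], "text": t["text"]} for i, t in enumerate(tweets)]


def user_documents(tweets):
    """User index: every document is one user, built from that user's tweets."""
    by_user = defaultdict(list)
    for t in tweets:
        by_user[t["user"]].append(t["text"])
    return [{"id": user, "tweets": texts} for user, texts in sorted(by_user.items())]


tweet_docs = tweet_documents(tweets)
user_docs = user_documents(tweets)
print(len(tweet_docs), len(user_docs))
```

a search against the first list returns tweets, a search against the second returns users, even though both were fed from the same source.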

please also note that there is a project called solr cell which lets you extract the relevant information out of several different document types with the help of tika.

2.3. querying

for debugging it is very convenient to use the http interface with a browser to query solr and get back xml. use firefox and the xml will be displayed nicely:

you can also use the velocity contribution, a cross-browser tool, which will be covered in more detail in the section about 'search application prototyping'. to query the index you can use the dismax handler or the standard query handler. you can filter and sort the results:

    q=superman&fq=type:book&sort=price asc

you can also do a lot more; one other concept is boosting. in solr you can boost while indexing and while querying. to prefer the terms in the title write:

    q=title:superman^2 subject:superman

when using the dismax request handler write:

    q=superman&qf=title^2 subject

check out all the various query options like fuzzy search, spellcheck query input, facets, collapsing and suffix query support.
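
when such queries are sent from a program instead of a browser, the parameters need to be url-encoded. here is a minimal python sketch that builds the filter/sort query and the dismax boost query shown above. the base url (host, port 8983 and the /solr/select path) is an assumption about a local default setup, so adjust it to your installation.

```python
from urllib.parse import urlencode

# assumed base url of a local solr instance; adjust host, port and path
SOLR_SELECT = "http://localhost:8983/solr/select"


def build_query_url(params):
    """Return a select url with percent-encoded query parameters."""
    return SOLR_SELECT + "?" + urlencode(params)


# filter on type:book and sort by price
filtered = build_query_url({"q": "superman", "fq": "type:book", "sort": "price asc"})

# dismax query that boosts matches in the title field
boosted = build_query_url({"defType": "dismax", "q": "superman", "qf": "title^2 subject"})

print(filtered)
print(boosted)
```

the encoding turns the space in "price asc" into "+" and the "^" into "%5E", which is easy to get wrong when concatenating strings by hand.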

3. applications

now i will list some interesting use cases for solr, in no particular order, to show how powerful and flexible this open source search server is.

3.1. drupal integration

the drupal integration can be seen as a generic use case for integrating solr into php projects. for the php integration you can either use the http interface for querying and retrieving xml or json, or use the php solr client library. here is a screenshot of a typical faceted search in drupal:

for more information about faceted search look into the wiki of solr.

more php projects which integrate solr:

- open source typo3-solr module

- magento enterprise - solr module. the open source integration is outdated.

- oxid - solr module. no open source integration available.

3.2. hathi trust

the hathi trust project is a nice example that proves solr's ability to search big digital libraries. to quote directly from the article: "... the index for our one million book index is over 200 gigabytes ... so we expect to end up with a two terabyte index for 10 million books".

other examples for libraries:

- vufind - aims to replace opac

- internet archive

- national library of australia

3.3. auto suggestions

mainly, there are two approaches to implement auto-suggestions (also called auto-completion) with solr: via facets or via NGramFilterFactory.
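
the idea behind the n-gram approach is to index pieces of every term at index time, so that a partially typed word matches by exact lookup. the python sketch below illustrates this with prefix n-grams only. it is not solr's filter implementation, and the minimum length of 2 and the sample terms are assumptions.

```python
from collections import defaultdict


def prefixes(term, min_len=1):
    """Return all prefixes of term that have at least min_len characters."""
    return [term[:i] for i in range(min_len, len(term) + 1)]


def build_suggest_index(terms, min_len=2):
    """Map every prefix to the sorted list of terms that start with it."""
    index = defaultdict(set)
    for term in terms:
        for p in prefixes(term.lower(), min_len):
            index[p].add(term.lower())
    return {p: sorted(ts) for p, ts in index.items()}


suggest = build_suggest_index(["Solr", "Solandra", "Lucene"])
print(suggest.get("sol"))
```

the price for the fast lookup is a larger index, since every term is stored once per prefix.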

to push it to the extreme you can use a lucene index entirely in ram. this approach is used in a large music shop in germany.

live examples for auto suggestions:

3.4. spatial search applications

when mentioning spatial search, people have geography-based applications in mind. with solr, this ordinary use case is attainable. some examples for this are:

- city search - city guides

- yellow pages

- kaufda.de

spatial search can be useful in many different ways: for bioinformatics, fingerprint search, facial search, etc. (getting the fingerprint of a document is important for duplicate detection). the simplest approach is implemented in jetwick to reduce duplicate tweets, but this yields a performance of o(n) where n is the number of queried terms. this is okay for 10 or fewer terms, but it can get even better at o(1)! the idea is to use a special hash set to get all similar documents. this technique is called locality-sensitive hashing. read this nice paper about 'near similarity search and plagiarism analysis' for more information.
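
the hash-set idea can be sketched with minhash signatures split into bands, which is one common form of this hashing technique. this is not the method from the paper and not what jetwick does; the shingle size, the 16 md5-based hash functions and the 4 bands are assumptions chosen to keep the example small.

```python
import hashlib


def shingles(text, k=3):
    """Return the set of k-word shingles of a text."""
    words = text.lower().split()
    return {" ".join(words[i:i + k]) for i in range(max(1, len(words) - k + 1))}


def minhash(items, num_hashes=16):
    """Signature: for every seeded hash function keep the minimum hash value."""
    return [
        min(int(hashlib.md5(f"{seed}:{item}".encode()).hexdigest(), 16) for item in items)
        for seed in range(num_hashes)
    ]


def bucket_keys(signature, bands=4):
    """Split the signature into bands; each band becomes one hash-set key."""
    rows = len(signature) // bands
    return {(b, tuple(signature[b * rows:(b + 1) * rows])) for b in range(bands)}


def index_documents(docs):
    """Build bucket key -> document ids, so candidates are found by lookups."""
    buckets = {}
    for doc_id, text in docs.items():
        for key in bucket_keys(minhash(shingles(text))):
            buckets.setdefault(key, set()).add(doc_id)
    return buckets


def candidates(buckets, text):
    """Return ids of documents that share at least one band with the text."""
    found = set()
    for key in bucket_keys(minhash(shingles(text))):
        found |= buckets.get(key, set())
    return found


# hypothetical documents
docs = {
    1: "solr makes full text search easy and fast",
    2: "cassandra is a distributed database created by facebook",
}
buckets = index_documents(docs)
dupes = candidates(buckets, "solr makes full text search easy and fast")
print(dupes)
```

finding candidates takes a fixed number of hash lookups (one per band), regardless of how many documents are indexed. similar but not identical texts collide only with a certain probability, which is tuned via the number of hashes and bands.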

3.5. duckduckgo

duckduckgo is made with open source and its "zero click" information is done with the help of solr using the dismax query handler:

the index for that feature contains 18m documents and has a size of ~12gb. for this case gabriel had to tune solr: "i have two requirements that differ a bit from most sites with respect to solr:

- i generally only show one result, with sometimes a couple below if you click on them. therefore, it was really important that the first result is what people expected.

- false positives are really bad in 0-click, so i needed a way to not show anything if a match wasn't too relevant.

i got around these by a) tweaking dismax and schema and b) adding my own relevancy filter on top that would re-order and not show anything in various situations." all the rest is done with tuned open source products. to quote gabriel again: "the main results are a hybrid of a lot of things, including external apis, e.g. bing, wolframalpha, yahoo, my own indexes and negative indexes (spam removal), etc. there are a bunch of different types of data i'm working with." check out the other cool features such as privacy or bang searches.

3.6. clustering support with carrot2

carrot2 is one of the "contributed plugins" of solr. with carrot2 you can support clustering: "clustering is the assignment of a set of observations into subsets (called clusters) so that observations in the same cluster are similar in some sense." see some research papers regarding clustering here. here is one visual example when applying clustering on the search "pannous" - our company:

3.7. near real time search

solr isn't real time yet, but you can tune solr to the point where it becomes near real time, which means that the time ('real time latency') that a document takes to be searchable after it gets indexed is less than 60 seconds even if you need to update frequently. to make this work, you can set up two indices: one write-only index "w" for the indexer and one read-only index "r" for your application. index r refers to the same data directory as w, which has to be defined in the solrconfig.xml of r via:

    <dataDir>/pathto/indexw/data/</dataDir>

to make sure your users and the r index see the indexed documents of w, you have to trigger an empty commit every 60 seconds:

    wget -q "http://localhost:port/solr/update?stream.body=%3ccommit/%3e" -O /dev/null

every time such a commit is triggered, a new searcher without any cache entries is created. this can harm performance for visitors hitting the empty cache directly after this commit, but you can fill the cache with static searches with the help of the newSearcher entry in your solrconfig.xml. additionally, the autowarmCount property needs to be tuned, which fills the caches of the new searcher from the old entries.
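
if you prefer a small script over a cron job with wget, the periodic empty commit could look like the following python sketch. the host and port 8983 are assumptions about a local setup; the function names are made up for this example, and the loop is left commented out so that nothing is sent unless a solr instance is actually running.

```python
import time
import urllib.request
from urllib.parse import quote


def commit_url(host, port, path="/solr/update"):
    """Build the url that asks solr to commit via the stream.body parameter."""
    return f"http://{host}:{port}{path}?stream.body={quote('<commit/>', safe='/')}"


def commit_forever(url, interval_seconds=60):
    """Trigger an empty commit every interval_seconds (runs until interrupted)."""
    while True:
        with urllib.request.urlopen(url) as response:
            response.read()  # discard the response body, as the wget call does
        time.sleep(interval_seconds)


url = commit_url("localhost", 8983)  # 8983 is an assumed port
print(url)
# commit_forever(url)  # uncomment to start the loop against a running solr
```

the interval is the upper bound for the real time latency: a smaller value makes documents visible sooner but creates new searchers, and therefore cold caches, more often.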

also, take a look at the article 'scaling lucene and solr', where experts explain in detail what to do with large indices (=> 'sharding') and what to do for high query volume (=> 'replicating').

3.8. loggly = full text search in logs

feeding log files into solr and searching them in near real time shows that solr can handle massive amounts of data and query the data quickly. i've set up a simple project where i'm doing similar things, but loggly has done a lot more to make the same task real-time and distributed. you'll need to keep the write index as small as possible, otherwise commit time will increase too much. loggly creates a new solr index every 5 minutes and includes it when searching, using the distributed capabilities of solr! they are merging the cores to keep the number of indices small, but this is not as simple as it sounds. watch this video to get some details about their work.

3.9. solandra = solr + cassandra

solandra combines solr and the distributed database cassandra, which was created by facebook for its inbox search and then open sourced. at the moment solandra is not intended for production use. there are still some bugs and the distributed limitations of solr apply to solandra too. the developers are working very hard to make solandra better.

jetwick can now run via solandra just by changing the solrconfig.xml. solandra also has the advantages of being real-time (no optimize, no commit!) and distributed without any major setup involved. the same is true for solr cloud.

3.10. category browsing via facets

solr provides facets, which make it easy to show the user some useful filter options like those shown in the "drupal integration" example. like i described earlier, it is even possible to browse through a deep category tree. the main advantage here is that the categories depend on the query. this way the user can further filter the search results with this category tree provided by you. here is an example where this feature is implemented for one of the biggest second hand stores in germany. a click on 'schauspieler' shows its sub-items:

other shops:

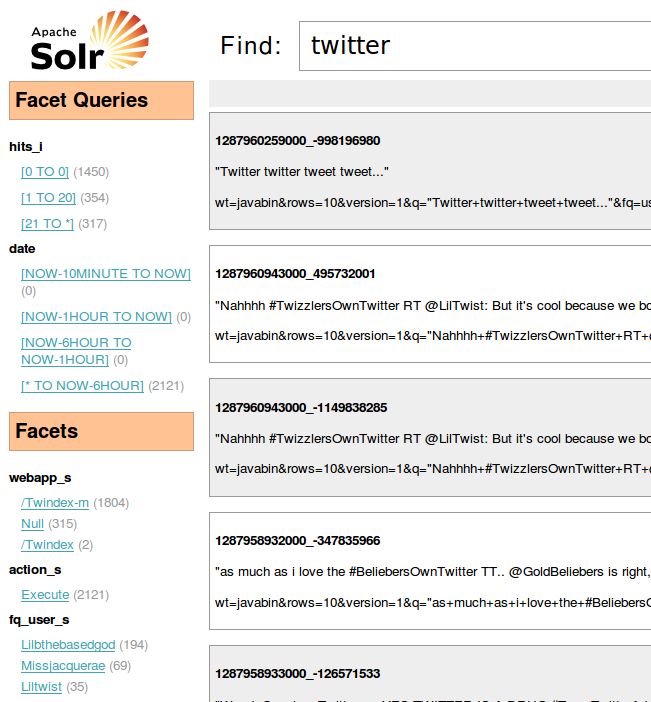
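
the point that facet categories depend on the query can be illustrated with a tiny in-memory sketch. this is plain python and not a solr call; the documents, the field names and the substring matching are assumptions made for the example.

```python
from collections import Counter

# hypothetical documents
docs = [
    {"title": "Superman Returns", "category": "dvd"},
    {"title": "Superman comic", "category": "book"},
    {"title": "Batman Begins", "category": "dvd"},
    {"title": "Superman: the novel", "category": "book"},
]


def facet_counts(docs, query, field):
    """Count the values of field among the documents whose title matches the query."""
    matching = [d for d in docs if query.lower() in d["title"].lower()]
    return Counter(d[field] for d in matching)


superman_facets = facet_counts(docs, "superman", "category")
batman_facets = facet_counts(docs, "batman", "category")
print(superman_facets, batman_facets)
```

the two queries produce different category lists with different counts, so the user is only offered filters that actually narrow down the current result.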

3.11. jetwick - open twitter search

you may have noticed that twitter is using lucene under the hood. twitter has a very extreme use case: over 1,000 tweets per second, over 12,000 queries per second, but the real-time latency is under 10 seconds! however, the relevancy at that volume is often not that good in my opinion. twitter search often contains a lot of duplicates and noise.

reducing this was one reason i created jetwick in my spare time. i'm mentioning jetwick here because it makes extreme use of facets, which provide all the filters to the user. facets are used for the rss-like feature (saved searches), the various filters like language and retweet-count on the left, and to get trending terms and links on the right:

to make jetwick more scalable i'll need to decide which of the following distribution options to choose:

- use solr cloud with zookeeper

- use solandra

- move from solr to elasticsearch, which is also based on apache lucene

other examples with a lot of facets:

- cnet reviews - product reviews. electronics reviews, computer reviews & more.

- shopper.com - compare prices and shop for computers, cell phones, digital cameras & more.

- zappos - shoes and clothing.

- manta.com - find companies. connect with customers.

3.12. plaxo - online address management

plaxo.com, which is now owned by comcast, hosts web addresses for more than 40 million people and offers smart search through the addresses - with the help of solr. plaxo is trying to get the latest 'social' information of your contacts through blog posts, tweets, etc. plaxo also tries to reduce duplicates.

3.13. replace fast or google search

several users report that they have migrated from a commercial search solution like fast or google search appliance (gsa) to solr (or lucene). the reasons for that migration differ: fast drops linux support and google can cause integration problems. the main reason for me is that solr isn't a black box: you can tweak the source code, maintain old versions and fix your bugs more quickly!

3.14. search application prototyping

with the help of the already integrated velocity plugin and the data import handler it is possible to create an application prototype for your search within a few hours. the next version of solr makes the use of velocity easier. the gui is available via http://localhost:port/solr/browse

if you are a ruby on rails user, you can take a look into flare. to learn more about search application prototyping, check out this video introduction and take a look at these slides.

3.15. solr as a whitelist

imagine you are the new google and you have a lot of different types of data to display, e.g. 'news', 'video', 'music', 'maps', 'shopping' and much more. some of those types can only be retrieved from some legacy systems and you only want to show the most appropriate types based on your business logic. e.g. a query which contains 'new york' should result in the selection of results from 'maps', but 'new yorker' should prefer results from the 'shopping' type.

with solr you can set up such a whitelist-index that will help to decide which type is more important for the search query. for example, if you get more results, or more relevant results, for the 'shopping' type then you should prefer results from this type. without the whitelist-index, i.e. with all data in separate indices or systems, it would be nearly impossible to compare the relevancy.

the whitelist-index can be used as illustrated in the next steps. 1. query the whitelist-index, 2. decide which data types to display, 3. query the sub-systems and 4. display results from the selected types only.
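
the four steps can be sketched as follows. the whitelist-index and the legacy systems are replaced by hypothetical in-memory stand-ins, and ranking the types by their summed relevancy score is just one possible business rule, assumed here for illustration.

```python
from collections import defaultdict


def choose_types(whitelist_hits, max_types=1):
    """Step 2: rank data types by the summed relevancy of their whitelist hits."""
    totals = defaultdict(float)
    for data_type, score in whitelist_hits:
        totals[data_type] += score
    ranked = sorted(totals, key=lambda t: totals[t], reverse=True)
    return ranked[:max_types]


def search(query, query_whitelist, subsystems, max_types=1):
    """Steps 1-4: whitelist lookup, type selection, sub-system queries, results."""
    hits = query_whitelist(query)                        # step 1
    selected = choose_types(hits, max_types)             # step 2
    return {t: subsystems[t](query) for t in selected}   # steps 3 and 4


# hypothetical stand-ins for the whitelist-index and the legacy systems
fake_whitelist = {
    "new york": [("maps", 2.0), ("shopping", 0.5)],
    "new yorker": [("shopping", 1.5), ("maps", 0.4), ("shopping", 1.0)],
}
subsystems = {
    "maps": lambda q: [f"map result for {q}"],
    "shopping": lambda q: [f"shop result for {q}"],
}


def lookup(query):
    """Pretend whitelist query returning (type, score) pairs."""
    return fake_whitelist.get(query, [])


result = search("new yorker", lookup, subsystems)
print(result)
```

because all types are scored in one index, their scores are comparable, and only the selected sub-systems have to be queried at all.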

3.16. future

solr is also useful for scientific applications, such as dna search systems. i believe solr can also be used for completely different alphabets so that you can query nucleotide sequences - instead of words - to get the matching genes and determine which organism the sequence occurs in, something similar to blast.

another idea you could harness would be to build a very personalized search. every user can drag and drop their websites of choice and query them afterwards. for example, often i only need stackoverflow, some wikis and some mailing lists with the expected results, but normal web search engines (google, bing, etc.) give me results that are too cluttered.

my final idea for a future solr-based app could be a lucene/solr implementation of desktop search. solr's facets would be especially handy to quickly filter different sources (files, folders, bookmarks, man pages, ...). it would be a great way to wade through those extra messy desktops.

4. summary

the next time you think about a problem, think about solr! even if you don't know java and even if you know nothing about search: solr should be in your toolbox. solr doesn't only offer professional full text search, it could also add valuable features to your application. some of them i covered in this article, but i'm sure there are still some exciting possibilities waiting for you!
