What to Log (and What Not to Log)

The time required to process and analyze logged information matters, especially when an unexpected situation needs urgent resolution. For that reason, take time up front to decide what information will be logged and what will not. In the example noted in the introduction, the DevOps engineer needed to review the consolidated events for an application that encountered an incident. In most cases, such a situation needs to be resolved as soon as possible.

This effort could easily include logs from the microservices, databases, client application frameworks, and the security layer. If the logs include entries that are not pertinent to the analysis, additional time is required to review and discard them.

Consider the following example of logs that are ingested from the authentication/authorization service participating in the centralized log management strategy:

1550149377 INFO Userid (someUserId) successfully logged in from IP 127.0.0.1

1550149382 INFO SSID for someUserId updated to reflect last login

1550149385 WARN Password for someUserId will expire in 26 hours

1550149415 INFO UserId (someUserId) granted access to SomeApplication via token #tokenGoesHere

While all of the information being logged is important, it might be best to filter out every event except the following message:

1550149377 INFO Userid (someUserId) successfully logged in from IP 127.0.0.1

In doing so, the number of log messages that would need to be reviewed during a crisis is minimized — especially in cases where hundreds (or thousands) of users are accessing the application.
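A minimal sketch of this kind of ingestion filter, using the four sample events from above. The allow-list pattern and the names `KEEP_PATTERNS` and `should_ingest` are assumptions made for illustration; an actual log management product would typically express the same rule in its own filter or pipeline configuration.

```python
import re

# Hypothetical allow-list: only successful-login events are forwarded.
KEEP_PATTERNS = [
    re.compile(r"successfully logged in from IP"),
]


def should_ingest(line: str) -> bool:
    """Return True if the line matches at least one allow-listed pattern."""
    return any(pattern.search(line) for pattern in KEEP_PATTERNS)


# The sample events from the authentication/authorization service.
SAMPLE_LINES = [
    "1550149377 INFO Userid (someUserId) successfully logged in from IP 127.0.0.1",
    "1550149382 INFO SSID for someUserId updated to reflect last login",
    "1550149385 WARN Password for someUserId will expire in 26 hours",
    "1550149415 INFO UserId (someUserId) granted access to SomeApplication via token #tokenGoesHere",
]

# Keep only the events that pass the filter.
kept = [line for line in SAMPLE_LINES if should_ingest(line)]
for line in kept:
    print(line)
```

An allow-list is used here rather than a deny-list so that new, unreviewed event types are dropped by default; whether that default suits a given team is a judgment call.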

Sensitive Information

Another aspect to consider is making sure that sensitive information (access tokens, database connection strings, encryption keys, account information, user information, etc.) is not stored in the centralized log management solution. In the log example above, the message containing #tokenGoesHere should be suppressed from ingestion into the centralized log management solution, since that token could be considered sensitive information. If the event is required, the message should be transformed so that only the following information is ingested:

1550149415 INFO UserId (someUserId) granted access to SomeApplication
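One way to produce the trimmed message is a small redaction step that runs before ingestion. The sketch below assumes the token always appears as a trailing ` via token #...` fragment, as in the sample event; the regular expression and the `redact_token` helper are hypothetical and would need to match the real message formats in use.

```python
import re

# Assumed format for illustration: " via token #<non-space characters>".
TOKEN_SUFFIX = re.compile(r"\s+via token #\S+")


def redact_token(line: str) -> str:
    """Remove the token fragment so the secret never reaches the log store."""
    return TOKEN_SUFFIX.sub("", line)


raw = (
    "1550149415 INFO UserId (someUserId) granted access to "
    "SomeApplication via token #tokenGoesHere"
)
clean = redact_token(raw)
print(clean)
```

Removing the fragment entirely, rather than masking it, keeps the stored event identical to the target message shown above.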

Establish Guidelines

The key is to establish guidelines that meet the needs of the entire user community that will utilize the solution. Treat this like the architecture of any other application: understand the limits introduced both by too few log events and by too many. Once established, these guidelines should be shared with the teams that create the events being captured by the centralized log management solution.

Centralized Log Management Checklist

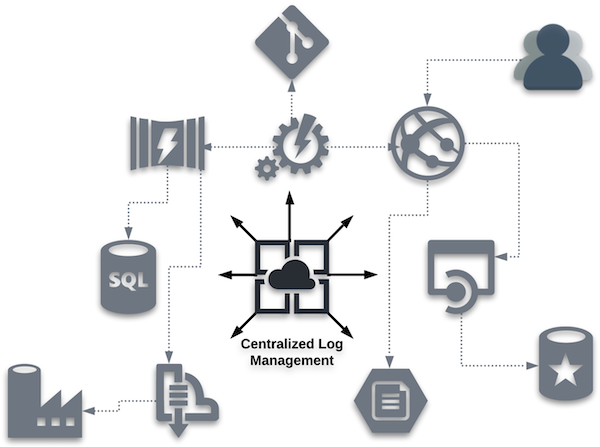

When the decision is made to evaluate centralized log management solutions, the number of available offerings will certainly appear daunting. As a result, it is crucial to understand which features and functionality are important for your implementation. Below are some high-level aspects to consider:

- Zero to 60 – What is involved in getting started? How quickly can a new implementation span from setup and configuration to log analysis and debugging success?

- While the time required to get up to full speed is not an exclusive metric, there is some merit in knowing if the product under review gives the ability to get started quickly without a great deal of setup and configuration.

- Ease of data exploration – Can all types of users easily operate the system and locate data?

- Remember, the user is often more of a data explorer and not a data scientist. Any built-in reports or filters will lead to a better user experience when extracting results from the system.

- Analyst efficiency – How quickly does the system respond, including the ability to create complex searches or filters? Once data is returned, how easy are the results to comprehend and utilize?

- As noted above, time is often the driving component when trying to retrieve information from a centralized log management solution. Again, filters and reporting can help improve end-user efficiency.

- Scalability – How well does the solution work within your organization? Can all systems function within one centralized log management solution or would multiple instances be required? How about five years from now?

- It is important to understand how the centralized log management solution scales as more systems are introduced to the technology. While some performance degradation is expected as the log data storage pool grows, understanding the anticipated response time is a valuable metric to use during side-by-side comparisons.

- Storage – What are the storage expectations, where does the storage live, and what are the associated costs of a target implementation?

- It is also important to understand any boundaries around storage, especially with respect to system performance. Ideally, a log management system can leverage tiered storage so that data kept for historical rather than analytical purposes is stored at a lower price point.

- Log completeness – Does the information retained include everything necessary, or is extra effort required to retrieve data from an additional source?

- If you find yourself having to retrieve data from outside the centralized log management solution, there might be a gap in functionality with respect to your requirements.

- Data enrichment functionality – Does enrichment functionality exist? If so, how easy is it to utilize and maintain?

- It might be a good idea to review current log sources and understand more of the edge cases that could require data enrichment exceeding typical enrichment usage patterns.

- Open/closed source – Does the solution utilize an open-source approach? How does this approach line up with other solutions your entity employs?

- Log collectors – How are the log collectors defined? Are they proprietary or have they been created by third parties/vendors? Can these log collectors be centrally managed by the solution, or do they have to be independently managed at the log source level?

- Typically, proprietary connectors lag behind those created by the third party or vendor itself. If the connectors can be managed and configured by the centralized log management solution, there is less need for configuration to be maintained on the log source.

- Configuration as Code – Does the solution employ a "* as Code" approach, allowing the configuration of the centralized log management solution to be stored in a code repository?

- If your organization is embracing the concept of "* as Code," centralized log management solutions that adopt this philosophy will be able to build and configure instances programmatically.

- Class-specific functionality – Does the solution contain features that help a particular class of user (DevOps, security, compliance, ITOps) obtain common results? Do reports exist to locate regulatory exceptions like HIPAA, for example?

- Having functionality built in to assist specific use cases will lessen the time required for such groups to get up to speed and recognize value in the centralized log management solution.

- API functionality – Is there a public API available for the solution?

- Gaining familiarity with any underlying application programming interface (API) for the centralized log management solution could further justify, or increase, the value returned from the implementation.

- Anticipated costs – How is the product licensed?

- Understanding the cost model will allow for product comparisons as time progresses and more components embrace centralized log management within your organization.

- CLM vs. SIEM – Is the target solution a centralized log management (CLM) solution, or is it a security information and event management (SIEM) product? Do your current needs require one solution over the other, or perhaps both?

- The requirements approved for the product will be a guide to understand what type of solution should be considered. It is important, however, to understand the differences between CLM and SIEM.

- ELK Stack – Does the underlying solution leverage the ELK Stack? If not, what integrations exist to leverage these tools to improve the effectiveness and observability of the centralized logging solution?

- The ELK Stack is an ideal collection that works well with centralized log management solutions. It facilitates storing large amounts of data across multiple systems by leveraging products that scale naturally to align with logging needs. A purpose-driven search engine is coupled with a set of tools designed to analyze and observe the centralized log data.

- In every case, it is important to consider what steps are required to leverage the ELK Stack and any associated costs during product evaluations.

Next-Gen Features for Log Management

While the centralized log management checklist section provided features and functionality that should be expected in an acceptable solution, below is a collection of next-generation (next-gen) features for products that offer leading-edge functionality. These features are intended to leverage technology in order to enrich the log management experience.

Recurring Pattern Identification

Having an option to quickly see recurring patterns across all log data allows analysts to isolate issues and unexpected behavior. A patterns perspective turns the analyst's view from an endless series of log entries into a categorized table, showing something like what is displayed below:

| Count | Ratio | Pattern |
| ------ | ------ | ---------------------------- |
| 52,701 | 22.17% | License key has expired |
| 19,457 | 7.99% | Invalid User ID |
| 18,295 | 7.25% | New customer account created |

In the example above, a large percentage of the messages match the pattern where a license key had expired, which may not be easy to locate in a large volume of individual log entries.
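To illustrate how a patterns view like the table above could be derived, the sketch below collapses the variable parts of each message into placeholders and then counts the resulting patterns. The sample messages, the placeholder rules in `to_pattern`, and the `pattern_table` helper are all invented for illustration; commercial products generally use more sophisticated clustering than these three regular expressions.

```python
import re
from collections import Counter


def to_pattern(message: str) -> str:
    """Collapse variable parts of a message into placeholders."""
    message = re.sub(r"\b\d{1,3}(?:\.\d{1,3}){3}\b", "<ip>", message)  # IPv4 addresses
    message = re.sub(r"\(\w+\)", "(<id>)", message)  # identifiers in parentheses
    message = re.sub(r"\b\d+\b", "<n>", message)  # remaining standalone numbers
    return message


def pattern_table(messages):
    """Return (count, ratio, pattern) rows, most frequent pattern first."""
    counts = Counter(to_pattern(m) for m in messages)
    total = sum(counts.values())
    return [(count, count / total, pattern) for pattern, count in counts.most_common()]


# Invented sample messages, not real log data.
messages = [
    "License key has expired for account 1001",
    "License key has expired for account 1002",
    "License key has expired for account 1003",
    "Invalid User ID (alice)",
    "Invalid User ID (bob)",
    "New customer account created",
]

table = pattern_table(messages)
for count, ratio, pattern in table:
    print(f"{count:>5}  {ratio:7.2%}  {pattern}")
```

Normalizing before counting is what lets thousands of distinct entries fall into a handful of rows; the quality of the view depends almost entirely on how well the placeholder rules fit the message formats.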

Machine Learning and Crowdsourcing

Analysts often find themselves looking for the root cause of an unexpected issue, a search that can resemble looking for a needle in a haystack. Rather than spending hours narrowing down an endless sea of log entries, analysts can rely on machine learning and crowdsourcing support to reduce the time required to identify the root cause. Next-generation solutions that employ machine learning can present key terms found in the centralized log events, along with the number of occurrences for each term. This enables the analyst to quickly reduce the number of log entries to process, making the haystack much smaller.

With a smaller collection of logs to process, these same solutions can provide additional information from sources outside the centralized log management service. For example, opening a given log entry shows the expected information related to the captured log data, but also links to crowdsourced data such as:

- Discussion threads related to the logged message or condition

- Blog entries focused on how to correct the situation

- Documentation for the method, function, or class being utilized

- Additional information related to the error itself

Anomaly Detection

Issues that rarely appear in the logs, but that are extremely valuable to catch, are often referred to as anomalies. Next-generation solutions should provide the ability to detect anomalies so they can be surfaced and addressed. Anomaly detection leverages machine learning to automatically isolate emergent problems, based upon unusual behavior, so they can be investigated.

Once a baseline collection of fields has been specified and a query is established, the centralized log management service builds a model and begins to analyze and capture unusual behavior and events. Those detected events can be surfaced as new alerts in real time to provide instant access to emerging issues.
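The baseline-then-detect flow can be sketched with a deliberately simple statistical stand-in: a z-score against a baseline of per-minute error counts. This is not the machine learning model a commercial product would build; the numbers, the threshold of 3.0, and the `find_anomalies` helper are assumptions chosen only to show the shape of the idea.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observed values far from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is treated as anomalous.
        return [(i, v, float("inf")) for i, v in enumerate(observed) if v != mu]
    anomalies = []
    for i, value in enumerate(observed):
        z_score = (value - mu) / sigma
        if abs(z_score) >= threshold:
            anomalies.append((i, value, z_score))
    return anomalies


# Invented per-minute error counts: a quiet baseline, then one spike.
baseline_errors_per_minute = [4, 5, 6, 5, 4, 6, 5, 5, 4, 6]
observed_errors_per_minute = [5, 6, 4, 42, 5]

alerts = find_anomalies(baseline_errors_per_minute, observed_errors_per_minute)
for index, value, z_score in alerts:
    print(f"minute {index}: {value} errors (z-score {z_score:.1f})")
```

In a real deployment the flagged items would feed an alerting channel rather than a print statement, and the baseline would be refreshed as normal behavior drifts.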

Data Visualization

Visualizations enable analysts to view data in graphical format, which can help them better understand comparisons over time. By defining common elements for the X and Y axes, data in the next-generation centralized log management solution can be leveraged to communicate the state of the events being captured. These visualizations can be combined into a dashboard to communicate the overall status of the environment being monitored by the centralized log management service.
