Working With Vision AI to Test Cloud Applications

Tricentis Tosca's Vision AI simplifies UI testing with mockup-based test creation, self-healing capabilities, and built-in accessibility checks for dynamic applications.


Recently, I’ve been looking into Tricentis Tosca to better understand how its testing suite can benefit my app development workflow. In my last article about Tosca, I wrote about some of the tool’s visual capabilities, such as QR code testing. Testing QR codes is great if you need an effective way to validate that specific part of your app. Then I discovered Tosca’s Vision AI tools.

Imagine giving your testing tool some simple visual cues for how your system should work and then building the functionality to make the tests pass. That’s what these tools are designed to do.

Writing tests that reflect how users interact with your app is crucial. But as your interface becomes more visual, testing gets trickier. You’ve likely had to include app-specific markup in your tests, which isn’t ideal — you’re supposed to test the app, not rebuild it in your tests. So, how do you avoid this?

In this article, we will look at how to shift UI testing away from technical implementation details and toward describing the visual cues that show things work as expected. Let’s dive in!

Mockup-Driven Development

With Vision AI, you can start with a mockup and build the actual UI later. I don’t think I’ve encountered anything like this before. When it comes to interface visuals, I’m used to building tests that are — unfortunately — tied more closely to my implementation details than I’d like. And these tests are fragile.

Vision AI allows me to point my tests at a prototype and train my test suite on the desired behavior. I say “train” because Tosca’s Vision AI features rely on convolutional neural networks under the hood, applying computer vision to build test suites.

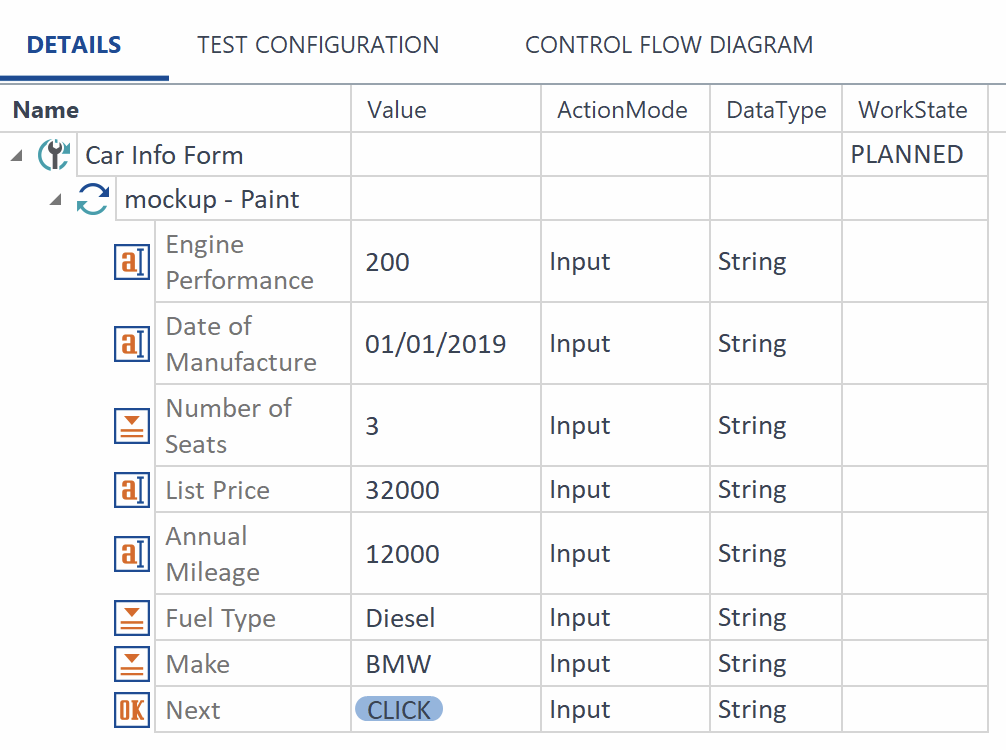

In Tosca Commander, I instructed Tosca to scan an application and set up a new testing module from it. For the application, I took a simple wireframe of a web form and opened it in Microsoft Paint. Then, I used the Select on screen feature of the screen scanner to pick the relevant elements from the page and build the testing module.

As you can see, I simply clicked on the boxes drawn in the wireframe, and Tosca could pick them out from the list of items it found when it scanned the “application.” Beyond that, Tosca could work out what each element on the mockup “form” was likely meant to represent.

From there, to build out a test, all I needed to do was provide the values I wanted Tosca to enter on the form.

Each form element had an icon next to it indicating the field type Tosca had detected (such as a dropdown or a text field). Tosca identified the Next element as a button.

It was time to run the test. What Tosca did next surprised me, in a good way. I didn’t need to write any code at all, yet Tosca could enter data into a demo site containing the same fields. The fields on the demo form were even in a different order than in the mockup, but that wasn’t an issue.

As Tosca navigated the form, it looked for the essential properties of each field or element and performed the action or input the test case requested. As it found each item, it logged the success or failure of that step, abstracting away the code that implements it.
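To make the order-independence concrete, here is a minimal Python sketch of matching test steps to detected elements by label and field type rather than by screen position. It does not reproduce Tosca’s internals: it assumes element detection has already happened, and the `DetectedElement` and `FormStep` types and the `run_steps` function are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DetectedElement:
    """Hypothetical output of a visual scan: one element found on screen."""
    label: str                 # text recognized next to the element, e.g. "Email"
    kind: str                  # "textbox", "dropdown", or "button"
    position: tuple[int, int]  # (x, y) on screen; reported, never used for matching


@dataclass
class FormStep:
    """Hypothetical test step derived from the mockup: what to do with which field."""
    label: str
    kind: str
    value: str | None = None   # None for buttons, which are only clicked


def run_steps(steps: list[FormStep], elements: list[DetectedElement]) -> list[str]:
    """Match each step to an element by (label, kind), ignoring order and position."""
    # Index detected elements by case-insensitive label plus field type.
    index = {(e.label.lower(), e.kind): e for e in elements}
    log: list[str] = []
    for step in steps:
        element = index.get((step.label.lower(), step.kind))
        if element is None:
            log.append(f"FAIL {step.label}: not found")
        elif step.kind == "button":
            log.append(f"PASS click {step.label} at {element.position}")
        else:
            log.append(f"PASS enter {step.value!r} into {step.label} at {element.position}")
    return log


# Steps in mockup order; the live form lists the same fields in a different order.
mockup_steps = [
    FormStep("First name", "textbox", "Ada"),
    FormStep("Country", "dropdown", "UK"),
    FormStep("Next", "button"),
]
live_form = [
    DetectedElement("Country", "dropdown", (40, 220)),
    DetectedElement("Next", "button", (40, 320)),
    DetectedElement("First Name", "textbox", (40, 120)),
]
for line in run_steps(mockup_steps, live_form):
    print(line)
```

Because the lookup key is (label, kind), reordering or moving fields leaves every step passing, while a field that is genuinely missing still produces a logged failure.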

Keeping Up With Changes

In addition to building test suites based on a mockup, Vision AI also lets us keep our tests intact even as changes are made to the less critical parts of the page being tested. For example, if Tosca detects that the position of fields has changed, it can still perform the tests you previously trained it to do. Here’s how Tosca handled another implementation of the same form, restructured into two columns.

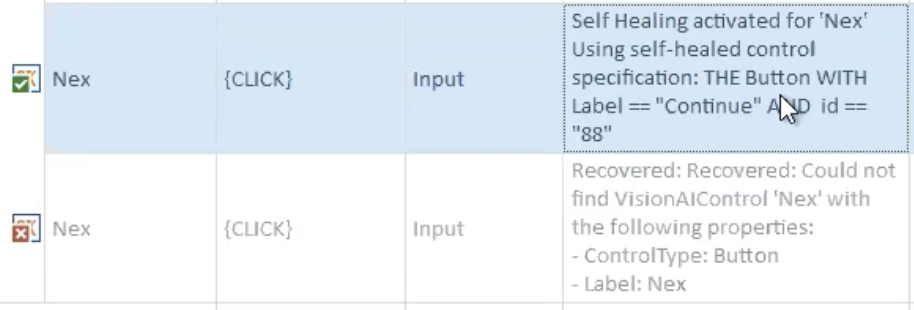

Beyond position changes, Tosca can also attempt to “self-heal” when something like a label changes. For example, consider a button the user must click to continue. When we originally built the form, the button label was Next, but we later changed it to Continue. Tosca is still able to recognize what to do. To enable self-healing tests, all I needed to do was set a few test parameters. Vision AI then uses various algorithms to retry a test until it is confident it really can’t find the element.

Here’s what a passing test looks like for the example where the button label has changed from Next to Continue.

When Tosca couldn’t find the button label it expected, it self-healed, updating its model to use the label it found instead. Pretty amazing!
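A rough Python sketch of the general retry-then-heal idea follows. It is not Vision AI’s algorithm, which this article doesn’t describe: the `Element` type, the `find_with_healing` helper, the default of three retries, and the fallback rule (accept the only on-screen element of the same kind as the renamed one) are all hypothetical assumptions for illustration.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Element:
    """Hypothetical element reported by one visual scan of the screen."""
    label: str
    kind: str


def find_with_healing(
    model: dict[str, str],
    key: str,
    kind: str,
    scan: Callable[[], list[Element]],
    retries: int = 3,
    delay: float = 0.0,
) -> Element | None:
    """Find model[key] on screen, retrying; heal if exactly one same-kind element exists."""
    expected = model[key]
    elements: list[Element] = []
    for attempt in range(retries):
        elements = scan()  # rescan on every attempt; the page may still be loading
        for element in elements:
            if element.kind == kind and element.label == expected:
                return element
        if attempt < retries - 1:
            time.sleep(delay)
    # The expected label never showed up. Assumed fallback rule: if exactly one
    # element of the same kind is on screen, treat it as the renamed element.
    candidates = [e for e in elements if e.kind == kind]
    if len(candidates) == 1:
        model[key] = candidates[0].label  # "heal": remember the new label
        return candidates[0]
    return None  # no candidate or several candidates: report a genuine failure


# The model was trained on "Next"; the live form now says "Continue".
model = {"submit_button": "Next"}
screen = [Element("Email", "textbox"), Element("Continue", "button")]
found = find_with_healing(model, "submit_button", "button", lambda: screen)
print(found, model)  # Element(label='Continue', kind='button') {'submit_button': 'Continue'}
```

Refusing to heal when more than one candidate is on screen keeps the sketch from silently clicking the wrong button, which is the trade-off any self-healing scheme has to balance against test flakiness.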

Vision Isn’t Everything

It’s nice to know that the visual aspects of your application are well-tested. However, it’s important to remember that accessibility (a11y) is critical as well. You want to ensure that as many people as possible can use your application. In addition to using computer vision to verify that the visual aspects work as expected, Tricentis has built accessibility testing into its tools. The most recent version of Tosca includes an updated a11y report detailing adherence to WCAG 2.2. You can generate this report for any features under test.

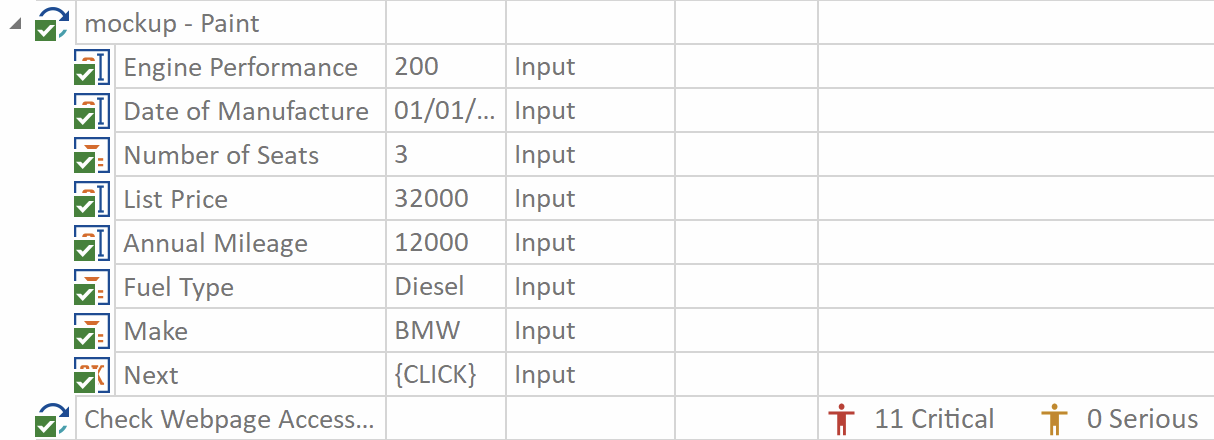

Adding accessibility testing is straightforward and doesn’t require any additional coding. Just drag the Check Webpage Accessibility module into your test case, and Tosca will give you a quick summary of the state of the site you’re testing:

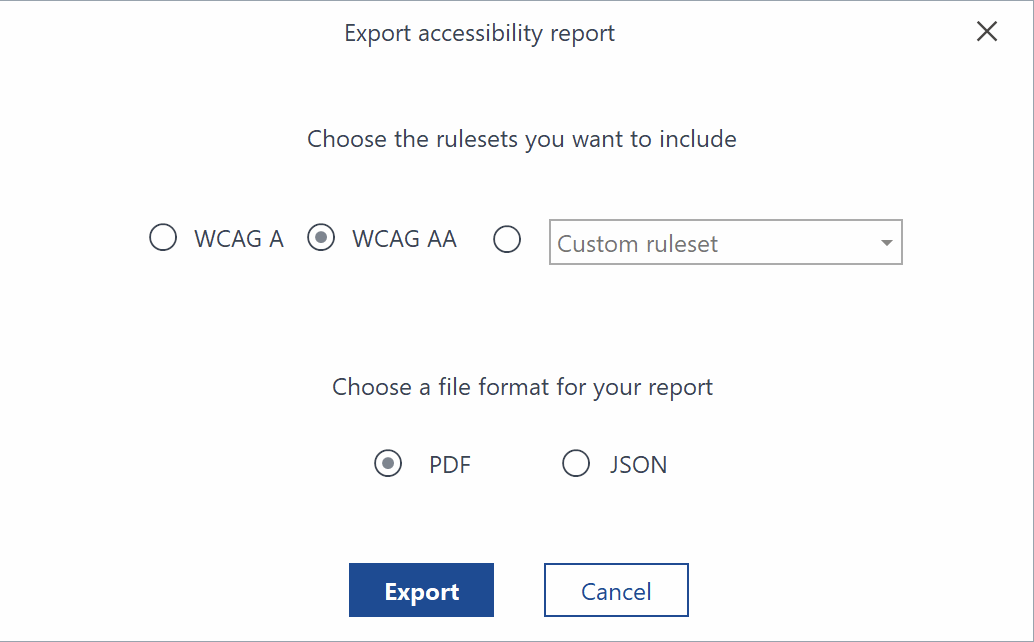

Finding 11 critical issues seems pretty concerning! To dig deeper, you can export the a11y report. You can even choose which standards Tosca should report against.

As soon as the results were compiled, my report opened, with helpful charts showing where the issues were and what needed fixing. Fortunately, the report also showed that, across the site, I had less to fix than I had feared.

Conclusion

Vision AI opens up a world of possibilities for simplifying and enhancing UI testing. From building tests directly from mockups to handling changes with ease, it’s a game-changer for anyone dealing with dynamic, visually complex applications. It’s not just about saving time — it’s about making the entire testing process smarter, more reliable, and less tied to brittle implementation details. I’ve barely scratched the surface of what’s possible, but what I’ve seen so far has left me thoroughly impressed.

How are you using Vision AI in your app testing workflow? Is it making your testing (and test writing) of visual interfaces faster? Share your stories!

Have a really great day!

Opinions expressed by DZone contributors are their own.
