Using CloudTrail Lake To Enable Auditing of Enterprise Applications

CloudTrail Lake is an AWS security service that can be used to ingest and analyze audit events from enterprise applications.


For a long time, AWS CloudTrail has been the foundational technology that enables organizations to meet compliance requirements by capturing audit logs for AWS API invocations. CloudTrail Lake extends CloudTrail's capabilities by adding a SQL-based query language to analyze audit events. The audit events are stored in Apache ORC, a columnar format, to enable high-performance SQL queries.

An important capability of CloudTrail Lake is the ability to ingest audit logs from custom applications or partner SaaS applications. With this capability, an organization can get a single aggregated view of audit events across AWS API invocations and its enterprise applications. Because an end-to-end business process can span multiple enterprise applications, an aggregated view of audit events across them becomes a critical need. This article discusses an architectural approach to using CloudTrail Lake for auditing enterprise applications, along with the corresponding design considerations.

Architecture

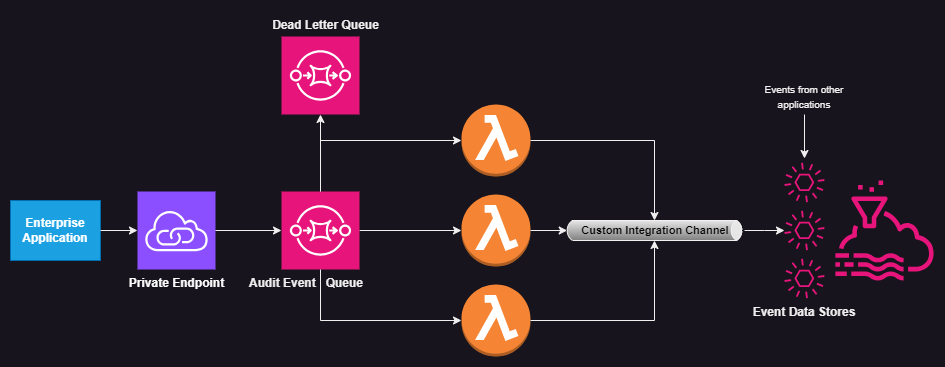

Let us start by taking a look at the architecture diagram.

This architecture uses SQS queues and AWS Lambda functions to provide an asynchronous and highly concurrent model for disseminating audit events from the enterprise application. At important steps in business transactions, the application calls the relevant AWS SDK APIs to send the audit event details as a message to the audit event SQS queue. The queue is configured as an event source for a Lambda function, so Lambda polls the queue and invokes the function with batches of messages. The function calls the putAuditEvents() API provided by CloudTrail Lake to ingest the audit events into the event data store configured for this enterprise application. Note that the architecture shows two other event data stores to illustrate that events from the enterprise application can be correlated with events in other data stores.
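On the producer side, the application only needs to serialize the audit details and send them to the queue. The following is a minimal sketch, assuming a hypothetical AuditMessage record with actor, action, resource, and timestamp fields; a real application will have its own message format, and the JSON escaping is hand-rolled only to keep the example free of dependencies.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical audit message published by the enterprise application to the
 * audit event SQS queue. Field names are illustrative, not a required format.
 */
record AuditMessage(String actor, String action, String resource, Instant occurredAt) {

    /** Serializes the message to a flat JSON object used as the SQS message body. */
    String toJson() {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("actor", actor);
        fields.put("action", action);
        fields.put("resource", resource);
        fields.put("occurredAt", occurredAt.toString()); // ISO-8601 in UTC
        StringBuilder json = new StringBuilder("{");
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (json.length() > 1) {
                json.append(','); // separator before every field except the first
            }
            json.append('"').append(escape(e.getKey())).append("\":\"")
                .append(escape(e.getValue())).append('"');
        }
        return json.append('}').toString();
    }

    /** Minimal JSON string escaping for quotes, backslashes, and control characters. */
    private static String escape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> out.append("\\\"");
                case '\\' -> out.append("\\\\");
                case '\n' -> out.append("\\n");
                case '\r' -> out.append("\\r");
                case '\t' -> out.append("\\t");
                default -> {
                    if (c < 0x20) {
                        out.append(String.format("\\u%04x", (int) c));
                    } else {
                        out.append(c);
                    }
                }
            }
        }
        return out.toString();
    }
}
```

The resulting string would then be passed as the message body to the SQS client, for example with AmazonSQS.sendMessage(queueUrl, body) in the AWS SDK for Java 1.x, where queueUrl is the URL of the audit event queue.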

Required Configuration

- Start by creating an event data store that accepts events of category ActivityAuditLog, which is the category used for events ingested through integrations. Note down the ARN of the event data store; it will be needed for creating an integration channel.

```shell
aws cloudtrail create-event-data-store \
    --name custom-events-datastore \
    --no-multi-region-enabled \
    --retention-period 90 \
    --advanced-event-selectors '[
        {
            "Name": "Select all external events",
            "FieldSelectors": [
                { "Field": "eventCategory", "Equals": ["ActivityAuditLog"] }
            ]
        }
    ]'
```

- Create an integration with "My Custom Integration" as the source, and choose the event data store created in the previous step as the delivery location. Note the ARN of the channel that is created; it will be needed for coding the Lambda function.

```shell
aws cloudtrail create-channel \
    --region us-east-1 \
    --destinations '[{"Type": "EVENT_DATA_STORE", "Location": "<event data store arn>"}]' \
    --name custom-events-channel \
    --source Custom
```

- Create a Lambda function that receives messages from the SQS queue, transforms each message into an audit event, and sends the events to the channel created in the previous step using the putAuditEvents() API. Refer to the next section for the main steps to include in the Lambda function logic.
- Add an inline policy to the Lambda function's execution role that authorizes it to put audit events into the integration channel.

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "Statement1",
            "Effect": "Allow",
            "Action": "cloudtrail-data:PutAuditEvents",
            "Resource": "<channel arn>"
        }
    ]
}
```

- Create an SQS queue of type "Standard" with an associated dead-letter queue.

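- Add an inline policy to the Lambda function's execution role that allows it to consume messages from the SQS queue. The SQS trigger needs to receive messages, delete processed messages, and read queue attributes, so these three actions are sufficient.

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "Statement1",
            "Effect": "Allow",
            "Action": [
                "sqs:ReceiveMessage",
                "sqs:DeleteMessage",
                "sqs:GetQueueAttributes"
            ],
            "Resource": "<SQS Queue arn>"
        }
    ]
}
```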

- In the Lambda function configuration, add a trigger by choosing "SQS" as the source and specifying the ARN of the SQS queue created earlier. Ensure that the "Report batch item failures" option is selected. Keep the batch size at 100 or less, because the function sends each batch in a single putAuditEvents() request, which accepts at most 100 audit events.
- Finally, ensure that permission to send messages to this queue (sqs:SendMessage) is added to the IAM role assigned to your enterprise application.

Lambda Function Code

The code sample focuses on the Lambda function, as it is the crux of the solution.

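```java
import com.amazonaws.services.cloudtraildata.AWSCloudTrailData;
import com.amazonaws.services.cloudtraildata.AWSCloudTrailDataClientBuilder;
import com.amazonaws.services.cloudtraildata.model.AuditEvent;
import com.amazonaws.services.cloudtraildata.model.PutAuditEventsRequest;
import com.amazonaws.services.cloudtraildata.model.PutAuditEventsResult;
import com.amazonaws.services.cloudtraildata.model.ResultErrorEntry;
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;
import com.amazonaws.services.lambda.runtime.events.SQSBatchResponse;
import com.amazonaws.services.lambda.runtime.events.SQSBatchResponse.BatchItemFailure;
import com.amazonaws.services.lambda.runtime.events.SQSEvent;
import com.amazonaws.services.lambda.runtime.events.SQSEvent.SQSMessage;
import java.util.ArrayList;
import java.util.List;

public class CustomAuditEventHandler implements RequestHandler<SQSEvent, SQSBatchResponse> {

    // Created once per execution environment and reused across warm invocations.
    private static final AWSCloudTrailData CLIENT = AWSCloudTrailDataClientBuilder.defaultClient();

    // Integration channel ARN; reading it from a CHANNEL_ARN environment variable is an assumption.
    private static final String CHANNEL_ARN = System.getenv("CHANNEL_ARN");

    @Override
    public SQSBatchResponse handleRequest(final SQSEvent event, final Context context) {
        List<AuditEvent> auditEvents = new ArrayList<>();

        for (SQSMessage record : event.getRecords()) {
            // Transform the message contents into the event data format needed by CloudTrail Lake.
            String eventData = transformToEventData(record);
            context.getLogger().log("Event Data JSON: " + eventData);

            AuditEvent auditEvent = new AuditEvent();
            auditEvent.setEventData(eventData);
            // Use the SQS message ID as the source event ID. It correlates the stored event with
            // the application and lets failed events be mapped back to their messages.
            auditEvent.setId(record.getMessageId());
            auditEvents.add(auditEvent);
        }

        PutAuditEventsRequest request = new PutAuditEventsRequest();
        request.setChannelArn(CHANNEL_ARN);
        request.setAuditEvents(auditEvents);
        PutAuditEventsResult putAuditEvents = CLIENT.putAuditEvents(request);
        context.getLogger().log("Put Audit Event Results: " + putAuditEvents);

        // Report only the failed messages; the rest of the batch is deleted from the queue.
        List<BatchItemFailure> failures = new ArrayList<>();
        for (ResultErrorEntry result : putAuditEvents.getFailed()) {
            failures.add(new BatchItemFailure(result.getId()));
            context.getLogger().log("Failed Event ID: " + result.getId()
                    + " (" + result.getErrorCode() + ": " + result.getErrorMessage() + ")");
        }

        SQSBatchResponse response = new SQSBatchResponse();
        response.setBatchItemFailures(failures);
        return response;
    }

    // Placeholder: assumes the message body is already JSON that follows the
    // CloudTrail Lake integration event schema. Replace with your own mapping.
    private String transformToEventData(final SQSMessage record) {
        return record.getBody();
    }
}
```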
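One way to implement transformToEventData() is to map fields from the application's message onto the CloudTrail Lake integration event schema. The sketch below assumes the message has already been parsed into individual values. The EventDataBuilder class, the "ApplicationUser" identity type, and the sample values are hypothetical, and the field names (version, userIdentity, eventSource, eventName, eventTime, UID, recipientAccountId) reflect our reading of the schema, so check them against the current CloudTrail documentation.

```java
import java.time.Instant;
import java.time.temporal.ChronoUnit;

/**
 * Hypothetical helper that builds the eventData JSON for one audit event,
 * following our reading of the CloudTrail Lake integration event schema.
 */
final class EventDataBuilder {

    private EventDataBuilder() {}

    static String buildEventData(String uid, String principalId, String eventSource,
                                 String eventName, Instant eventTime, String recipientAccountId) {
        // eventTime is written as UTC ISO-8601 with whole seconds, e.g. 2024-01-01T10:15:30Z.
        String time = eventTime.truncatedTo(ChronoUnit.SECONDS).toString();
        return "{"
                + "\"version\":\"1.0\","
                // "ApplicationUser" is an illustrative identity type, not a required value.
                + "\"userIdentity\":{\"type\":\"ApplicationUser\",\"principalId\":" + quote(principalId) + "},"
                + "\"eventSource\":" + quote(eventSource) + ","
                + "\"eventName\":" + quote(eventName) + ","
                + "\"eventTime\":" + quote(time) + ","
                + "\"UID\":" + quote(uid) + ","
                + "\"recipientAccountId\":" + quote(recipientAccountId)
                + "}";
    }

    /** Wraps a value in double quotes with minimal JSON escaping. */
    private static String quote(String s) {
        StringBuilder out = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                out.append('\\').append(c);
            } else if (c < 0x20) {
                out.append(String.format("\\u%04x", (int) c));
            } else {
                out.append(c);
            }
        }
        return out.append('"').toString();
    }
}
```

Passing the SQS message ID as the UID keeps each stored event traceable to the message that produced it, and redelivered copies of a message carry the same UID, which makes them easy to spot in queries.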

- The first thing to note is that the handler's return type is SQSBatchResponse. SQS delivers messages to the function in batches either way; returning SQSBatchResponse lets the function report which messages in a batch failed, so that only those messages are retried.
- Each enterprise application has its own format for representing audit messages. The logic to transform these messages into the CloudTrail Lake event schema should be part of the Lambda function. Keeping it there allows the same architecture to be reused even if the audit events need to be ingested into a different tool, such as a SIEM, instead of CloudTrail Lake.

- Apart from the event data itself, the putAuditEvents() API of CloudTrail Lake expects a source event ID for each event. This ID can be used to tie the audit event stored in CloudTrail Lake to relevant information in the enterprise application.
- Messages that failed to be ingested should be added to the list of failed records in the SQSBatchResponse object. This ensures that all successfully processed records are deleted from the SQS queue, while failed records become visible again after the visibility timeout and are retried. Records that keep failing are moved to the dead-letter queue once the queue's maximum receive count is reached.

- Note that the code uses the source event ID (result.getId()) as the ID of failed records. This works because the source event ID was set to the SQS message ID earlier in the code. If a different identifier is used as the source event ID, it has to be mapped to the message ID, so that the message IDs of records that were not ingested successfully can be found when building the Lambda function response.
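When the source event ID is something other than the SQS message ID, such as a business transaction ID, the handler needs a per-batch lookup from one to the other. A minimal sketch, assuming a hypothetical SourceIdMapper class that the handler would populate while building audit events and query while processing the failed entries returned by putAuditEvents():

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical per-batch mapping from source event IDs (sent to PutAuditEvents)
 * back to SQS message IDs (needed for the SQSBatchResponse).
 */
final class SourceIdMapper {

    // source event ID -> SQS message ID for the current batch
    private final Map<String, String> messageIdBySourceId = new HashMap<>();

    /** Records the pairing when an audit event is built from an SQS message. */
    void register(String sourceEventId, String sqsMessageId) {
        String previous = messageIdBySourceId.putIfAbsent(sourceEventId, sqsMessageId);
        if (previous != null && !previous.equals(sqsMessageId)) {
            // Two different messages produced the same source event ID; the API
            // rejects duplicate IDs within one request, so fail fast here.
            throw new IllegalArgumentException("Duplicate source event ID in batch: " + sourceEventId);
        }
    }

    /** Translates the IDs reported as failed back into SQS message IDs. */
    List<String> messageIdsForFailures(List<String> failedSourceIds) {
        List<String> messageIds = new ArrayList<>();
        for (String sourceId : failedSourceIds) {
            String messageId = messageIdBySourceId.get(sourceId);
            if (messageId == null) {
                throw new IllegalStateException("Unknown source event ID: " + sourceId);
            }
            messageIds.add(messageId);
        }
        return messageIds;
    }
}
```

Throwing on an unknown ID fails the whole invocation, which causes the entire batch to be retried; that is a conservative choice that avoids silently losing an audit event.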

Architectural Considerations

This section discusses the choices made for this architecture and the corresponding trade-offs. These need to be considered carefully while designing your solution.

- FIFO vs. Standard Queues

- Audit events are usually self-contained units of data, and each one records its own eventTime. So the order in which they are ingested into CloudTrail Lake does not change the information they convey, and queries can order them by eventTime when needed. Hence, there is no need to use a FIFO queue to maintain the information integrity of audit events.

- Standard queues provide higher concurrency than FIFO queues with respect to fanning out messages to Lambda function instances. This is because, unlike FIFO queues, they do not have to maintain the order of messages at the queue or message group level. Achieving a similar level of concurrency with FIFO queues would require increasing the complexity of the source application as it has to include logic to fan out messages across message groups.

- With standard queues, there is a small chance that the same message is delivered more than once. This should not be a problem, as duplicates can be filtered out in CloudTrail Lake queries, for example by grouping on the unique identifier carried in each event.

- SNS vs. SQS: This architecture uses SQS instead of SNS for the following reasons:

- SNS FIFO topics cannot deliver messages directly to Lambda functions, and although standard topics can, each invocation receives a single message.

- SQS keeps each message until the function processes it successfully, retries it after the visibility timeout, and moves messages that keep failing to the dead-letter queue. This reliability is valuable, especially for data as important as audit events.

- The SQS trigger can group audit events into batches, controlled by the batch size and batching window settings, before invoking Lambda. This improves the performance and cost of the Lambda function and reduces the number of requests sent to CloudTrail Lake compared with one request per event.
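To use a trigger batch size above the 100-event limit of PutAuditEvents, the handler can split each SQS batch before calling the API. A minimal sketch with a hypothetical AuditEventChunker helper; it only enforces the event count, not the API's overall request size limit.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that splits a batch into groups accepted by one PutAuditEvents call. */
final class AuditEventChunker {

    // Maximum number of audit events per PutAuditEvents request.
    static final int MAX_EVENTS_PER_REQUEST = 100;

    private AuditEventChunker() {}

    static <T> List<List<T>> chunk(List<T> items, int maxPerChunk) {
        if (maxPerChunk < 1) {
            throw new IllegalArgumentException("maxPerChunk must be >= 1");
        }
        List<List<T>> chunks = new ArrayList<>();
        for (int start = 0; start < items.size(); start += maxPerChunk) {
            int end = Math.min(start + maxPerChunk, items.size());
            // Copy so each chunk does not depend on the source list.
            chunks.add(new ArrayList<>(items.subList(start, end)));
        }
        return chunks;
    }
}
```

The handler would then call putAuditEvents() once per chunk and collect the failed entries from every call into a single SQSBatchResponse.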

There are other factors to consider as well, such as the use of AWS PrivateLink, VPC integration, and encryption of messages in transit, to transmit audit events securely. The concurrency and message delivery settings of the SQS-Lambda integration should also be tuned based on the throughput and complexity of the audit events. The approach presented and the architectural considerations discussed provide a good starting point for using CloudTrail Lake with enterprise applications.
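As a starting point for tuning, the number of concurrent function instances can be estimated from the event rate, the batch size, and the average invocation duration, using the common Lambda rule of thumb that concurrency equals invocations per second multiplied by average duration. A rough sketch with a hypothetical ConcurrencyEstimate helper; it assumes full batches, so real concurrency will be higher when the batching window closes with partially filled batches.

```java
/**
 * Hypothetical sizing helper: concurrency ~= invocations per second x average duration.
 * All inputs are assumptions to be measured for a specific workload.
 */
final class ConcurrencyEstimate {

    private ConcurrencyEstimate() {}

    static long estimate(long eventsPerSecond, int batchSize, long avgDurationMillis) {
        if (eventsPerSecond < 0 || batchSize < 1 || avgDurationMillis < 0) {
            throw new IllegalArgumentException("invalid input");
        }
        // Each invocation handles up to batchSize events (assumes full batches).
        long invocationsPerSecond = ceilDiv(eventsPerSecond, batchSize);
        // In-flight invocations = arrival rate x time each one runs (Little's law).
        return ceilDiv(invocationsPerSecond * avgDurationMillis, 1000);
    }

    /** Integer division rounded up, for non-negative a and positive b. */
    private static long ceilDiv(long a, long b) {
        return (a + b - 1) / b;
    }
}
```

For example, 500 events per second with a batch size of 50 and a 400 ms average duration gives 10 invocations per second and an estimate of 4 concurrent instances.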

Opinions expressed by DZone contributors are their own.
