Use of AWS Step Function to Modernize Asynchronous Integration With Oracle ERP Cloud – A Case Study

This case study is about the modernization of an existing integration in Oracle Integration Cloud by re-solutioning the same in AWS.


AWS offers a handful of services for integration. AWS is not a full-fledged integration tool in the way that well-known products such as Oracle Integration Cloud, Oracle Service Bus, and MuleSoft (among many others) are. Even so, effective solutions can still be built by leveraging its existing service offerings.

Background

One might ask why a retail business would suddenly want to migrate a live integration to AWS when the current one is already on the cloud (and presumably already benefiting from the “pay as you use” model of Oracle Cloud). One likely reason is that the business was investing more in AWS at the enterprise level. It was therefore increasingly inclined to keep everything on the same cloud rather than subscribe to another one just for integrations. Another reason was the lack of staff with the skills and expertise needed to support and debug the integration. More broadly, businesses have shifted markedly toward integrating systems with microservices on a cloud platform rather than paying for a dedicated integration product, and this case study is no exception.

Requirement and Challenge

The requirement was to integrate an in-house application with Oracle ERP Cloud to sync Transfer Orders between the source system and the ERP. The challenging part was the need for at least near-real-time integration (if not real-time). A business user initiates a transfer from one inventory to another in the source application's portal and then waits to see whether it has been successfully synced to Oracle ERP.

On the ERP side, the inputs are the Item Number (SKU), the source and target inventory details, and the transfer quantities. Once these are fed into the ERP, its internal processing takes a considerable amount of time to complete. The existing solution used the ERP adapter in OIC for this. Once the Transfer Order was created, a business event was also triggered. The integration subscribed to that event and sent the confirmation back to the source application by calling an API exposed by that application.
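To make the inputs listed above concrete, here is a minimal sketch of the transfer request as a Python data structure, with the kind of checks a parsing step might perform. The field names (`item_number`, `source_inventory`, `target_inventory`, `quantity`) and the validation rules are hypothetical; the case study does not specify the actual payload schema.

```python
from dataclasses import dataclass
from typing import Any, Mapping

# Hypothetical field names; the real payload schema is not given in the case study.
REQUIRED_FIELDS = ("item_number", "source_inventory", "target_inventory", "quantity")


@dataclass(frozen=True)
class TransferOrderRequest:
    """Hypothetical shape of a transfer request sent by the source application."""
    item_number: str        # Item Number (SKU)
    source_inventory: str   # inventory the stock moves from
    target_inventory: str   # inventory the stock moves to
    quantity: int           # transfer quantity

    @classmethod
    def from_payload(cls, payload: Mapping[str, Any]) -> "TransferOrderRequest":
        """Build a request from a decoded JSON payload, rejecting obviously bad input."""
        missing = [name for name in REQUIRED_FIELDS if name not in payload]
        if missing:
            raise ValueError(f"missing fields: {', '.join(missing)}")
        quantity = int(payload["quantity"])  # accepts "5" as well as 5
        if quantity <= 0:  # assumed rule: a transfer must move at least one unit
            raise ValueError("quantity must be positive")
        return cls(
            item_number=str(payload["item_number"]),
            source_inventory=str(payload["source_inventory"]),
            target_inventory=str(payload["target_inventory"]),
            quantity=quantity,
        )
```

In a Lambda handler this would typically be called as `TransferOrderRequest.from_payload(json.loads(event["body"]))`. Raising early keeps malformed requests from ever reaching the ERP calls.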

Initial Solution

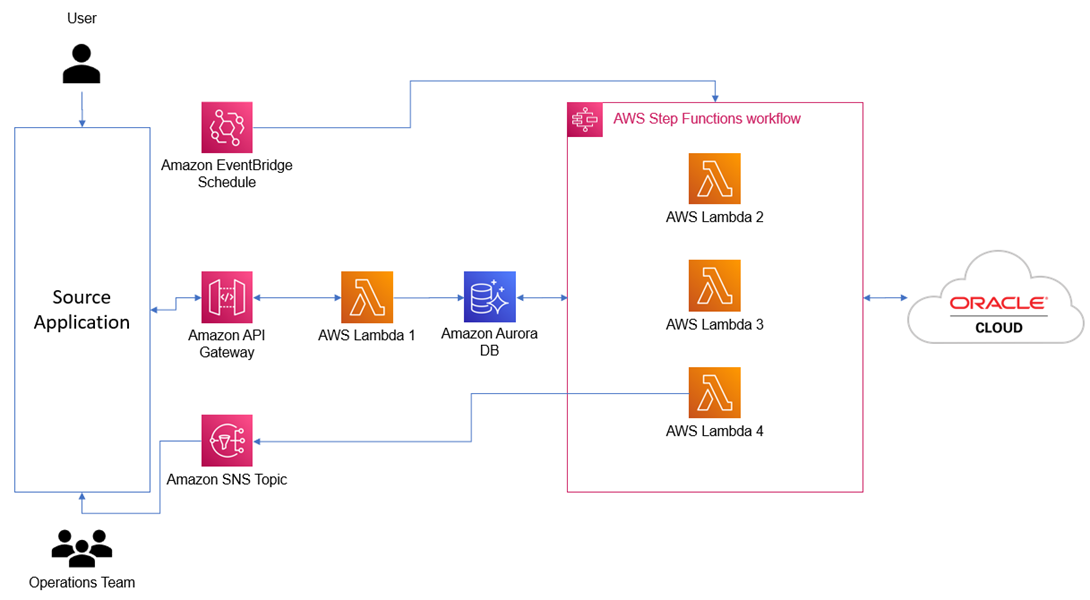

In AWS, not having any strong ERP adapter like OIC was a straightforward disadvantage. The alternative and obvious route relied on Oracle's APIs to do the job. Creating a Transfer Order and having it shipped (which was an implicit part of the requirement) using APIs involves multiple API calls, and typically, there comes a situation where until the Transfer Order gets generated in ERP, the API for the next steps cannot be called. Hence, the clear indication is that the integration cannot be real-time; at the most, it can be near-real-time. Post a lot of discussion around technical limitations, pros and cons with the business stakeholders; the solution was thought to be implemented using the serverless architecture and integration offerings within AWS; the idea is even if it were not a real-time integration after the request payload gets ingested in the database by the Lambda 1 (exposed via API Gateway), it would be executed frequently to get triggered in every 15 minutes to execute a Step Function orchestrating three more Lambda functions to do the job, each Lambda function being a microservice. Lambda 2 would parse the payload, Lambda 3 would create the Transfer Order, and Lambda 4 would Ship the Transfer Order. Lambda 3 and 4 would call multiple ERP APIs as required, and Lambda 4 would have the ERP API call, which had a prerequisite for the success of Lambda 3’s API call. Here, success means when the API call returns a Transfer Order, meaning a TO is created in ERP (it may return blank or nothing if the TO is yet to be generated in ERP). The idea was if the first schedule can’t execute the Lambda 4, it would exit. The following schedule, after 15 minutes, would try again (of course, the initial ERP API calls would be skipped within the Lambda 3 as those would have executed in the first schedule, which can be easily tracked through the states captured in the database. 
However, it would still execute the Lambda functions just to check the state in the database and not to call the ERP APIs again. With this design, it was seen that eventually, it takes three to four triggers to complete the whole process, i.e., the time span sums up to 30 to 45 minutes for which the user will sit and wait for the response back to their system through an SNS topic. The design looks as below.
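The skip logic of the original scheduled design can be sketched in plain Python. A later scheduled run re-reads the recorded state and skips ERP calls that already succeeded. This is a minimal illustration under stated assumptions:

- An in-memory dictionary stands in for the real database table.
- Plain callables stand in for the ERP API calls.
- The step names in the usage example are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical stand-in for the state table: request id -> {step name: recorded result}
StateStore = Dict[str, Dict[str, str]]
# A step is a name plus a callable that returns a result, or None/"" if ERP is not ready yet
Step = Tuple[str, Callable[[], Optional[str]]]


def run_scheduled_pass(request_id: str, steps: List[Step], store: StateStore) -> bool:
    """Run the steps in order, skipping any whose result is already recorded.

    Returns True once every step has completed. Returns False as soon as a step
    reports "not ready" (e.g., the Transfer Order is not yet generated in ERP),
    leaving the next scheduled pass to resume from the recorded state.
    """
    done = store.setdefault(request_id, {})
    for name, call in steps:
        if name in done:
            continue  # executed in an earlier pass: do not call the ERP API again
        result = call()
        if not result:
            return False  # blank/empty response: exit and wait for the next schedule
        done[name] = result  # persist progress so later passes can skip this step
    return True
```

Note that even a pass that skips every completed step still has to be invoked and read the store. That is the wasted execution the next section describes.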

Issues and Impacts

A couple of months after this solution went live, the technical team started receiving complaints from the business. They concerned the turnaround time of the process and how long users had to sit in front of the system waiting for a response. In summary, the pain points in this design were:

- Performance – The non-functional requirements (NFRs) did not involve a high volume of data (a maximum of 100 requests per month), but performance suffered in another respect: the waiting time became a daily frustration for business users. Surprisingly, the turnaround time during UAT was quicker (~15 minutes), so the issue was never raised before go-live.

- Cost – For a given Transfer Request, the ERP API calls were not repeated from the second scheduled run onwards (when the first run could not complete the process). However, the Lambda functions were still executed on every run.

Improvement

To address the above issues, the Step Function was further fine-tuned. Below are the changes made to make it a more effective solution.

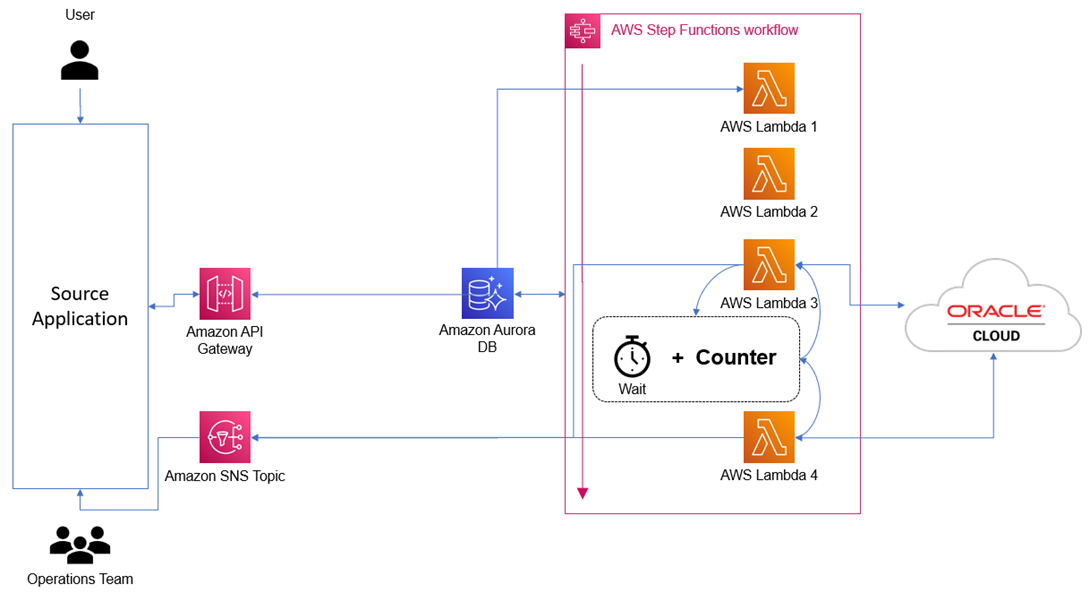

- No Scheduling: The scheduling approach was removed entirely. Instead, Lambda 1 was included in the Step Function workflow, and the Step Function itself was exposed as a REST API via API Gateway.

- Additional Workflow Logic: An additional workflow logic was incorporated between the Lambda 3 and Lambda 4 calls to have an Attempt Limit counter checked and the Lambda 3 response checked (to see if the Transfer Order is generated in ERP) before calling the Lambda 4. A wait time (configurable inside the Step Function) was incorporated, which would make the workflow wait, and if the maximum attempt limit were yet to be exhausted, then the flow would go back to the Lambda 3. If the attempt count gets exhausted (the counter would be incremented inside Lambda 3 and propagated to the Attempt Count Check State of the State Machine) after the maximum limit, then the error would be notified through the SNS topic. Typically, the count and wait time were decided meticulously to keep those optimal so that it would be safe to assume that if the count is exhausted, it indicates some issue that needs to be addressed manually. After the manual fix is done, the API Gateway can be directly invoked by Operations Team to initiate the workflow once again. Alternatively, a daily-once schedule can also be set to avoid this manual invoke of the API.

The above solution addressed the performance issue because it reduced the waiting time of 15 minutes (before each attempt) to the wait time configured within the Step Function, which was set up as five minutes. Hence, eventually, the whole process that took 45 minutes (assuming, in the worst case, the schedule gets triggered after 15 minutes from the API call to the Lambda 1 in the old design) to get completed would now take only 10 minutes (as the Step Function does not have to wait for the schedule to get triggered but the Lambda 3 would be called immediately after the waiting time is elapsed as they are within the same Step Function workflow) maximum, i.e., ~77% improvement in turn around time. Also, the new solution addressed the cost issue (though insignificant as the overall volume would never be high) because it avoids executing the Lambda 2 once that is already executed. The new solution looks as below.
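The control flow of the improved design, together with the turnaround arithmetic, can be simulated in plain Python. This is a sketch under stated assumptions, not the deployed workflow:

- `create_transfer_order` is a hypothetical stand-in for Lambda 3.
- `max_attempts` counts the total number of Lambda 3 calls.
- Time is tallied in minutes instead of actually sleeping.
- The execution time of the Lambda functions themselves is ignored.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Outcome:
    transfer_order: Optional[str]  # None means the attempt limit was exhausted
    attempts: int                  # number of Lambda 3 calls made
    waited_minutes: int            # total time spent in the Wait state


def run_improved_workflow(
    create_transfer_order: Callable[[int], Optional[str]],  # hypothetical Lambda 3 stand-in
    max_attempts: int = 3,
    wait_minutes: int = 5,
) -> Outcome:
    """Simulate: Lambda 3 -> response/attempt-count check -> Wait -> Lambda 3 ..."""
    counter = 0
    waited = 0
    while True:
        counter += 1  # Lambda 3 increments the counter and passes it on
        to_number = create_transfer_order(counter)
        if to_number:
            # TO generated in ERP: the workflow proceeds to Lambda 4 (ship)
            return Outcome(to_number, counter, waited)
        if counter >= max_attempts:
            # Limit exhausted: the workflow would publish an error to SNS
            return Outcome(None, counter, waited)
        waited += wait_minutes  # Wait state, then loop straight back to Lambda 3


def old_worst_case_minutes(runs: int, schedule_minutes: int = 15) -> int:
    """Old design, worst case: every run, including the first, starts a full interval later."""
    return runs * schedule_minutes


# The TO appears on the third attempt, matching the worst case described in the text.
outcome = run_improved_workflow(lambda attempt: "TO-1001" if attempt == 3 else None)
old = old_worst_case_minutes(3)
improvement = (old - outcome.waited_minutes) / old
print(outcome.waited_minutes, old, round(improvement * 100))  # 10 45 78
```

The saving comes entirely from shrinking the gap between attempts. The number of attempts needed is still governed by how long ERP takes to generate the Transfer Order.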

Takeaway

Step Function is a useful AWS service for orchestration and workflows when modernizing integrations. It can orchestrate almost all AWS services, making it a robust option in many cases. It comes with a capable Workflow Studio whose features keep evolving and improving. The underlying definition is written in a JSON-based language called Amazon States Language.

However, modernizing integrations with Lambda functions may not always be a favorable option. This is especially true when the integration has heavy transformation logic and high transaction volumes, where the cost can rise sharply. Small integrations with simple transformation logic are better suited to Lambda functions as a serverless option. Even then, the advantages of Step Function can still be leveraged by orchestrating microservices running on services such as ECS and EKS, which Step Function supports.

Sample Code of Step Function

Below is sample code with orchestration logic similar to the improved solution described in this case study. It is not the exact solution, just an indicative sample that can serve as a reference.

{

"Comment": "A description of my state machine",

"StartAt": "CallAPIThroughLambda",

"States": {

"CallAPIThroughLambda": {

"Type": "Task",

"Resource": "arn:aws:states:::lambda:invoke",

"OutputPath": "$.Payload",

"Parameters": {

"FunctionName": "arn:aws:lambda:us-east-1:495125011821:function:callMockAPI:$LATEST",

"Payload.$": "$"

},

"Retry": [

{

"ErrorEquals": [

"Lambda.ServiceException",

"Lambda.AWSLambdaException",

"Lambda.SdkClientException"

],

"IntervalSeconds": 2,

"MaxAttempts": 6,

"BackoffRate": 2

}

],

"Next": "CheckResponse"

},

"SNS Publish Error": {

"Type": "Task",

"Resource": "arn:aws:states:::sns:publish",

"Parameters": {

"TopicArn": "arn:aws:sns:us-east-1:495125011821:dummy_error_topic",

"Message.$": "$"

},

"End": true

},

"CheckAttemptCount": {

"Type": "Choice",

"Choices": [

{

"Variable": "$.counter",

"NumericLessThanEquals": 3,

"Comment": "If Attempt Limit is not exhausted",

"Next": "Wait for 1 minute"

}

],

"Default": "SNS Publish Error",

"Comment": "Wait if response status is not 200"

},

"CheckResponse": {

"Type": "Choice",

"Choices": [

{

"Variable": "$.statusCode",

"NumericEquals": 200,

"Next": "CallAPI2ThroughLambda"

}

],

"Default": "CheckAttemptCount"

},

"CallAPI2ThroughLambda": {

"Type": "Task",

"Resource": "arn:aws:states:::lambda:invoke",

"OutputPath": "$.Payload",

"Parameters": {

"Payload.$": "$",

"FunctionName": "arn:aws:lambda:us-east-1:495125011821:function:callMockAPI2:$LATEST"

},

"Retry": [

{

"ErrorEquals": [

"Lambda.ServiceException",

"Lambda.AWSLambdaException",

"Lambda.SdkClientException"

],

"IntervalSeconds": 2,

"MaxAttempts": 6,

"BackoffRate": 2

}

],

"Next": "SNS Publish Response"

},

"SNS Publish Response": {

"Type": "Task",

"Resource": "arn:aws:states:::sns:publish",

"Parameters": {

"Message.$": "$",

"TopicArn": "arn:aws:sns:us-east-1:495125011821:dummy_topic"

},

"End": true

},

"Wait for 1 minute": {

"Type": "Wait",

"Seconds": 60,

"Next": "CallAPIThroughLambda"

}

}

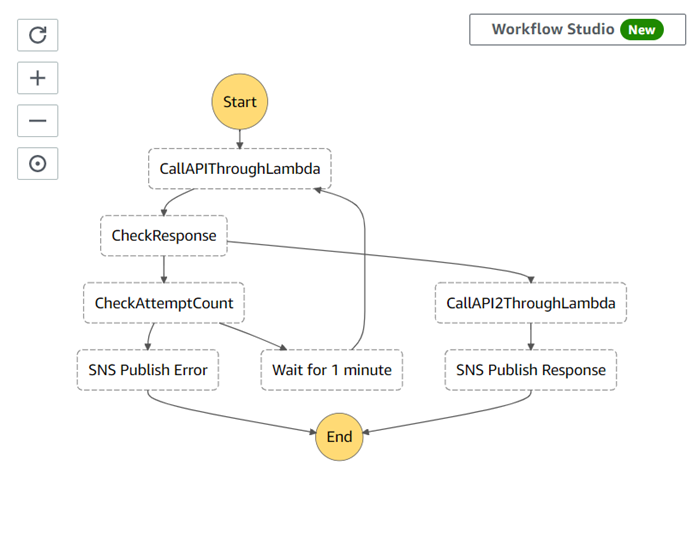

}Respective Graphical Representation in the Workflow Studio

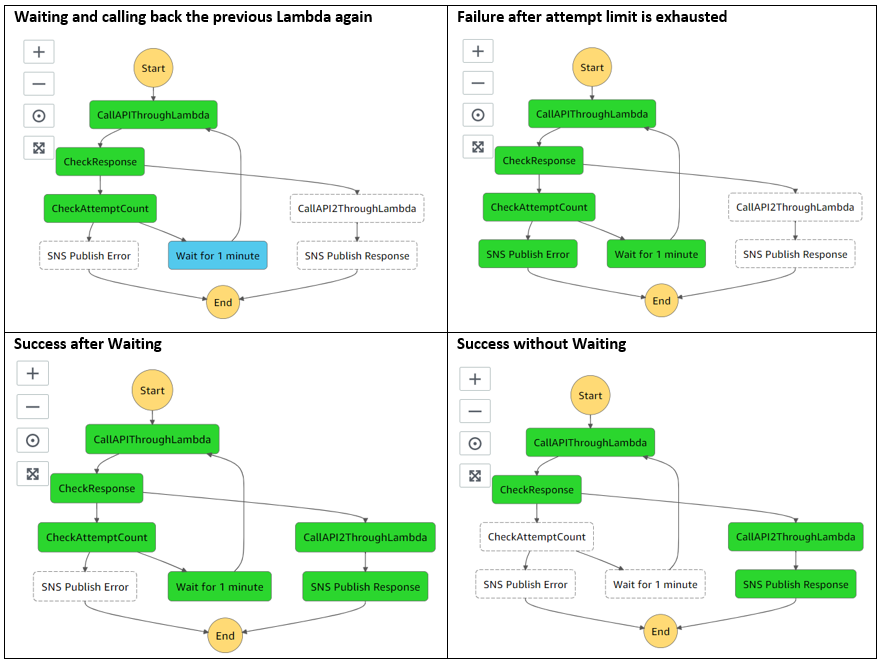

During execution, the instance looks like as below.
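The branching in the sample definition can be traced without deploying it by evaluating its two Choice states against a given Lambda output. The sketch below is a hypothetical helper, not part of Step Functions. It embeds only the Choice states from the sample and supports just the two comparison operators they use (`NumericEquals` and `NumericLessThanEquals`). It also handles only simple top-level `$.field` paths.

```python
from typing import Any, Callable, Dict

# The two Choice states from the sample definition, copied as Python dicts.
CHOICES: Dict[str, Dict[str, Any]] = {
    "CheckResponse": {
        "Choices": [{"Variable": "$.statusCode", "NumericEquals": 200,
                     "Next": "CallAPI2ThroughLambda"}],
        "Default": "CheckAttemptCount",
    },
    "CheckAttemptCount": {
        "Choices": [{"Variable": "$.counter", "NumericLessThanEquals": 3,
                     "Next": "Wait for 1 minute"}],
        "Default": "SNS Publish Error",
    },
}

# Only the comparison operators used by the sample are supported.
OPERATORS: Dict[str, Callable[[Any, Any], bool]] = {
    "NumericEquals": lambda actual, expected: actual == expected,
    "NumericLessThanEquals": lambda actual, expected: actual <= expected,
}


def next_state(state: Dict[str, Any], data: Dict[str, Any]) -> str:
    """Return the Next of the first matching choice rule, else the state's Default."""
    for rule in state["Choices"]:
        path = rule["Variable"]
        if not path.startswith("$."):
            raise ValueError(f"unsupported path: {path}")
        field = path[2:]  # only top-level fields such as $.counter
        for op, test in OPERATORS.items():
            if op in rule and test(data[field], rule[op]):
                return rule["Next"]
    return state["Default"]


def route(data: Dict[str, Any]) -> str:
    """Follow CheckResponse and, when needed, CheckAttemptCount for one Lambda output."""
    target = next_state(CHOICES["CheckResponse"], data)
    if target == "CheckAttemptCount":
        target = next_state(CHOICES["CheckAttemptCount"], data)
    return target
```

For example, `route({"statusCode": 500, "counter": 3})` gives `"Wait for 1 minute"`, while a counter of 4 falls through to `"SNS Publish Error"`. Because the rule is `<= 3`, the Lambda would be called up to four times before the error branch is taken. This assumes the Lambda sets `counter` to 1 on the first call and increments it by one on each call; the sample does not show the Lambda code.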

Opinions expressed by DZone contributors are their own.
