Understanding API Caching and Its Benefits in Improving Performance and User Experience

API caching improves performance, user experience, and scalability. Future trends include advanced cache invalidation, dynamic content handling, and enhanced security.

Caching plays a vital role in enhancing the performance and scalability of APIs, allowing for quicker retrieval of data and reducing the load on backend systems. By intelligently storing and serving frequently accessed data, caching can significantly improve response times and overall user experience. In this article, we will build upon the insights shared in our earlier blog post and explore different caching strategies that can be employed to optimize API performance further.

Before we dive in, we'd like to acknowledge the inspiration for this article. We draw upon the valuable insights and expertise shared in the blog post titled "Using Caching Strategies to Improve API Performance" by Toro Cloud, a leading provider of API management solutions. If you haven't read their insightful post yet, we highly recommend checking it out.

Without further ado, let's explore the world of caching strategies and discover how they can revolutionize the performance of your APIs.

Definition and Purpose of API Caching

API caching refers to the process of temporarily storing the responses of API requests to serve subsequent identical requests faster. It involves storing the server's response in a cache and retrieving it directly from the cache instead of making the same request to the server again. This caching mechanism improves performance and reduces the load on the API server.

API Caching Serves Two Primary Purposes:

- Improved Performance: Caching API responses allows subsequent requests to be served faster since the response is retrieved from the cache, eliminating the need to process the request again on the server. This leads to reduced response times and improved overall performance.

- Reduced Server Load: By serving cached responses, API caching reduces the number of requests the server needs to handle. This helps in optimizing server resources and scaling the API infrastructure more efficiently, especially during periods of high traffic or frequent identical requests.

Importance of Performance and User Experience in API Design

In today's digital landscape, performance and user experience play crucial roles in the success of an API-driven application. Slow API responses and high latency can frustrate users and negatively impact their experience. By implementing API caching, developers can significantly enhance performance, reduce latency, and ensure a smooth and responsive user experience.

API caching is an important aspect of API design, driven by the significance of performance and user experience. Performance directly affects user satisfaction and engagement. Users expect fast and responsive applications, and slow API responses can lead to frustration and abandonment. Therefore, optimizing API performance is vital for meeting user expectations. Additionally, delivering a superior user experience gives a competitive advantage in the digital landscape. APIs play a key role in providing data and functionality, and by focusing on performance optimization, developers can enhance user experience and gain an edge over competitors.

Latency, or the delay between making an API request and receiving a response, significantly influences user perception. Even small delays can impact the perceived performance of an application. API caching reduces latency by serving cached responses quickly, resulting in a more responsive and satisfying user experience. Scalability is another important consideration. As an application grows, API infrastructure must handle higher traffic volumes without compromising performance. API caching helps alleviate the load on the server, enabling more efficient scaling.

API caching also offers benefits beyond performance improvements. It optimizes network bandwidth usage by reducing data transfers between the client and server. This can lead to lower costs, especially in scenarios where data transfer is charged or limited. In mobile applications or low-bandwidth environments, API caching becomes even more critical. Caching responses locally on the device or at intermediate caching layers reduces reliance on network requests, resulting in faster data access and an improved user experience, particularly in intermittent or slow connectivity situations.

Overall, API caching is essential for improving performance, enhancing user experience, optimizing scalability, reducing costs, and catering to mobile and low-bandwidth environments. By understanding caching mechanisms and implementing best practices, developers can successfully leverage API caching to deliver efficient and responsive applications.

Overview of the Caching Process

API caching involves storing and retrieving responses from a cache to reduce repetitive computations or data retrieval. Here's a brief overview of the caching process:

When a client sends a request to the API, the API checks if the requested data is already present in the cache. If found (cache hit), the API retrieves the cached response and returns it to the client. This eliminates the need for further processing and significantly improves response time.

If the requested data is not in the cache (cache miss), the API fetches it from the server. The server processes the request, generates a response, and sends it back to the API.

Upon receiving the response, the API caches it for future use, associating it with a unique identifier. Subsequent requests for the same data can be served directly from the cache, resulting in faster response times and reduced server load.

Cache invalidation is crucial to maintain accurate data. When the underlying data changes or expires, the cached response needs to be invalidated. Strategies like time-based expiration or event-driven invalidation can ensure cache consistency.

In summary, API caching optimizes performance and user experience by storing and reusing responses, reducing the need for repetitive computations, and improving response times.
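The hit-and-miss flow described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a production design: the `ResponseCache` class, the `slow_backend` function, and the request key format are all hypothetical names chosen for the example.

```python
from typing import Callable, Dict


class ResponseCache:
    """Hypothetical in-memory cache keyed by a unique request identifier."""

    def __init__(self, fetch_from_server: Callable[[str], str]) -> None:
        self._fetch = fetch_from_server
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, request_key: str) -> str:
        # Cache hit: return the stored response without touching the server.
        if request_key in self._store:
            self.hits += 1
            return self._store[request_key]
        # Cache miss: ask the server, then store the response for next time.
        self.misses += 1
        response = self._fetch(request_key)
        self._store[request_key] = response
        return response


def slow_backend(request_key: str) -> str:
    # Stand-in for real request processing (assumed for illustration).
    return f"response for {request_key}"


cache = ResponseCache(slow_backend)
first = cache.get("GET /products/42")   # miss: goes to the backend
second = cache.get("GET /products/42")  # hit: served from the cache
```

The sketch deliberately leaves out invalidation, so an entry stays cached until the process exits. Expiration and invalidation are covered separately later in the article.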

Caching Mechanisms and Strategies

API caching utilizes various mechanisms and strategies to optimize performance and enhance user experience. Here are four common caching mechanisms:

- Client-side caching: Client-side caching involves storing API responses directly on the client device. When the client makes a request, it checks its cache first. If a cached response exists and is valid, it can be used, eliminating the need to send a request to the server. This reduces network latency and improves responsiveness.

- Server-side caching: Server-side caching stores API responses on the server itself. When a request is made, the server checks its cache before processing the request. If a cached response exists, it can be served directly, bypassing the need for computation or data retrieval. Server-side caching helps alleviate the load on the server and improves response times.

- CDN caching: Content Delivery Networks (CDNs) cache API responses in their distributed network of servers located across different geographical regions. When a user makes a request, the CDN determines the closest server and serves the cached response, reducing latency and improving availability. CDN caching is particularly useful for APIs with a global user base.

- Reverse proxy caching: Reverse proxies act as intermediaries between clients and servers. They cache API responses on the server side and serve them directly to clients upon request. Reverse proxy caching is effective for handling high traffic loads, as it offloads the server by responding to requests with cached content, reducing the processing and response time.

By employing these caching mechanisms, APIs can significantly improve performance, reduce server load, and provide a smoother user experience. However, it is crucial to consider cache invalidation strategies, cache consistency, and the appropriate caching scope for different types of data to ensure accurate and up-to-date responses.

Benefits of API Caching

Improved Performance

API caching offers several benefits that contribute to enhanced performance and user experience. Here are two key advantages:

- Reduction in response time: By caching API responses, subsequent requests can be served directly from the cache, eliminating the need for the server to process the same request repeatedly. This significantly reduces response time since the cached response can be retrieved and delivered to the client more quickly than generating a fresh response. Users experience faster loading times and improved responsiveness.

- Minimized server load: Caching responses at various levels (client-side, server-side, CDN, and reverse proxy) helps distribute the load across different components of the architecture. Cached responses can be served directly without invoking resource-intensive processes, such as database queries or complex computations on the server. This reduces the overall load on the server, allowing it to handle more requests efficiently and improving scalability.

By leveraging API caching, organizations can optimize the performance of their APIs, deliver faster and more responsive experiences to users, and effectively handle increased traffic without overburdening their servers.

Enhanced Scalability

API caching provides significant benefits in terms of scalability, allowing systems to handle higher traffic volumes and reducing backend resource consumption. Here are two key advantages:

- Handling higher traffic volumes: Caching helps alleviate the strain on backend servers by serving cached responses instead of processing each request from scratch. This enables the system to handle increased traffic loads more efficiently, ensuring that the API remains responsive even during peak usage periods. Caching acts as a buffer, absorbing a portion of the incoming requests and reducing the direct load on the backend infrastructure.

- Reducing backend resource consumption: By serving cached responses, API caching minimizes the need for backend resources, such as database queries or complex computations, to generate fresh responses for every request. This reduces the strain on the backend infrastructure, freeing up valuable resources that can be allocated to other tasks or requests. As a result, the system can scale more effectively, accommodating a larger user base and supporting higher transaction volumes without overtaxing the backend resources.

Through enhanced scalability facilitated by API caching, organizations can ensure their systems remain robust and performant even under heavy loads, allowing for seamless user experiences and uninterrupted service delivery.

Better User Experience

API caching plays a crucial role in improving the user experience by providing several advantages:

- Faster loading times: With API caching, frequently accessed data or responses are stored locally, allowing them to be retrieved quickly without the need for repeated server processing. As a result, API calls can be served directly from the cache, leading to significantly faster loading times for users. This helps eliminate delays and ensures a smoother and more responsive user experience.

- Reduced latency: By serving data from a cache that sits closer to the client (on the device, at a CDN edge, or in a reverse proxy), API caching shortens or avoids the round trip to the origin server. The client no longer waits for the server to process the request, resulting in faster data retrieval and reduced network latency. This reduction in latency contributes to a snappier and more interactive user experience.

- Consistent data availability: API caching can improve data availability by storing frequently accessed responses. In scenarios where the backend services experience downtime or temporary issues, previously cached responses can still be served, provided the cache is set up to do so, giving continued access to essential data. This makes for a more reliable user experience, as users can continue to interact with the API and access relevant information even when the backend is temporarily unavailable.

By delivering faster loading times, reduced latency, and consistent data availability, API caching significantly enhances the overall user experience, leading to increased user satisfaction and engagement with the application or service.
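To make the availability point concrete, here is a small sketch of a cache that falls back to the last successful response when the backend fails. It assumes the backend signals an outage by raising `ConnectionError`. The class name `LastGoodResponseCache`, the `backend` function, and the `state` flag are hypothetical and exist only for the example. Note that this variant always tries the backend first, so it illustrates availability rather than speed.

```python
from typing import Callable, Dict


class LastGoodResponseCache:
    """Hypothetical wrapper that serves the last good response on failure."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch
        self._last_good: Dict[str, str] = {}

    def get(self, key: str) -> str:
        try:
            value = self._fetch(key)
        except ConnectionError:
            # Backend is down: serve the previous response if we have one.
            if key in self._last_good:
                return self._last_good[key]
            raise  # nothing cached for this key, so surface the error
        self._last_good[key] = value
        return value


state = {"up": True}  # toggled to simulate an outage (illustration only)


def backend(key: str) -> str:
    if not state["up"]:
        raise ConnectionError("backend unavailable")
    return f"profile for {key}"


profile_cache = LastGoodResponseCache(backend)
fresh = profile_cache.get("user-1")     # backend is up, response is stored
state["up"] = False
fallback = profile_cache.get("user-1")  # backend is down, stored copy is served
```

The trade-off is that the fallback response may be out of date, so this approach suits data where a slightly stale answer is better than an error.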

Caching Considerations and Best Practices

Cache Invalidation Strategies

To ensure the accuracy and freshness of cached data, appropriate cache invalidation strategies should be implemented. Here are three common approaches:

- Time-based expiration: In this strategy, each cached item is assigned an expiration time or duration, after which it is considered invalid. When a client requests data, the cache checks if the cached item has expired. If it has, the cache fetches the latest data from the server and updates the cache. Time-based expiration is useful when the data is not frequently updated and has a relatively stable lifespan.

- Event-driven invalidation: This strategy involves invalidating cached items based on specific events or triggers. For example, if a resource is modified or updated, an event is triggered to invalidate the corresponding cache entry. This approach keeps the cache closely in sync with the latest data and reduces the chance of serving stale information to clients. Event-driven invalidation is suitable for scenarios where data changes frequently or when real-time updates are required.

- Manual cache clearing: In certain cases, cache invalidation may need to be performed manually based on specific criteria. This could involve clearing the cache for specific resources, specific users, or during maintenance operations. Manual cache clearing provides control over when and which cache entries should be invalidated, but it requires careful management to ensure consistency and avoid unintended side effects.

Implementing a combination of these cache invalidation strategies can provide a robust and efficient caching system that balances data freshness and performance. It's important to choose the appropriate strategy based on the characteristics of the data being cached and the requirements of the application or service.
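The three strategies can be combined in one small class, sketched below. `TTLCache` and its method names are hypothetical, and the injectable `clock` parameter is an assumption made so the behavior can be demonstrated without waiting for real time to pass. In this sketch an expired entry is simply reported as missing, and the caller is expected to fetch fresh data and call `set` again.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Hypothetical cache with time-based, event-driven, and manual invalidation."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        # Store the value together with the moment it stops being valid.
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Time-based expiration: drop the entry and report a miss.
            del self._entries[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Event-driven invalidation: call this when the resource changes.
        self._entries.pop(key, None)

    def clear(self) -> None:
        # Manual cache clearing, for example during maintenance.
        self._entries.clear()


fake_now = [0.0]  # controllable clock for the demonstration
prices = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])
prices.set("sku-1", 19.99)
before_expiry = prices.get("sku-1")   # still valid at t=0
fake_now[0] = 61.0
after_expiry = prices.get("sku-1")    # expired, so reported as missing
```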

Handling Cache Coherence and Consistency

To maintain cache coherence and consistency in API caching, several best practices should be followed. Firstly, cache synchronization is essential in distributed systems with multiple cache instances. Coordinating cache updates across all instances can be achieved through distributed caching mechanisms or cache coordination techniques like invalidation messages or update notifications.

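Secondly, updating or invalidating the cache entry as part of the same operation that modifies the data helps maintain coherence between the cache and the underlying data source. Where the cache and the data store can share a transaction, atomic updates keep both in step. Where they cannot, invalidating the cache entry immediately after the write commits limits the window in which stale data can be served.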

Consistent cache update policies are also crucial. Clearly define when and how cache updates should occur, considering factors such as data update frequency, the impact of stale data, and available resources for cache updates. Consistency in cache update policies across the caching infrastructure prevents inconsistencies in cached data.

Additionally, implementing cache validation mechanisms is important. Techniques like checksums, versioning, or comparison with the original data source can validate the consistency of cached data. Regularly validating the cached data helps detect and resolve inconsistencies promptly, ensuring a reliable and accurate cache.

By adhering to these best practices, cache coherence and consistency can be effectively managed, resulting in improved performance and a seamless user experience.
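One widely used form of checksum-based validation is the HTTP `ETag` response header paired with the `If-None-Match` request header: the server returns `304 Not Modified` when the client's copy is still current. The sketch below shows the idea. The function names are hypothetical, and deriving the tag from a truncated SHA-256 digest is an assumption made for illustration rather than a required scheme.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    # Checksum of the response body, quoted as ETag values are in HTTP.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes,
            if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a request with an optional validator."""
    etag = make_etag(body)
    headers = {"ETag": etag}
    if if_none_match == etag:
        # The client's cached copy matches: no need to resend the body.
        return 304, headers, b""
    return 200, headers, body


current = b'{"id": 42, "name": "Widget"}'
status_first, headers_first, _ = respond(current, None)
status_repeat, _, body_repeat = respond(current, headers_first["ETag"])
```

When the underlying data changes, the checksum changes with it, so the next conditional request receives a full `200` response and the client replaces its stale copy.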

Caching Dynamic Content

Caching dynamic content poses unique challenges compared to static content caching. Dynamic content typically includes personalized data, user-specific information, or frequently changing data. However, caching dynamic content can still offer performance benefits if implemented correctly.

One approach is to identify portions of dynamic content that can be cached without compromising its freshness or accuracy. This can involve separating static and dynamic components within a response and caching only the static parts while dynamically generating the dynamic elements upon each request.

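Another technique is to leverage cache headers and directives to control caching behavior. Cache-Control directives let the API tell the caching infrastructure how to treat dynamic content: "max-age" sets how long a response may be reused, "no-cache" requires a cache to revalidate with the server before reusing a stored response, and "must-revalidate" forbids serving a response once it has become stale without checking with the server first.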
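As a rough illustration, the helper below maps a few kinds of content to `Cache-Control` header values. The directives themselves (`public`, `private`, `max-age`, `no-cache`, `must-revalidate`, `no-store`) are standard HTTP. The function name, the content categories, and the durations are assumptions picked for the example and should be tuned to the data in question.

```python
from typing import Dict


def cache_headers(kind: str) -> Dict[str, str]:
    """Hypothetical mapping from content kind to a Cache-Control policy."""
    policies = {
        # Rarely changing data: any cache may reuse it for a day.
        "static": "public, max-age=86400",
        # Frequently changing data: may be stored, but must be
        # revalidated with the server before each reuse.
        "dynamic": "no-cache",
        # Per-user data: only the user's own cache may keep it, briefly,
        # and it must not be served stale without revalidation.
        "personalized": "private, max-age=60, must-revalidate",
        # Sensitive data: must not be stored by any cache.
        "sensitive": "no-store",
    }
    return {"Cache-Control": policies[kind]}


headers_for_feed = cache_headers("dynamic")
```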

Furthermore, employing intelligent cache invalidation mechanisms for dynamic content is crucial. This can involve utilizing event-driven triggers or observing changes in the underlying data source to determine when to invalidate and refresh the cached dynamic content.

It's important to strike a balance between caching dynamic content to improve performance and ensuring the content remains up-to-date and relevant. By implementing caching strategies specifically tailored to dynamic content, it's possible to achieve optimal performance while maintaining the necessary freshness and accuracy for a seamless user experience.

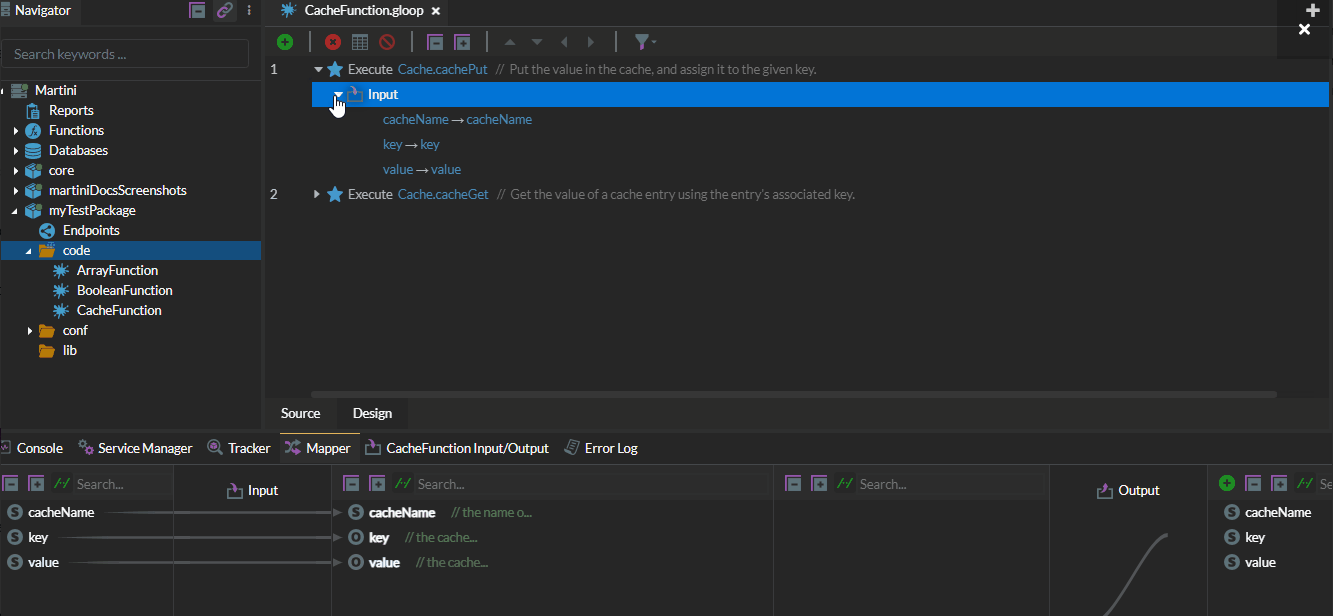

Cache Functions

Enterprise-class integration platforms typically include a caching function to facilitate caching of dynamic or static data. Below is a snippet showing how to use the Cache function in the integration platform Martini:

Examples of API Caching Implementation

Caching Static Data

One common use case for API caching is caching static data. Static data refers to information that remains unchanged or updates infrequently. By caching static data, you can significantly improve performance and reduce unnecessary backend requests.

For example, consider an e-commerce application that displays product categories. These categories are unlikely to change frequently. By caching the category data at the API level, subsequent requests for the same data can be served directly from the cache, eliminating the need to fetch it from the backend every time. This reduces response times and alleviates the load on the server.

To implement caching for static data, you can set an appropriate expiration time for the cache entries or use a cache-invalidation mechanism triggered only when the data changes. This ensures that the cached data remains fresh and accurate while maximizing the benefits of caching.
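For the product category example, one lightweight option in Python is the standard library's `functools.lru_cache`, cleared only when the data changes. This is a sketch under stated assumptions: `load_categories_from_backend`, `on_categories_changed`, and the `BACKEND_CALLS` counter are hypothetical stand-ins, and a single-process in-memory cache is assumed.

```python
from functools import lru_cache
from typing import Tuple

BACKEND_CALLS = {"count": 0}  # counts backend trips, for illustration only


def load_categories_from_backend() -> Tuple[str, ...]:
    # Stand-in for a database query or upstream API call.
    BACKEND_CALLS["count"] += 1
    return ("Books", "Electronics", "Toys")


@lru_cache(maxsize=1)
def get_categories() -> Tuple[str, ...]:
    # The first call loads from the backend; later calls reuse the result.
    return load_categories_from_backend()


def on_categories_changed() -> None:
    # Invalidation hook: call this when an admin edits the categories.
    get_categories.cache_clear()


get_categories()
get_categories()          # served from the cache, no second backend call
on_categories_changed()   # data changed, so the cached result is dropped
get_categories()          # reloaded from the backend
```

Returning an immutable tuple avoids callers accidentally modifying the shared cached value.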

By effectively caching static data, you can enhance the responsiveness of your API and improve overall user experience, especially for data that doesn't require real-time updates.

Caching Frequently Accessed Data

Another valuable application of API caching is caching frequently accessed data. This includes data that is requested by multiple clients or data that is computationally expensive to generate. Caching such data can lead to significant performance improvements and reduce the load on backend systems.

For instance, consider a social media platform that displays the latest trending posts. These posts may be accessed by numerous users simultaneously. By implementing caching for this frequently accessed data, you can store the computed results in the cache and serve subsequent requests directly from the cache. This avoids the need to repeat the expensive computation each time a user requests the trending posts, resulting in faster response times and reduced server load.

To cache frequently accessed data effectively, you can employ strategies such as time-based expiration or event-driven invalidation. Time-based expiration involves setting an expiration time for the cache entries, while event-driven invalidation clears the cache when specific events occur, such as when new posts are added to the trending list.

By caching frequently accessed data, you can optimize the performance of your API and ensure a seamless user experience, especially for data that requires frequent retrieval and computation.

Caching Personalized Content

API caching can also be leveraged to cache personalized content, providing a tailored experience to individual users. Personalized content can include user-specific data, recommendations, or customized results based on user preferences.

For example, imagine an e-commerce platform that recommends products based on a user's browsing history and past purchases. Instead of recalculating recommendations for each request, the API can cache personalized recommendations for each user. This allows subsequent requests to be served directly from the cache, reducing response times and improving the overall user experience.

To implement caching for personalized content, it is crucial to consider cache invalidation strategies that account for changes in user preferences or updated data. Event-driven invalidation can be used to clear the cache when user-related events occur, such as when a user adds new items to their wishlist or makes a purchase.
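The key detail with personalized content is that each cache entry must be tied to a single user, so one user's data is never served to another. Here is a minimal sketch of per-user caching with event-driven invalidation. `RecommendationCache`, `on_user_event`, and the toy `recommend` function are hypothetical and exist only to illustrate the pattern.

```python
from typing import Callable, Dict, List


class RecommendationCache:
    """Hypothetical per-user cache for computed recommendations."""

    def __init__(self, compute: Callable[[str], List[str]]) -> None:
        self._compute = compute
        self._by_user: Dict[str, List[str]] = {}

    def get(self, user_id: str) -> List[str]:
        # Entries are keyed by user ID, so users never share results.
        if user_id not in self._by_user:
            self._by_user[user_id] = self._compute(user_id)
        return self._by_user[user_id]

    def on_user_event(self, user_id: str) -> None:
        # Call on a purchase or wishlist change; only this user's
        # entry is dropped, other users keep their cached results.
        self._by_user.pop(user_id, None)


purchases = {"alice": ["tent"], "bob": ["laptop"]}  # toy data


def recommend(user_id: str) -> List[str]:
    # Stand-in for an expensive recommendation computation.
    return [f"accessory for {item}" for item in purchases[user_id]]


recs = RecommendationCache(recommend)
alice_before = recs.get("alice")
purchases["alice"].append("stove")
alice_cached = recs.get("alice")     # still the cached list
recs.on_user_event("alice")          # purchase event invalidates her entry
alice_after = recs.get("alice")      # recomputed with the new purchase
```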

By caching personalized content, API providers can deliver customized experiences efficiently, enhancing user engagement and satisfaction. It allows users to access their personalized data quickly, eliminating the need for repetitive computations and improving overall performance.

Tools and Technologies for API Caching

Caching Frameworks

Popular tools for API caching include Redis, Memcached, Varnish, and Squid. Each offers an efficient way to manage caching in your application. Redis is an in-memory data store that provides fast read and write operations along with features such as optional data persistence and clustering for distributed caching. Memcached is a high-performance, distributed memory caching system with easy integration. Varnish is an HTTP reverse proxy cache that improves API performance with advanced caching strategies. Squid is a caching proxy that offers cache hierarchies, content revalidation, and access controls. Used appropriately, these tools enhance performance, reduce server load, and optimize resource utilization in API caching implementations. Choose among them based on specific requirements, programming language, stack, and scalability needs.

Content Delivery Networks (CDNs)

Content delivery networks (CDNs) play a crucial role in API caching by distributing cached content across multiple geographically dispersed servers. CDNs like Cloudflare, Akamai, and Amazon CloudFront have built-in caching capabilities that can accelerate API performance and improve user experience. These CDNs store and serve cached API responses from edge servers located closer to end-users, reducing latency and network congestion. CDNs also provide features such as SSL/TLS termination, DDoS protection, and content optimization. Leveraging a CDN for API caching ensures faster content delivery, scalability, and global availability of cached data. Consider the CDN's network coverage, caching options, pricing, and integration options when selecting a CDN for API caching.

Reverse Proxies

Reverse proxies are another powerful tool for API caching. They sit between the client and the API server, intercepting and handling requests on behalf of the server. Reverse proxies like Nginx, Varnish, and HAProxy can be configured to cache API responses and serve them directly to clients, reducing the load on the backend API server. These proxies store cached responses in memory or on disk, allowing for quick retrieval and delivery. Reverse proxies also offer additional features such as load balancing, request routing, and SSL/TLS termination. By effectively caching and serving API responses, reverse proxies enhance performance, scalability, and user experience. Consider factors like configurability, caching flexibility, and integration options when choosing a reverse proxy for API caching.

Monitoring and Measuring Cache Performance

Metrics to Track Cache Effectiveness

To assess the effectiveness of API caching, it is important to monitor and measure specific metrics. Here are some key metrics to track:

- Cache hit rate: This metric calculates the percentage of requests that are served from the cache without needing to access the backend server. A higher cache hit rate indicates a more effective caching strategy.

- Cache utilization: This metric measures the percentage of cache capacity being utilized. It helps identify whether the cache is properly sized and utilized optimally.

- Cache efficiency: This metric assesses how efficiently the cache is utilized by measuring the ratio of cache hits to cache misses. A higher cache efficiency indicates a more efficient use of caching resources.

- Cache eviction rate: This metric tracks the frequency at which items are evicted or removed from the cache. A high eviction rate may indicate cache inefficiencies or the need for better cache eviction strategies.

- Response time distribution: By analyzing the distribution of response times, you can identify potential bottlenecks or performance issues. Tracking metrics like average response time, median response time, and percentiles can help assess cache performance.

Monitoring these metrics regularly allows you to gauge the effectiveness of your caching implementation, identify areas for improvement, and make informed decisions to optimize cache performance.
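Most of these metrics are simple ratios over counters that a cache already maintains. The sketch below computes them from raw counts. The `CacheStats` class and its field names are hypothetical, and expressing the eviction rate as evictions per request is an assumption, since the rate can also be measured per unit of time.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    """Hypothetical snapshot of cache counters."""
    hits: int
    misses: int
    evictions: int
    entries: int     # items currently stored
    capacity: int    # maximum items the cache can hold

    @property
    def requests(self) -> int:
        return self.hits + self.misses

    @property
    def hit_rate(self) -> float:
        # Share of requests served from the cache.
        return self.hits / self.requests if self.requests else 0.0

    @property
    def miss_rate(self) -> float:
        # Share of requests that had to go to the backend.
        return self.misses / self.requests if self.requests else 0.0

    @property
    def hit_to_miss_ratio(self) -> float:
        # The "cache efficiency" ratio of hits to misses.
        return self.hits / self.misses if self.misses else float("inf")

    @property
    def utilization(self) -> float:
        # Share of cache capacity currently in use.
        return self.entries / self.capacity if self.capacity else 0.0

    @property
    def eviction_rate(self) -> float:
        # Evictions per request (assumed definition for this sketch).
        return self.evictions / self.requests if self.requests else 0.0


stats = CacheStats(hits=80, misses=20, evictions=5, entries=750, capacity=1000)
summary = (stats.hit_rate, stats.miss_rate, stats.hit_to_miss_ratio)
```

Because every request is either a hit or a miss, the hit rate and miss rate always sum to one, so tracking either is enough to derive the other.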

Analyzing Cache Hit Rates and Miss Rates

Analyzing cache hit rates and miss rates is crucial for evaluating cache performance. The cache hit rate represents the proportion of requests served from the cache, indicating the efficiency of caching. A higher hit rate signifies better performance and reduced load on the backend. On the other hand, the cache miss rate measures the percentage of requests that were not found in the cache. A lower miss rate indicates effective caching and optimized resource utilization. By monitoring and analyzing these rates, you can gain insights into cache effectiveness, optimize caching strategies, and ensure optimal performance.

Performance Optimization Based on Cache Analytics

Cache analytics provide valuable insights for performance optimization. By analyzing cache usage patterns and performance metrics, you can identify areas for improvement and optimize caching strategies. These insights can help you fine-tune cache expiration policies, adjust cache size, and optimize cache invalidation strategies. Additionally, analyzing cache performance can uncover potential bottlenecks or issues that impact overall system performance. Leveraging cache analytics allows you to continually refine and optimize your caching implementation, leading to enhanced performance and improved user experience.

Challenges and Considerations in API Caching

Cache Invalidation Complexities

Cache invalidation is a critical challenge in API caching. Ensuring that cached data remains valid and up-to-date can be complex, especially when dealing with dynamic or frequently changing content. Implementing effective cache invalidation strategies, such as time-based expiration or event-driven invalidation, is essential to maintain data consistency and prevent serving stale information. However, managing cache invalidation requires careful consideration of data dependencies, handling concurrent updates, and coordinating cache clearing across distributed systems. Addressing these complexities is crucial to maintain cache integrity and provide accurate and reliable data to API consumers.

Cache Coherence and Consistency Maintenance

Maintaining cache coherence and consistency is another significant challenge in API caching. When multiple cache instances are involved, ensuring that they have consistent and synchronized data can be complex. Coordinating cache updates and handling cache coherence across distributed systems require careful design and implementation. Techniques such as cache invalidation protocols, data synchronization mechanisms, and distributed caching strategies can help address these challenges. Maintaining cache coherence and consistency is essential to prevent data inconsistencies and provide accurate and reliable responses to API consumers.

Balancing Caching Strategies With Real-Time Data Updates

One of the challenges in API caching is finding the right balance between caching strategies and real-time data updates. Caching improves performance by serving cached responses, but it can pose difficulties when dealing with frequently changing data. Finding the optimal caching duration or implementing efficient cache invalidation mechanisms becomes crucial to ensure that users receive up-to-date information. Strategies like time-based expiration, event-driven invalidation, or manual cache clearing can be employed to strike a balance between caching benefits and timely data updates. Balancing caching strategies with real-time data updates is necessary to provide accurate and current information to API consumers.

Recap of API Caching Benefits

In conclusion, API caching plays a crucial role in enhancing performance and improving the user experience. By implementing caching mechanisms such as client-side caching, server-side caching, CDN caching, and reverse proxy caching, API responses can be served more quickly and efficiently. The benefits of API caching include improved performance through reduced response times and minimized server load, enhanced scalability to handle higher traffic volumes, and reduced backend resource consumption. Additionally, API caching contributes to a better user experience by providing faster loading times, reduced latency, and consistent data availability. However, caching considerations and best practices, such as cache invalidation strategies and addressing cache coherence and consistency, must be carefully managed. By leveraging tools and technologies like caching frameworks, CDNs, and reverse proxies, API caching can be effectively implemented. Monitoring cache performance through metrics such as cache hit rates and miss rates enables optimization and fine-tuning. Despite the challenges posed by cache invalidation complexities, cache coherence, and balancing caching strategies with real-time data updates, API caching remains a valuable technique for improving API performance and user satisfaction.

Importance of Implementing Effective Caching Strategies

The implementation of effective caching strategies in API design is crucial for maximizing performance and delivering a seamless user experience. By caching API responses at various levels such as client-side, server-side, CDN, and reverse proxy, significant benefits can be achieved. Caching improves performance by reducing response time and alleviating server load, resulting in faster and more efficient data retrieval. It enhances scalability by efficiently handling increased traffic volumes and reducing the consumption of backend resources. Additionally, caching contributes to a better user experience with faster loading times, reduced latency, and consistent data availability. However, it is essential to consider cache invalidation strategies, maintain cache coherence and consistency, and strike a balance between caching and real-time data updates. By leveraging caching frameworks, CDNs, and reverse proxies, developers can implement robust caching solutions. Monitoring cache performance through metrics such as cache hit rates and miss rates helps optimize and fine-tune caching strategies. Despite the challenges, implementing effective caching strategies is vital for optimizing API performance and ensuring user satisfaction.

Future Trends in API Caching

API caching plays a crucial role in improving performance and enhancing user experience. It offers benefits such as reduced response time, minimized server load, improved scalability, and consistent data availability. However, as technology evolves, future trends in API caching are expected to emerge. Some of these trends may include advancements in cache invalidation techniques, better handling of dynamic content caching, and improved security measures to mitigate cache poisoning and protect sensitive data. Additionally, as real-time data becomes more prevalent, finding the right balance between caching and real-time updates will be a focus. Furthermore, advancements in caching frameworks, CDNs, and reverse proxies are anticipated, providing developers with more efficient tools for caching. Monitoring and measuring cache performance will also continue to evolve with more sophisticated metrics and analytics. Overall, the future of API caching holds exciting possibilities for further optimizing performance and delivering exceptional user experiences.

Opinions expressed by DZone contributors are their own.
