Understanding and Solving the AWS Lambda Cold Start Problem

In this article, get up and running fast with AWS Lambda’s provisioned concurrency and SnapStart features for faster response times and reduced latency.

What Is the AWS Lambda Cold Start Problem?

AWS Lambda is a serverless computing platform that enables developers to quickly build and deploy applications without having to manage any underlying infrastructure. However, this convenience comes with a downside: the AWS Lambda cold start problem. Cold starts can delay responses from applications running on AWS Lambda, which can degrade the user experience and cost money for the businesses running those applications.

In this article, I will discuss what causes the AWS Lambda cold start problem and how it can be addressed by using various techniques.

What Causes the AWS Lambda Cold Start Problem?

The AWS Lambda cold start problem arises from the initialization time of Lambda functions. It refers to the delay in response time when a function is invoked and no initialized execution environment is available to serve it, for example on the first invocation or after a period of inactivity. This delay is caused by the container bootstrapping process, which has to complete before the function code can run. The longer this process takes, the more pronounced the cold start problem becomes, leading to longer response times and a degraded user experience.
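The split between one-time initialization and per-invocation work can be illustrated in plain Java. The following is a minimal sketch, not Lambda's actual runtime: the class `ColdStartDemo`, the field `EXPENSIVE_CONFIG`, and the two counters are hypothetical names, and the slow setup is only simulated. The static initializer runs once when the class is loaded, which stands in for the work a new execution environment does during a cold start, while `apply` runs on every call.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Illustrative only: class, field, and counter names are hypothetical.
class ColdStartDemo implements Function<String, String> {

    // Track how often each phase runs so the difference is observable.
    static final AtomicInteger INIT_COUNT = new AtomicInteger();
    static final AtomicInteger INVOKE_COUNT = new AtomicInteger();

    // Runs once per class load, standing in for cold-start initialization
    // (loading dependencies, building clients, reading configuration).
    static final String EXPENSIVE_CONFIG = loadConfig();

    private static String loadConfig() {
        INIT_COUNT.incrementAndGet();
        return "config-v1"; // placeholder for slow setup work
    }

    // Runs on every invocation and reuses what initialization produced.
    @Override
    public String apply(String input) {
        INVOKE_COUNT.incrementAndGet();
        return EXPENSIVE_CONFIG + ":" + input;
    }
}
```

Calling `apply` several times on the same loaded class pays the setup cost only once, which is why only invocations that need a fresh environment see the extra delay.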

How To Mitigate the Cold Start Problem

AWS Lambda functions are a great way to scale your applications and save costs, but they can suffer from the “cold start” problem, where a function takes longer to respond when it has not been used recently. Fortunately, there are ways to mitigate this issue, such as pre-warming strategies. Pre-warming keeps your Lambda functions ready and responsive by invoking them periodically, ahead of when they are needed. You can also warm up your Lambda functions manually by using the AWS Console or API calls. These steps help your applications respond quickly and reliably despite cold starts. In the following sections, I’ll discuss two possible ways to avoid the cold start problem:

1. Lambda Provisioned Concurrency

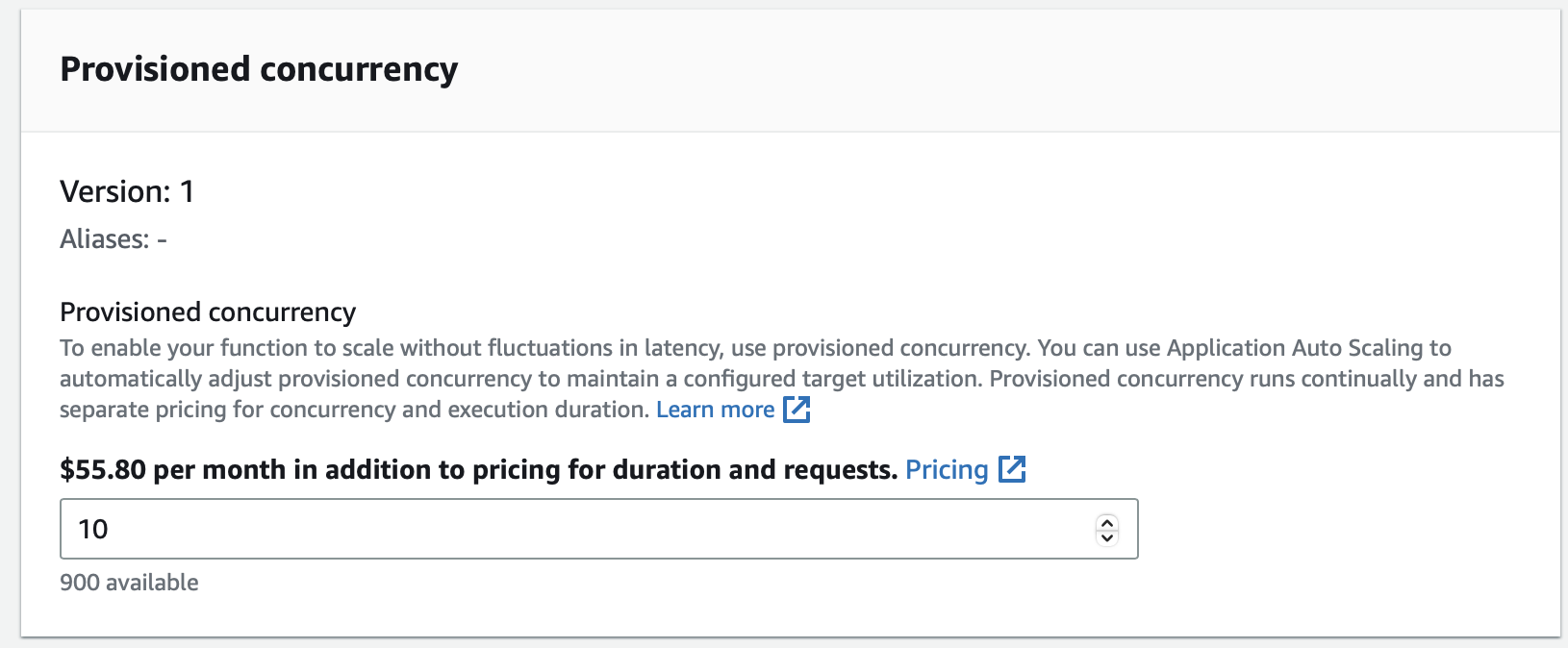

Lambda provisioned concurrency is a feature that lets developers launch and initialize execution environments for Lambda functions ahead of time. In other words, it creates pre-warmed environments that wait to serve incoming requests. Because they are provisioned in advance, the configured number of environments stays up and running all the time, even when there are no requests to serve, which runs against the pay-per-use essence of serverless. For the same reason, the feature is not free and comes at a considerable price. I created a simple Lambda function (details in the next section) and tried to configure provisioned concurrency to check the price; see the screenshot.

However, if there are strict performance requirements and cold starts are showstoppers, provisioned concurrency is certainly a fantastic way of getting over the problem.
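To get a feel for why always-on environments add up, the standing charge can be estimated with simple arithmetic. The sketch below assumes the charge scales with the number of provisioned environments, the configured memory, and the time the configuration stays enabled. The class name `ProvisionedConcurrencyCost` and its parameters are hypothetical, and the rate is an input rather than a real AWS price, so check the current pricing page for actual numbers.

```java
// Rough estimate of the standing charge for provisioned concurrency.
// The rate is passed in by the caller; it is NOT a real AWS price.
final class ProvisionedConcurrencyCost {

    private ProvisionedConcurrencyCost() {}

    static double standingCharge(int environments, int memoryMb,
                                 double hoursEnabled, double ratePerGbSecond) {
        double memoryGb = memoryMb / 1024.0;   // configured memory in GB
        double seconds = hoursEnabled * 3600.0; // time the config is enabled
        return environments * memoryGb * seconds * ratePerGbSecond;
    }
}
```

For example, `ProvisionedConcurrencyCost.standingCharge(2, 512, 1.0, 0.001)` with a made-up rate of 0.001 per GB-second comes to about 3.6 for one hour. Charges for the requests themselves and for execution time come on top of this estimate.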

2. SnapStart

The next thing I’ll discuss, and a potential game changer, is SnapStart. Amazon released Lambda SnapStart at re:Invent 2022 to help mitigate the cold start problem. With SnapStart, Lambda initializes your function when you publish a function version. Lambda takes a Firecracker microVM snapshot of the memory and disk state of the initialized execution environment, encrypts the snapshot, and caches it for low-latency access. When you invoke the function version for the first time, and as the invocations scale up, Lambda resumes new execution environments from the cached snapshot instead of initializing them from scratch, improving startup latency. The best part is that, unlike provisioned concurrency, there is no additional cost for SnapStart. At the time of writing, SnapStart is only available for the Java 11 (Corretto) runtime.

To test SnapStart and see if it’s really worth it, I created a simple Lambda function. I used Spring Cloud Function and did not try to create a thin jar. I wanted the package to be bulky so that I could see what SnapStart does. Following is the function code:

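```java
import java.util.List;
import java.util.function.Function;

import com.amazonaws.regions.Regions;
import com.amazonaws.services.s3.AmazonS3;
import com.amazonaws.services.s3.AmazonS3ClientBuilder;
import com.amazonaws.services.s3.model.ListObjectsV2Result;
import com.amazonaws.services.s3.model.S3ObjectSummary;

public class ListObject implements Function<String, String> {

    @Override
    public String apply(String bucketName) {
        System.out.format("Objects in S3 bucket %s:\n", bucketName);
        // Build an S3 client (AWS SDK for Java v1) for the SDK's default region.
        final AmazonS3 s3 = AmazonS3ClientBuilder.standard().withRegion(Regions.DEFAULT_REGION).build();
        // A single call returns at most one page of results.
        ListObjectsV2Result result = s3.listObjectsV2(bucketName);
        List<S3ObjectSummary> objects = result.getObjectSummaries();
        for (S3ObjectSummary os : objects) {
            System.out.println("* " + os.getKey());
        }
        return bucketName;
    }
}
```

At first, I uploaded the code using the AWS Management Console and tested it against a bucket that is full of objects. Following is the screenshot of the execution summary.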

Note: the time required to initialize the environment was 3980.17 ms. That right there is the cold start time and this was somewhat expected as I’m working with a bulky jar file.

Subsequently, I turned on SnapStart in the AWS console under “Configuration” > “General Configuration” > “Edit.” After a while, I executed the function again; following is the screenshot of the execution summary.

Note: here, “Init duration” has been replaced by “Restore duration” because, with SnapStart, Lambda restores the snapshot of the execution environment instead of initializing it. The restoration took 408.34 ms, roughly a tenth of the 3980.17 ms initialization (about 90% lower). My first impression is that SnapStart is definitely promising and exciting. Let’s see what Amazon does with it in the coming days.

In addition, Amazon announced at re:Invent 2022 that it is working on further advancements in serverless computing, which could potentially eliminate the cold start issue altogether. By using Lambda SnapStart and keeping an eye out for future developments from AWS, developers can keep their serverless applications running smoothly and efficiently.

Best Strategies to Optimize Your Serverless Applications on AWS Lambda

Serverless applications on AWS Lambda have become increasingly popular as they offer a great way to reduce cost and complexity. However, one of the biggest challenges with serverless architectures is the AWS Lambda cold start issue, which adds latency and affects user experience. As a result, optimizing Lambda functions for performance can be a challenge.

To ensure that your serverless applications run smoothly, there are some best practices you can use to optimize their performance on AWS Lambda. These strategies include reducing latency with warm Lambdas, optimizing memory usage of Lambda functions, and leveraging caching techniques to improve the response time of your application. Also, as discussed in the above sections, provisioned concurrency and SnapStart are great ways to mitigate the Lambda cold start issue. With these strategies in place, you can ensure your serverless applications run as efficiently as possible and deliver the best user experience.
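The “warm Lambdas” idea can be sketched as a handler that recognizes a scheduled warm-up ping and returns early without doing real work. This is a minimal sketch under stated assumptions: the `warmup` payload key and the class name `WarmupAwareHandler` are hypothetical conventions rather than anything AWS defines, the scheduler that sends the ping is not shown, and the handler uses a plain `java.util.function.Function` instead of a specific AWS interface.

```java
import java.util.Map;
import java.util.function.Function;

// Sketch of a handler that short-circuits scheduled warm-up pings.
// The "warmup" payload key is a hypothetical convention, not an AWS-defined field.
class WarmupAwareHandler implements Function<Map<String, Object>, String> {

    // Counts real requests handled by this instance (illustration only).
    private int realInvocations = 0;

    @Override
    public String apply(Map<String, Object> event) {
        // A scheduler is assumed to send {"warmup": true} periodically.
        if (Boolean.TRUE.equals(event.get("warmup"))) {
            return "warmed"; // keep the environment alive, skip business logic
        }
        realInvocations++;
        return "handled request #" + realInvocations;
    }
}
```

Keep in mind that a single periodic ping keeps only one execution environment warm; traffic that needs more concurrent environments can still hit cold starts, which is where provisioned concurrency or SnapStart come in.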
