Transforming Service To Redirect-Based Long-Polling

Learn a way to transform a standard synchronous service into a redirect-based long-polled service. The core idea is to use the HTTP 302 response status.

Motivation

Consider a service that, in the beginning, can serve requests fast (for example, because it handles a small amount of data) and serves a relatively small number of clients. As time passes, it is common for both the number of clients and the response time to grow.

In this case, one possible change is to stop serving the requests synchronously and instead let them trigger asynchronous jobs. With this change, the client does not receive the result data immediately. It receives only an identifier of the job, and it is free to poll the status of the job at any time.

When making this change, it is worth considering the impact on the clients. Switching from a single synchronous call to polling is a typical example of a change that affects both service and client code, and it adds complexity to both. Therefore, if the number of clients is high (or not even exactly known, as in the case of a public service), it may be worth looking for an approach that affects only the service.

One possible approach is to introduce long-polling combined with HTTP redirects. A complete example of all three stages is provided on GitHub.

State 0: Synchronous Service

As the initial state, consider the following synchronous service controller method:

```java
@GetMapping
public BusinessObject getTheAnswer() {
    final BusinessObject result = businessService.doInternalCalculation();
    return result;
}
```

Let's assume that the execution time of businessService.doInternalCalculation() grows over time.

At this stage, the clients are simply firing a REST call to the endpoint of the service.

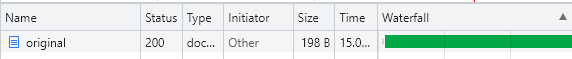

Invoking the example service (OriginalController), the network traffic pattern is simple:

Only one REST call is being made, which returns HTTP 200, and the result can be found in the response body.

State 1: Introducing (Long) Polling

As stated above, the main change is to let the service start (or even just queue up) a job upon receiving a REST call. After this change, the controller method presented above changes, and the controller gains a new method (see LongPollingController for the actual implementation details):

```java
@GetMapping
public JobStatus getTheAnswer() {
    final Future<BusinessObject> futureResult = ... submit it to a task executor ...;
    final var id = ... assign an ID to the task ...;
    return new JobStatus( ... which should contain at least the ID, in this case ... );
}

@GetMapping("/{id}")
public JobStatus getJobStatus(@PathVariable int id) throws InterruptedException, ExecutionException {
    // Find the job based on the ID
    final Future<BusinessObject> futureResult = ...;
    try {
        // Wait for the result, but no longer than the defined timeout
        final BusinessObject result = futureResult.get(... timeout ...);
        // The result is there
        return new JobStatus( ... containing the business result ... );
    } catch (TimeoutException e) {
        // The timeout happened: the job is still running
        return new JobStatus( ... containing the fact that the job is still running ... );
    }
}
```

Note the following:

- The client has to be adapted to use not only the original endpoint but also the new REST endpoint

- The client has to be aware of the structure of JobStatus

- Based on the JobStatus attributes, the client has to poll for the result
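The points above turn into client code that did not exist before. The following is a minimal sketch of that polling loop in plain Java, with the HTTP call abstracted away. The JobStatus record here (id, running, result) is a hypothetical stand-in, not the DTO from the GitHub example. The fetchStatus function likewise stands in for the REST call to the "/{id}" endpoint.

```java
import java.util.function.IntFunction;

class PollingClient {

    // Hypothetical shape of the State 1 DTO: the job ID, whether the job is
    // still running, and the business result once it is available.
    record JobStatus(int id, boolean running, String result) {}

    // Keeps asking for the job status until the job is done or maxPolls is used up.
    // fetchStatus stands in for the REST call to the "/{id}" endpoint.
    static String pollForResult(JobStatus initial, IntFunction<JobStatus> fetchStatus, int maxPolls) {
        JobStatus status = initial;
        for (int i = 0; i < maxPolls && status.running(); i++) {
            // The server long-polls (each call may block up to its timeout),
            // so the client does not sleep between calls.
            status = fetchStatus.apply(status.id());
        }
        if (status.running()) {
            throw new IllegalStateException("Job " + status.id() + " still running after " + maxPolls + " polls");
        }
        return status.result();
    }

    public static void main(String[] args) {
        // Fake status endpoint: reports "running" twice, then the result.
        int[] calls = {0};
        IntFunction<JobStatus> fakeEndpoint = id -> ++calls[0] < 3
                ? new JobStatus(id, true, null)
                : new JobStatus(id, false, "42");
        String result = pollForResult(new JobStatus(7, true, null), fakeEndpoint, 10);
        System.out.println(result + " after " + calls[0] + " status calls");
    }
}
```

Running main prints "42 after 3 status calls". Every client of the service would need some equivalent of this loop, which is exactly the burden State 2 tries to remove.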

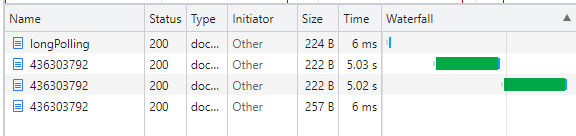

This is also reflected in the network traffic pattern:

The client had to fire up multiple requests against multiple endpoints. All the requests returned with HTTP 200, regardless of whether the result is already available.
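On the service side, the pseudocode elides several pieces: submitting to an executor, assigning an ID, and waiting with a timeout. The plain-Java sketch below shows roughly how those pieces could look. The JobRegistry class, its method names and the counter-based ID scheme are illustrative assumptions, not the code from the GitHub example. A Spring controller would delegate to something similar.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Hypothetical helper a State 1 controller could delegate to.
class JobRegistry<T> {

    private final ExecutorService executor;
    private final Map<Integer, Future<T>> jobs = new ConcurrentHashMap<>();
    private final AtomicInteger nextId = new AtomicInteger();

    JobRegistry(ExecutorService executor) {
        this.executor = executor;
    }

    // Submits the calculation to the task executor and returns the ID clients poll with.
    // Note: finished jobs are never evicted in this sketch.
    int submit(Supplier<T> calculation) {
        int id = nextId.incrementAndGet();
        jobs.put(id, executor.submit((Callable<T>) calculation::get));
        return id;
    }

    // Long-polls one job: blocks for at most the given timeout and returns the
    // (non-null) result, or Optional.empty() if the job is still running.
    Optional<T> await(int id, long timeout, TimeUnit unit) throws InterruptedException, ExecutionException {
        Future<T> future = jobs.get(id);
        if (future == null) {
            throw new IllegalArgumentException("Unknown job ID: " + id);
        }
        try {
            return Optional.of(future.get(timeout, unit));
        } catch (TimeoutException stillRunning) {
            return Optional.empty();
        }
    }
}
```

In getJobStatus, an empty Optional maps to the "still running" JobStatus and a present value to the JobStatus carrying the business result. Either way the response is HTTP 200, which matches the traffic pattern above.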

State 2: Introducing Redirects

To avoid burdening the client with the points above, the service can use HTTP redirect responses. To do so, the service changes both methods as follows (see the concrete changes in RedirectLongPollingController):

```java
@GetMapping
public RedirectView getTheAnswer() {
    final Future<BusinessObject> futureResult = ... submit it to a task executor ...;
    final var id = ... assign an ID to the task ...;
    return new RedirectView( ... pointing to the other endpoint defined in this controller ... );
}

@GetMapping("/{id}")
public Object getJobStatus(@PathVariable int id) throws InterruptedException, ExecutionException {
    // Find the job based on the ID
    final Future<BusinessObject> futureResult = ...;
    try {
        // Wait for the result, but no longer than the defined timeout
        final BusinessObject result = futureResult.get(... timeout ...);
        // The result is there: return it as the response body
        return result;
    } catch (TimeoutException e) {
        // The timeout happened: redirect the client back to this endpoint
        return new RedirectView( ... pointing to this endpoint ... );
    }
}
```

Note that in this variant, provided that the REST client in use follows redirects by default, the client does not need any code change. It does not have to introduce new DTOs (such as JobStatus in State 1), nor does it have to fire new REST calls explicitly.

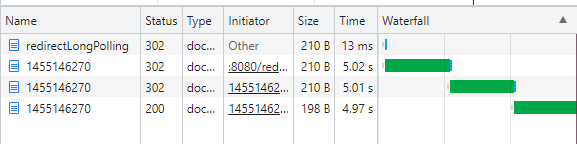

The network pattern shows a different flow as in State 1:

In this case, the client had to fire up one request, which lead to a chain of redirects, which were followed automatically. HTTP 200 response code is used only when the actual business result is available; as long as it is not available, HTTP 302 is returned.
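The redirect flow can be tried end to end without Spring. The sketch below uses only the JDK: com.sun.net.httpserver.HttpServer plays the service, and java.net.http.HttpClient plays an unmodified client. It is not the article's RedirectLongPollingController. Several values are assumptions made for illustration: the paths (/answer and /answer/<id>), the 1-second wait timeout and the 2-second simulated calculation. Note that java.net.http.HttpClient does not follow redirects unless told to, because its default redirect policy is NEVER. The client below therefore sets Redirect.NORMAL.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.concurrent.*;
import java.util.concurrent.atomic.AtomicInteger;

class RedirectLongPollingDemo {
    static final String HOST = "127.0.0.1";
    static final long WAIT_MILLIS = 1_000; // assumed long-poll timeout per request
    static final long CALC_MILLIS = 2_000; // assumed duration of the slow calculation
    static final ExecutorService WORKERS = Executors.newCachedThreadPool();
    static final ExecutorService HTTP_THREADS = Executors.newCachedThreadPool();
    static final Map<Integer, Future<String>> JOBS = new ConcurrentHashMap<>();
    static final AtomicInteger IDS = new AtomicInteger();

    // Stand-in for businessService.doInternalCalculation(): slow on purpose.
    static String doInternalCalculation() throws InterruptedException {
        Thread.sleep(CALC_MILLIS);
        return "42";
    }

    static HttpServer start() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(HOST, 0), 0); // port 0: any free port
        server.setExecutor(HTTP_THREADS);
        server.createContext("/answer", RedirectLongPollingDemo::handle);
        server.start();
        return server;
    }

    static void handle(HttpExchange exchange) throws IOException {
        String path = exchange.getRequestURI().getPath();
        if (path.equals("/answer")) {
            // Entry point: submit the job, then immediately redirect to its status URL.
            int id = IDS.incrementAndGet();
            JOBS.put(id, WORKERS.submit(RedirectLongPollingDemo::doInternalCalculation));
            redirect(exchange, "/answer/" + id);
            return;
        }
        // Status URL: /answer/<id>; anything else (or an unknown ID) gets a 404.
        Future<String> job = path.matches("/answer/\\d{1,9}")
                ? JOBS.get(Integer.parseInt(path.substring("/answer/".length()))) : null;
        if (job == null) {
            respond(exchange, 404, new byte[0]);
            return;
        }
        try {
            // Long-poll: wait for the result, but no longer than WAIT_MILLIS.
            String result = job.get(WAIT_MILLIS, TimeUnit.MILLISECONDS);
            respond(exchange, 200, result.getBytes(StandardCharsets.UTF_8));
        } catch (TimeoutException stillRunning) {
            redirect(exchange, path); // not done yet: send the client back to this endpoint
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            respond(exchange, 503, new byte[0]);
        } catch (ExecutionException e) {
            respond(exchange, 500, new byte[0]);
        }
    }

    static void redirect(HttpExchange exchange, String path) throws IOException {
        String base = "http://" + HOST + ":" + exchange.getLocalAddress().getPort();
        exchange.getResponseHeaders().set("Location", base + path);
        respond(exchange, 302, new byte[0]);
    }

    static void respond(HttpExchange exchange, int status, byte[] body) throws IOException {
        exchange.sendResponseHeaders(status, body.length == 0 ? -1 : body.length); // -1: no body
        if (body.length > 0) {
            exchange.getResponseBody().write(body);
        }
        exchange.close();
    }

    public static void main(String[] args) throws Exception {
        HttpServer server = start();
        try {
            // The client only needs redirect-following switched on; the JDK default is NEVER.
            HttpClient client = HttpClient.newBuilder().followRedirects(HttpClient.Redirect.NORMAL).build();
            URI uri = URI.create("http://" + HOST + ":" + server.getAddress().getPort() + "/answer");
            HttpResponse<String> response =
                    client.send(HttpRequest.newBuilder(uri).GET().build(), HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + response.body());
        } finally {
            server.stop(0);
            WORKERS.shutdownNow();
            HTTP_THREADS.shutdownNow();
        }
    }
}
```

Run it as a single-file program on Java 11 or later with java RedirectLongPollingDemo.java. It should print "200 42" after roughly two seconds. The client follows two or three 302 responses along the way without any code of its own for polling. One design consequence is worth keeping in mind. HTTP clients typically cap how many redirects they follow in a row, so the per-request wait timeout multiplied by that cap bounds how long a job can run before such a client stops following the chain.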

Further Notes

About Other Possibilities

It is important to mention that this solution should not automatically be preferred over performance optimization or scaling. After all, it is common for new services not to be optimized for response time at first.

Still, in certain circumstances (especially when the use case tolerates longer response times), it can be reasonable to prefer switching to long-polling over performance optimization or scaling. Performance optimization might mean deeply reworking complex logic, and scaling can significantly increase operating costs.

About Limitations

The solution shown here rests on two assumptions:

- That every network element supports redirects (which might not be true for some firewalls)

- That the client follows redirects (a common default in most REST clients, but the service can neither probe nor enforce it)

When these assumptions do not hold for an actual setup, the presented approach cannot be used.

About Architecture (Direction of Dependency)

In this approach, the service gains extra complexity in order to help the clients adapt to the new way of communication; in the best case, the clients have nothing to change at all. Even so, the direction of dependencies in the architecture does not change. The service still does not depend on its clients; it merely allows them to carry less complexity.

About HTTP Verbs

In the example above, one could point out that in States 1 and 2, a GET request leads to a new job being submitted and managed by the server. This no longer fits the semantics of HTTP GET perfectly. Still, the following points should be taken into consideration:

- It is true that the technical state of the service changes. Still, the business state does not (at least, provided that the actual business process does not change it)

- The endpoint can and should be idempotent: the server should recognize when two requests with the same business meaning arrive and should not queue a second task

- This also gives a performance benefit to all clients requesting something that is already being calculated

- This also decreases the load on the server, as the same process is not triggered twice
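The deduplication described in these points can be sketched with a ConcurrentHashMap keyed by the business meaning of the request. Its computeIfAbsent method ensures that concurrent requests with the same key share one job. The DedupingJobs name, the String business key and the counter-based ID are assumptions for illustration. The sketch leaves out how a key is derived from a real request and when finished jobs are evicted.

```java
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Hypothetical job store that submits at most one task per business key.
class DedupingJobs<T> {

    // The ID clients are redirected to, plus the shared future.
    record Job<R>(int id, Future<R> future) {}

    private final ExecutorService executor;
    private final Map<String, Job<T>> jobsByKey = new ConcurrentHashMap<>();
    private final AtomicInteger nextId = new AtomicInteger();

    DedupingJobs(ExecutorService executor) {
        this.executor = executor;
    }

    // Returns the job already registered for this business key, or submits a new one.
    // ConcurrentHashMap.computeIfAbsent applies the mapping function at most once per
    // absent key, so two concurrent identical requests cannot both queue a task.
    Job<T> submitOnce(String businessKey, Supplier<T> calculation) {
        return jobsByKey.computeIfAbsent(businessKey,
                key -> new Job<>(nextId.incrementAndGet(), executor.submit((Callable<T>) calculation::get)));
    }
}
```

A duplicate request gets the same job ID back as the first one. In State 2, both clients are therefore redirected to the same status URL and share one calculation.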

Conclusion

In conclusion, the demonstrated approach can free the clients from making any change. It can also potentially lead to better average response times and lower server utilization, by avoiding running the same flow multiple times.

Opinions expressed by DZone contributors are their own.
