Thoughts on Server-Sent Events, HTTP/2, and Envoy

In a distributed system, moving data efficiently between services is no small task. It can be especially tricky for a frontend web application that relies on polling data from many backend services.

I recently explored solutions to this problem for Grey Matter — specifically, how we could reduce traffic to some of the most requested services in our network. Our web app had the following characteristics:

Polls services for updates every 5 seconds.

Doesn’t need bidirectional communication.

Doesn’t need to support legacy browsers.

Communicates with services behind an Envoy-based proxy.

I looked into two server-push solutions — WebSockets and Server-Sent Events (SSE). In the end, I realized WebSockets were overkill for our use case and decided to focus on SSE, mainly because it’s just plain old HTTP and lets us take advantage of some really cool features of HTTP/2.

A Quick Primer on SSE

SSE is part of the HTML standard, which defines the EventSource interface: an API for managing and parsing events from long-running HTTP connections.

To implement an SSE client, instantiate the EventSource object and start listening for events. When this code is loaded in the browser, it will open up a TCP connection and send an HTTP request to the server, telling it to push events down the line when it has them. We can even listen for custom events, like dad jokes:

    const source = new EventSource(`https://localhost:7080/events`, {
      withCredentials: true
    });

    source.addEventListener("dadJoke", function(event) {
      console.log(event.data);
    });

If the connection drops, EventSource will fire an error event and automatically try to reconnect!
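The reconnect behavior is easier to follow with the `open` and `error` events wired up as well. Here is a minimal sketch, assuming a hypothetical helper named `watchStream` (not part of the original app). It accepts anything that implements `addEventListener` and exposes `readyState`, which a real `EventSource` does:

```javascript
// Hypothetical helper: logs lifecycle changes of an EventSource-like object.
// `source` only needs addEventListener() and readyState, so a real
// EventSource works in the browser and a plain EventTarget works in tests.
function watchStream(source, eventName, onData, log = console.log) {
  source.addEventListener("open", () => log("connected"));

  source.addEventListener("error", () => {
    // readyState 0 (CONNECTING) means the browser is already retrying;
    // readyState 2 (CLOSED) means it has given up and will not reconnect.
    log(source.readyState === 2 ? "closed for good" : "connection lost, retrying");
  });

  // Custom events carry their payload in event.data, as in the example above.
  source.addEventListener(eventName, (event) => onData(event.data));
  return source;
}

// Browser usage (URL taken from the example above):
// watchStream(
//   new EventSource("https://localhost:7080/events", { withCredentials: true }),
//   "dadJoke",
//   (joke) => console.log(joke)
// );
```

Passing the logger and callback in as arguments is only a convenience for trying the helper outside a browser.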

The server implementation is also very straightforward. For a server to become an SSE server, it needs to:

Set the appropriate headers (Content-Type: text/event-stream).

Keep track of connected clients.

Maintain a history of messages so clients can catch up on missed messages (optional).

Send messages in a specific format — a block of text terminated by a pair of newlines:

    id: 150
    event: dadJoke
    retry: 10000
    data: They're making a movie about clocks. It's about time.

That’s pretty much it! There are plenty of SSE server packages out there to make things even easier, or you can roll your own.
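For a sense of what rolling your own involves, the four requirements above can be sketched in Node.js with only the built-in `http` module. This is an illustration under assumptions, not the code from the demo repo: `formatEvent`, `createHub`, and `createServer` are hypothetical names, and the history is an unbounded in-memory array kept simple for brevity.

```javascript
const http = require("http");

// Serialize one event in the SSE wire format: "field: value" lines
// followed by a blank line that terminates the event.
function formatEvent({ id, event, retry, data }) {
  const lines = [];
  if (id !== undefined) lines.push(`id: ${id}`);
  if (event !== undefined) lines.push(`event: ${event}`);
  if (retry !== undefined) lines.push(`retry: ${retry}`);
  // Multi-line payloads need one "data:" line per line of text.
  for (const line of String(data).split("\n")) lines.push(`data: ${line}`);
  return lines.join("\n") + "\n\n";
}

// Hypothetical in-memory hub: tracks clients and keeps a message history.
function createHub() {
  const clients = new Set();
  const history = []; // unbounded here; a real server would cap it
  let nextId = 1;

  return {
    // Request handler for the event-stream route.
    handle(req, res) {
      res.writeHead(200, {
        "Content-Type": "text/event-stream",
        "Cache-Control": "no-cache",
      });
      // On reconnect the browser sends the last id it saw; replay the rest.
      const lastId = Number(req.headers["last-event-id"] || 0);
      for (const msg of history) {
        if (msg.id > lastId) res.write(formatEvent(msg));
      }
      clients.add(res);
      req.on("close", () => clients.delete(res));
    },
    // Push one event to every connected client and remember it.
    broadcast(event, data) {
      const msg = { id: nextId++, event, data };
      history.push(msg);
      for (const res of clients) res.write(formatEvent(msg));
      return msg;
    },
  };
}

// Build (but do not start) an HTTP server that serves the stream.
function createServer(hub) {
  return http.createServer((req, res) => hub.handle(req, res));
}

// Usage sketch (port and interval are placeholders):
// const hub = createHub();
// createServer(hub).listen(7080);
// setInterval(() => hub.broadcast("dadJoke", "..."), 10000);
```

Replaying from `Last-Event-ID` is what makes the optional history useful: a client that reconnects after a drop picks up where it left off instead of silently missing events.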

Issues With SSE

There are a few common drawbacks you’ll read about with SSE:

No native support for binary types.

Unilateral communication.

No IE support.

No way to add headers with the EventSource object.

Max of 6 client connections from a single host.

The first 4 points weren’t so much of an issue for us. We don’t need to send anything but JSON to the browser. The client doesn’t need to push data to the server. There are polyfills for IE, and we can attach any headers or route-specific data using our proxy.

The last one is a big deal. One of the biggest perceived limitations of SSE is the browser’s max parallel connection limit, which most modern browsers set to 6 per domain for HTTP/1.x. I read that HTTP/2 solves this issue, so I dove in a bit further to understand how.

From Client-Pull to Server-Push

In standard HTTP, the client asks the server for a resource and the server responds. It’s a 1:1 deal.

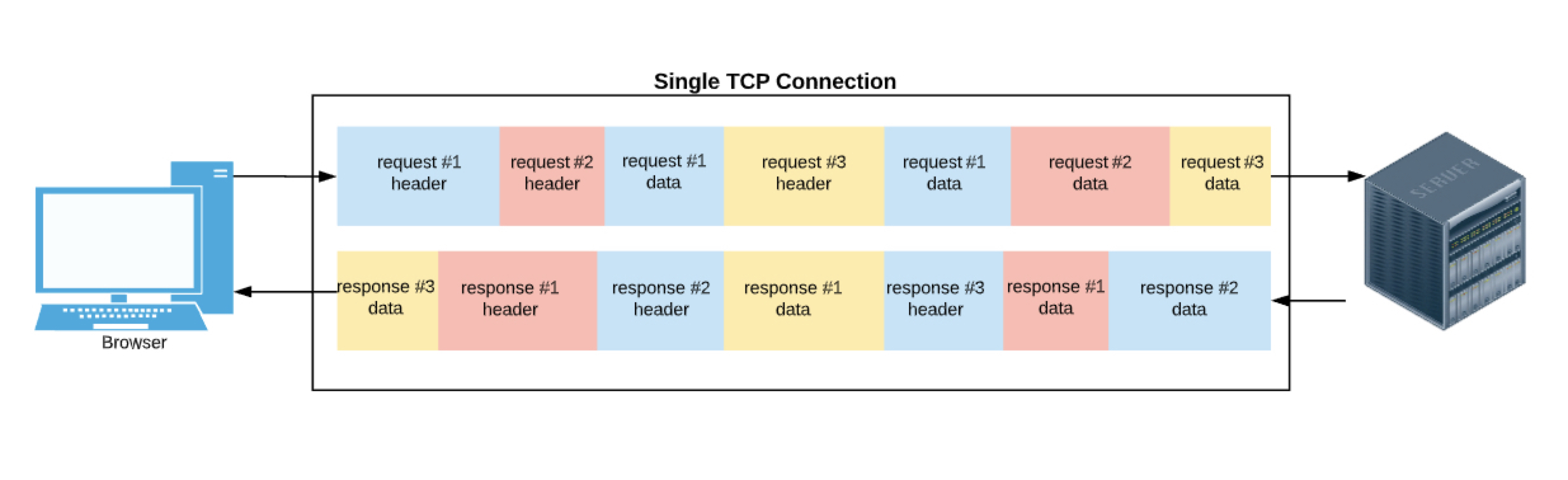

When HTTP/1.1 came along, it introduced the concept of “pipelining”, where multiple HTTP requests are sent on a single TCP connection without waiting for the earlier responses. That way, a client can request a bunch of resources from the server at the same time but only has to open up one connection.

There was a huge problem with the implementation though — the server had to respond in the order it received requests from the client because there was no other way to identify which request went with which response. This leads to something called the head-of-line blocking problem (HOL), where if the client sends requests A, B, and C, but request A requires a lot of server resources, B and C are blocked until A finishes.

Along comes HTTP/2, which was developed to overcome performance problems with HTTP/1.x. It gives us compressed HTTP headers and request prioritization. Most importantly, it allows us to multiplex multiple requests over a single TCP connection. Wait, didn’t we already have that last one with HTTP/1.1? Yes and no: because of issues like the HOL problem, browsers never reliably supported pipelining, so the web development community went with homegrown workarounds instead, such as domain sharding, concatenation, spriting, and inlining assets.
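To make the head-of-line blocking problem concrete, here is a toy model of the A, B, C example. It assumes the server works on all requests in parallel and only the delivery order differs; the processing times are made-up numbers in milliseconds, and the function names are hypothetical.

```javascript
// Pipelining: a response cannot be delivered before the ones ahead of it,
// so each delivery time is at least the delivery time of its predecessor.
function pipelinedDelivery(durations) {
  let previous = 0;
  return durations.map((done) => (previous = Math.max(previous, done)));
}

// Multiplexing: each response is delivered as soon as it is ready.
function multiplexedDelivery(durations) {
  return [...durations];
}

const durations = [300, 10, 10]; // requests A, B, C
console.log(pipelinedDelivery(durations));   // [ 300, 300, 300 ]
console.log(multiplexedDelivery(durations)); // [ 300, 10, 10 ]
```

In the pipelined case B and C are ready after 10 ms but sit behind A for the full 300 ms.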

HTTP/2 is a real multiplexing solution because of how the underlying “framing layer” works. Instead of HTTP/1.x’s plaintext data, HTTP/2 messages are broken down into smaller parts, like headers and request/response bodies, and then encoded in a binary format.

These small, encoded chunks are called “frames” and include an ID, so they can be associated with a particular message. That means they don’t need to be sent all at once — frames that belong to different messages can be interleaved. When these frames reach their destination, they can be reassembled and decoded into a standard HTTP message by HTTP/2 compatible servers.
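The interleaving idea can be illustrated with a toy model. This is only a sketch: real HTTP/2 frames are binary and carry more fields, and `toFrames`, `interleave`, and `reassemble` are hypothetical names. What it does show is why an ID on every frame is enough to untangle messages that share one connection.

```javascript
// Split a message into frames tagged with the id of the stream they belong to.
function toFrames(streamId, message, size) {
  const frames = [];
  for (let i = 0; i < message.length; i += size) {
    frames.push({ streamId, payload: message.slice(i, i + size) });
  }
  return frames;
}

// Interleave frames from several streams round-robin, as they might
// appear on a single shared connection.
function interleave(...streams) {
  const wire = [];
  const longest = Math.max(...streams.map((s) => s.length));
  for (let i = 0; i < longest; i++) {
    for (const frames of streams) {
      if (i < frames.length) wire.push(frames[i]);
    }
  }
  return wire;
}

// Receiver side: group frames by stream id and rebuild each message.
function reassemble(wire) {
  const messages = new Map();
  for (const { streamId, payload } of wire) {
    messages.set(streamId, (messages.get(streamId) || "") + payload);
  }
  return messages;
}

const wire = interleave(
  toFrames(1, "GET /index.html", 5),
  toFrames(3, "GET /events", 5)
);
console.log(wire.map((f) => f.streamId).join(" ")); // 1 3 1 3 1 3
console.log(reassemble(wire).get(3)); // GET /events
```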

As long as requests go to the same hostname, turning on HTTP/2 lets us run many concurrent requests over the same connection, up to the maximum number of concurrent streams the server allows!

There is another implication here for SSE. Since an SSE stream is just a long-running HTTP request, we can have many individual SSE streams over the same connection. The server can push events to the client on those streams while the client keeps sending ordinary requests to the server, all over that one connection.
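On the client, taking advantage of this needs nothing special: open one `EventSource` per stream and let the browser share the connection. A minimal sketch, assuming a hypothetical helper `subscribeAll` and made-up route names:

```javascript
// Open one EventSource per path and route every event to one callback.
// `EventSourceImpl` is injectable so the sketch can run outside a browser;
// in a page you would pass the global EventSource.
function subscribeAll(paths, eventName, onEvent, EventSourceImpl) {
  return paths.map((path) => {
    const source = new EventSourceImpl(path);
    source.addEventListener(eventName, (event) => onEvent(path, event.data));
    return source;
  });
}

// Browser usage; "/jokes-a/events" and "/jokes-b/events" are hypothetical
// routes. Relative URLs keep both streams on the page's own hostname, so an
// HTTP/2 connection to that host can carry them.
// const sources = subscribeAll(
//   ["/jokes-a/events", "/jokes-b/events"],
//   "dadJoke",
//   (path, joke) => console.log(path, joke),
//   EventSource
// );
// sources.forEach((s) => s.close()); // when the streams are no longer needed
```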

SSE and Envoy

We have a sweet setup here so far — HTTP/2 provides the efficient data transport layer, while SSE gives us a native web API and messaging format for the client.

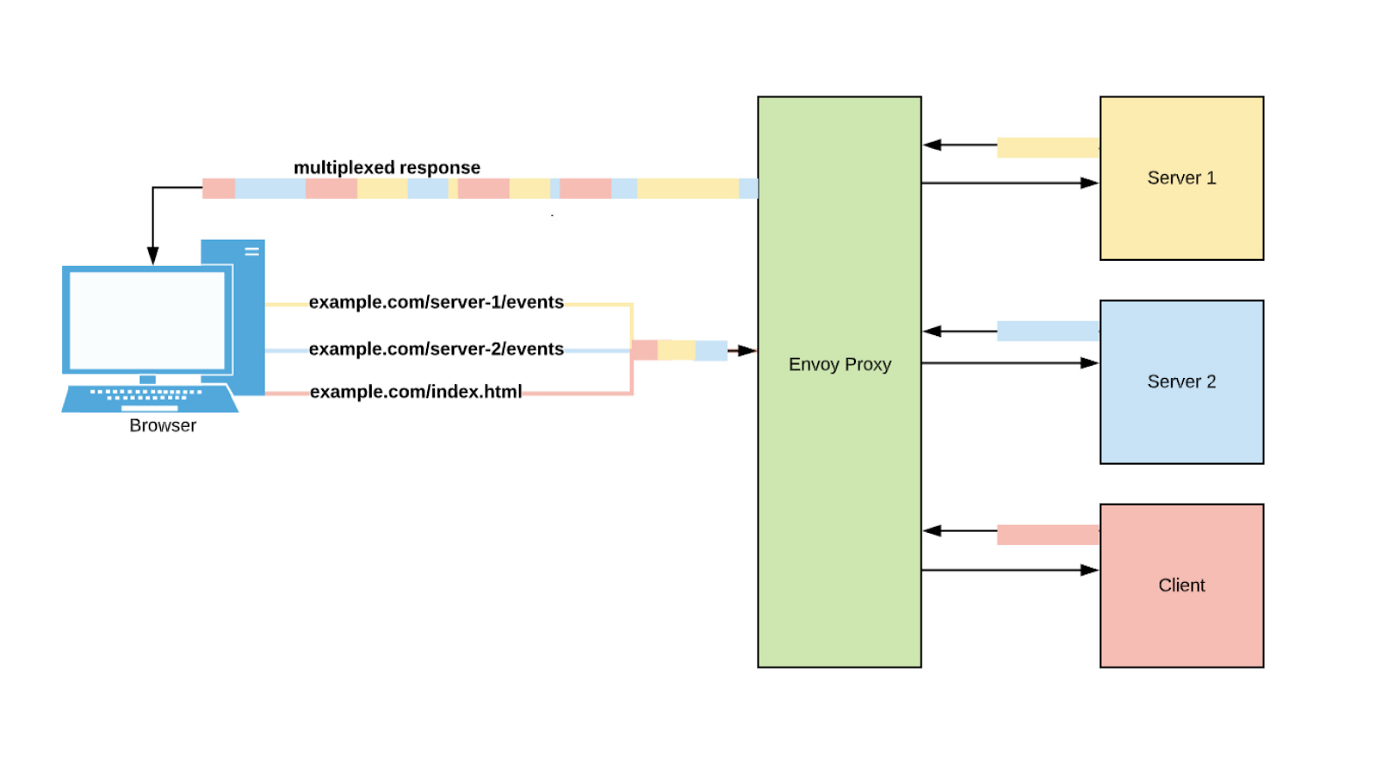

Now, I was curious if I could get this working in a distributed system, where the server(s) and client are deployed in individual containers behind a gateway or “edge” proxy. This proxy handles all incoming requests and routes them to the appropriate place, which is important because it lets us put everything behind the same hostname (“example.com” in the diagram below). That way, we can take advantage of a multiplexed connection. Here’s the setup:

You can see we have two event streams multiplexed over a single connection, AND we’re getting the static assets (index.html) over that connection as well!

I’ve reproduced this setup in a small docker-compose — two SSE servers pushing out dad jokes every 10 seconds and a client that renders the events in the browser. Try it out for yourself by cloning the repo here and then navigating to https://localhost:8080 with your developer tools open.

    git clone https://github.com/kaitmore/simple-sse
    cd simple-sse
    docker-compose up -d

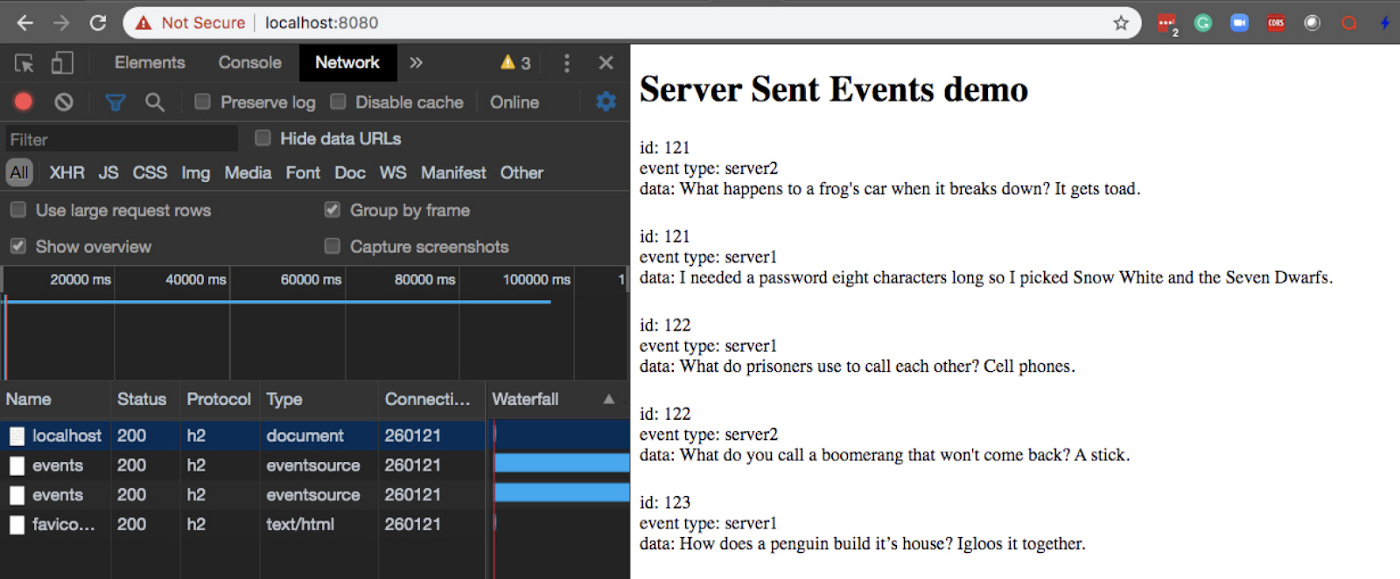

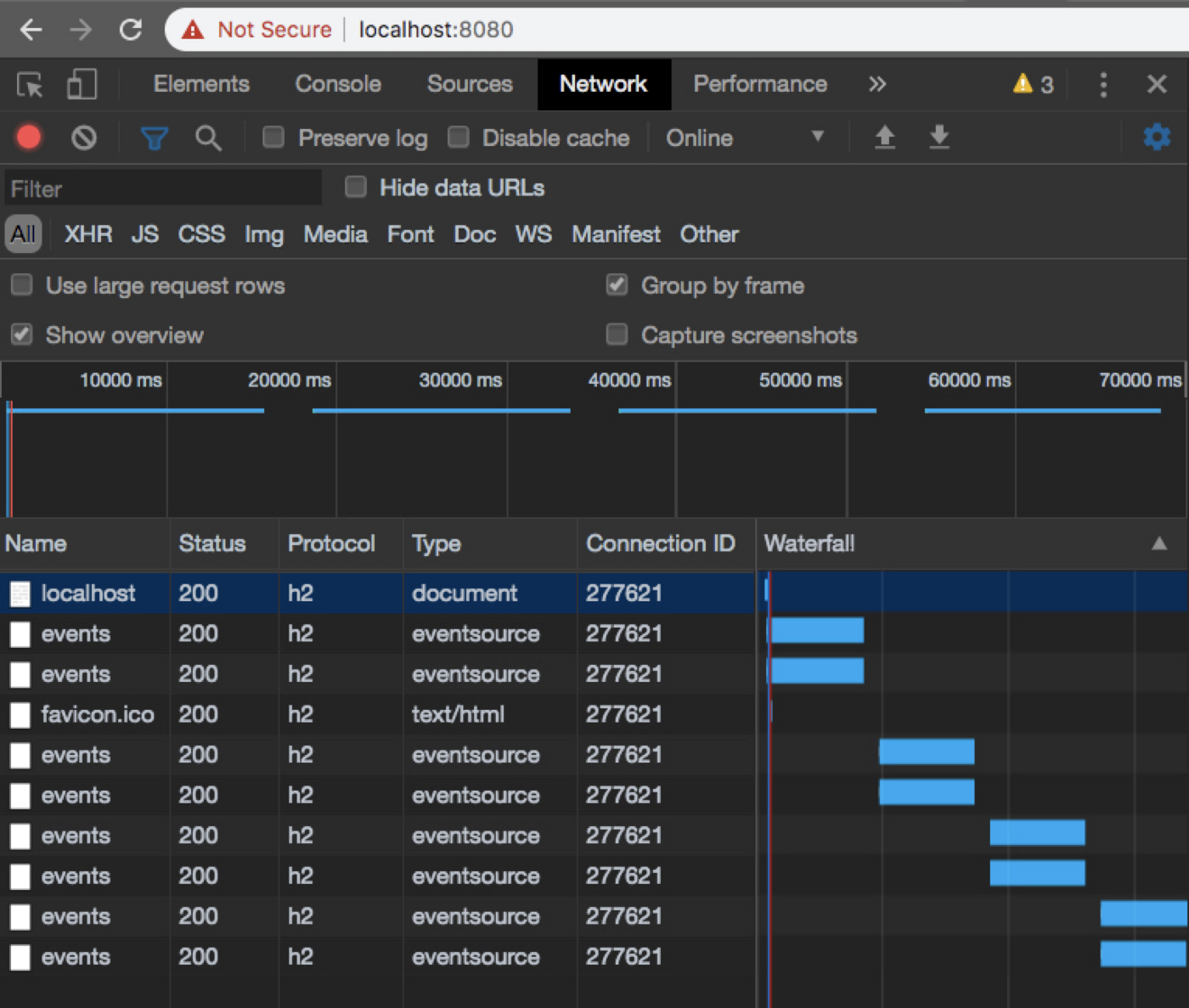

In your Network tab, you should see requests to our 2 event servers using the h2 protocol, as well as the responses rendered on the page (you might have to wait a moment):

You might be wondering, how do you know the requests/responses are being multiplexed? I see two streams listed there!

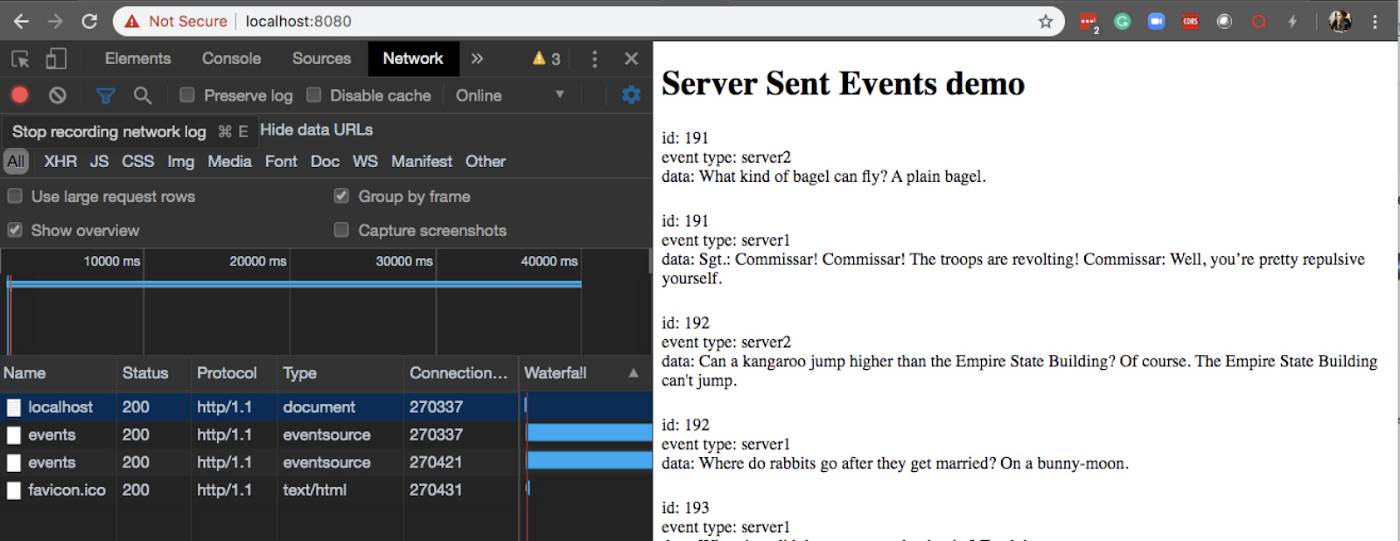

Check out the “Connection ID” column, and notice how they’re all the same: 260121. The items listed in your Network tab are requests, not TCP connections. If we were to run this same docker-compose with HTTP/2 disabled, you would see each request has a different connection ID*:

*Well… kinda. localhost (index.html) and the first event stream actually have the same connection ID because after index.html is returned, that connection is freed up to be reused by the next request.

Envoy Configuration

Configuring Envoy to work with SSE took a bit of experimentation. You can see the final configuration here.

Enabling HTTP/2

The first thing I needed to do was enable HTTP/2. According to Envoy’s docs, the alpn_protocols field needs to be set anywhere that has a tls_context so that it will accept HTTP/2 connections. I also found it was necessary to set http2_protocol_options on every cluster that wants HTTP/2, even though I wasn’t specifying any options.
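One way to check that the ALPN setting took effect, without opening a browser, is to do the TLS handshake by hand and see which protocol the proxy selects. A rough sketch using Node's built-in `tls` module; the helper name `negotiatedProtocol` is hypothetical, and disabling certificate verification assumes a local self-signed certificate:

```javascript
const tls = require("tls");

// Resolve with the protocol the proxy picked during the TLS handshake.
// "h2" means HTTP/2 was negotiated; "http/1.1" means it was not.
function negotiatedProtocol(host, port) {
  return new Promise((resolve, reject) => {
    const socket = tls.connect(
      {
        host,
        port,
        ALPNProtocols: ["h2", "http/1.1"], // what we offer, in order of preference
        rejectUnauthorized: false, // assumption: local self-signed certificate
      },
      () => {
        resolve(socket.alpnProtocol); // false if no ALPN protocol was selected
        socket.end();
      }
    );
    socket.on("error", reject);
  });
}

// Usage against the local edge proxy from the demo above:
// negotiatedProtocol("localhost", 8080).then(console.log);
```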

Configuring Timeouts

Once I had HTTP/2 enabled, I quickly noticed something funky was going on. A new event stream seemed to be created every 10–15 seconds. The waterfall shows that the connection is indeed dropped, so the browser tries to reconnect:

After some googling I stumbled upon this little gem in the Envoy docs FAQ:

“This [route-level] timeout defaults to 15 seconds, however, it is not compatible with streaming responses (responses that never end), and will need to be disabled. Stream idle timeouts should be used in the case of streaming APIs as described elsewhere on this page.”

Updating all the route definitions to disable timeouts solved the issue:

    routes:
      - match:
          prefix: "/"
        route:
          cluster: client
          timeout: 0s # Disable the 15s default timeout

Another timeout to note is the stream_idle_timeout, which controls how long a stream can stay open with no upstream or downstream activity. The default is 5m, and it can be disabled the same way as the route-level timeout above, by setting it to 0s.
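If you would rather keep an idle timeout in place, a common SSE technique is to have the server send a periodic comment line so a quiet stream never looks idle. This is not something the demo repo is known to do; it is a sketch with a hypothetical helper, `startHeartbeat`, and an arbitrary interval:

```javascript
// Lines that start with ":" are comments in the event-stream format;
// EventSource ignores them, but they are still data sent on the stream.
const HEARTBEAT = ": keep-alive\n\n";

// Write a comment to `res` every `intervalMs` and return a stop function.
// `res` is any writable response, e.g. the one handed to a Node http handler.
function startHeartbeat(res, intervalMs) {
  const timer = setInterval(() => res.write(HEARTBEAT), intervalMs);
  return () => clearInterval(timer);
}

// Usage sketch inside a request handler; 60 s is an arbitrary value chosen
// to stay well under the 5m default idle timeout:
// const stop = startHeartbeat(res, 60000);
// req.on("close", stop);
```

Stopping the timer when the request closes matters, otherwise every dropped client leaves an interval running.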

Wrapping up

Server-sent events give us a robust alternative to polling with a built-in web API, automatic reconnects, custom events, and HTTP/2 support. Pairing SSE with Envoy as a gateway lets us take advantage of that HTTP/2 support by proxying to different streaming servers under a single hostname — reducing network chatter and speeding up our UI.

Footnote: HTTP/2’s Server Push vs. SSE

When I was first learning about HTTP/2 and SSE, I kept reading about “HTTP/2 server push” and I didn’t quite understand how these things related. Are they the same? Are they competing technologies? Can they be used together?

Turns out, they are different. Server push is a way to send assets to a client before they ask. A common example would be a frontend that requests index.html. The server sends back this requested file, but instead of the browser parsing it and requesting the other resources it references, the server already knows what the client wants and “pushes” out the rest of the static assets, like style.css and bundle.js. Instead of the client making 3 separate requests, one per asset, everything arrives in response to a single request. Server push takes advantage of the same underlying technology, but the use case is different.
