AI Governance: Building Ethical and Transparent Systems for the Future

This article takes a deep dive into AI governance, covering its challenges, frameworks, standards, and best practices.


What Is AI Governance?

Artificial intelligence (AI) governance refers to the frameworks, policies, and ethical standards that guide the development, deployment, and management of AI technologies. It covers considerations such as data privacy, algorithmic transparency, accountability, and fairness, with the aim of ensuring that AI systems operate in line with societal values and legal standards.

What Is the Significance of AI Governance?

As AI spreads into more areas of life, from healthcare to finance, there is a pressing need to address the ethical concerns it raises, such as bias, discrimination, and the potential for misuse. Effective governance structures help establish accountability by clarifying who is responsible when AI systems perform poorly or cause harm. They also promote transparency by making the methods behind AI decision-making clear to users, which builds trust and allows for informed scrutiny.

Moreover, the rapid advancement of AI technologies necessitates a proactive approach to governance that addresses current challenges and anticipates future risks. By instituting rigorous governance frameworks, organizations can mitigate potential negative impacts while harnessing the benefits of AI, creating a balance between innovation and ethical responsibility. In sum, AI governance plays a crucial role in fostering a technological ecosystem that is ethical, transparent, and ultimately beneficial for society as a whole.

Challenges in AI Governance

The complex landscape of AI governance presents significant challenges that must be addressed to ensure the ethical and transparent use of artificial intelligence systems. One of the most pressing is bias, which can undermine fairness in decision-making. Algorithms trained on data that inadvertently reflect societal biases can perpetuate discrimination, eroding trust and equity in AI applications. Addressing bias therefore requires more than algorithmic adjustments: it also calls for a critical examination of the data used in training.
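
One common way to make a bias review concrete is to compare how often a model produces a favorable outcome for each group. The sketch below computes a demographic parity gap, one of several fairness metrics (others, such as equalized odds, can disagree with it). The loan-approval data, the group labels, and the 0.1 review threshold are all hypothetical values chosen for illustration.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan-approval outputs (1 = approved) for two applicant groups.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

REVIEW_THRESHOLD = 0.1  # hypothetical; each organization sets its own tolerance
gap = demographic_parity_gap(preds, groups)
needs_review = gap > REVIEW_THRESHOLD

print(selection_rates(preds, groups))  # {'A': 0.75, 'B': 0.25}
print(f"parity gap: {gap:.2f}")        # parity gap: 0.50
```

A large gap does not prove discrimination on its own, but it flags the model and its training data for the closer examination described above.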

Another critical challenge involves security and privacy. Integrating AI across sectors increases the risk of data breaches and misuse of sensitive information, which can compromise user privacy. Organizations must develop robust governance frameworks that prioritize data protection, enforce stringent security measures, and ensure compliance with emerging regulations designed to safeguard personal information from unauthorized access and exploitation.
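
As one example of a data protection measure, teams often pseudonymize direct identifiers before data reaches training or analytics pipelines. The minimal sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library) so the same identifier always maps to the same token, but the mapping cannot be recomputed without the key. The key value and record fields are hypothetical. Note that under the GDPR, pseudonymized data is still personal data, so this reduces exposure rather than removing obligations.

```python
import hashlib
import hmac

# Hypothetical key: in practice it would be loaded from a secrets manager, never hard-coded.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"

def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Map an identifier to a stable 64-character hex token using HMAC-SHA256."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical record: only the direct identifier is replaced.
record = {"email": "jane@example.com", "age": 42, "outcome": "approved"}
safe_record = {**record, "email": pseudonymize(record["email"])}

print(safe_record["email"][:16], "...")
```

A keyed hash is used instead of a plain hash because common identifiers such as email addresses can be brute-forced from an unkeyed digest.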

Additionally, issues of accountability and transparency are central to the debate on AI governance. As AI systems become more autonomous, understanding their decision-making processes becomes increasingly difficult, leading to a lack of transparency that can erode public trust. Governance frameworks need to establish clear accountability mechanisms to determine who is responsible for AI-driven decisions, especially when those decisions lead to negative outcomes or exacerbate existing inequalities. This requires transparent reporting practices and stakeholder engagement to foster an environment where AI systems are held accountable.
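
Transparent reporting starts with a reliable record of what a system decided, with which model version, and who owns the outcome. The sketch below is a hypothetical, in-memory decision log in which each entry stores the hash of the previous one, so any later edit to a past entry is detectable. The class name, fields, and example values are assumptions for illustration; a production system would persist entries to durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class DecisionLog:
    """Hypothetical append-only log; each entry embeds the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output, owner):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "owner": owner,  # team accountable for this decision
            "prev_hash": prev_hash,
        }
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; editing any past entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body.get("prev_hash") != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionLog()
log.record("credit-model-1.3", {"income": 52000}, "approved", owner="risk-team")
log.record("credit-model-1.3", {"income": 18000}, "declined", owner="risk-team")
print(log.verify())  # True
log.entries[0]["output"] = "declined"  # simulate tampering with a past decision
print(log.verify())  # False
```

Hash chaining makes tampering evident, not impossible; it complements, rather than replaces, access controls and independent audits.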

Effective AI governance is crucial for navigating these challenges, calling for a holistic and adaptable approach that incorporates ethical standards, robust risk management, and collaborative regulation across international borders. By addressing these core issues, organizations can help ensure that AI technologies are deployed responsibly and beneficially.
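
A common starting point for risk management is a risk register that scores each risk by likelihood and impact and prioritizes mitigation accordingly. The sketch below assumes a 1 to 5 scale for both dimensions and a review threshold of 10; the scale, threshold, and example risks are hypothetical and would be set by each organization's own risk policy.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), hypothetical scale
    impact: int      # 1 (negligible) to 5 (severe), hypothetical scale
    mitigation: str = ""

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

def prioritize(risks, threshold=10):
    """Return risks scoring at or above the threshold, highest first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)

register = [
    Risk("Training data under-represents a user group", 4, 4, "re-sample; fairness audit"),
    Risk("Personal data leaked via model outputs", 2, 5, "output filtering; access control"),
    Risk("Model drift after a market change", 3, 3, "monthly drift monitoring"),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}  ->  {risk.mitigation}")
```

Multiplying likelihood by impact is a deliberately simple scoring rule; it makes trade-offs visible but should be revisited as the register and its evidence mature.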

AI Governance Frameworks and Standards

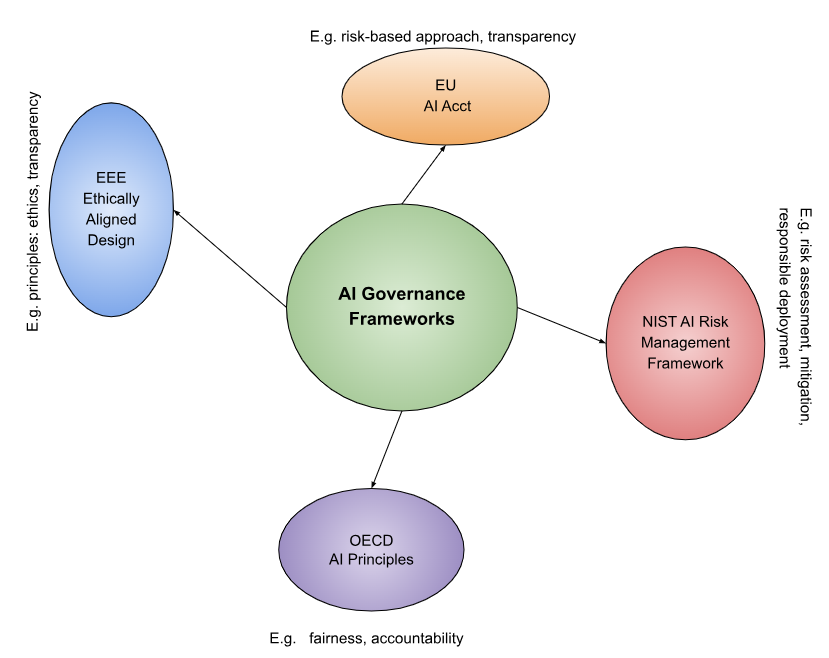

AI governance frameworks play a crucial role in ensuring the ethical and transparent deployment of AI technologies. Key frameworks such as the EU AI Act and the NIST AI Risk Management Framework (AI RMF) provide comprehensive guidance to help organizations navigate the complex landscape of AI regulation and ethics.

The EU AI Act takes a risk-based approach to regulating AI, sorting systems into risk tiers (unacceptable, high, limited, and minimal) and setting different compliance requirements for each. It emphasizes transparency, requiring organizations to inform users when they are interacting with AI systems, and it prohibits certain harmful applications, such as social scoring. Similarly, the NIST AI RMF, organized around four core functions (Govern, Map, Measure, and Manage), helps organizations identify, assess, and mitigate AI-related risks, promoting responsible AI deployment across sectors. Other notable frameworks include the OECD AI Principles and the IEEE's Ethically Aligned Design, both of which underscore the importance of fairness, transparency, and accountability in AI systems.
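
To show how a risk-based approach can translate into internal process, the sketch below triages use cases into the four tiers the EU AI Act uses. The use-case names and their mapping are a hypothetical internal catalogue loosely following examples commonly associated with the Act (social scoring is prohibited, credit scoring and CV screening are high-risk, chatbots carry transparency duties, spam filters are minimal-risk); it is not a legal determination, and the obligation summaries are simplified.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "risk management, documentation, human oversight, conformity assessment"
    LIMITED = "transparency obligations (e.g., disclose AI interaction)"
    MINIMAL = "no specific obligations"

# Hypothetical internal catalogue; real classification needs legal review.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "cv_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

def triage(use_case: str) -> RiskTier:
    """Look up a use case; unknown cases default to HIGH so they get reviewed."""
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)

for case in ["customer_chatbot", "credit_scoring", "new_unlabeled_tool"]:
    tier = triage(case)
    print(f"{case}: {tier.name} -> {tier.value}")
```

Defaulting unknown use cases to the high-risk tier is a conservative design choice: it forces a review rather than letting an unclassified system slip through with no obligations.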

Together, these frameworks give organizations structured approaches for aligning AI practices with ethical principles and societal values, with transparency, accountability, and fairness at their core. By adhering to them, organizations can foster public trust, comply with legal and ethical standards, and reduce risks such as bias and discrimination in AI decision-making. In this way, AI governance frameworks help shape a landscape in which AI innovation benefits society while respecting established moral and legal norms.

Best Practices for AI Governance

In the realm of AI governance, best practices play a crucial role in fostering ethical and transparent AI systems. Key practices include:

- Data privacy: Implement robust measures to safeguard personal information and comply with regulations such as the GDPR.

- Transparency: Provide clear insight into how AI algorithms operate so that stakeholders can understand decision-making processes.

- Fairness: Prioritize the prevention of discrimination and bias through regular assessments of, and adjustments to, algorithms.

- Continuous monitoring: Monitor AI systems over time to identify issues and unintended consequences.

- Ethical guidelines and collaboration: Establish comprehensive ethical guidelines and work with interdisciplinary teams, including ethicists, legal experts, and technologists, to build a holistic approach that addresses diverse concerns and fosters public trust.
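
Continuous monitoring often includes checking whether production inputs still resemble the data a model was trained on. The sketch below computes the Population Stability Index (PSI), a widely used drift measure, over pre-binned feature distributions. The bin shares are hypothetical, and the 0.1 and 0.25 cut-offs are a commonly cited rule of thumb rather than a standard; teams should calibrate their own thresholds.

```python
import math

def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions (shares summing to 1)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

def drift_level(score):
    """Rule-of-thumb interpretation; thresholds are assumptions, not a standard."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate"
    return "significant"

baseline = [0.25, 0.25, 0.25, 0.25]   # hypothetical feature distribution at training time
this_week = [0.10, 0.20, 0.30, 0.40]  # same bins observed in production

score = psi(baseline, this_week)
print(f"PSI = {score:.3f} ({drift_level(score)})")  # PSI = 0.228 (moderate)
```

A drift alert is a prompt for investigation, not proof that the model is wrong; the follow-up might be a fairness re-check, retraining, or simply documenting an expected seasonal shift.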

AI Governance in Practice

In recent years, several institutions have pioneered AI governance initiatives that illustrate the vital role governance plays in ensuring ethical and transparent AI systems. One notable example is the Organisation for Economic Co-operation and Development (OECD), which adopted the OECD AI Principles in 2019 to give countries a framework for aligning their AI policies with ethical standards and societal values. The principles emphasize accountability, transparency, and stakeholder engagement. Similarly, the European Union’s Artificial Intelligence Act, passed into law in 2024, sets a bold precedent for comprehensive regulation of AI development and use that prioritizes safety and human rights, directly influencing governance frameworks beyond the EU’s borders.

Companies that adopt robust AI governance structures can see significant benefits. Effective governance not only reduces bias and compliance risks but can also enhance organizational reputation and stakeholder trust. For instance, implementing ethical guidelines and accountability measures for AI usage can help mitigate model bias and improve data integrity, leading to better decisions. This, in turn, can create a competitive advantage as customers increasingly demand transparency and ethical practices. Furthermore, companies that actively self-govern their AI systems, integrating technical controls with organizational policies, are better equipped to navigate the evolving regulatory landscape and to capitalize on market opportunities created by ethical AI practices. Overall, these examples underscore the importance of AI governance in promoting responsible AI development that aligns with societal expectations and legal requirements.
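
Integrating technical controls with organizational policies can be as simple as encoding a release policy as an automated check that blocks deployment until required evidence exists. The sketch below is a hypothetical "policy as code" gate: the field names, the 0.1 maximum parity gap (a fairness metric produced by a separate audit), and the specific requirements are illustrative assumptions, not a prescribed checklist. The data protection impact assessment (DPIA) it checks for is a GDPR concept.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class ModelRelease:
    """Hypothetical evidence a team must attach before shipping a model."""
    name: str
    owner: str = ""                     # accountable team or person
    parity_gap: Optional[float] = None  # result of a fairness audit, if one was run
    dpia_done: bool = False             # data protection impact assessment completed
    model_card: bool = False            # documentation of intended use and limits

def release_blockers(release: ModelRelease, max_gap: float = 0.1) -> List[str]:
    """Return the reasons a release violates policy; an empty list means it may ship."""
    blockers = []
    if not release.owner:
        blockers.append("no accountable owner assigned")
    if release.parity_gap is None:
        blockers.append("fairness audit missing")
    elif release.parity_gap > max_gap:
        blockers.append(f"parity gap {release.parity_gap:.2f} exceeds {max_gap}")
    if not release.dpia_done:
        blockers.append("data protection impact assessment not completed")
    if not release.model_card:
        blockers.append("model card (documentation) missing")
    return blockers

candidate = ModelRelease("credit-model-1.4", owner="risk-team", parity_gap=0.18, model_card=True)
for reason in release_blockers(candidate):
    print("BLOCKED:", reason)
```

Returning every blocker, rather than stopping at the first, gives the owning team a complete to-do list and leaves an auditable record of why a release was held.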

The Future of AI Governance

As artificial intelligence continues to advance at an unprecedented rate, AI governance will likely become increasingly sophisticated and multifaceted. Future governance frameworks are expected to integrate diverse stakeholder perspectives, including those of governments, industry, and civil society, which will be essential for addressing the complex ethical dilemmas and social implications of AI technologies. Furthermore, as AI systems become more autonomous and embedded in critical sectors, regulatory frameworks will need to evolve to strengthen accountability, enhance safety, and ensure that these systems serve the broader interests of humanity.

The importance of global collaboration in shaping fair and ethical AI systems cannot be overstated. AI technology transcends national boundaries, making it imperative for countries to work together to establish common standards and best practices. By fostering international partnerships, governments and organizations can share knowledge and resources, thereby enhancing the efficacy of AI governance. Moreover, global collaboration will help to bridge gaps in regulatory approaches and mitigate risks associated with disparities in technological development. Ultimately, a unified effort will contribute to the creation of equitable AI systems that promote transparency, respect individual rights, and ensure inclusive benefits for all members of society.

Conclusion

The need for AI governance has become increasingly evident as artificial intelligence systems proliferate and influence more aspects of society. Effective governance frameworks are essential to ensure that AI technologies are developed and deployed ethically: guarding against bias, ensuring accountability, and protecting user privacy. By establishing clear guidelines and standards, stakeholders can foster trust in AI systems and promote their responsible use in fields from healthcare to finance.

Moreover, it is imperative that all stakeholders, including policymakers, industry leaders, and researchers, prioritize the development of responsible AI practices. This call to action encourages collaboration across sectors to establish comprehensive governance models that not only address the immediate challenges but also anticipate future ethical dilemmas. By embracing a proactive approach to AI governance, we can work towards an innovative future where AI serves the greater good while respecting fundamental human rights and values.

The following authoritative resources explore AI governance in more depth, offering tools, frameworks, and insights:

- World Economic Forum (WEF): The WEF provides a dedicated platform for AI governance, discussing frameworks, policies, and global collaborations to ensure responsible AI deployment. Their initiatives focus on transparency, fairness, and ethics in AI systems.

- Partnership on AI (PAI): PAI is a multi-stakeholder organization that brings together researchers, companies, and policymakers to create best practices and frameworks for AI governance, with an emphasis on fairness, accountability, and explainability.

- OECD AI Policy Observatory: The OECD AI Policy Observatory offers tools and resources for governments and organizations to design and implement AI policies, with a focus on transparency, robustness, and inclusivity.

- AI Governance by Modulos: Modulos.ai provides a comprehensive guide to AI governance, highlighting essential elements such as data privacy, algorithmic transparency, and ethical considerations in AI implementation.

- NextGen Invent: This site explores AI governance strategies and frameworks, emphasizing model explainability, bias mitigation, and stakeholder engagement as pillars of responsible AI.

These resources are valuable for understanding the complexities of AI governance and implementing ethical AI practices. You can explore these platforms to gain deeper insights and practical tools.


Opinions expressed by DZone contributors are their own.
