The Evolution of Incident Management from On-Call to SRE

Incident management has evolved considerably over the last couple of decades.


Traditionally limited to an on-call team and an alerting system, incident management today includes automated incident response combined with a complex set of SRE workflows.

Importance of Reliability

While the number of active internet users and people consuming digital products has been rising for a while, it is the combination of increased user expectations and competitive digital experiences that has pushed organizations to deliver highly reliable products and services.

The bottom line is, customers have every right to expect reliable software that works when they need it, and it is the organization’s responsibility to build reliable products.

Having said that, no software can be 100% reliable; even achieving 99.9% reliability is a monumental task. As engineering infrastructure grows more complex by the day, incidents become inevitable. What makes all the difference is triaging and remediating issues quickly, with minimal impact.
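To put the 99.9% figure in perspective, the arithmetic below converts an availability target into the downtime it permits. It is a minimal sketch that assumes a 365-day year and a 30-day month; real SLAs may define the measurement window differently, and the function name is illustrative.

```python
# Convert an availability target into allowed downtime for a period.
# Assumes a 365-day year and a 30-day month (illustrative choices).

def allowed_downtime_minutes(availability_pct: float, period_days: float) -> float:
    """Minutes of downtime permitted by an availability target over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


for target in (99.0, 99.9, 99.99):
    per_year = allowed_downtime_minutes(target, 365)
    per_month = allowed_downtime_minutes(target, 30)
    print(f"{target}%: {per_year / 60:.2f} h/year, {per_month:.1f} min/month")
```

At 99.9%, the budget works out to roughly 8.76 hours per year, or about 43 minutes per 30-day month, which is why each extra "nine" is so hard to earn.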

From the Vault: Recapping Incidents and Outages from the Past

Let’s look back at some notable outages from the past that had a major impact on businesses and end users alike.

October 2021: A mega outage took down Facebook, WhatsApp, Messenger, Instagram, and Oculus VR for almost five hours, leaving users unable to use any of those products in that time.

November 2021: A downstream effect of a Google Cloud outage led to outages across multiple GCP products. This also indirectly impacted many non-Google companies.

December 2022: An issue with Amazon’s search impacted at least 20% of global users for almost an entire day.

January 2023: Most recently, the Federal Aviation Administration (FAA) suffered an outage due to failed scheduled maintenance, causing 32,578 flights to be delayed and a further 409 to be cancelled. Needless to say, the monetary impact was massive: share prices of numerous U.S. air carriers fell steeply in the immediate aftermath.

Reliability Trends as of 2023

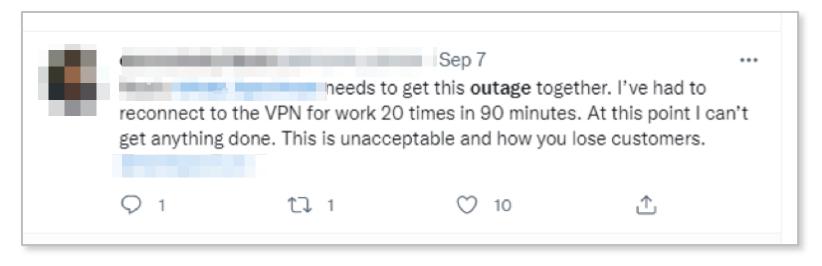

These are just a few of the major outages that have impacted users on a global scale. In reality, incidents like these are not uncommon; they happen far more often than these headline examples suggest. While businesses and business owners bear the brunt of such outages, end users feel the impact too, in the form of a poor user/customer experience (UX/CX).

Here are some notable statistics on the effects of poor UX/CX:

- It takes 12 positive user experiences to make up for one unresolved negative experience

- 88% of web visitors are less likely to return to a site after a bad experience

- Even a 1-second delay in page load can cause a 7% loss in customers

That is why resolving incidents quickly is critical! But the (literally) million-dollar question is: how do you deal with incidents effectively? Let’s address this by first probing the challenges of incident management.

State of Incident Management Today

Evolving business and user needs have directly impacted incident management practices.

- Increasingly complex systems have led to increasingly complex incidents.

The use of public cloud and microservices architecture has made it difficult to find out what went wrong, e.g., which service is impacted and whether the outage has upstream or downstream effects on other services. As a result, incidents are complex too.

- User expectations have grown considerably due to increased dependency on technology.

The widespread adoption of technology has made users more dependent on it and more comfortable using it. As a result, they are unwilling to put up with any kind of downtime or bad experience.

- Tool sprawl amid evolving business needs adds to the complexity.

The growing number of tools within the tech stack, each addressing complex requirements and use cases, only adds to the complexity of incident management.

“...you want teams to be able to reach for the right tool at the right time, not to be impeded by earlier decisions about what they think they might need in the future.” - Steve McGhee, Reliability Advocate, SRE, Google Cloud

Evolution of Incident Management

Over the years, the scope of activities associated with incident management has only grown. Most of the evolution that’s taken place can be bucketed into one of four categories: technology, people, process, and tools.

Technology

| When? | What was it like? |
|---|---|
| 15 years ago | |
| 7 years ago | |
| Today | |

People

| When? | What was it like? |
|---|---|
| 15 years ago | |
| 7 years ago | |
| Today | |

Process

| When? | What was it like? |
|---|---|
| 15 years ago | |
| 7 years ago | |
| Today | |

Tools

| When? | What was it like? |
|---|---|
| 15 years ago | |
| 7 years ago | |
| Today | |

Problems Adjusting to Modern Incident Management

Now is the ideal time to address issues that are holding engineering teams back from doing incident management the right way.

Managing Complexity

Poor service ownership and visibility are the foremost factors preventing engineering teams from making the most of their time during incident triage. This is a result of the adoption of distributed applications, in particular microservices.

A sprawling number of services makes it hard to track service health and each service’s owner. Tool sprawl (a great number of tools within the tech stack) makes it even more difficult to track dependencies and ownership.
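One common way to keep ownership visible is a service catalog that records, for every service, its owning team and its dependencies. The sketch below is a minimal, hypothetical in-memory catalog; the service and team names are placeholders, and real catalogs usually live in a dedicated tool or a version-controlled file.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Service:
    name: str
    owner: Optional[str]  # None means no team has claimed the service
    depends_on: list = field(default_factory=list)  # services this one calls


# Hypothetical catalog entries for illustration.
CATALOG = {
    s.name: s
    for s in [
        Service("checkout", "payments-team", ["payments-api", "inventory"]),
        Service("payments-api", "payments-team", ["postgres"]),
        Service("inventory", "catalog-team", ["postgres"]),
        Service("postgres", None),
    ]
}


def owner_of(service: str) -> str:
    """Return the owning team, flagging unknown or unowned services."""
    svc = CATALOG.get(service)
    if svc is None:
        return "unknown service"
    return svc.owner or "UNOWNED"


def dependency_owners(service: str) -> dict:
    """Who to contact for each direct dependency of `service`."""
    return {dep: owner_of(dep) for dep in CATALOG[service].depends_on}


def unowned_services() -> list:
    """Services without an owner are blind spots during triage."""
    return sorted(name for name, svc in CATALOG.items() if svc.owner is None)
```

During triage, `dependency_owners("checkout")` answers "who else do I need on the call?", while `unowned_services()` surfaces gaps worth fixing before the next incident.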

Lack of Automation

Achieving a respectable level of automation is still a distant dream for most incident response teams. Automating incident response across the entire infrastructure stack can make a significant difference to MTTA (mean time to acknowledge) and MTTR (mean time to resolve).
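Since MTTA and MTTR are the yardsticks for this automation, it helps to be precise about how they are computed. The sketch below measures both from the moment an incident is triggered; definitions vary between teams (MTTR is sometimes "mean time to repair" or is measured from acknowledgment instead), and the incident timestamps are made-up sample data.

```python
from datetime import datetime
from statistics import mean

# Made-up sample incidents: when each was triggered, acknowledged, and resolved.
incidents = [
    {"triggered": "2023-01-10T10:00", "acked": "2023-01-10T10:04", "resolved": "2023-01-10T10:50"},
    {"triggered": "2023-01-12T22:15", "acked": "2023-01-12T22:31", "resolved": "2023-01-12T23:45"},
]


def minutes_between(start: str, end: str) -> float:
    """Elapsed minutes between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 60


def mtta(items) -> float:
    """Mean time to acknowledge: triggered -> acknowledged."""
    return mean(minutes_between(i["triggered"], i["acked"]) for i in items)


def mttr(items) -> float:
    """Mean time to resolve: triggered -> resolved."""
    return mean(minutes_between(i["triggered"], i["resolved"]) for i in items)
```

For the sample data, MTTA is 10 minutes (4 and 16) and MTTR is 70 minutes (50 and 90). Automated notification mainly shrinks MTTA; automated escalation and remediation shrink MTTR.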

Tasks that are often still manual, but have great potential for automation during incident response, include:

- Quickly notifying the on-call team of service outages or service degradation

- Automatically escalating incidents to senior or more experienced responders and stakeholders

- Providing the appropriate conference bridge for communication and documenting incident notes

Poor Collaboration

Poor collaboration during an incident is a major reason response teams are kept from doing what they do best. The process of informing members within the team, across teams, within the organization, and outside the organization must be simplified and organized.

Activities that improve with better collaboration include:

- Bringing visibility of service health to team members, internal and external stakeholders, customers, etc. with a status page

- Maintaining a single source of truth in regard to incident impact and incident response

- Conducting root cause analyses (postmortems or incident retrospectives) in a blameless way

Lack of Visibility into Service Health

One of the most important (and most responsible) activities for the response team is to provide complete transparency about incident impact, triage, and resolution to internal and external stakeholders as well as business owners. The common problems are:

- Absence of a platform, such as a status page, that keeps all stakeholders informed of impact, timelines, and resolution progress

- Inability to track the health of the dependent upstream/downstream services and not just the affected service
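Tracking the health of dependent services boils down to a graph problem: when one service fails, every service that calls it, directly or transitively, may be affected. The sketch below walks a hypothetical dependency map breadth-first to list that blast radius; service names are placeholders, and real systems would derive the map from a catalog or tracing data.

```python
from collections import deque

# Hypothetical dependency map: service -> services it calls.
DEPENDS_ON = {
    "web-frontend": ["checkout", "search"],
    "checkout": ["payments-api", "inventory"],
    "search": ["search-index"],
    "payments-api": ["postgres"],
    "inventory": ["postgres"],
    "search-index": [],
    "postgres": [],
}


def impacted_by(failed: str) -> set:
    """Every service that directly or transitively depends on `failed`."""
    # Invert the edges so we can walk from a service to its consumers.
    consumers = {name: [] for name in DEPENDS_ON}
    for svc, deps in DEPENDS_ON.items():
        for dep in deps:
            consumers.setdefault(dep, []).append(svc)

    impacted = set()
    queue = deque([failed])
    while queue:  # breadth-first search over consumers
        current = queue.popleft()
        for consumer in consumers.get(current, []):
            if consumer not in impacted:
                impacted.add(consumer)
                queue.append(consumer)
    return impacted
```

A `postgres` failure, for example, flags `payments-api`, `inventory`, `checkout`, and `web-frontend`, which is exactly the set of components a status page or responder should be watching.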

Now, the timely question to probe is: what should engineering teams start doing? And how can organizations support them in their reliability journey?

What Can Engineering Leaders/Teams Do to Mitigate the Problem?

The facets of incident management today can be broadly classified into 3 categories:

- On-call alerting

- Incident response (automated and collaborative)

- Effective SRE

Addressing the difficulties and devising appropriate processes and strategies around these categories can help engineering teams improve their incident management by 90%. That certainly sounds ambitious, so let’s understand it in more detail.

On-Call Alerting and Routing

On-call is the foundation of a good reliability practice. There are two main aspects to on-call alerting, highlighted below.

a. Centralizing Incident Alerting and Monitoring

The crucial aspect of on-call alerting is the ability to bring all alerts into a single, centralized command center. This is important because a typical tech stack includes multiple alerting tools monitoring different services (or parts of the infrastructure), set up by different users. An ecosystem that brings such alerts together makes incident management much more organized.
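Centralizing alerts usually means translating each tool's payload into one common shape, so routing and deduplication only have to understand a single format. The sketch below uses a per-tool adapter; `tool_a`, `tool_b`, and their field names are hypothetical stand-ins, not the payload formats of any real monitoring product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    """Common alert shape used by the central command center (hypothetical)."""
    source: str
    service: str
    severity: str  # normalized to "critical", "warning", or "info"
    summary: str


# Each tool reports severity differently; map them onto one scale.
SEVERITY_MAP = {
    "tool_a": {"P1": "critical", "P2": "warning", "P3": "info"},
    "tool_b": {"error": "critical", "warn": "warning", "ok": "info"},
}


def normalize(source: str, payload: dict) -> Alert:
    """Convert a tool-specific payload (hypothetical field names) into an Alert."""
    if source == "tool_a":
        return Alert(source, payload["component"],
                     SEVERITY_MAP[source][payload["priority"]], payload["title"])
    if source == "tool_b":
        return Alert(source, payload["tags"]["service"],
                     SEVERITY_MAP[source][payload["level"]], payload["message"])
    raise ValueError(f"no adapter registered for {source!r}")
```

Keeping one adapter per tool means onboarding a new monitoring tool touches only its adapter, not the routing or escalation logic downstream.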

b. On-Call Scheduling and Intelligent Routing

While organized alerting is a great first step, effective incident response is all about having an on-call schedule in place and routing alerts to the relevant on-call responder, and, in case of non-resolution or inaction, escalating to the most appropriate engineer (or user).
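Scheduling and escalation can be expressed as two small rules: who is on call at a given time, and who gets paged next if nobody acknowledges. The sketch below assumes a weekly round-robin rotation and a fixed 15-minute acknowledgment timeout per escalation level; the names, start date, and chain are hypothetical placeholders.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical weekly round-robin rotation; names are placeholders.
ROTATION = ["alice", "bob", "carol"]
ROTATION_START = datetime(2023, 1, 2, 9, 0)  # a Monday at 09:00
SHIFT = timedelta(days=7)

# Hypothetical escalation chain used after the primary responder.
ESCALATION_CHAIN = ["secondary-oncall", "team-lead", "engineering-manager"]
ACK_TIMEOUT = timedelta(minutes=15)  # assumed time allowed per level to acknowledge


def on_call_at(when: datetime) -> str:
    """Return the primary on-call engineer at `when`."""
    shifts_elapsed = (when - ROTATION_START) // SHIFT  # timedelta // timedelta -> int
    return ROTATION[shifts_elapsed % len(ROTATION)]


def page_target(triggered: datetime, now: datetime, acknowledged: bool) -> Optional[str]:
    """Who should be paged at `now` for an alert raised at `triggered`."""
    if acknowledged:
        return None  # a responder owns the alert; stop escalating
    level = (now - triggered) // ACK_TIMEOUT
    if level == 0:
        return on_call_at(triggered)
    # Each missed timeout moves one step up the chain; the last contact is final.
    return ESCALATION_CHAIN[min(level - 1, len(ESCALATION_CHAIN) - 1)]
```

An alert raised on January 3 at 03:00 goes to `alice`; if it is still unacknowledged 20 minutes later, `page_target` returns `secondary-oncall`, and after two hours it stays with `engineering-manager`.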

Incident Response (Automated and Collaborative)

While on-call scheduling and alert routing are the fundamentals, it is incident response that gives structure to incident management.

a. Alert Noise Reduction and Correlation

Teams are often notified of unnecessary events. More commonly, while resolving an incident, engineers keep getting notified of similar and related alerts that are better grouped and addressed as one collective incident rather than individually. With the right practices in place, alert fatigue can be handled with automation rules that suppress and deduplicate alerts.
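The two automation rules mentioned here can be sketched in a few lines: a suppression list drops known-noisy alerts outright, and a fingerprint plus a time window folds repeats into an existing incident. The 5-minute window, the `(env, service, check)` fingerprint, and the suppression rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

DEDUP_WINDOW_S = 300  # assumed 5-minute window; tune per team
# Hypothetical suppression rule: never page for disk usage checks in staging.
SUPPRESSED = {("staging", "disk_usage")}


@dataclass
class Incident:
    fingerprint: tuple
    first_seen: float
    last_seen: float
    count: int = 1


open_incidents = {}  # fingerprint -> Incident


def ingest(env: str, service: str, check: str, ts: float) -> str:
    """Suppress, deduplicate, or open an incident for one incoming alert."""
    if (env, check) in SUPPRESSED:
        return "suppressed"
    fingerprint = (env, service, check)  # identical fingerprints are duplicates
    incident = open_incidents.get(fingerprint)
    if incident is not None and ts - incident.last_seen <= DEDUP_WINDOW_S:
        incident.count += 1
        incident.last_seen = ts
        return "deduplicated"
    open_incidents[fingerprint] = Incident(fingerprint, ts, ts)
    return "new incident"
```

The window slides from the most recent duplicate, so a steady stream of repeats stays attached to one incident. Using a coarser fingerprint such as `(env, service)` would go a step further and correlate different checks on the same service into a single collective incident.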

b. Integration and Collaboration

Integrating the infrastructure stack with the tools used in the response process is possibly the simplest way to organize incident response. Collaboration can improve by establishing integrations with:

- ITSM tools for ticket management

- ChatOps tools for communication

- CI/CD tools for deployment and quick rollback
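Structurally, these integrations amount to fanning one incident event out to several tools. The sketch below shows that pattern with three hypothetical handler functions that only return descriptive strings; a real setup would call the ITSM, chat, and CI/CD tools' own APIs in their place, and the incident fields are assumptions.

```python
from typing import Callable, List

# Each integration is a callable that reacts to an incident event.
Handler = Callable[[dict], str]


def open_ticket(incident: dict) -> str:  # hypothetical ITSM stand-in
    return f"ITSM: ticket opened for {incident['service']} ({incident['severity']})"


def post_to_channel(incident: dict) -> str:  # hypothetical ChatOps stand-in
    return f"ChatOps: #inc-{incident['id']} created, on-call paged"


def propose_rollback(incident: dict) -> str:  # hypothetical CI/CD stand-in
    return f"CI/CD: rollback of {incident['service']} to {incident['last_good_release']} proposed"


INTEGRATIONS: List[Handler] = [open_ticket, post_to_channel, propose_rollback]


def dispatch(incident: dict) -> List[str]:
    """Send an incident to every registered integration, isolating failures."""
    results = []
    for handler in INTEGRATIONS:
        try:
            results.append(handler(incident))
        except Exception as exc:  # one broken integration shouldn't block the rest
            results.append(f"{handler.__name__} failed: {exc}")
    return results
```

Catching errors per handler is the key design choice: a misconfigured ticketing integration should never stop the chat channel from being created or the rollback from being proposed.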

Effective SRE

Engineering reliability into a product requires the entire organization to adopt the SRE mindset and buy into the ideology. While on-call is at one end of the spectrum, SRE (site reliability engineering) can be thought of as being at the other end.

But what exactly is SRE?

For starters, SRE should not be confused with DevOps. While DevOps focuses on principles, SRE focuses on activities. SRE is fundamentally about taking an engineering approach to systems operations in order to achieve better reliability and performance. It puts a premium on monitoring, tracking bugs, and creating systems and automation that solve the problem in the long term.

While Google was the birthplace of SRE, many top technology companies such as LinkedIn, Netflix, Amazon, Apple, and Facebook have adopted it and benefited greatly from doing so.

POV: Gartner predicts that, by 2027, 75% of enterprises will use SRE practices organization-wide, up from 10% in 2022.

What difference will SRE make?

Today, users expect nothing but the very best, and a dedicated focus on SRE practices helps with:

- Providing a delightful user experience (or customer experience)

- Improving feature velocity

- Providing fast and proactive issue resolution

How does SRE add value to the business?

SRE adds a ton of value to any digital-first business. Here are some of the key benefits:

- Provides an engineering-driven and data-driven approach to improve customer satisfaction

- Enables you to measure toil and save time for strategic tasks

- Leverages automation

- Helps teams learn from incident retrospectives

- Communicates service health through status pages
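Measuring toil can start as simply as logging how time is spent and flagging which work is manual and repetitive. The sketch below computes the toil share of a week and ranks the biggest toil items as automation candidates; the log entries are made-up sample data, and the 50% cap reflects the guideline in Google's SRE book of keeping toil below half of an SRE's time.

```python
# Made-up weekly log: (task, minutes spent, is_toil).
# "Toil" follows the SRE notion of manual, repetitive, automatable work.
week_log = [
    ("restart stuck workers", 90, True),
    ("manually rotate certificates", 60, True),
    ("design autoscaling policy", 240, False),
    ("acknowledge noisy disk alerts", 30, True),
    ("write postmortem", 60, False),
]

TOIL_CAP = 0.50  # guideline: keep toil below 50% of SRE time


def toil_ratio(log) -> float:
    """Fraction of logged time spent on toil."""
    total = sum(minutes for _, minutes, _ in log)
    toil = sum(minutes for _, minutes, is_toil in log if is_toil)
    return toil / total if total else 0.0


def top_automation_candidates(log, n=2):
    """Largest toil items first: the best candidates to automate away."""
    toil_items = [(task, minutes) for task, minutes, is_toil in log if is_toil]
    return sorted(toil_items, key=lambda item: item[1], reverse=True)[:n]
```

Here 180 of 480 logged minutes are toil (37.5%), under the cap, and restarting stuck workers is the first thing worth automating.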

The bottom line is, reliability has evolved. You have to be proactive and preventive.

Teams will have to fix things faster and keep getting better at it.

And on that note, let’s look at the different SRE aspects that engineering teams can adopt for better incident management:

a. Automated Response Actions

Automating manual tasks and eliminating toil is one of the fundamental truths on which SRE is built. Be it automating workflows with runbooks or automating response actions, SRE is a big advocate of automation, and response teams will benefit greatly from having it in place.
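An automated runbook is, at its core, an ordered list of steps executed without a human until something fails, at which point a person takes over. The sketch below models that loop; the three actions are hypothetical placeholders that only record what they would do, and the `still_failing` flag exists purely to simulate a failed health check.

```python
from typing import Callable, List, Tuple

# A runbook step: (description, action). Actions return True on success.
Step = Tuple[str, Callable[[dict], bool]]


def capture_diagnostics(ctx: dict) -> bool:  # hypothetical placeholder
    ctx["log"].append(f"captured diagnostics for {ctx['service']}")
    return True


def restart_service(ctx: dict) -> bool:  # hypothetical placeholder
    ctx["log"].append(f"restarted {ctx['service']}")
    ctx["restarted"] = True
    return True


def verify_health(ctx: dict) -> bool:  # `still_failing` simulates a bad outcome
    healthy = ctx.get("restarted", False) and not ctx.get("still_failing", False)
    ctx["log"].append(f"health check {'passed' if healthy else 'failed'}")
    return healthy


HIGH_MEMORY_RUNBOOK: List[Step] = [
    ("Capture diagnostics", capture_diagnostics),
    ("Restart service", restart_service),
    ("Verify health", verify_health),
]


def run_runbook(steps: List[Step], ctx: dict) -> str:
    """Run steps in order; hand off to a human at the first failure."""
    ctx.setdefault("log", [])
    for description, action in steps:
        if not action(ctx):
            return f"escalate: step '{description}' failed"
    return "resolved automatically"
```

Capturing diagnostics before the restart is deliberate: if the automation fails and escalates, the responder still has the evidence needed for the retrospective.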

b. Transparency

SRE advocates providing complete visibility into the health status of services, which can be achieved with status pages. It also emphasizes greater transparency and visibility of service ownership within the organization.
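A status page typically shows a state per component plus an overall banner. The sketch below derives the banner as the worst component state; the four status levels mirror a convention many status pages use, but they are an assumption here, as are the component names in the usage note.

```python
from enum import IntEnum
from typing import Dict


class Status(IntEnum):
    """Ordered so that a higher value means a worse state."""
    OPERATIONAL = 0
    DEGRADED_PERFORMANCE = 1
    PARTIAL_OUTAGE = 2
    MAJOR_OUTAGE = 3


def overall_status(components: Dict[str, Status]) -> Status:
    """The page-level banner reflects the worst component state."""
    return max(components.values(), default=Status.OPERATIONAL)


def render(components: Dict[str, Status]) -> str:
    """Plain-text rendering of the banner followed by each component."""
    lines = [f"Overall: {overall_status(components).name.replace('_', ' ').title()}"]
    for name, status in sorted(components.items()):
        lines.append(f"  {name}: {status.name.replace('_', ' ').lower()}")
    return "\n".join(lines)
```

With `API` operational, `Checkout` in a partial outage, and `Search` degraded, the banner reads "Overall: Partial Outage", so stakeholders see the most severe impact first without hiding which components are still healthy.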

c. Blameless Culture

During an incident, SRE stresses blaming the process rather than the individuals involved. This blameless culture goes a long way in fostering a healthy team culture and promoting team harmony. Root cause analyses done in this spirit are called incident retrospectives or postmortems.

d. SLO and Error Budget Tracking

This is all about using a metric-driven approach to balance reliability and innovation. It encourages using SLIs (service level indicators) to track service health. By actively tracking SLIs, teams can keep SLOs (service level objectives) and error budgets in check, and thus avoid breaching any customer SLAs (service level agreements).
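The relationship between these metrics is plain arithmetic. The sketch below assumes a request-based availability SLI and a 99.9% SLO; the error budget is the share of requests allowed to fail, and the burn rate compares the observed failure rate with that allowance. The target and request counts are illustrative assumptions.

```python
# Minimal error-budget arithmetic for a request-based availability SLO.
SLO_TARGET = 0.999  # assumed: 99.9% of requests should succeed over the window


def sli(good: int, total: int) -> float:
    """Availability SLI: fraction of requests that succeeded."""
    return good / total if total else 1.0


def error_budget_remaining(good: int, total: int, slo: float = SLO_TARGET) -> float:
    """Fraction of the error budget still unspent (negative means the SLO is breached)."""
    allowed_failures = (1 - slo) * total
    actual_failures = total - good
    if allowed_failures == 0:
        return 1.0 if actual_failures == 0 else float("-inf")
    return 1 - actual_failures / allowed_failures


def burn_rate(good: int, total: int, slo: float = SLO_TARGET) -> float:
    """Observed failure rate relative to the rate the SLO allows (1.0 = on pace)."""
    return (1 - sli(good, total)) / (1 - slo)
```

With 999,400 successes out of 1,000,000 requests, the SLI is 99.94%, 40% of the budget remains, and the burn rate is 0.6. Measured over a short window, a burn rate above 1 means the budget will run out before the SLO window ends, which is a useful early warning well before a customer SLA is at risk.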

Published at DZone with permission of Vardhan NS.
