Adhering to Privacy Laws When Preserving System History

Privacy laws worldwide prohibit access to sensitive data in the clear. It is no different when persisting to operational logs.


Privacy laws worldwide prohibit access to sensitive data in the clear, such as passport numbers and email addresses. It is no different when persisting to operational logs. One approach is to anonymize the data before persisting it. However, this only allows for technical and business investigations that do not depend on the original values. Another approach is to rely on the operating system to prevent unauthorized access using security groups or a similar mechanism. This might be the easiest way to gain compliance. However, it relies heavily on human effort, and humans make mistakes. Furthermore, system administrators with root access will still be able to view sensitive data in the clear.

Another approach that comes to mind is to share a symmetric key between the system doing the logging and all entities vetted for read access. In such a scheme, the application encrypts selectively before writing to the log, allowing users to decrypt when required. This approach raises the usual questions of how to share the key securely, both initially and during the key rotations mandated by the organization’s security policies, not to mention the security risk of so many entities having access to the key.

Using a few sample applications, this post details another way to accomplish this securely by means of format-preserving encryption.

Principles

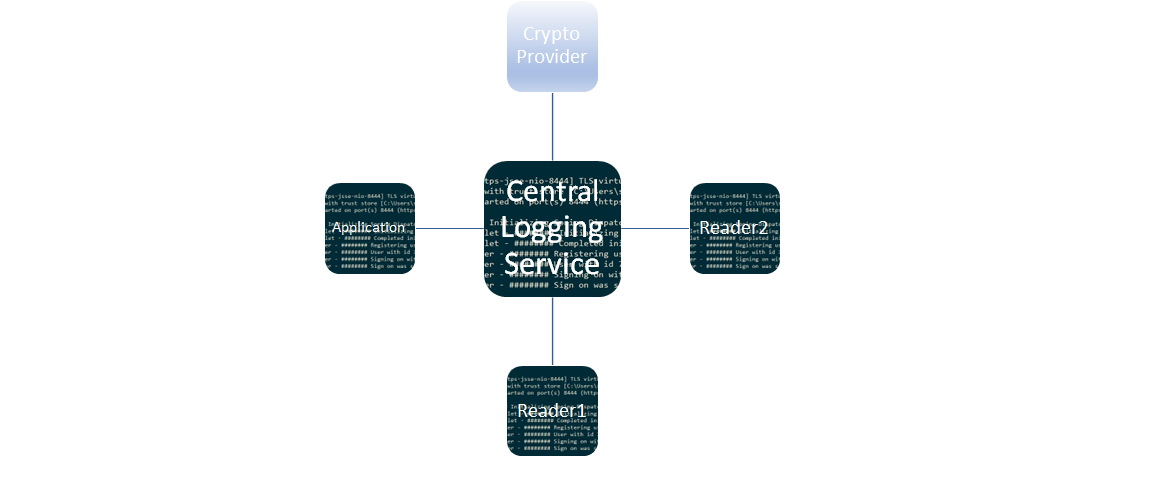

Architecture

Any solution that preserves system history always consists of an application that logs and a few readers that read the logs. Should the details be written to a text file, there exists hundreds of client applications such as Tail, less, or Notepad to access the logs. Other readers/viewers, such as Splunk and ELK, use sophisticated data transfer, processing, and storage functionalities.

Any time data must be encrypted, an additional component is required to manage and perform this securely. This post refers to this system as the crypto provider. This crypto provider typically abstracts other functionalities and hardware, such as Cryptographic Operations, Key Management, and Hardware Security Modules.

It is an unfortunate truth that any store-bought solution never entirely fulfills a business’ requirements and might have to be put behind another application that will amend and/or add functionalities. In this write-up, this is referred to as the central logging service or façade.

From the above, it should be clear that the architecture can be depicted as follows:

The sample applications discussed below use Fortanix Data Security Manager as the crypto provider. It is versatile and offers Hardware Security Modules in the cloud, which can work out more economically. It also largely complies with the design principles described next.

Assign Rights to Groups, Not Individual Entities

One does not want to share the same account for reading and/or writing, as this can only be accomplished by sharing the password or key around. It is far better practice to add user/application accounts to a group and assign the rights to perform cryptographic operations to this group. Each entity then authenticates using its own credentials, and rights can be given or withdrawn as required by group addition or removal.
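The idea of attaching rights to groups rather than to individual accounts can be sketched as follows. This is a minimal in-memory model and not how any particular crypto provider implements it; the class `GroupRights`, its methods, and the group and entity names in the usage note are hypothetical.

```java
import java.util.Collections;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical model: rights belong to a group; entities gain them only through membership.
final class GroupRights {
    enum Right { ENCRYPT, DECRYPT }

    private final Map<String, Set<Right>> rightsByGroup = new ConcurrentHashMap<>();
    private final Map<String, Set<String>> membersByGroup = new ConcurrentHashMap<>();

    void grant(String group, Right right) {
        rightsByGroup.computeIfAbsent(group, g -> ConcurrentHashMap.newKeySet()).add(right);
    }

    void addMember(String group, String entity) {
        membersByGroup.computeIfAbsent(group, g -> ConcurrentHashMap.newKeySet()).add(entity);
    }

    // Withdrawing access is a membership change; no key or password has to be re-shared.
    void removeMember(String group, String entity) {
        Set<String> members = membersByGroup.get(group);
        if (members != null) {
            members.remove(entity);
        }
    }

    boolean isAllowed(String entity, Right right) {
        for (Map.Entry<String, Set<String>> group : membersByGroup.entrySet()) {
            Set<Right> rights = rightsByGroup.getOrDefault(group.getKey(), Collections.emptySet());
            if (group.getValue().contains(entity) && rights.contains(right)) {
                return true;
            }
        }
        return false;
    }
}
```

Granting `DECRYPT` to a group such as `log-readers` and adding an account to it is then enough to let that account decrypt with its own credentials; removing the account from the group revokes the right again.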

Indirect Referencing of Keys

It is bad practice to change code in multiple places every time a new key is rotated in. It is far better to abstract rotations away from the log reader and writer by referencing a key indirectly. When a key is rotated, a single change is then required: mapping the key alias to the ID of the new key.
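A minimal sketch of this indirection, assuming a hypothetical `KeyAliasRegistry` class. The alias and key IDs used in the usage note are made up for illustration and do not come from Fortanix DSM or the sample repositories.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical registry: log writers and readers refer to keys by alias only.
final class KeyAliasRegistry {
    private final Map<String, String> aliasToKeyId = new ConcurrentHashMap<>();

    // Called once per rotation; code that uses the alias does not change.
    void rotate(String alias, String newKeyId) {
        aliasToKeyId.put(alias, newKeyId);
    }

    // Resolves the alias to whichever key ID is current at call time.
    Optional<String> resolve(String alias) {
        return Optional.ofNullable(aliasToKeyId.get(alias));
    }
}
```

After `rotate("email-key", "key-id-002")`, every caller that resolves `email-key` picks up the new key ID without a code change.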

A Format Preserving Key for Each Type of Secret

It is possible to use one key to encrypt all data types. However, since there is then no way to distinguish the words that require decryption from those that do not, a mechanism would have to be coded to keep track of the words that were encrypted. A better way is to use a key for each type and to preserve the format after encryption. A regex can differentiate between the various types, such as email addresses, passport numbers, and normal words, and each regex pattern can be associated with the correct key to use. Format should be preserved so that this mechanism also works in reverse during decryption.
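The pattern-to-key association could look roughly like this. The class `SecretTypeClassifier` is hypothetical and the two regular expressions are deliberately simplified assumptions; only the key names `EMAIL_KEY` and `ID_NR_KEY` are taken from the sample code shown later in this post.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Pattern;

// Hypothetical classifier: each type of secret has a pattern and the alias of its key.
final class SecretTypeClassifier {
    private final Map<Pattern, String> patternToKeyAlias = new LinkedHashMap<>();

    SecretTypeClassifier() {
        // Simplified patterns for illustration only; real validation is stricter.
        patternToKeyAlias.put(Pattern.compile("[^@\\s]+@[^@\\s]+\\.[^@\\s]+"), "EMAIL_KEY");
        patternToKeyAlias.put(Pattern.compile("\\d{13}"), "ID_NR_KEY");
    }

    // Returns the key alias for a token, or empty for a normal word.
    Optional<String> keyAliasFor(String token) {
        for (Map.Entry<Pattern, String> entry : patternToKeyAlias.entrySet()) {
            if (entry.getKey().matcher(token).matches()) {
                return Optional.of(entry.getValue());
            }
        }
        return Optional.empty();
    }
}
```

Because the ciphertext keeps the shape of the plaintext, the same lookup selects the same key for an encrypted token, which is what lets decryption reuse the mechanism unchanged.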

Caching and Centralization

As always, certain things will have to be cached to reduce the time spent on calls to the endpoints exposed by the crypto provider.

The first thing that may require caching is the mapping from a key alias to its key ID. Calling the crypto provider every time to determine the ID of a key increases processing time unnecessarily.

Typically, there will be a call to sign into an entity’s account, followed by a subsequent call to retrieve the relevant group’s access token. Therefore, the second thing that may require caching is any access/bearer token associated with the security group. A short-lived session key that is shared by the writer and reader(s) can be used to retrieve the access token from the cache. A periodic refresh of the session key can be enforced by frequently evicting entries from the cache once they reach their time of expiration. This may also increase security, since the actual token is not shared with all and sundry, and access to it can be monitored in one central place.
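The expiry behaviour described above can be sketched with a small in-memory cache. This is an illustrative stand-in, not the shared cache used by the sample implementation; the class `ExpiringTokenCache` and the injectable clock are assumptions made so that expiry can be demonstrated and tested.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;

// Hypothetical cache: tokens are looked up by session key and expire after a fixed time to live.
final class ExpiringTokenCache {
    private static final class Entry {
        final String token;
        final long expiresAtMillis;

        Entry(String token, long expiresAtMillis) {
            this.token = token;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> entries = new ConcurrentHashMap<>();
    private final long timeToLiveMillis;
    private final LongSupplier clock; // e.g. System::currentTimeMillis in production

    ExpiringTokenCache(long timeToLiveMillis, LongSupplier clock) {
        this.timeToLiveMillis = timeToLiveMillis;
        this.clock = clock;
    }

    void put(String sessionKey, String token) {
        entries.put(sessionKey, new Entry(token, clock.getAsLong() + timeToLiveMillis));
    }

    // Returns the token only while the entry is fresh; an expired entry is evicted on access.
    Optional<String> get(String sessionKey) {
        Entry entry = entries.get(sessionKey);
        if (entry == null) {
            return Optional.empty();
        }
        if (clock.getAsLong() >= entry.expiresAtMillis) {
            entries.remove(sessionKey, entry);
            return Optional.empty();
        }
        return Optional.of(entry.token);
    }
}
```

Once an entry has expired, the caller has to authenticate again and obtain a fresh session key, which is the periodic refresh the paragraph refers to.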

Encryption or decryption failures can arise due to key rotations or the changing of an access token at the Crypto Provider. Due to this, recovery from cryptographic failure should always be attempted at least once by the clearing of the cache, followed by another attempt at the operation in question.
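The recovery rule, clear the cache and try exactly once more, can be captured in a small helper. The class `RetryOnceAfterCacheClear` is hypothetical; the sample implementation shown later achieves the same effect with a recursive call instead.

```java
import java.util.function.Supplier;

// Hypothetical helper: run an operation and, on failure, clear the cache and try once more.
final class RetryOnceAfterCacheClear {
    private RetryOnceAfterCacheClear() {
    }

    static <T> T call(Supplier<T> operation, Runnable clearCache) {
        try {
            return operation.get();
        } catch (RuntimeException firstFailure) {
            clearCache.run();       // cached key IDs or tokens may be stale
            return operation.get(); // a second failure propagates to the caller
        }
    }
}
```

Limiting the recovery to a single retry keeps a genuinely failing crypto provider from causing an endless loop.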

Implementation

The sample implementation utilizes four applications that are available on GitHub as follows:

| Application | URL |
| --- | --- |
| Façade between clients and Crypto provider | |
| Secured Message Factory for Log4J2 | |
| Sample reader | |
| Sample application that illustrates integration of secured logger | |

Writing From Client

The application ac-slogger has to be compiled and packaged into a JAR for inclusion on the classpath of the application that logs to the file.

Log4J2 was used to demonstrate the encryption of sensitive material due to the simplicity of extending it to use a different implementation of the MessageFactory interface:

    public Message newMessage(String message) {
        String providerPassword = System.getProperty("cryptoProviderPassword");
        String providerUsername = System.getProperty("cryptoProviderUsername");
        EncryptionRequestBean request = EncryptionRequestBean.builder()
                .email(providerUsername)
                .password(providerPassword)
                .appId("f2aac19d-b464-4c04-979e-af1937399f5c")
                .alg("AES")
                .mode("FPE")
                .accountId("85542a03-1574-4e9a-a2ae-587ab161465c")
                .sessionId(sessionId)
                .plain(message).build();
        EncryptionResponseBean response = client.encrypt(request);
        sessionId = response.getSessionId();
        return new ParameterizedMessage("######## " + response.getCipherText());
    }

In this snippet, one can see how the central logging service is called to encrypt the qualifying words of the message that was passed into Log4J.

Access to the central component is established using an entity’s username and password. Both are passed in as Java system properties (-D options) on the command line. The custom MessageFactory extension is activated by registering it through an environment variable:

    LOG4J_MESSAGE_FACTORY=za.co.s2c.ac.slogger.TokenizingMessageFactory

Reading From Client

A simple console application demonstrates unlocking the encrypted content by reading lines from the standard input. Common Linux diagnostic tools such as less, tail, and grep can therefore pipe content into the application.

The relevant code is:

    Scanner scanner = new Scanner(System.in);
    // hasNextLine() pairs with nextLine(); hasNext() would skip over blank lines
    while (scanner.hasNextLine()) {
        String next = scanner.nextLine();
        if (next.startsWith(":q"))
            break;
        DecryptionRequestBean request = DecryptionRequestBean.builder()
                .email(username)
                .password(password)
                .appId("f2aac19d-b464-4c04-979e-af1937399f5c")
                .alg("AES")
                .mode("FPE")
                .accountId("85542a03-1574-4e9a-a2ae-587ab161465c")
                .sessionId(sessionId).cipher(next).build();
        DecryptionResponseBean response = client.decrypt(request);
        sessionId = response.sessionId;
        System.out.println("# " + response.plain);
    }

The console input is sent line by line to the central service for decryption. The username and password of the user’s account must be provided on the command line.

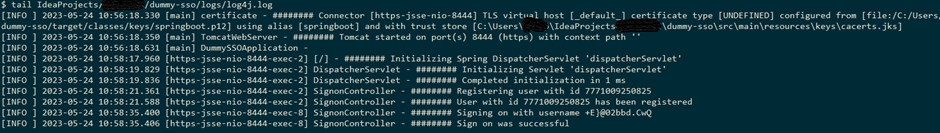

Two screenshots illustrate the usage of the log reader. Tailing the log, one can see encrypted South African ID numbers (consisting of 13 numbers) and email addresses while the format has been preserved:

Note the entries arising from library/framework code appear intermixed with application entries as expected.

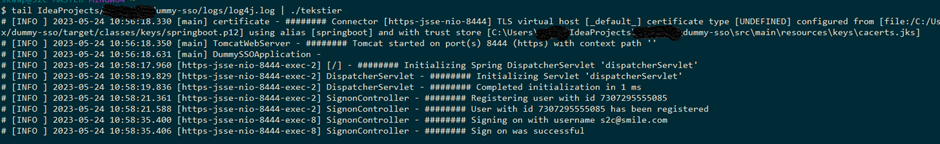

Piping the above into a batch file calling the decryption application results in the following:

The encrypted email address and ID number from higher up now appear in the clear.

Logging by Central Logging Service

The sample application is not much different from that of an API gateway in that it caches and enforces access controls.

The encryption and decryption of log entries are performed by two endpoints exposed inside the central logging component. Encryption and decryption are realized similarly. Hence, only the code for encryption is provided:

    StringTokenizer st = new StringTokenizer(request.getPlain());
    while (st.hasMoreElements()) {
        String toCheck = st.nextToken();
        String lastChars = "";
        // split off one trailing punctuation mark so that it does not defeat pattern matching
        char lastChar = toCheck.charAt(toCheck.length() - 1);
        if (lastChar == '.' || lastChar == ',' || lastChar == ';' || lastChar == ':') {
            toCheck = toCheck.substring(0, toCheck.length() - 1);
            lastChars = String.valueOf(lastChar);
        }
        if (EmailValidator.getInstance().isValid(toCheck)) {
            processedMessage.append(encryptToken(request, appSecret, EMAIL_KEY, toCheck)).append(lastChars);
        } else if (isIDNumber(toCheck)) {
            processedMessage.append(encryptToken(request, appSecret, ID_NR_KEY, toCheck)).append(lastChars);
        } else {
            // restore the punctuation here as well, otherwise it is lost for normal words
            processedMessage.append(toCheck).append(lastChars);
        }
        processedMessage.append(" ");
    }

The log entry is passed in as part of the request and is tokenized into words. Every word is checked to see whether it matches one of the patterns requiring encryption. If it does, the sensitive material is sent off to the Crypto Provider, specifying the key to encrypt under. Otherwise, it is left unencrypted. Lastly, the words are added back in sequence before being returned to Log4J to be written to the log. There probably are quicker ways to do this, but it is clear enough for the purpose of the demonstration.

It is, lastly, important to realize that since the format is preserved by encryption, decryption proceeds in the exact same fashion. Should format not be preserved, a mechanism must be put in place to track which words require decryption.

Hardening the Cache and Other Security Concerns

The first line of defense, as always, is to use SSL/TLS connections. Sensitive material, such as the password and the eventual session ID that gives access to the façade, should never travel in the clear. Therefore, the REST services of both the sample application and the central component are served over HTTPS. Furthermore, mechanisms are implemented to allow the use of the provided keys and self-signed certificates in local development environments.

The central logging component is a Spring Boot application. It was, therefore, a matter of adding the correct Hazelcast dependencies to gain access to a shared in-memory cache. Hazelcast, however, should be configured correctly to prevent unwanted and malicious applications from joining the cluster and thus gaining access to sensitive material. There are ample guides on the internet on how this is done.

This implementation caches key details and part of an access token:

- Key alias maps to the key ID

- Account password maps to the secret portion of the access token

Due to sensitivity, the caching of the secret portion has been further hardened by encrypting it with a one-time pad. The session key of the central component is longer than the length of the secret, so it can be safely used as the key.
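The length condition mentioned above can be made explicit in code. The following is a sketch under assumptions rather than the implementation used in the sample applications: the class `PadCodec` and its method names are hypothetical, and it rejects a pad that is shorter than the secret instead of repeating it.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper: XOR a secret with a pad that is at least as long as the secret.
final class PadCodec {
    private PadCodec() {
    }

    static String mask(String secret, String pad) {
        byte[] masked = xor(secret.getBytes(StandardCharsets.UTF_8), pad.getBytes(StandardCharsets.UTF_8));
        return Base64.getEncoder().encodeToString(masked);
    }

    static String unmask(String masked, String pad) {
        byte[] plain = xor(Base64.getDecoder().decode(masked), pad.getBytes(StandardCharsets.UTF_8));
        return new String(plain, StandardCharsets.UTF_8);
    }

    private static byte[] xor(byte[] data, byte[] pad) {
        if (pad.length < data.length) {
            // A shorter pad would have to repeat, which weakens the scheme.
            throw new IllegalArgumentException("pad must be at least as long as the data");
        }
        byte[] out = new byte[data.length];
        for (int i = 0; i < data.length; i++) {
            out[i] = (byte) (data[i] ^ pad[i]);
        }
        return out;
    }
}
```

Masking and unmasking with the same pad are inverse operations, so the cache only ever holds the masked value, and a reader needs the session key to recover the secret.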

The cache is cleared upon failure of a cryptographic operation. However, Hazelcast should also be configured to evict entries every hour or so. This added security allows for the crypto provider’s access token to be expired at longer intervals. This can lead to improvement in performance and operations, depending on how things are implemented at the crypto provider.

Relevant code snippets are:

    // SecureRandom instead of a time-seeded Random, since the session key also serves as the pad
    Random randomGenerator = new java.security.SecureRandom();

    @PostMapping("crypto/encrypt")
    public EncryptionResponse encrypt(@RequestBody EncryptionRequest request) {
        log.info("Encryption requested: " + request);
        String sessionId = password2PseudoAppSecret.get(request.getPassword()) != null ? request.getSessionId() : generateSessionKey();
        StringBuffer processedMessage = new StringBuffer();
        try {
            String appSecret = determineAppSecret(sessionId, request.getEmail(), request.getPassword(), request.getAccountId(), request.getAppId());

            // ****** Encryption code provided elsewhere ******

            // lastly only cache once sure that all worked out
            password2PseudoAppSecret.put(request.getPassword(), doOneTimePad(appSecret, sessionId));
        } catch (Throwable t) {
            if (password2PseudoAppSecret.get(request.getPassword()) == null) {
                // nothing to be done as full process did run without taking data from the cache
                log.error(t.getMessage(), t);
                log.error("Encryption failed.");
                throw t;
            }
            log.info("Clearing cache and trying encryption again.");
            // do it again in full in case cache was stale
            password2PseudoAppSecret.remove(request.getPassword());
            keyCache.clear();
            return encrypt(request);
        }
        EncryptionResponse response = EncryptionResponse.builder().sessionId(sessionId).cipherText(processedMessage.toString()).build();
        log.info("Encryption finished: " + response);
        return response;
    }

    // Takes the secret from the cache when present, otherwise fetches it from the crypto provider
    private String determineAppSecret(String sessionId,
                                      String email,
                                      String password,
                                      String accountId,
                                      String appId) {
        String appSecret = password2PseudoAppSecret.get(password);
        if (appSecret != null) {
            appSecret = decodePseudoAppCred(appSecret, sessionId);
        } else {
            AppCredentialBean appCredential = getAppApiKey(email, password, accountId, appId);
            appSecret = appCredential.getCredential().getSecret();
        }
        return appSecret;
    }

    private String doOneTimePad(String appSecret, String password) {
        byte[] out = xor(appSecret.getBytes(), password.getBytes());
        return new String(encoder.encode(out)).replaceAll("\\s", "");
    }

    private String decodePseudoAppCred(String appSecret, String key) {
        byte[] out = xor(decoder.decode(appSecret), key.getBytes());
        return new String(out);
    }

    private static byte[] xor(byte[] a, byte[] key) {
        byte[] out = new byte[a.length];
        for (int i = 0; i < a.length; i++) {
            out[i] = (byte) (a[i] ^ key[i % key.length]);
        }
        return out;
    }

Lastly, sensitive material such as passwords and the central service’s session ID should never be serialized or written to the log. In this implementation, Lombok assisted in achieving the immutability of beans with minimal code. Sensitive fields have therefore been annotated appropriately where needed:

    @lombok.ToString.Exclude
    private transient String password;

Final Thoughts

The above serves as an example of how to comply with privacy laws that forbid persisting sensitive data in the clear when preserving system history. It allows vetted users to decrypt using their own accounts instead of one shared account. Access can therefore be controlled by closing an account or removing it from a security group. Though things might work differently for other architectures, such as ELK and Splunk, the principles remain the same. Only the implementation will differ.

Since logging can be time-consuming, it should be done in a non-blocking fashion using non-blocking REST calls or event queues. Log4J2, as used here, should thus be run in asynchronous mode.

Should your security provider not adhere to some of the principles outlined above, you will have to compensate for the deficiency in the central component. Fortanix DSM, however, ticked most of the boxes and resulted in minimal code being written in this layer.

I am surprised how well things turned out, and all organizations out there should take a hard and serious look at how secure their preservation of system history really is. Security breaches will not go away, but regulators will increasingly lose patience!

Published at DZone with permission of Jan-Rudolph Bührmann. See the original article here.
