Telemetry Pipelines Workshop: Integrating Fluent Bit With OpenTelemetry, Part 1

Take a look at integrating Fluent Bit with OpenTelemetry for use cases where this connectivity in your telemetry data infrastructure is essential.

Are you ready to get started with cloud-native observability with telemetry pipelines?

This article is part of a series exploring a workshop guiding you through the open source project Fluent Bit, what it is, a basic installation, and setting up the first telemetry pipeline project. Learn how to manage your cloud-native data from source to destination using the telemetry pipeline phases covering collection, aggregation, transformation, and forwarding from any source to any destination.

In the previous article in this series, we looked at how to enable Fluent Bit features that will help avoid the telemetry data loss we saw when encountering backpressure. In this next article, we look at part one of integrating Fluent Bit with OpenTelemetry for use cases where this connectivity in your telemetry data infrastructure is essential.

You can find more details in the accompanying workshop lab.

There are many reasons why an organization might want to pass its telemetry pipeline output onwards to OpenTelemetry (OTel), specifically through an OpenTelemetry Collector. To facilitate this integration, Fluent Bit added support, starting with version 3.1, for converting its telemetry data into the OTel format.

In this article, we'll explore what Fluent Bit offers to convert pipeline data into the correct format for sending onwards to OTel.

Solution Architecture

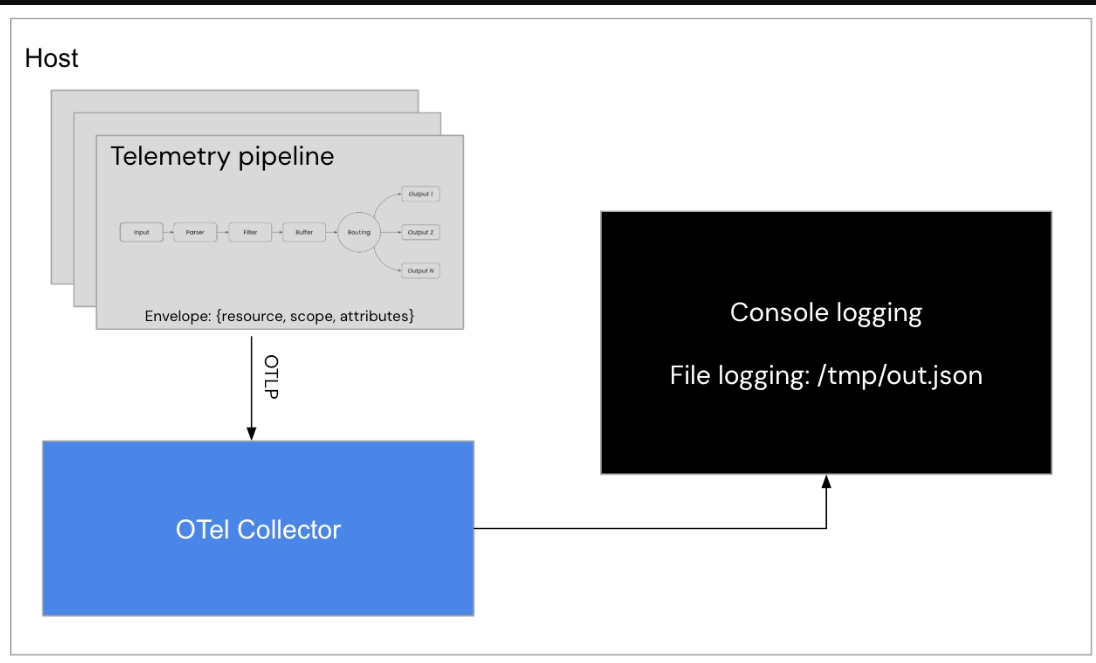

Before we dive into the technical solution, let's examine the architecture of what we are doing and the components involved. The following diagram is an architectural overview of what we are building. We can see the pipeline collecting log events and processing them into an OTel Envelope to satisfy the OTel schema (resource, scope, attributes), pushing to an OTel Collector, and finally, to both the collector's console output and onwards to a separate log file.

The solution will be implemented in a Fluent Bit configuration file using the YAML format. We will set up each phase of this solution one step at a time, testing it to ensure it's working as expected.

Initial Pipeline Configuration

We begin the configuration of our telemetry pipeline with the input phase, using a simple dummy plugin to generate sample log events, and an output section that prints them to the console. Our configuration file workshop-fb.yaml looks as follows:

    # This file is our workshop Fluent Bit configuration.
    #
    service:
      flush: 1
      log_level: info

    pipeline:

      # This entry generates a success message for the workshop.
      inputs:
        - name: dummy
          dummy: '{"service" : "backend", "log_entry" : "Generating a 200 success code."}'

      # This entry directs all tags (it matches any we encounter)
      # to print to standard output, which is our console.
      #
      outputs:
        - name: stdout
          match: '*'
          format: json_lines

(Note: All examples below run this solution using container images and feature Podman as the container tooling. Docker can also be used, but the specific differences are left for the reader to solve.)

Let's now test our configuration by running it using a container image. First, open a new file called Buildfile-fb in your favorite editor. It will be used to build a new container image and insert our configuration. Note that this file needs to be in the same directory as our configuration file; otherwise, adjust the file path names:

    FROM cr.fluentbit.io/fluent/fluent-bit:3.1.4

    COPY ./workshop-fb.yaml /fluent-bit/etc/workshop-fb.yaml

    CMD [ "fluent-bit", "-c", "/fluent-bit/etc/workshop-fb.yaml"]

Now we'll build a new container image, naming it with a version tag, as follows using the Buildfile-fb and assuming you are in the same directory:

    $ podman build -t workshop-fb:v12 -f Buildfile-fb

    STEP 1/3: FROM cr.fluentbit.io/fluent/fluent-bit:3.1.4
    STEP 2/3: COPY ./workshop-fb.yaml /fluent-bit/etc/workshop-fb.yaml
    --> b4ed3356a842
    STEP 3/3: CMD [ "fluent-bit", "-c", "/fluent-bit/etc/workshop-fb.yaml"]
    COMMIT workshop-fb:v12
    --> bcd69f8a85a0
    Successfully tagged localhost/workshop-fb:v12
    bcd69f8a85a024ac39604013bdf847131ddb06b1827aae91812b57479009e79a

Run the initial pipeline using this container command and note the following output. This runs until exiting using CTRL+C:

    $ podman run --rm workshop-fb:v12

    ...
    [2024/08/01 15:41:55] [ info] [input:dummy:dummy.0] initializing
    [2024/08/01 15:41:55] [ info] [input:dummy:dummy.0] storage_strategy='memory' (memory only)
    [2024/08/01 15:41:55] [ info] [output:stdout:stdout.0] worker #0 started
    [2024/08/01 15:41:55] [ info] [sp] stream processor started
    {"date":1722519715.919221,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519716.252186,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519716.583481,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519716.917044,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519717.250669,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519717.584412,"service":"backend","log_entry":"Generating a 200 success code."}
    ...
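As a small illustration of working with this output, the following Python sketch parses json_lines records and converts the `date` field, which holds epoch seconds, into a UTC timestamp. The function name `parse_json_lines` and the `sample` list are assumptions made for this example; they are not part of Fluent Bit or the workshop:

```python
import json
from datetime import datetime, timezone


def parse_json_lines(lines):
    """Return (utc_timestamp, fields) pairs for each JSON line; skip other lines."""
    events = []
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue  # Fluent Bit's own "[ info]" log lines are not JSON
        fields = json.loads(line)
        when = datetime.fromtimestamp(fields.pop("date"), tz=timezone.utc)
        events.append((when, fields))
    return events


# Two lines copied from the console output above, plus one non-JSON log line.
sample = [
    "[2024/08/01 15:41:55] [ info] [sp] stream processor started",
    '{"date":1722519715.919221,"service":"backend","log_entry":"Generating a 200 success code."}',
    '{"date":1722519716.252186,"service":"backend","log_entry":"Generating a 200 success code."}',
]

events = parse_json_lines(sample)
for when, fields in events:
    print(when.isoformat(), fields["service"], fields["log_entry"])
```

The same function could be fed from a pipe or a saved log file, since it only needs an iterable of text lines.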

So far, we are ingesting logs but just passing them through the pipeline to output in JSON format. After ingesting log events, we need to process them within the pipeline into an OTel-compatible schema before we can push them onward to an OTel Collector. Fluent Bit v3.1+ offers a processor to put our logs into an OTel envelope.
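To make the target shape more concrete, here is a rough Python sketch of the resource, scope, and log record hierarchy used by the OTLP JSON encoding for logs. It is not how Fluent Bit implements its processor. The helper names `to_any_value` and `wrap_in_envelope`, the choice of `log_entry` as the body, and the `service.name` and `workshop` values are all assumptions made for illustration:

```python
import json


def to_any_value(value):
    """Wrap a value in the OTLP JSON AnyValue form (string values only here)."""
    return {"stringValue": str(value)}


def wrap_in_envelope(records, resource_attrs=None, scope_name=""):
    """Nest flat log records under a single resource and a single scope."""
    resource_attrs = resource_attrs or {}
    log_records = []
    for record in records:
        fields = dict(record)  # copy so the caller's record is left untouched
        seconds = fields.pop("date")
        log_records.append({
            # Nanoseconds as a string; float input limits the real precision.
            "timeUnixNano": str(int(seconds * 1_000_000_000)),
            "body": to_any_value(fields.pop("log_entry", "")),
            "attributes": [
                {"key": key, "value": to_any_value(val)}
                for key, val in fields.items()
            ],
        })
    return {
        "resourceLogs": [{
            "resource": {"attributes": [
                {"key": key, "value": to_any_value(val)}
                for key, val in resource_attrs.items()
            ]},
            "scopeLogs": [{
                "scope": {"name": scope_name},
                "logRecords": log_records,
            }],
        }]
    }


event = {"date": 1722519715.919221, "service": "backend",
         "log_entry": "Generating a 200 success code."}
envelope = wrap_in_envelope([event],
                            resource_attrs={"service.name": "backend"},
                            scope_name="workshop")
print(json.dumps(envelope, indent=2))
```

The point of the sketch is the nesting: one resource holds one or more scopes, and each scope holds the individual log records with their attributes.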

Processing Logs for OTel

The OTel Envelope is how we are going to process log events into the correct format (resource, scope, attributes). Let's add this to our workshop-fb.yaml file and explore the changes to our log events. The processors section of our configuration needs an entry for logs. Fluent Bit provides a simple processor called opentelemetry_envelope, which is added as follows, nested under our dummy input:

    # This file is our workshop Fluent Bit configuration.
    #
    service:
      flush: 1
      log_level: info

    pipeline:

      # This entry generates a success message for the workshop.
      inputs:
        - name: dummy
          dummy: '{"service" : "backend", "log_entry" : "Generating a 200 success code."}'

          processors:
            logs:
              - name: opentelemetry_envelope

      # This entry directs all tags (it matches any we encounter)
      # to print to standard output, which is our console.
      #
      outputs:
        - name: stdout
          match: '*'
          format: json_lines

Let's now try testing our configuration by running it using a container image. Build a new container image, naming it with a version tag as follows using the Buildfile-fb, and assuming you are in the same directory:

    $ podman build -t workshop-fb:v13 -f Buildfile-fb

    STEP 1/3: FROM cr.fluentbit.io/fluent/fluent-bit:3.1.4
    STEP 2/3: COPY ./workshop-fb.yaml /fluent-bit/etc/workshop-fb.yaml
    --> b4ed3356a842
    STEP 3/3: CMD [ "fluent-bit", "-c", "/fluent-bit/etc/workshop-fb.yaml"]
    COMMIT workshop-fb:v13
    --> bcd69f8a85a0
    Successfully tagged localhost/workshop-fb:v13
    bcd69f8a85a024ac39604013bdf847131ddb06b1827aae91812b57479009euyt

Run the updated pipeline using this container command and note that each log event is now surrounded by entries carrying the resource and scope fields. This runs until exiting using CTRL+C:

    $ podman run --rm workshop-fb:v13

    ...
    [2024/08/01 16:26:52] [ info] [input:dummy:dummy.0] initializing
    [2024/08/01 16:26:52] [ info] [input:dummy:dummy.0] storage_strategy='memory' (memory only)
    [2024/08/01 16:26:52] [ info] [output:stdout:stdout.0] worker #0 started
    [2024/08/01 16:26:52] [ info] [sp] stream processor started
    {"date":4294967295.0,"resource":{},"scope":{}}
    {"date":1722522413.113665,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":4294967294.0}
    {"date":4294967295.0,"resource":{},"scope":{}}
    {"date":1722522414.113558,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":4294967294.0}
    {"date":4294967295.0,"resource":{},"scope":{}}
    {"date":1722522415.11368,"service":"backend","log_entry":"Generating a 200 success code."}
    ...
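One way to read this output: each log event appears between an entry dated 4294967295 (which is 2^32 - 1) that carries `resource` and `scope`, and an entry dated 4294967294 (2^32 - 2) with no other fields. Treating these two values as "group start" and "group end" markers is an interpretation of the output shown here, and the Python sketch below regroups the lines under that assumption. The names `GROUP_START`, `GROUP_END`, and `group_events` are hypothetical and chosen for this example:

```python
import json

# Marker values as they appear in the stdout output above. Reading them as
# "group start" and "group end" is an assumption made for this sketch.
GROUP_START = 4294967295.0
GROUP_END = 4294967294.0


def group_events(lines):
    """Collect log records under the resource/scope entry that precedes them."""
    groups = []
    current = None
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue  # skip non-JSON lines such as "[ info]" messages or "..."
        event = json.loads(line)
        if event["date"] == GROUP_START:
            current = {"resource": event.get("resource", {}),
                       "scope": event.get("scope", {}),
                       "records": []}
            groups.append(current)
        elif event["date"] == GROUP_END:
            current = None
        elif current is not None:
            current["records"].append(event)
        else:
            # A record seen outside any markers is kept as its own group.
            groups.append({"resource": None, "scope": None, "records": [event]})
    return groups


sample = """\
{"date":4294967295.0,"resource":{},"scope":{}}
{"date":1722522413.113665,"service":"backend","log_entry":"Generating a 200 success code."}
{"date":4294967294.0}
{"date":4294967295.0,"resource":{},"scope":{}}
{"date":1722522414.113558,"service":"backend","log_entry":"Generating a 200 success code."}
{"date":4294967294.0}
""".splitlines()

groups = group_events(sample)
for group in groups:
    print(group["resource"], group["scope"], len(group["records"]))
```

In this run the `resource` and `scope` objects are empty because the configuration does not set any values for them.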

The work up to now has been focused on our telemetry pipeline with Fluent Bit. We configured log event ingestion and processing into an OTel Envelope, and the telemetry data is now ready to push onward.

The next step will be pushing our telemetry data to an OTel Collector. This completes the first part of the solution for our use case in this article. Be sure to explore this hands-on experience with the accompanying workshop lab linked earlier in the article.

What's Next?

This article walked us through integrating Fluent Bit with OpenTelemetry up to the point where we have transformed our log data into the OTel Envelope format. In the next article, we'll explore part two, where we push the telemetry data from Fluent Bit to OpenTelemetry, completing this integration solution.

Stay tuned for more hands-on material to help you with your cloud-native observability journey.

Published at DZone with permission of Eric D. Schabell, DZone MVB. See the original article here.
