Step-By-Step Guide To Enable JSON Logging on OpenShift

Parsing JSON logs lets you send an application's logs to a custom index in Elasticsearch. Learn how to enable JSON logging on Red Hat OpenShift 4.

OpenShift Logging’s API enables you to parse JSON logs into a structured object and forward them to either OpenShift Logging-managed Elasticsearch or any other third-party system.

Prerequisites

You will need to install the following technologies before beginning this exercise:

Sample application printing the logs in JSON format

Deploy Sample Application

Deploy the sample application in the demo namespace. Below is the log for the application:

{"Thread":"Camel (MyCamel) thread #1 - timer://foo", "Level":"INFO ", "Logger":"simple-route" , "Message":">>> 957"}
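If you do not have a JSON-logging application at hand, a small stand-in can produce entries of the same shape. The following is a minimal sketch in Python, not the Camel application used in this article; the class name `JsonFormatter` and the helper `build_logger` are hypothetical, and the key names simply mirror the sample log line above.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        # Key names mirror the sample entry: Thread, Level, Logger, Message
        return json.dumps({
            "Thread": record.threadName,
            "Level": record.levelname,
            "Logger": record.name,
            "Message": record.getMessage(),
        })


def build_logger(name: str = "simple-route") -> logging.Logger:
    """Create a logger that writes JSON lines to stdout (hypothetical helper)."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    build_logger().info(">>> 957")
```

Writing one JSON object per line to stdout matters here, because the cluster's log collector reads container output line by line.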

Normally, the ClusterLogForwarder custom resource (CR) forwards that log entry in the message field. The message field contains the JSON-quoted string equivalent of the JSON log entry, as shown in the following example.

{"message":"{\"Thread\":\"Camel (MyCamel) thread #1 - timer://foo\", \"Level\":\"INFO \", \"Logger\":\"simple-route\" , \"Message\":\">>> 957\"}"}

After JSON parsing is enabled, the parsed log entry appears in a structured field, as shown in the following example. The original message field is not modified.

{"structured":{"Thread":"Camel (MyCamel) thread #1 - timer://foo", "Level":"INFO ", "Logger":"simple-route" , "Message":">>> 957"}}
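The difference between the two forms can be illustrated with a few lines of Python. This is only a conceptual sketch of the transformation, not the collector's actual implementation; the function name `parse_json_message` is hypothetical.

```python
import json


def parse_json_message(record: dict) -> dict:
    """Return a copy of record with a 'structured' field added when
    'message' holds a JSON object. The 'message' field is left as-is."""
    enriched = dict(record)
    try:
        parsed = json.loads(record.get("message", ""))
    except (TypeError, ValueError):
        return enriched  # message is not valid JSON: nothing to add
    if isinstance(parsed, dict):
        enriched["structured"] = parsed
    return enriched


if __name__ == "__main__":
    app_log = {
        "Thread": "Camel (MyCamel) thread #1 - timer://foo",
        "Level": "INFO ",
        "Logger": "simple-route",
        "Message": ">>> 957",
    }
    # Without parsing, the entry travels as a JSON-quoted string
    forwarded = {"message": json.dumps(app_log)}
    print(json.dumps(parse_json_message(forwarded), indent=2))
```

A record whose message is plain text comes back unchanged, which matches the idea that parsing only adds information and never rewrites the original message.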

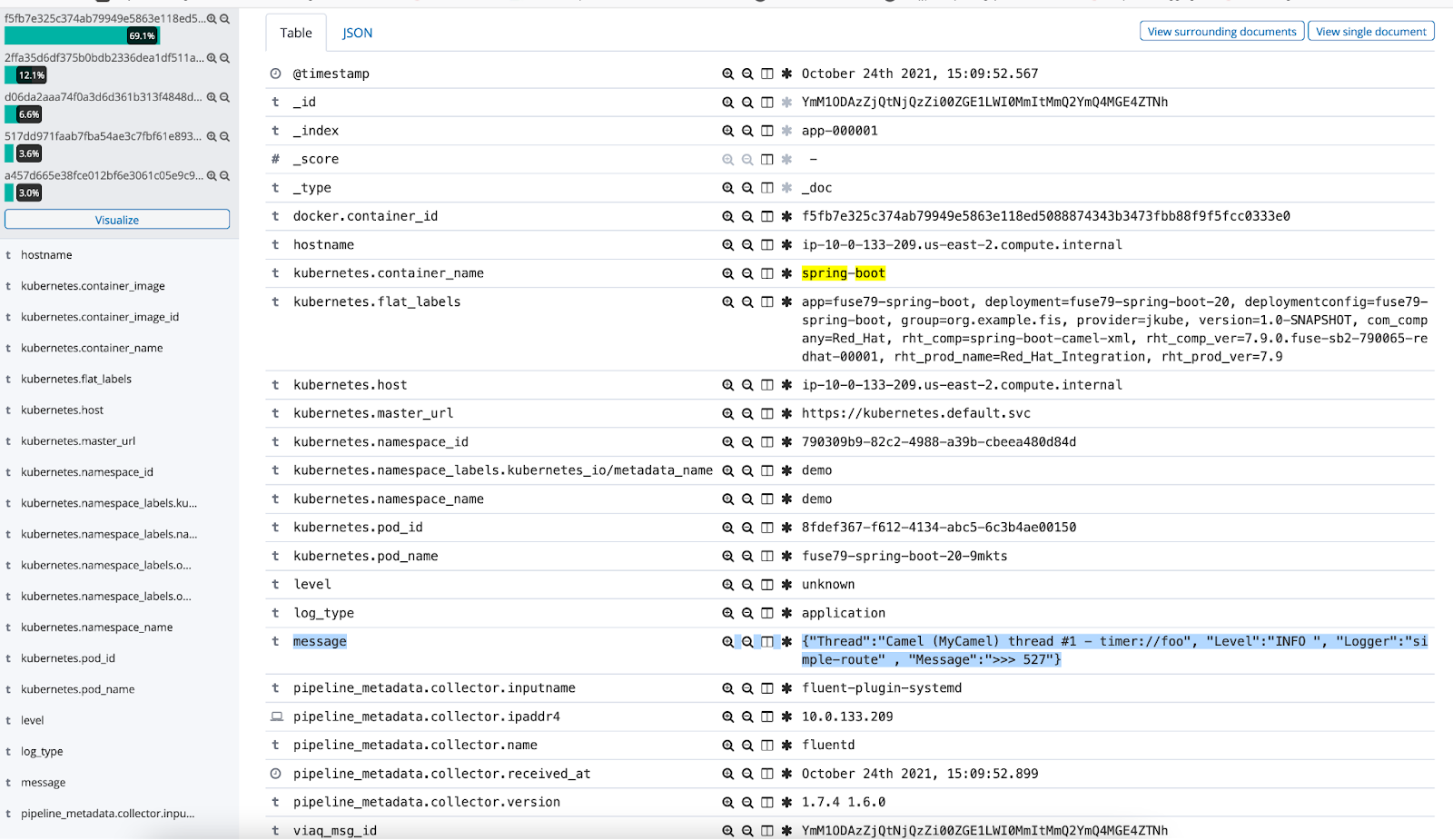

Verify the Logs in Kibana

Before JSON parsing is enabled, each application log entry appears as a single string in the message field.

Enable JSON Logging

Enable JSON parsing with the ClusterLogForwarder CR. Below is a sample CR that enables JSON parsing for applications deployed in the demo namespace.

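    apiVersion: logging.openshift.io/v1
    kind: ClusterLogForwarder
    metadata:
      name: instance
      namespace: openshift-logging
    spec:
      inputs:
      # Select application logs from the demo namespace only
      - application:
          namespaces:
          - demo
        name: input-demo
      outputDefaults:
        elasticsearch:
          # Record field whose value is used to build the index name
          structuredTypeKey: kubernetes.namespace_name
          # Fallback name used when the key above is missing
          structuredTypeName: demo-index-name
      pipelines:
      # Parse JSON for the demo input and send it to the default log store
      - inputRefs:
        - input-demo
        name: pipeline-a
        outputRefs:
        - default
        parse: json
      # Forward infrastructure, application, and audit logs unparsed
      - inputRefs:
        - infrastructure
        - application
        - audit
        outputRefs:
        - default

Refer to the documentation for details on these parameters and other options.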
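To see how structuredTypeKey and structuredTypeName interact, the following Python sketch models the index selection. It is an illustration under assumptions, not the forwarder's code: the `app-<type>-write` naming pattern is taken from my reading of the OpenShift Logging documentation and should be verified against your version, and the helpers `lookup` and `resolve_index` are hypothetical.

```python
def lookup(record: dict, dotted_key: str):
    """Walk a dotted path such as 'kubernetes.namespace_name' through nested dicts."""
    value = record
    for part in dotted_key.split("."):
        if not isinstance(value, dict) or part not in value:
            return None
        value = value[part]
    return value


def resolve_index(record: dict, type_key: str = None, type_name: str = None):
    """Pick the structured index name: the key's value wins, the name is the fallback."""
    value = lookup(record, type_key) if type_key else None
    structured_type = value or type_name
    # Assumed naming pattern: app-<type>-write
    return f"app-{structured_type}-write" if structured_type else None


if __name__ == "__main__":
    record = {"kubernetes": {"namespace_name": "demo"}}
    print(resolve_index(record, "kubernetes.namespace_name", "demo-index-name"))
```

With the sample CR, a record from the demo namespace resolves through the key, and structuredTypeName only comes into play when the record carries no value for that key.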

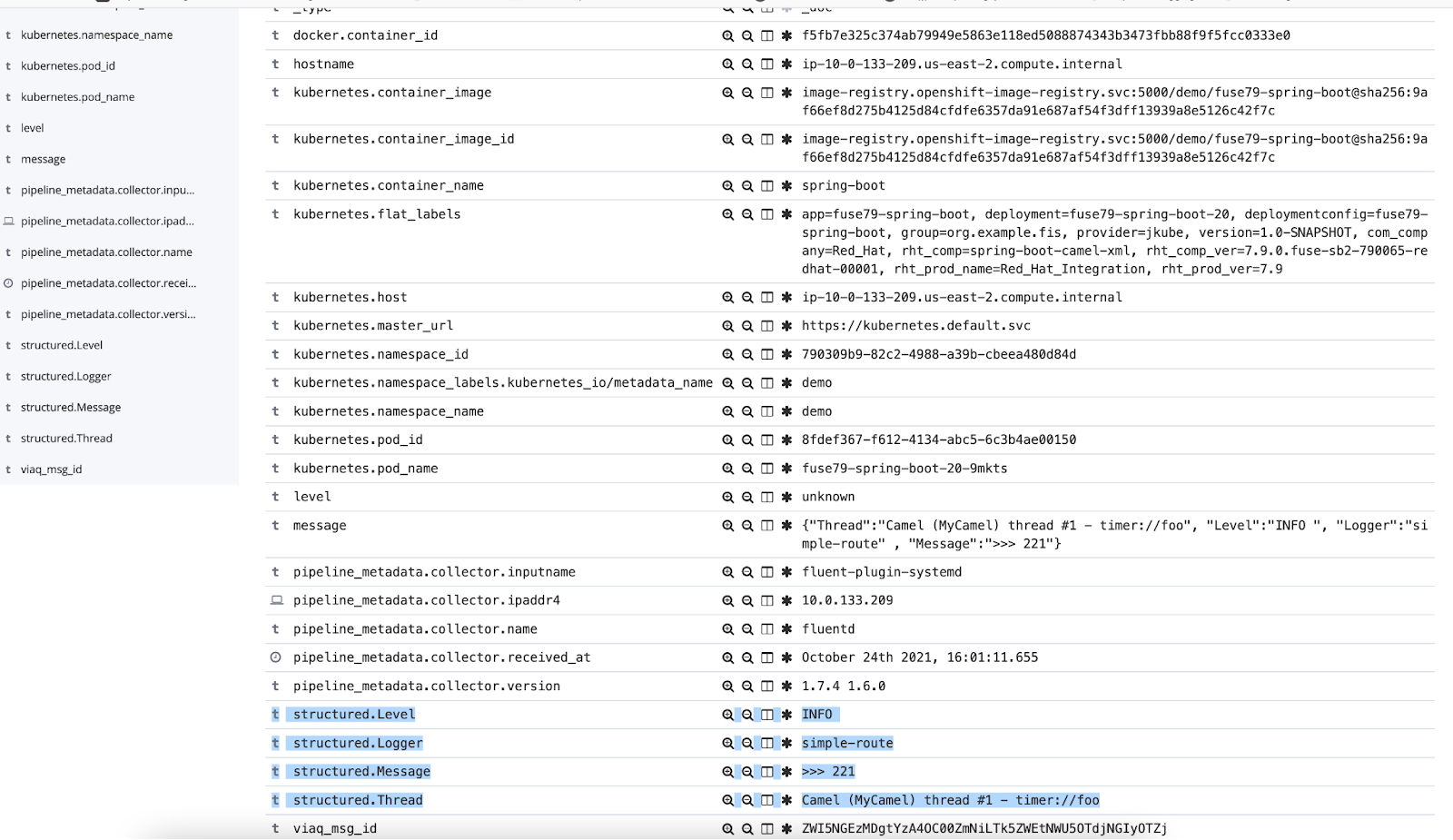

Verify the Logs in Kibana

After JSON parsing is enabled, Fluentd creates a separate index for the demo application and parses the logs into additional structured fields.

#Application Log

{"Thread":"Camel (MyCamel) thread #1 - timer://foo", "Level":"INFO ", "Logger":"simple-route" , "Message":">>> 221"}

#Kibana

Additional structured fields are created based on the logs.

structured.Level: INFO

structured.Logger: simple-route

structured.Message: >>> 221

structured.Thread: Camel (MyCamel) thread #1 - timer://foo
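The dotted field names shown in Kibana correspond to the nested keys of the structured object. A minimal Python sketch of that mapping follows; the function name `flatten` is hypothetical, and the code only illustrates the naming, not how Elasticsearch or Kibana work internally.

```python
def flatten(obj: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into dotted field names, e.g. structured.Level."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        else:
            flat[name] = value
    return flat


if __name__ == "__main__":
    doc = {
        "structured": {
            "Thread": "Camel (MyCamel) thread #1 - timer://foo",
            "Level": "INFO ",
            "Logger": "simple-route",
            "Message": ">>> 221",
        }
    }
    for field, value in sorted(flatten(doc).items()):
        print(field, "=", value)
```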

Conclusion

Thanks for reading! I hope this article helps you get started with JSON logging on OpenShift.
