Migration of Microservice Applications From WebLogic to OpenShift

Learn from our experience with the seamless migration of Spring Boot microservices from WebLogic to OpenShift, and from our practices for running them in parallel on both platforms.


The need for an environment in which software applications can be put into service is as old as software development itself. As the business side of software changes, technology, CI/CD practices, usage scenarios, and operational expectations change and improve as well, and with them the environmental practices that allow software to serve.

In this article, we discuss our experience with the seamless migration of Spring Boot (version 2.5.6) microservice applications from Oracle WebLogic to Red Hat OpenShift Container Platform. We also discuss the practices we used to make running the applications on both platforms in parallel easier and safer.

During the initial installation phase, operational restrictions prevented us from deploying our microservices to OpenShift. Later, growing production usage and the resulting need for a horizontally scalable environment led us to resolve those restrictions and migrate our microservices from WebLogic to OpenShift.

Because we were migrating applications that were live in production (currently 30 microservices), running the WebLogic and OpenShift environments in parallel was a must. Profiles and environment variables made this much easier. During the parallel run, network traffic across the two environments was managed on Apache and Nginx, which is beyond the scope of this article.

Parallel Run: Using Profiles

While running our applications in parallel on Oracle WebLogic and OpenShift, we use the following profiles:

- Spring Boot profiles

- Maven profiles

- Logback profiles

Spring Boot Profiles

Because our applications' runtime needs (such as DataSource configurations) are met differently on each platform, Spring Boot profiles solve this problem well. We use two profiles, openshift and weblogic, for the OpenShift and WebLogic environments respectively. In our application (or bootstrap) YAML files, each profile gets its own YAML document: documents are separated by triple dashes (---), and each document is bound to its profile with spring.config.activate.on-profile. Here is a sample application.yaml file:

spring:
  config:
    activate:
      on-profile: weblogic
  application:
    name: my-service
management:
  endpoints:
    web:
      exposure:
        include: "*"
server:
  port: 8082
  servlet:
    context-path: /my-service
---
spring:
  config:
    activate:
      on-profile: openshift
  application:
    name: my-service
management:
  endpoints:
    web:
      exposure:
        include: "*"

We'll cover some uses of these profiles in the following sections.
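To make the activation rule behind this YAML concrete, here is a simplified model of it in plain Java. It is a sketch, not Spring Boot's implementation: it assumes the YAML has already been flattened into dotted keys, treats on-profile as a single profile name (Spring also accepts profile expressions), and applies a document when it has no on-profile key or when that key names an active profile, with later documents overriding earlier ones. The class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Simplified model (NOT Spring Boot's real implementation) of how a
// multi-document application.yaml is resolved for a set of active profiles.
public class ProfileDocuments {

    public static final String ON_PROFILE = "spring.config.activate.on-profile";

    public static Map<String, String> resolve(List<Map<String, String>> documents,
                                              Set<String> activeProfiles) {
        Map<String, String> merged = new LinkedHashMap<>();
        for (Map<String, String> doc : documents) {
            String onProfile = doc.get(ON_PROFILE);
            // Documents without on-profile always apply; others only for their profile.
            if (onProfile == null || activeProfiles.contains(onProfile)) {
                merged.putAll(doc); // later documents win on duplicate keys
            }
        }
        merged.remove(ON_PROFILE); // activation metadata, not an application setting
        return merged;
    }

    public static void main(String[] args) {
        // Flattened keys of the two documents in the sample application.yaml.
        List<Map<String, String>> docs = List.of(
                Map.of(ON_PROFILE, "weblogic",
                        "spring.application.name", "my-service",
                        "management.endpoints.web.exposure.include", "*",
                        "server.port", "8082",
                        "server.servlet.context-path", "/my-service"),
                Map.of(ON_PROFILE, "openshift",
                        "spring.application.name", "my-service",
                        "management.endpoints.web.exposure.include", "*"));

        System.out.println(resolve(docs, Set.of("weblogic")).get("server.port"));  // 8082
        System.out.println(resolve(docs, Set.of("openshift")).get("server.port")); // null
    }
}
```

In the openshift case no document sets server.port, so the embedded server falls back to Spring Boot's default port, 8080.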

Maven Profiles

Like runtime needs, build-time needs also differ between environments. For instance, we deploy WAR files to WebLogic, whereas in OpenShift we run an executable JAR with java -jar. We handle this difference by declaring a separate profile for each environment in pom.xml. For our needs, each profile sets a different packaging format in the project.packaging property. Here is the profile declaration section of a sample pom.xml file:

...
<profiles>
    <profile>
        <id>weblogic</id>
        <activation>
            <activeByDefault>true</activeByDefault>
        </activation>
        <properties>
            <project.packaging>war</project.packaging>
        </properties>
    </profile>
    <profile>
        <id>openshift</id>
        <properties>
            <project.packaging>jar</project.packaging>
        </properties>
    </profile>
</profiles>

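...

Because the weblogic profile is marked activeByDefault, Maven applies it to every build in which no other profile from the same POM is activated, so an OpenShift build has to activate the openshift profile explicitly, for example with mvn package -Popenshift. For debugging, it is handy to bind the active-profiles goal of the maven-help-plugin to a build phase, as in the following pom.xml excerpt: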

<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-help-plugin</artifactId>
    <version>3.2.0</version>
    <executions>
        <execution>
            <id>show-profiles</id>
            <phase>compile</phase>
            <goals>
                <goal>active-profiles</goal>
            </goals>
        </execution>
    </executions>
</plugin>

Logback Profiles

We use Logback for logging. Strictly speaking, Logback has no profiles of the kind we use here: the "profile" is the springProfile element, which Spring Boot's Logback extension provides so that logging behavior can vary with the active Spring profile. In our scenario, those profiles correspond to the different application container environments. We achieve this by putting a logback-spring.xml file under the resources directory of every Spring Boot project. The file must be named logback-spring.xml rather than logback.xml, because Spring Boot's extensions such as springProfile are not available in a plain logback.xml. Its contents are almost identical across our microservice applications.

<?xml version="1.0" encoding="UTF-8"?>
<configuration scan="true" scanPeriod="3 seconds">
    <springProfile name="weblogic">
        <include file="${DOMAIN_HOME}/config/logconfig/my-service/logback-included.xml"/>
    </springProfile>
    <springProfile name="openshift">
        <include file="${LOG_CONFIG_PATH}/logback-included.xml"/>
    </springProfile>
</configuration>

Here, each Spring profile includes a different file, which keeps hard-coded logging configuration to a minimum.
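Because each profile's include path depends on a different variable (DOMAIN_HOME on WebLogic, LOG_CONFIG_PATH on OpenShift), a missing variable is easy to overlook during a parallel run. The sketch below is a hypothetical helper, not part of Logback or Spring, that mirrors the two include paths so a unit test or startup check can verify them. It assumes the variables come from the process environment; note that Logback itself also resolves ${...} from system properties and its own context, which this sketch does not model.

```java
import java.util.Map;

// Hypothetical helper mirroring the <include> paths in logback-spring.xml.
public class LogbackIncludePath {

    public static String includedFile(String profile, Map<String, String> env) {
        switch (profile) {
            case "weblogic":
                return require(env, "DOMAIN_HOME")
                        + "/config/logconfig/my-service/logback-included.xml";
            case "openshift":
                return require(env, "LOG_CONFIG_PATH") + "/logback-included.xml";
            default:
                throw new IllegalArgumentException("Unknown profile: " + profile);
        }
    }

    // Fails loudly instead of letting an unresolved variable produce a bogus path.
    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException(name + " is not set for this profile");
        }
        return value;
    }

    public static void main(String[] args) {
        // In a real check, pass System.getenv() instead of a literal map.
        System.out.println(includedFile("openshift",
                Map.of("LOG_CONFIG_PATH", "/opt/app/conf/logconfig")));
        // prints /opt/app/conf/logconfig/logback-included.xml
    }
}
```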

Parallel Run: Environment Variables

As the logback-spring.xml above shows, we use environment variables in both environments to differentiate behavior. In WebLogic, environment variables are defined on the WebLogic instances and in their start scripts. In OpenShift, on the other hand, we define them in the application's deployment.yaml file. Here is a sample deployment.yaml file in which several environment variables are declared:

...
containers:
  - env:
      - name: LOG_CONFIG_PATH
        value: /opt/app/conf/logconfig
      - name: REPORT_CONFIG_PATH
        value: /opt/app/conf/reportconfig
      - name: APP_LOG_PATH
        value: /opt/app/log
      - name: DATABASE_URL
        valueFrom:
          secretKeyRef:
            name: database-secrets
            key: db_url
      - name: DATABASE_USERNAME
        valueFrom:
          secretKeyRef:
            name: database-secrets
            key: db_username
      - name: DATABASE_PASSWORD
        valueFrom:
          secretKeyRef:
            name: database-secrets
            key: db_password
      - name: OS_ENVIRONMENT_INFO
        valueFrom:
          secretKeyRef:
            name: environment-variables
            key: OS_ENVIRONMENT_INFO
...

Here, we use both static values and values read from OpenShift secrets through secretKeyRef.
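Since the openshift profile depends on all of the variables declared in the deployment.yaml above, a small startup check can report every missing one at once rather than failing on the first unresolved placeholder. The following is a minimal sketch under that assumption; the class, the OPENSHIFT_VARS list, and the missing method are hypothetical names, and the list simply copies the variable names from the sample deployment.yaml. On WebLogic the DATABASE_* variables are not needed, because the DataSource comes from JNDI.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical fail-fast check for the variables the openshift profile expects.
public class RequiredEnv {

    // Names copied from the sample deployment.yaml.
    public static final List<String> OPENSHIFT_VARS = List.of(
            "LOG_CONFIG_PATH", "REPORT_CONFIG_PATH", "APP_LOG_PATH",
            "DATABASE_URL", "DATABASE_USERNAME", "DATABASE_PASSWORD",
            "OS_ENVIRONMENT_INFO");

    // Returns the required names that are absent or blank, in declaration order.
    public static List<String> missing(List<String> required, Map<String, String> env) {
        return required.stream()
                .filter(name -> env.getOrDefault(name, "").isBlank())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> absent = missing(OPENSHIFT_VARS, System.getenv());
        // Report names only; several values come from secrets and must not be logged.
        if (absent.isEmpty()) {
            System.out.println("All required environment variables are set.");
        } else {
            System.out.println("Missing environment variables: " + absent);
        }
    }
}
```

In an application, the same check could run early at startup when the openshift profile is active and throw instead of printing, so a misconfigured pod fails immediately with a readable message.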

DataSource Configurations

In WebLogic, we as developers deal only with JNDI names when it comes to DataSource configuration. Nearly everything else about DataSources is managed by our operations team through WebLogic connection pools in the admin console. In OpenShift, however, DataSource configuration takes more effort on the developer side than it does in WebLogic. Below, we first give a small example of the difference between the two environments and then explain how we configured DataSources in OpenShift to match what we had in WebLogic.

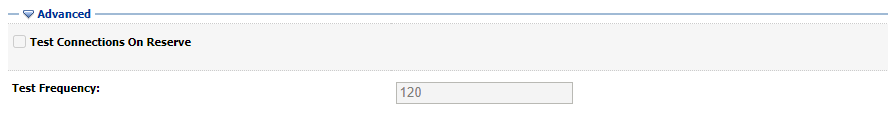

The image shows the WebLogic setting for the test period of unused connections, that is, how often idle connections are checked to confirm that they are still alive.

In OpenShift, this must be defined explicitly; otherwise, it is disabled by default. (We use HikariCP, Spring Boot's default connection pool.) Here is the configuration we added for this purpose, with the interval given in milliseconds (120000 ms = 2 minutes):

spring.datasource.hikari.keepaliveTime=120000
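The keepalive value only helps if HikariCP accepts it. HikariCP's documentation describes the constraints: 0 disables keepalive, the minimum allowed value is 30 seconds, and the value must be less than maxLifetime (default 30 minutes, where 0 means no maximum lifetime). The sketch below is a hypothetical pre-deployment check encoding those documented rules; it does not call HikariCP, and the class and method names are illustrative only.

```java
import java.time.Duration;

// Hypothetical check of a keepaliveTime value against HikariCP's documented rules.
public class KeepaliveCheck {

    static final Duration MIN_KEEPALIVE = Duration.ofSeconds(30);
    // HikariCP's documented default for maxLifetime.
    static final Duration DEFAULT_MAX_LIFETIME = Duration.ofMinutes(30);

    public static boolean isEffective(long keepaliveMs, long maxLifetimeMs) {
        if (keepaliveMs == 0) {
            return false; // 0 means keepalive is disabled
        }
        boolean aboveMinimum = keepaliveMs >= MIN_KEEPALIVE.toMillis();
        // maxLifetime 0 means connections have no maximum lifetime.
        boolean belowLifetime = maxLifetimeMs == 0 || keepaliveMs < maxLifetimeMs;
        return aboveMinimum && belowLifetime;
    }

    public static void main(String[] args) {
        // Our setting: 120000 ms with the default maxLifetime.
        System.out.println(isEffective(120_000, DEFAULT_MAX_LIFETIME.toMillis())); // true
    }
}
```

Two minutes satisfies both rules; in general the interval should also be shorter than any idle timeout enforced by the database or the network between the pods and the database.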

As explained in earlier sections, we use Spring profiles to separate the OpenShift and WebLogic DataSource configurations. Here is the relevant DataSource section of our application.yml:

spring:
  config:
    activate:
      on-profile: weblogic
  application:
    name: my-service
  datasource:
    jndi-name: db/my_app
---
spring:
  config:
    activate:
      on-profile: openshift
  application:
    name: my-service
  datasource:
    driver-class-name: oracle.jdbc.OracleDriver
    url: ${DATABASE_URL}
    username: ${DATABASE_USERNAME}
    password: ${DATABASE_PASSWORD}
    hikari:
      keepalive-time: 120000

OpenShift Route Timeouts

The last configuration we cover in this article is the OpenShift route timeout. Since WebLogic has no equivalent of OpenShift's route concept, we never needed such a configuration before. After migrating our applications to OpenShift and declaring services and routes, we started to see unexpected timeouts when calling our APIs (by default, the OpenShift router times out a request after 30 seconds). After some research, we solved this by adding the following annotation to every route in our project:

kind: Route
apiVersion: route.openshift.io/v1
metadata:
  name: my-service
  namespace: my-namespace
  annotations:
    haproxy.router.openshift.io/timeout: 120s
...

Summary

In this article, we shared our experience with the seamless migration of Spring Boot microservice applications from WebLogic to OpenShift, along with our practices for running them in parallel on both platforms. These practices may not be the ultimate or best-fitting solutions, but we hope they give you some ideas for handling such a painful migration process.

Opinions expressed by DZone contributors are their own.
