Containerization and Helm Templatization Best Practices for Microservices in Kubernetes

Learn best practices for containerizing a Spring Boot microservice as a Docker image and deploying it to Kubernetes using Helm charts.

Microservices empower developers to rapidly build applications that are easy to deploy, monitor, and configure remotely. Let's look at the best practices for containerizing a microservice (in our example, a Spring Boot application) as a Docker image and deploying it to Kubernetes (K8s) with Helm charts.

Best Practices in Dockerizing a Microservice

Spring Boot applications packaged with the uber-JAR approach are independent units of deployment. This model is great for environments like virtual machines or Kubernetes clusters, since the application carries everything it requires with it. Docker gives us a way to bundle dependencies, so the simplest option is to put the whole Spring Boot JAR into the Docker image.

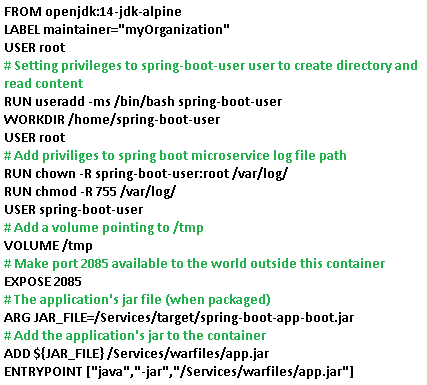

The single-layer approach is simple, fast, and straightforward to use. The outcome is a working Docker image that runs exactly the way you would expect a Spring Boot application to run. Thus, we can create a Dockerfile in our Spring Boot project:

Docker file:

Challenges of Docker Containers

Securing Docker containers is a tedious job because of the many moving parts in a Docker environment:

- Containers: You might have multiple Docker container images, each for a microservice, and can have multiple instances of each image running at different schedules. All these images must be secured and monitored.

- Docker daemon: To keep the containers it hosts safe, this must be secured.

- Container host: Whether you host your containers on VMs, servers, or a managed Kubernetes service like AKS, the host itself must be secured.

- Networks and APIs: These facilitate communication between containers and must be secured as well.

- External volumes and storage systems.

Use Container Images From Trusted Source

There are many publicly available container images. Images from untrusted sources are unverified and may compromise or crash the entire environment. To avoid this, always pull images from official, trusted repositories, such as the official images on Docker Hub, or build your own custom images to use as the base layer instead of public images. We can also use image scanning tools like Clair to identify vulnerabilities in an image.

Minimize the Configuration

Notice that the base image is openjdk:14-jdk-alpine. The Alpine images are smaller than the standard OpenJDK images from Docker Hub. This base image provides the layer on which the Spring Boot application runs. The image defined by your Dockerfile should generate containers that can be stopped and destroyed, then rebuilt and replaced with an absolute minimum of setup and configuration.

Install Only Required Packages

To reduce complexity, dependencies, file sizes, and build times, avoid installing extra or unnecessary packages just because they might be nice to have. For example, you do not need to include a text editor in a SQL Server image.

Keep Minimal Layers

To keep images small and builds fast, it is important to reduce the number of layers. Instructions such as RUN, ADD, and COPY create additional layers; other instructions create temporary intermediate images and do not increase the size of the build. To reduce the size of the build, use multistage builds wherever possible and copy only the required artifacts into the final image.

Scan the Source Code for Vulnerability

Usually, a Docker build is a combination of source code and packages from upstream sources. Even if a specific image comes from a trusted registry, it could incorporate packages that originate from one or more untrusted sources. By downloading the sources of all packages in your images, we can use source code analysis tools to determine whether the code contains known security vulnerabilities.

Run as Non-Root User

Running JARs with non-root user permissions helps mitigate some security threats. So, an important improvement to the Dockerfile is to run the app as a non-root user: add a working directory and grant this user permission to that directory. In production, the best practice is to let a Docker container run without root permissions. Since we are using Kubernetes to orchestrate our containers, we can explicitly prevent containers from starting as root using the MustRunAsNonRoot directive in a pod security policy.
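As a minimal sketch of the runtime side of this practice, a pod template can also declare a security context that refuses to start the container as root. The container name, image reference, and UID/GID 1000 below are illustrative assumptions, not values from the original chart.

```yaml
# Pod template fragment (sketch): enforce a non-root user at runtime.
# Names, image, and UID/GID 1000 are hypothetical placeholders.
spec:
  securityContext:
    runAsNonRoot: true   # the kubelet refuses to start containers that would run as UID 0
    runAsUser: 1000
    runAsGroup: 1000
    fsGroup: 1000        # group ownership applied to mounted volumes
  containers:
    - name: spring-boot-app
      image: myregistry.azurecr.io/spring-boot-app:1.0.0
      securityContext:
        allowPrivilegeEscalation: false   # block setuid-style privilege gains
```

The UID chosen here should match the user created in the image so that the working directory permissions line up.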

API and Network Security

Microservice containers communicate with each other via APIs and networks. Since our Docker container is hosted in AKS, we need to secure the APIs and network architecture for Kubernetes and monitor API and network activity for anomalies.
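One way to restrict network traffic between pods is a Kubernetes NetworkPolicy. The sketch below assumes a hypothetical namespace, pod labels (app: spring-boot-app, app: nginx), and port 8080; it only takes effect if the cluster's network plugin enforces network policies.

```yaml
# NetworkPolicy sketch: allow ingress to the microservice only from the web tier.
# Namespace, labels, and port are hypothetical.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: spring-boot-app-allow-web
  namespace: my-namespace
spec:
  podSelector:
    matchLabels:
      app: spring-boot-app      # pods this policy applies to
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: nginx        # only pods with this label may connect
      ports:
        - protocol: TCP
          port: 8080
```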

Best Practices in Helm Templatizing a Microservice in K8s

Now that we have containerized our Spring Boot microservice and pushed the image to a container registry such as ACR, we need to deploy it to a Kubernetes cluster. Deploying a microservice to a K8s cluster can become challenging because it involves creating a number of K8s resources such as pods, replica sets, services, config maps, and secrets. Helm helps with that. A Helm release essentially tells Helm which chart to deploy and which values to deploy it with.

Let’s talk about the best practices of Helm charts for microservices that are easy to maintain, roll back, and upgrade.

One Chart per Microservice

Packaging all the manifests required to deploy a microservice, like deployment.yaml, ingress.yaml, and service.yaml, into one chart is the best approach. It lets us manage the deployment in one go and helps us upgrade and roll back in a much simpler way.

Adding Deployment Files

The deployment file tells Kubernetes how the pod must be deployed in the cluster. The deployment file is placed inside the templates subfolder of the Helm chart folder.

Deployments

Instead of deploying each pod individually, the best approach for stateless applications is the deployment model. It is described in deployment.yaml with kind Deployment. You can update deployments to change the configuration of pods, the container image used, or the attached storage.

StatefulSets

To maintain application state, such as storage, beyond an individual pod lifecycle, we use StatefulSets. A StatefulSet is defined in statefulset.yaml and declared with kind StatefulSet.

My Spring Boot microservice is a stateless app, so I am going with the deployment.yaml file.
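A minimal sketch of what such a deployment.yaml template might look like follows. The label app: spring-boot-app and the value keys (replicaCount, image.repository, image.tag, image.pullPolicy, containerPort) are assumptions for illustration, not the original chart's names.

```yaml
# templates/deployment.yaml (sketch; value keys and labels are hypothetical)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-spring-boot-app
  labels:
    app: spring-boot-app
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: spring-boot-app          # must match the pod template labels below
  template:
    metadata:
      labels:
        app: spring-boot-app
    spec:
      containers:
        - name: spring-boot-app
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - containerPort: {{ .Values.containerPort }}
```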

Parameterizing Helm Using Values.yaml

When Helm renders a chart, it first reads the values.yaml file. For maintainability of the Helm chart, it is always good to move the application configuration that is used across the files in the chart's templates folder into values.yaml. Let us look at sample values.yaml.
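The following values.yaml is an illustrative sketch only; the registry name, tag, ports, and key names are hypothetical and would need to match whatever the templates reference.

```yaml
# values.yaml (sketch; all names and numbers are hypothetical)
replicaCount: 2

image:
  repository: myregistry.azurecr.io/spring-boot-app
  tag: "1.0.0"              # pin an explicit tag rather than relying on latest
  pullPolicy: IfNotPresent

containerPort: 8080

service:
  type: ClusterIP
  port: 80
```

Any of these values can be overridden at install or upgrade time, for example with `--set image.tag=1.0.1` or an environment-specific values file passed with `-f`.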

Use Only Authorized Images in Your Deployment

Use private registries to store your approved images and make sure you only push approved images to these registries. Using Docker images from untrusted sources poses a security threat; it is like deploying a program from an unauthorized vendor on a production server. Avoid that. In our example, we have built our custom image and pushed it to Azure Container Registry, which is a private registry. If you do not specify an image tag, the latest tag is used by default. Although this can be convenient in development, it is a bad idea for production usage because latest changes every time a new image is built.

Initialize the Container Pods Using Init Containers

Sometimes a container needs initialization before it becomes ready. All the initialization steps that must run before the pod reaches the ready state can be moved to another container that does the groundwork. For tasks such as changing directory permissions, creating a directory and copying files to it, or downloading files, we can opt for an init container.

We can even use init containers to control the order in which things start. There can be multiple init containers in a pod, each for a different initialization task.
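The idea above can be sketched as a pod spec fragment with two init containers that run one after the other before the application container starts. The busybox image, the my-database service name, the /data path, and UID 1000 are assumptions for illustration.

```yaml
# Pod spec fragment (sketch): init containers run sequentially, in the order listed.
# Service name, paths, and UID are hypothetical.
spec:
  initContainers:
    - name: init-permissions
      image: busybox:1.36
      # Create a directory on the shared volume and hand it to the app user
      command: ["sh", "-c", "mkdir -p /data/app && chown -R 1000:1000 /data/app"]
      volumeMounts:
        - name: app-data
          mountPath: /data
    - name: wait-for-db
      image: busybox:1.36
      # Block until the dependency's service name resolves
      command: ["sh", "-c", "until nslookup my-database; do echo waiting; sleep 2; done"]
  containers:
    - name: spring-boot-app
      image: myregistry.azurecr.io/spring-boot-app:1.0.0
      volumeMounts:
        - name: app-data
          mountPath: /data
  volumes:
    - name: app-data
      emptyDir: {}
```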

Implement Liveness and Readiness Probes Health Check

An unhealthy pod can cost us revenue, so we need to monitor the health of the containers through probes.

- Liveness probes: If this probe fails, the kubelet kills the container, and the container is restarted according to its restart policy.

- Readiness probes: If this probe fails, the pod is removed from the Service endpoints, so no traffic is routed to the container where the Spring Boot application is running.

Liveness and readiness probes help check the health of pods during deployments, restarts, and upgrades. These probes must be properly configured; otherwise, pods may be terminated while they are still initializing, or they may start receiving user requests before they are ready.
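A container fragment with both probes might look like the sketch below. It assumes the application exposes Spring Boot Actuator's liveness and readiness health endpoints on port 8080; the paths, port, image, and timing values are assumptions to adapt to your application's startup time.

```yaml
# Container fragment (sketch): probe paths, port, and timings are assumptions.
containers:
  - name: spring-boot-app
    image: myregistry.azurecr.io/spring-boot-app:1.0.0
    ports:
      - containerPort: 8080
    livenessProbe:
      httpGet:
        path: /actuator/health/liveness
        port: 8080
      initialDelaySeconds: 60   # give the JVM time to start before the first check
      periodSeconds: 10
      failureThreshold: 3       # restart after three consecutive failures
    readinessProbe:
      httpGet:
        path: /actuator/health/readiness
        port: 8080
      initialDelaySeconds: 20
      periodSeconds: 5
```

Setting the liveness delay too low is the usual cause of pods being killed during initialization.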

Specify the Configuration with Config Maps and Secrets

A ConfigMap is a set of key-value pairs, defined in YAML, that pods consume as configuration data. It stores non-sensitive, unencrypted configuration information. For sensitive information, you must use Secrets. For our deployment, the imagePullSecrets entry for the ACR registry is created as a secret. In our application, we have a few configurations that are not sensitive, so we have used config maps. We then mount these configuration properties into the application's pods. When defining the Deployment YAML file, we must mount the config map (spring-boot-app-config) as a volume in the pod, in deployment.yaml.
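A sketch of the config map and the matching volume mount follows. The config map name spring-boot-app-config comes from the text; the property keys, the /config mount path, and the acr-secret name are hypothetical.

```yaml
# ConfigMap sketch: non-sensitive settings only (property values are hypothetical).
apiVersion: v1
kind: ConfigMap
metadata:
  name: spring-boot-app-config
data:
  application.properties: |
    server.port=8080
    logging.level.root=INFO
---
# Pod spec fragment from deployment.yaml (not a complete manifest)
spec:
  imagePullSecrets:
    - name: acr-secret              # hypothetical Secret holding ACR credentials
  containers:
    - name: spring-boot-app
      image: myregistry.azurecr.io/spring-boot-app:1.0.0
      volumeMounts:
        - name: config-volume
          mountPath: /config        # each key in the ConfigMap becomes a file here
          readOnly: true
  volumes:
    - name: config-volume
      configMap:
        name: spring-boot-app-config
```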

Specifying Resource Quotas for the Namespace

Resource quotas are a mechanism for the K8s admin to cap resource consumption per namespace so that teams do not consume beyond their allowed limits. To operate your microservices application at scale, the system should be able to scale up and down continuously while staying within the allowed usage of each resource. Resource quotas are very powerful. Let's look at how you can use them to restrict CPU and memory resource usage.
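A minimal ResourceQuota sketch is shown here; the namespace name and all quantities are hypothetical and should be sized for your teams.

```yaml
# ResourceQuota sketch: caps the total requests and limits in one namespace.
# Namespace and quantities are hypothetical.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-quota
  namespace: my-namespace
spec:
  hard:
    requests.cpu: "2"        # sum of CPU requests across all pods
    requests.memory: 4Gi
    limits.cpu: "4"          # sum of CPU limits across all pods
    limits.memory: 8Gi
    pods: "10"               # maximum number of pods in the namespace
```

Once a quota on CPU or memory is active, pods in that namespace must declare the corresponding requests and limits, or they are rejected.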

If we try to deploy a pod that would exceed this limit in the namespace, we get a Forbidden error from the API server.

Managing Resources for the Containers

Specifying resource requests and limits in deployment.yaml helps the kube-scheduler decide which node to place the pod on. The resource types are CPU, memory, ephemeral storage, and others. Specifying resource requests and limits helps prevent containers from crashing because resources are unavailable, and from ending up consuming most of a node's resources. For example, when a process in the container tries to consume more than the allowed amount of memory, the system kernel terminates the process that attempted the allocation with an out-of-memory (OOM) error.
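As a sketch, requests and limits are set per container. The quantities below are placeholders, not tuned values for a Spring Boot application.

```yaml
# Container fragment (sketch): quantities are hypothetical.
containers:
  - name: spring-boot-app
    image: myregistry.azurecr.io/spring-boot-app:1.0.0
    resources:
      requests:
        cpu: 250m          # the scheduler reserves this much on the chosen node
        memory: 512Mi
      limits:
        cpu: "1"           # CPU usage above this is throttled
        memory: 1Gi        # exceeding this gets the container OOM-killed
```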

Configuring Pod Replicas for High Availability

To ensure high availability of pods, we need to configure more than one replica. Kubernetes controllers like ReplicaSets, Deployments, and StatefulSets can be configured for pod replication. Running more than one instance of your pods helps ensure that deleting a single pod will not cause downtime. The number of replicas depends on the load on the microservice. In our Helm chart, the replica count is specified in values.yaml.

Applying Pod Affinity Rules

We may run into situations in which we need to control how pods are scheduled on nodes. Placing multiple pods on the same node can be achieved by specifying pod affinity. For example, an Nginx web server pod and a Spring Boot microservice pod may both need to be scheduled on the same node, which reduces network latency between the web app and the backend microservice. In other situations, you might need to ensure that no more than one instance of the same pod runs on a given node. Pod anti-affinity rules enforce this. Analyze your workload and update the pod affinity strategies for your deployments accordingly.
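Both rules can be sketched in one pod spec fragment. The labels app: nginx and app: spring-boot-app are hypothetical; the topology key kubernetes.io/hostname makes "same topology domain" mean "same node".

```yaml
# Pod spec fragment (sketch): labels are hypothetical.
spec:
  affinity:
    podAffinity:
      # Hard rule: schedule only on a node that already runs an nginx pod
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: nginx
          topologyKey: kubernetes.io/hostname
    podAntiAffinity:
      # Soft rule: prefer nodes that do not already run a replica of this app
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100
          podAffinityTerm:
            labelSelector:
              matchLabels:
                app: spring-boot-app
            topologyKey: kubernetes.io/hostname
```

The anti-affinity rule is written as a preference here so that pods can still be scheduled when there are fewer nodes than replicas; switching it to a required rule makes it strict.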

Scheduling Auto Scalers for Deployments

Kubernetes has auto-scaling capabilities for the deployments in the form of horizontal pod auto scaler (HPA), vertical pod auto scaler (VPA), and cluster auto-scaling based on resource consumption or external metrics.

- HPA: Scales by adding or removing pod replicas.

- VPA: Scales the resources of a pod by increasing or decreasing the CPU and memory requested by its containers.

- Cluster Auto Scaler: Scales the cluster size by adding or removing worker nodes.

To configure the HPA to auto scale your app, you must create a horizontal pod auto scaler resource, which defines what metric to monitor for your app.
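A HorizontalPodAutoscaler resource might look like the following sketch. The target Deployment name, replica bounds, and the 70% CPU target are assumptions; CPU utilization targets only work when the containers declare CPU requests and a metrics source such as metrics-server is available.

```yaml
# HorizontalPodAutoscaler sketch: names and thresholds are hypothetical.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: spring-boot-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: spring-boot-app      # the Deployment to scale
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the requested CPU, averaged over pods
```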

Rollback the Release on Failure

Monitor the Helm release or deployment using a Kubernetes monitoring tool. If you see failures, Helm's rollback feature lets us roll back to the previous release or to any specific revision. This helps us minimize downtime in continuous deployments.

helm rollback [flags] RELEASE [REVISION]

The first argument of the rollback command is the name of a release, and the second is a revision (version) number. If you would like to roll back to the previous release, the revision argument can be omitted. The following Helm command can be used to display the revision numbers.

helm history RELEASE

Achieve Zero Downtime Deployment Updates Using Rolling Updates

Helm deployment updates can be achieved with zero downtime by configuring rolling updates, which gradually replace existing pod instances with new ones. The new pods are scheduled on nodes with available resources. In our values.yaml, refer to the deployment strategy settings.

- type: RollingUpdate is the default value, or we can specify Recreate.

- maxUnavailable: This field specifies the maximum number of pods that can be unavailable during the update process. The value can be an absolute number (we have used 2) or a percentage of desired pods. This is an optional field.

- maxSurge: This field specifies the maximum number of pods that can be created over the desired number of pods. The value can be an absolute number or a percentage of desired pods (the default value is 25%). This is an optional field.
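The settings described above could be wired through values.yaml roughly as follows. The deploymentStrategy key name is an assumption; maxUnavailable: 2 follows the text and maxSurge uses the default of 25%.

```yaml
# values.yaml fragment (sketch; the deploymentStrategy key is hypothetical)
deploymentStrategy:
  type: RollingUpdate
  rollingUpdate:
    maxUnavailable: 2     # at most two pods may be down during the rollout
    maxSurge: 25%         # at most 25% extra pods above the desired count
---
# templates/deployment.yaml fragment that consumes those values
spec:
  strategy:
    type: {{ .Values.deploymentStrategy.type }}
    rollingUpdate:
      maxUnavailable: {{ .Values.deploymentStrategy.rollingUpdate.maxUnavailable }}
      maxSurge: {{ .Values.deploymentStrategy.rollingUpdate.maxSurge }}
```

With a replica count lower than or equal to maxUnavailable, a rollout can take every pod down at once, so the two values should be chosen together.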

RBAC

In Helm, role-based access control is the best approach to contain the user or application specific service account operations within scope. Using RBAC, we can achieve the following:

- Administrators: Permissions for privileged actions, e.g. creating new roles

- Users: Limit permissions to manage resources (create, update, view, delete) in a specific namespace or cluster.

RBAC resources are ServiceAccount (namespaced), Role (namespaced), ClusterRole, RoleBinding (namespaced), and ClusterRoleBinding. Let's take a look at a sample Helm RBAC configuration. Here, we restrict the users in the development team group in Azure AD (RoleBinding) to get, list, and watch permissions on the listed resources and API groups (Role).
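A sketch of such a Role and RoleBinding pair follows. The namespace, object names, resource list, and the group identifier placeholder are hypothetical; for Azure AD groups, the subject name is typically the group's object ID.

```yaml
# Role sketch: read-only access in one namespace (names are hypothetical).
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: dev-read-only
  namespace: my-namespace
rules:
  - apiGroups: ["", "apps"]                 # "" is the core API group
    resources: ["pods", "services", "deployments"]
    verbs: ["get", "list", "watch"]
---
# RoleBinding sketch: grants the Role to a group.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-read-only-binding
  namespace: my-namespace
subjects:
  - kind: Group
    name: "<azure-ad-group-object-id>"      # placeholder, replace with the real group ID
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: dev-read-only
  apiGroup: rbac.authorization.k8s.io
```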

Configure Service Accounts for Your Pods

Service accounts are used to authenticate processes running in a pod's containers when they communicate with the API server. If a pod is created without a service account, the cluster assigns it the default service account of the same namespace. We can create a service account object and refer to it in our deployment.

If we specify automountServiceAccountToken: false, we opt out of automounting the API credentials. In our chart, this service account is authorized to pull the image from ACR and is specified in the pod spec in the Helm chart's deployment.yaml.
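The service account and its reference from the pod spec can be sketched as below. The account name, namespace, and the acr-secret pull secret are hypothetical.

```yaml
# ServiceAccount sketch (names are hypothetical).
apiVersion: v1
kind: ServiceAccount
metadata:
  name: spring-boot-app-sa
  namespace: my-namespace
imagePullSecrets:
  - name: acr-secret                    # pods using this account pull images with this secret
automountServiceAccountToken: false     # do not mount API credentials into pods
---
# Pod spec fragment from deployment.yaml (not a complete manifest)
spec:
  serviceAccountName: spring-boot-app-sa
```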

Secure Your Domain With TLS Connection

TLS helps secure communication with the microservices deployed by our Helm chart, which run on a public domain. We must create certificates to set up the TLS connection. These certificates help ensure that Helm and the ingress controller accept instructions only from authoritative sources. As a result, only incoming connections from TLS-authenticated clients are accepted by our custom namespace. Run the following commands to install cert-manager.

Then configure a certificate, apply it to your cluster, and reference it in ingress.yaml using the values set in values.yaml.
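An ingress.yaml with TLS enabled might look like this sketch. The host name, secret name, ingress class, backend service, and the cluster issuer referenced in the cert-manager annotation are all hypothetical.

```yaml
# Ingress sketch with TLS (host, secret, issuer, and service names are hypothetical).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: spring-boot-app
  annotations:
    cert-manager.io/cluster-issuer: my-cluster-issuer   # asks cert-manager to issue the cert
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - app.example.com
      secretName: spring-boot-app-tls    # Secret where the certificate and key are stored
  rules:
    - host: app.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: spring-boot-app
                port:
                  number: 80
```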

Enabling Pod Security Policy

To mitigate security risks and uphold secure configurations within your Kubernetes environment, a good option is to configure pod security policies (PSP). You can use Kubernetes pod security policies to restrict access to host processes or the host network, prevent containers from running with special permissions, restrict the permissions of the user running the containers, and control access to the host filesystem. Choose the right policy based on the needs of your cluster. Note that PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in 1.25 in favor of Pod Security Admission, so this applies to older clusters.

Apply a PSP in the cluster to run pods as an unprivileged user, and modify the Dockerfile and the deployment YAML to run as a non-root user.
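For clusters that still support the PodSecurityPolicy API (Kubernetes versions before 1.25), a restrictive policy could be sketched as follows. The policy name and the allowed volume types are assumptions.

```yaml
# PodSecurityPolicy sketch (policy/v1beta1, only available before Kubernetes 1.25).
# Name and allowed volume types are hypothetical.
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: restricted-non-root
spec:
  privileged: false                 # no privileged containers
  allowPrivilegeEscalation: false
  hostNetwork: false                # no access to the host network
  hostPID: false                    # no access to host processes
  hostIPC: false
  runAsUser:
    rule: MustRunAsNonRoot          # reject pods that would run as UID 0
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
    - configMap
    - secret
    - emptyDir
    - persistentVolumeClaim
```

A policy only takes effect once the pod's service account is granted the use verb on it through RBAC.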

Debugging Helm

When we deploy our Helm charts, the Kubernetes API server may reject the rendered manifests. The best practice is to identify these issues and fix them up front. This is achieved by debugging the Helm chart using the following Helm commands:

- helm lint identifies YAML parser errors and checks that the chart is in line with best practices.

- helm install --dry-run --debug identifies YAML parser errors and outputs the rendered templates along with any errors in them.

Running Kubescore for Helm Charts

To deploy secure and reliable applications in Kubernetes, we can use Kubescore (kube-score). This static analysis tool for Kubernetes objects provides a list of recommendations to improve the security and resiliency of our microservices. Kubescore can be installed via prebuilt binaries or Docker.

Critical validations by Kubescore:

- Setting up resource limits for containers

- Enabling network policy for pod with recommended ingress rules

- PodDisruptionBudget configured for Deployments

- Host PodAntiAffinity configured for Deployments

- Health probes configured, with readiness probes that are not identical to liveness probes

- Container security context; run as high number user/group, do not run as root or with privileged root fs

- Use a stable API

The following command validates the Helm chart using Kubescore.

Helm 3 Features

- No more Tiller: With Kubernetes RBAC enabled on clusters, securing and managing Tiller became difficult, and there was less need to depend on Tiller for release management. To reduce this security complexity, Helm 3 removed Tiller and adopted a client-only architecture.

- More secure: Helm communicates directly with the Kubernetes API server using the permissions in the kubeconfig file, so user access can be restricted.

- Removal of helm init: With the removal of Tiller and the adoption of XDG configuration, there is no need for Helm initialization, and Helm state is maintained automatically.

- Release is scoped to namespace: Release information is now stored in the same namespace as the release. This scopes each release to its namespace instead of all releases sharing the same Tiller namespace.

- Helm CLI command changes: To realign with other package managers, a few commands were renamed:

- helm delete -> helm uninstall (the --purge behavior is now the default)

- helm inspect -> helm show

- helm fetch -> helm pull

- JSON schema validation in charts lets you define typed values, package the schema with the chart, and get early failure detection and error reporting.

- Three-way strategic merge patches in the upgrade strategy allow the old and new state on disk to be examined in the context of the live state in the running cluster. This prevents unexpected incidents caused by uncommitted production changes.

Migrating Helm Charts From Helm 2 to Helm 3

Suppose we have been using Helm 2 and need to migrate to Helm 3. This requires an understanding of how the Helm 2 client is set up. We need to understand a few things: How many clusters are managed by the client? How many Tiller instances are used? What releases were deployed in each Tiller namespace? You will want to read about the essential changes, but most charts will work unmodified after the API version changes. A Helm 2 to Helm 3 plugin (helm-2to3) will take care of the migration.

Opinions expressed by DZone contributors are their own.
