SSL Performance Overhead in MySQL


Note: this is part 1 of what will be a two-part series on the performance implications of using in-flight data encryption.

Some of you may recall my security webinar from back in mid-August; one of the follow-up questions I was asked was about the performance impact of enabling SSL connections. My answer was 25%, based on some 2011 data that I had seen on yaSSL's website, but I included the caveat that it is workload-dependent, because the most expensive part of using SSL is establishing the connection. Not long thereafter, I received a request to conduct more specific benchmarks of SSL usage in MySQL, and today I'm going to show the results.

First, the testing environment. All tests were performed on an Intel Core i7-2600K 3.4 GHz CPU (4 physical cores, 8 hardware threads with Hyper-Threading) with 32 GB of RAM, running CentOS 6.4. The disk subsystem is a 2-disk RAID-0 of Samsung 830 SSDs, although since we're only concerned with measuring the overhead added by SSL connections, we'll only be conducting read-only tests with a dataset that fits completely in the buffer pool. The MySQL version used for this experiment is Community Edition 5.6.13, and the testing tools are sysbench 0.5 and Perl. We conduct two tests, each designed to simulate one of the most common MySQL usage patterns. The first simulates connection pooling, often seen in the Java world, where a small set of connections is established by, for example, the servlet container and then handed to the application as needed. The second simulates one-request-per-connection, typical in the LAMP world, where the script that displays a given page might connect to the database, run a couple of queries, and then disconnect.

Test 1: Connection Pool

For the first test, I ran sysbench in read-only mode at concurrency levels of 1, 2, 4, 8, 16, and 32 threads, first with no encryption and then with SSL enabled and key lengths of 1024, 2048, and 4096 bits. Eight sysbench tables were prepared, each containing 100,000 rows, for a total data size of approximately 256 MB. The InnoDB buffer pool was 4 GB, and before each official measurement run, I ran a warm-up run to prime the buffer pool. Each official test run lasted 10 minutes; this might seem short, but unlike, say, a PCIe flash storage device, I would not expect the variable under observation to change much over time or to need time to stabilize. The basic sysbench syntax used is shown below.

```bash
#!/bin/bash
# Read-only sysbench runs with SSL on and off at 1-32 threads.
# The SSL key size comes from the server's certificate, not from this script.
for ssl in on off; do
  for threads in 1 2 4 8 16 32; do
    # The account name expands to msandboxon or msandboxoff.
    sysbench --test=/usr/share/sysbench/oltp.lua \
      --mysql-user="msandbox${ssl}" --mysql-password=msandbox \
      --mysql-host=127.0.0.1 --mysql-port=5613 --mysql-db=sbtest --mysql-ssl="$ssl" \
      --oltp-tables-count=8 --num-threads="$threads" --oltp-dist-type=uniform --oltp-read-only=on \
      --report-interval=10 --max-time=600 --max-requests=0 run > "sb-ssl_${ssl}-threads-${threads}.out"
  done
done
```
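The loop above covers only the measured runs. The text also mentions preparing eight 100,000-row tables and running a warm-up pass before each measurement; the sketch below shows one way those steps might look. It is an assumption, not the script actually used: it relies on sysbench 0.5's `prepare` command and `--oltp-table-size` option, reuses the `msandboxoff` account implied by `msandbox$ssl`, and the 300-second warm-up length and the `run`, `prepare_tables`, and `warm_up` helper names are hypothetical. With `DRY_RUN=1` (the default here) it only prints the commands.

```bash
#!/bin/bash
# Hypothetical helper, not part of the original benchmark.
# DRY_RUN=1 (default) prints the sysbench commands instead of running them.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Options shared with the measured runs (non-SSL account assumed).
common=(--test=/usr/share/sysbench/oltp.lua --mysql-user=msandboxoff
        --mysql-password=msandbox --mysql-host=127.0.0.1 --mysql-port=5613
        --mysql-db=sbtest --oltp-tables-count=8)

# One-time load: 8 tables x 100,000 rows (--oltp-table-size is rows per table).
prepare_tables() {
  run sysbench "${common[@]}" --oltp-table-size=100000 prepare
}

# Warm-up pass to pull the data into the buffer pool; 300 s is an assumption.
warm_up() {
  run sysbench "${common[@]}" --oltp-read-only=on --num-threads=8 \
      --max-time=300 --max-requests=0 run
}

prepare_tables
warm_up
```

Setting `DRY_RUN=0` executes the commands against a running server instead of printing them.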

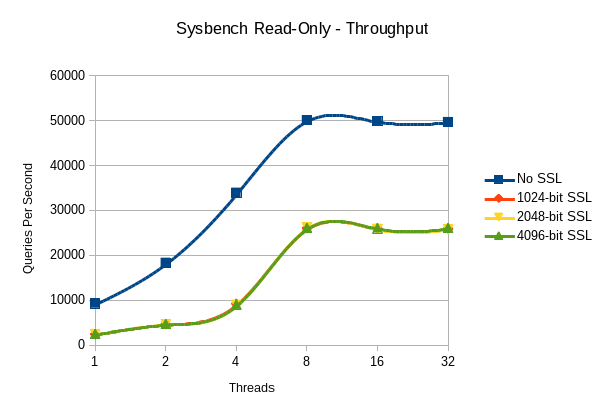

if you’re not familiar with sysbench, the important thing to know about it for our purposes is that it does not connect and disconnect after each query or after each transaction. it establishes n connections to the database (where n is the number of threads) and runs queries though them until the test is over. this behavior provides our connection-pool simulation. the assumption, given what we know about where ssl is the slowest, is that the performance penalty here should be the lowest. first, let’s look at raw throughput, measured in queries per second:

the average throughput and standard deviation (both measured in queries per second) for each test configuration is shown below in tabular format:

| SSL key size / # of threads | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| SSL off | 9250.18 (1005.82) | 18297.61 (689.22) | 33910.31 (446.02) | 50077.60 (1525.37) | 49844.49 (934.86) | 49651.09 (498.68) |
| 1024-bit | 2406.53 (288.53) | 4650.56 (558.58) | 9183.33 (1565.41) | 26007.11 (345.79) | 25959.61 (343.55) | 25913.69 (192.90) |
| 2048-bit | 2448.43 (290.02) | 4641.61 (510.91) | 8951.67 (1043.99) | 26143.25 (360.84) | 25872.10 (324.48) | 25764.48 (370.33) |
| 4096-bit | 2427.95 (289.00) | 4641.32 (547.57) | 8991.37 (1005.89) | 26058.09 (432.86) | 25990.13 (439.53) | 26041.27 (780.71) |

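So, given that this machine has 8 hardware threads and I/O isn't a factor, we would expect throughput to max out at 8 threads, so the levelling-off of performance is expected. What we also see is that the key length makes little difference, which is also largely expected: the RSA key is only used during the handshake, while the data itself is encrypted with a symmetric session cipher whose cost does not depend on the RSA key size. However, I definitely didn't think the encryption overhead would be so high: with SSL, throughput is only about a quarter of the unencrypted figure at 1 to 4 threads and about half at 8 or more threads.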
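To put numbers on that overhead, the sketch below divides the 1024-bit averages by the SSL-off averages from the throughput table (the 2048- and 4096-bit rows are within about 3% of the 1024-bit row). It only does arithmetic on the published averages; the `ssl_share` function name is ours.

```bash
#!/bin/bash
# SSL throughput as a share of unencrypted throughput, computed from the
# average queries per second in the throughput table (1024-bit row).
threads=(1 2 4 8 16 32)
ssl_off=(9250.18 18297.61 33910.31 50077.60 49844.49 49651.09)
ssl_1024=(2406.53 4650.56 9183.33 26007.11 25959.61 25913.69)

ssl_share() {
  local i
  for i in "${!threads[@]}"; do
    # awk handles the floating-point division that bash arithmetic cannot.
    awk -v t="${threads[$i]}" -v off="${ssl_off[$i]}" -v on="${ssl_1024[$i]}" \
      'BEGIN { printf "%2d thread(s): SSL at %.1f%% of non-SSL throughput\n", t, 100 * on / off }'
  done
}

ssl_share
```

It reports 26.0%, 25.4%, and 27.1% for 1, 2, and 4 threads, and 51.9% to 52.2% for 8 to 32 threads.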

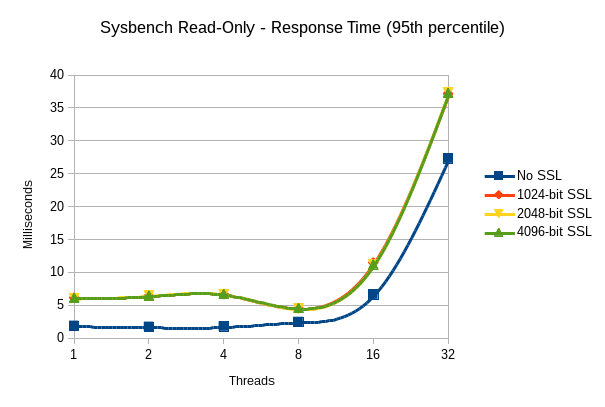

the next graph here is 95th-percentile latency from the same test:

and in tabular format, the raw numbers (average and standard deviation):

| SSL key size / # of threads | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| SSL off | 1.882 (0.522) | 1.728 (0.167) | 1.764 (0.145) | 2.459 (0.523) | 6.616 (0.251) | 27.307 (0.817) |
| 1024-bit | 6.151 (0.241) | 6.442 (0.180) | 6.677 (0.289) | 4.535 (0.507) | 11.481 (1.403) | 37.152 (0.393) |
| 2048-bit | 6.083 (0.277) | 6.510 (0.081) | 6.693 (0.043) | 4.498 (0.503) | 11.222 (1.502) | 37.387 (0.393) |
| 4096-bit | 6.120 (0.268) | 6.454 (0.119) | 6.690 (0.043) | 4.571 (0.727) | 11.194 (1.395) | 37.26 (0.307) |

With the exception of 8 and 32 threads, the latency added by SSL is roughly constant at 4 to 5 ms, regardless of the key length or the number of threads. I'm not surprised that there's a large jump in latency at 32 threads, where SSL adds about 10 ms, but I don't have an immediate explanation for why the SSL latency improves at 8 threads, where SSL adds only about 2 ms.
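The added latency can be checked directly by subtracting the SSL-off row from the 1024-bit row of the latency table. This is a small arithmetic sketch on the published averages; the `latency_added` function name is ours.

```bash
#!/bin/bash
# Latency added by SSL: 1024-bit minus SSL-off, using the average
# 95th-percentile latencies (ms) from the latency table.
threads=(1 2 4 8 16 32)
ssl_off=(1.882 1.728 1.764 2.459 6.616 27.307)
ssl_1024=(6.151 6.442 6.677 4.535 11.481 37.152)

latency_added() {
  local i
  for i in "${!threads[@]}"; do
    awk -v t="${threads[$i]}" -v off="${ssl_off[$i]}" -v on="${ssl_1024[$i]}" \
      'BEGIN { printf "%2d thread(s): +%.1f ms\n", t, on - off }'
  done
}

latency_added
```

It reports +4.3, +4.7, +4.9, +2.1, +4.9, and +9.8 ms for 1 through 32 threads, which is where the 8- and 32-thread exceptions stand out.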

Test 2: Connection Time

For the second test, I wrote a simple Perl script that just connects to and disconnects from the database as fast as possible. We know that connection setup is the slowest part of SSL, and the previous test already shows roughly what to expect from SSL encryption overhead once a connection has been established, so let's see how much overhead SSL adds to connection time. The basic script is quite simple (non-SSL version shown):

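```perl
#!/usr/bin/perl
use strict;
use warnings;
use DBI;
use Time::HiRes qw(time);   # high-resolution time() for sub-second timing

my $start = time;

# Connect and immediately disconnect 100 times, as fast as possible.
for (my $i = 0; $i < 100; $i++) {
    # RaiseError makes a failed connection abort the run instead of
    # silently producing an artificially fast timing.
    my $dbh = DBI->connect("DBI:mysql:host=127.0.0.1;port=5613",
                           "msandbox", "msandbox", { RaiseError => 1 });
    $dbh->disconnect;
}

# Total elapsed wall-clock seconds for the 100 connect/disconnect cycles.
printf "%.6f\n", time - $start;
```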
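The Perl script prints the total elapsed seconds for its 100 connect/disconnect cycles, so converting a trial to a rate is just 100 divided by the elapsed time. A minimal sketch of that conversion; `to_cps` is a hypothetical helper, and the sample elapsed times are illustrative inputs, not measured results.

```bash
#!/bin/bash
# Convert the Perl script's elapsed time into connections per second.
CYCLES=100   # loop count in the Perl script

to_cps() {
  # $1 = elapsed seconds printed by the script for one trial
  awk -v n="$CYCLES" -v secs="$1" 'BEGIN { printf "%.2f\n", n / secs }'
}

# Illustrative inputs only, not measured results.
for secs in 0.05 0.5 20; do
  echo "${secs} s -> $(to_cps "$secs") connections/s"
done
```

For scale, at the 4096-bit average of 5.45 connections per second, one trial of 100 connections takes about 18.3 seconds.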

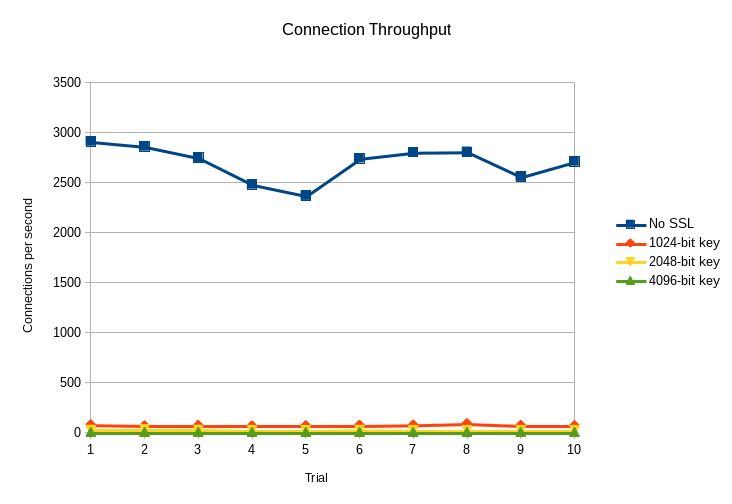

as with test #1, i ran test #2 with no encryption and ssl encryption of 1024, 2048, and 4098 bits, and i conducted 10 trials of each configuration. then i took the elapsed time for each test and converted it to connections per second. the graph below shows the results from each run:

here are the averages and standard deviations:

| Encryption | Average connections per second | Standard deviation |
|---|---|---|
| None | 2701.75 | 165.54 |
| 1024-bit | 77.04 | 6.14 |
| 2048-bit | 28.183 | 1.713 |
| 4096-bit | 5.45 | 0.015 |

Yes, that's right: 4096-bit SSL connections are roughly 500 times slower to establish than unencrypted connections, close to three orders of magnitude. Really, the connection overhead at any SSL key size is very high compared to the unencrypted test (even 1024-bit connections are about 35 times slower to establish), and it's certainly much higher than my originally quoted number of 25%.
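The slowdown factors behind that statement come straight from the averages in the table: divide the unencrypted rate by each SSL rate, and take the base-10 logarithm to express the result in orders of magnitude. A small arithmetic sketch; the `slowdown` function name is ours.

```bash
#!/bin/bash
# How much slower SSL connection setup is than unencrypted setup, using the
# average connections per second from the connection-rate table.
BASE_CPS=2701.75   # no encryption

slowdown() {
  # $1 = label, $2 = average connections per second with SSL
  awk -v label="$1" -v base="$BASE_CPS" -v rate="$2" 'BEGIN {
    r = base / rate
    printf "%s: %.1fx slower (%.1f orders of magnitude)\n", label, r, log(r) / log(10)
  }'
}

slowdown 1024-bit 77.04
slowdown 2048-bit 28.183
slowdown 4096-bit 5.45
```

This prints factors of 35.1x, 95.9x, and 495.7x, or 1.5, 2.0, and 2.7 orders of magnitude, for 1024-, 2048-, and 4096-bit keys.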

Analysis and Parting Thoughts

So, what do we take away from this? The first thing, of course, is that SSL overhead is a lot higher than 25%, particularly if your application uses anything close to the one-connection-per-request pattern. For a system that establishes and maintains long-running connections, the initial connection overhead becomes a non-factor regardless of the encryption strength, but there's still a large performance penalty compared to unencrypted connections: in test 1, SSL cut throughput roughly in half at 8 or more threads.

This leads directly into the second point: connection pooling is by far the more efficient way to use SSL, if your application can support it.

But what if connection pooling isn't an option, MySQL's SSL performance is insufficient, and you still need full encryption of data in flight? Run the encryption component of your system at a lower layer. A VPN with hardware crypto would be the fastest approach, but even something as simple as an SSH tunnel or OpenVPN *might* be faster than SSL within MySQL. I'll be exploring some of these solutions in a follow-up post.

And finally… when in doubt, run your own benchmarks. I don't have an explanation for why the yaSSL numbers are so different from these (maybe yaSSL is a faster SSL library than OpenSSL, or maybe they used a different cipher; if you're curious, the original 25% number came from slides 56-58 of this presentation), but in any event, this illustrates why it's important to run tests on your own hardware and with your own workload when you want to find out how well something will perform, rather than taking someone else's word for it.

Published at DZone with permission of Peter Zaitsev, DZone MVB. See the original article here.
