Servlet 3.0 Async Support in Spring and Performance Misconceptions

Learn how to improve your application's performance without increasing code complexity by simply tweaking Tomcat configurations.

It is possible to improve the performance of application servers using Servlet 3.0 async, but is it necessary?

Before We Start

Spring makes it easy to write Java applications. With Spring Boot, it became even easier. Spring Boot allows us to quickly create Spring applications: you can build and run a Java application server with embedded Tomcat and your own controller in less than 5 minutes. Well, I admit I used Spring Initializr for that.

In the simple Spring Boot project I created, the HTTP servlet and the servlet container were set up automatically. The HTTP servlet is the Spring class DispatcherServlet (which extends HttpServlet), not the MyController class. So why is the MyController code running? After all, the servlet container (Tomcat in our case) only recognizes servlets: to execute a GET request from the client, Tomcat calls the servlet's doGet method.

    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class MyController {

        @GetMapping(value = "/ruleTheWorld")
        public String rule() {
            return "Ruling...";
        }
    }

Spring is all about annotations. We marked our class as a controller by adding @RestController. When the application starts, DispatcherServlet is initialized accordingly and holds a map that associates /ruleTheWorld with rule().

Rumor has it that the "thread per request" architecture is something we should avoid, so I decided to check how I could execute my request on a thread other than the Tomcat thread. How could I instruct Spring to run the controller code on a different thread? A hint: it is not an annotation. I am not talking about @Async; that annotation does execute the code asynchronously, but not in conjunction with Tomcat. Tomcat somehow needs to know that the HTTP servlet has finished processing and that it should return the response to the client. For this reason, Servlet 3.0 introduced the startAsync method, which returns a context:

    public void doGet(HttpServletRequest req, HttpServletResponse resp) {
        ...
        AsyncContext acontext = req.startAsync();
        ...
    }

After the HTTP servlet gets the AsyncContext object, any thread that calls acontext.complete() triggers Tomcat to return the response to the client. Surprisingly, I got this behavior just by returning a Callable:

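    import java.util.concurrent.Callable;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class MyController {

        @GetMapping(value = "/ruleTheWorld")
        public Callable<String> rule() {
            System.out.println("Start thread id: " + Thread.currentThread().getName());
            // The Callable body runs later, on a thread chosen by Spring MVC.
            Callable<String> callable = () -> {
                System.out.println("Callable thread id: " + Thread.currentThread().getName());
                return "Ruling...";
            };
            System.out.println("End thread id: " + Thread.currentThread().getName());
            return callable;
        }
    }

The output: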

    Start thread id: http-nio-8080-exec-1
    End thread id: http-nio-8080-exec-1
    Callable thread id: MvcAsync1

The order of the log lines shows that the controller method returned on the Tomcat thread (http-nio-8080-exec-1), which could then go back to its pool before the request was completed, and that the request itself was processed by a different thread (MvcAsync1). This time, no annotation is involved. It turns out that Spring uses the return value to decide whether or not to call startAsync. The same is true if I return a DeferredResult. The code below was extracted from the Spring Framework repository. The handler of the Callable return value executes the request asynchronously.

    public class CallableMethodReturnValueHandler implements HandlerMethodReturnValueHandler {
        ...
        @Override
        public void handleReturnValue(@Nullable Object returnValue, MethodParameter returnType,
                ModelAndViewContainer mavContainer, NativeWebRequest webRequest) throws Exception {
            ...
            Callable<?> callable = (Callable<?>) returnValue;
            WebAsyncUtils.getAsyncManager(webRequest).startCallableProcessing(callable, mavContainer);
        }
    }
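To make the hand-off easier to picture, here is a minimal plain-JDK sketch of the same idea. It is not Spring's or Tomcat's implementation: the class name, the thread names container-1 and async-1, and the latch that stands in for slow work are all assumptions made for illustration.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Illustration only: hypothetical names, no Spring or Tomcat classes involved.
class AsyncHandoffSketch {

    // Handles one simulated request and returns the ordered list of events.
    static List<String> handleOneRequest() {
        List<String> log = new CopyOnWriteArrayList<>();
        CountDownLatch slowWork = new CountDownLatch(1); // stands in for slow processing
        ExecutorService container = Executors.newSingleThreadExecutor(r -> new Thread(r, "container-1"));
        ExecutorService async = Executors.newSingleThreadExecutor(r -> new Thread(r, "async-1"));
        CompletableFuture<String> response = new CompletableFuture<>();
        try {
            // The "container" thread runs the handler, hands the Callable off, and returns.
            container.submit(() -> {
                log.add("handler on " + Thread.currentThread().getName());
                Callable<String> work = () -> {
                    slowWork.await();
                    log.add("callable on " + Thread.currentThread().getName());
                    return "Ruling...";
                };
                async.submit(() -> {
                    try {
                        response.complete(work.call());
                    } catch (Exception e) {
                        response.completeExceptionally(e);
                    }
                });
            }).get(5, TimeUnit.SECONDS);
            log.add("container thread is free again");
            slowWork.countDown(); // let the slow work finish
            log.add("response: " + response.get(5, TimeUnit.SECONDS));
            return log;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            container.shutdown();
            async.shutdownNow();
        }
    }

    public static void main(String[] args) {
        handleOneRequest().forEach(System.out::println);
    }
}
```

The container thread finishes its part before the Callable produces a value, and the response is completed from the second executor. The work has only moved to another thread; it has not become cheaper.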

Performance Misconceptions

So why not make all my controllers return Callable? It sounds like my application would scale better, as the Tomcat threads are more available to accept requests. Let's take a common example: a controller that calls another REST service. The other service is very simple. It receives a time in milliseconds and sleeps for that long:

    @RequestMapping(value="/sleep/{timeInMilliSeconds}", method = RequestMethod.GET)
    public boolean sleep(@PathVariable("timeInMilliSeconds") int timeInMilliSeconds) {
        try {
            Thread.sleep(timeInMilliSeconds);
        } catch (InterruptedException e) {
            e.printStackTrace();
            // Restore the interrupt flag so the container can see the interruption.
            Thread.currentThread().interrupt();
        }
        return true;
    }

We can assume that the sleeping service has enough resources and won't be our bottleneck. I set the maximum number of Tomcat threads for the sleeping service to 1000; the service under test gets only 10, so that scaling problems show up quickly. This is the service whose performance we want to examine:

    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;
    import org.springframework.web.client.RestTemplate;

    @RestController
    public class MyControllerBlocking {

        @GetMapping(value = "/ruleTheWorldBlocking")
        public String rule() {
            String url = "http://localhost:8090/sleep/1000";
            new RestTemplate().getForObject(url, Boolean.TYPE);
            return "Ruling blocking...";
        }
    }

The URL asks the sleeping service to sleep for 1 second. Our service's maximum number of Tomcat threads is 10. I will use Gatling to inject 20 requests every second for 60 seconds. While all the Tomcat threads are busy processing requests, Tomcat holds the waiting requests in a queue. When a thread becomes available, a request is taken from the queue and processed by that thread. If the queue is full, we get a "Connection Refused" error, but since I didn't change the default size (10,000) and we inject only 1200 requests in total (20 requests per second for 60 seconds), we won't see that. The client timeout (set in the Gatling configuration) is 60 seconds. These are the results:

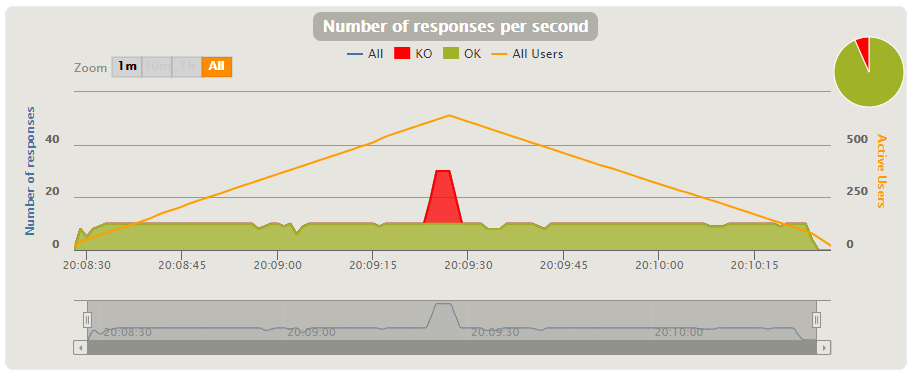

Some of the clients got timeouts. Why? Gatling sends 20 requests per second, while our service can process only 10 requests per second, so 10 more requests pile up in the Tomcat request queue every second. By second 60, the queue holds at least 600 requests. Can the service process all the requests with 10 threads within 60 seconds (the client timeout)? The answer is no. Draining 600 requests at 10 per second takes at least another 60 seconds, and the processing time of a single request is slightly more than 1 second (response time = processing time + latency). Therefore, at least one of the clients waits for a response for more than 60 seconds and gets a timeout error.
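The queueing argument can be checked with a few lines of arithmetic. The sketch below is an idealized model, not a reproduction of the Gatling run: it assumes requests arrive in bursts at the start of each second, are served first-come first-served, and take exactly the given service time. The class and method names are made up for this example.

```java
import java.util.PriorityQueue;

// Idealized FIFO model of a fixed pool of worker threads (hypothetical names).
class BacklogSketch {

    // Counts requests whose response time exceeds timeoutMs.
    static int countTimeouts(int threads, int ratePerSecond, int seconds,
                             long serviceMs, long timeoutMs) {
        // Each entry is the time (ms) at which one worker thread becomes free.
        PriorityQueue<Long> freeAt = new PriorityQueue<>();
        for (int t = 0; t < threads; t++) {
            freeAt.add(0L);
        }
        int timeouts = 0;
        for (int i = 0; i < ratePerSecond * seconds; i++) {
            long arrival = (i / ratePerSecond) * 1000L;   // burst at the start of each second
            long start = Math.max(arrival, freeAt.poll()); // wait for the earliest free thread
            long finish = start + serviceMs;
            freeAt.add(finish);
            if (finish - arrival > timeoutMs) {
                timeouts++;
            }
        }
        return timeouts;
    }

    public static void main(String[] args) {
        System.out.println(countTimeouts(10, 20, 60, 1000L, 60_000L));
        System.out.println(countTimeouts(39, 20, 60, 1000L, 60_000L));
    }
}
```

Under these assumptions, 10 threads leave the last ten requests waiting 61 seconds, so they time out even with a processing time of exactly 1 second, while 39 threads keep up with the load and produce no timeouts. Real processing takes slightly longer than 1 second, so a measured run can be expected to show more timeouts than this model does.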

Let's run the same test with the same code, but return Callable<String> instead of String:

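    import java.util.concurrent.Callable;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;
    import org.springframework.web.client.RestTemplate;

    @RestController
    public class MyCallableController {

        @GetMapping(value = "/ruleTheWorldAsync")
        public Callable<String> rule() {
            // The blocking call now runs on a Spring MVC executor thread, not a Tomcat thread.
            return () -> {
                String url = "http://localhost:8090/sleep/1000";
                new RestTemplate().getForObject(url, Boolean.TYPE);
                return "Ruling...";
            };
        }
    }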

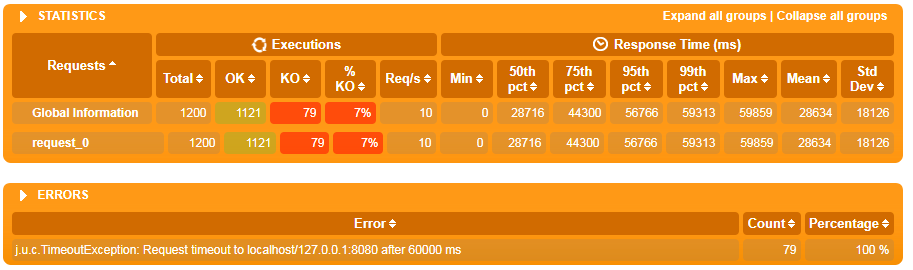

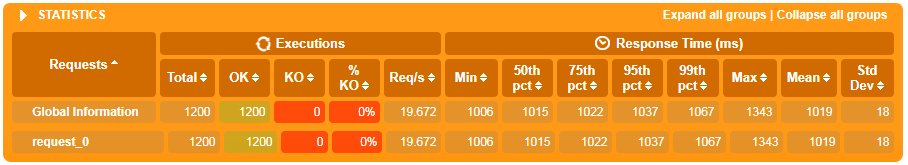

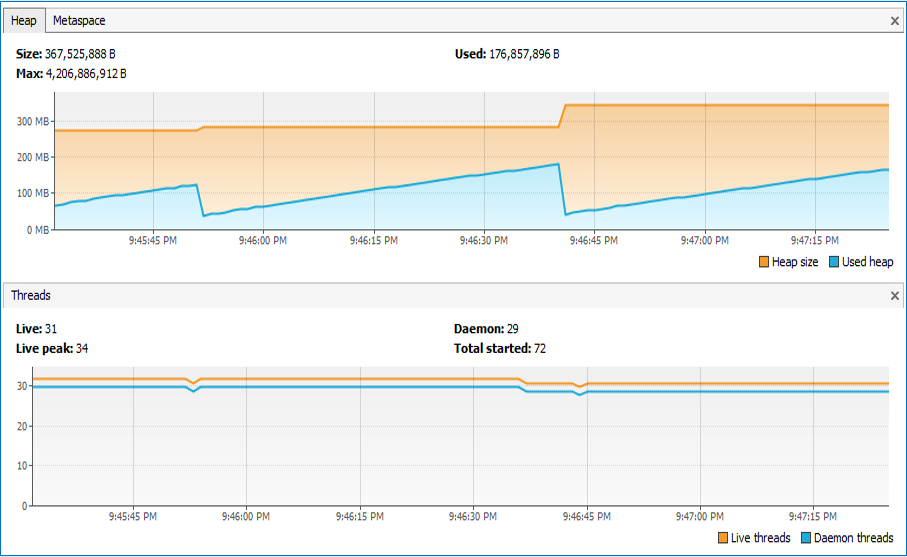

Everything looks good. All 1200 requests were successful. The response time even dropped from 56.7 seconds to 1.037 seconds (looking at the 95th percentile column). What happened? As mentioned before, returning a Callable releases the Tomcat thread, and processing is executed on another thread, which is managed by the default Spring MVC task executor. I used VisualVM to monitor the threads. Let's compare the resources used by both executions.

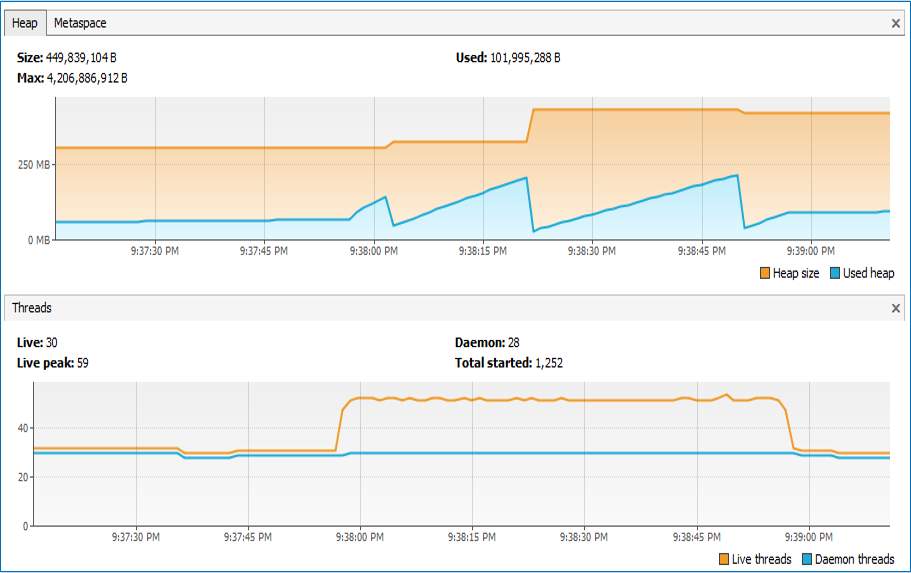

Monitoring of Blocking Controller

Monitoring of Nonblocking Controller

We actually improved performance by adding resources. Does that mean that releasing Tomcat threads was necessary in this case? Note that the request to the sleeping service is still blocking, but it blocks a different thread (a Spring MVC executor thread). According to the threads graph, we used an additional 29 threads. Let's see what happens if we add those threads to the 10 Tomcat threads and use the blocking approach: the next test runs the same blocking controller, with the number of Tomcat threads changed from 10 to 39.
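For readers who want to repeat this, the thread limit of the embedded Tomcat can be set in Spring Boot's application.properties. The fragment below is a sketch that assumes the service is a standard Spring Boot application; the property was renamed in Spring Boot 2.3, so which line applies depends on the Boot version in use.

```properties
# Maximum number of Tomcat worker threads (Spring Boot 2.3 and later)
server.tomcat.threads.max=39

# Older Spring Boot versions use this name instead:
# server.tomcat.max-threads=39
```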

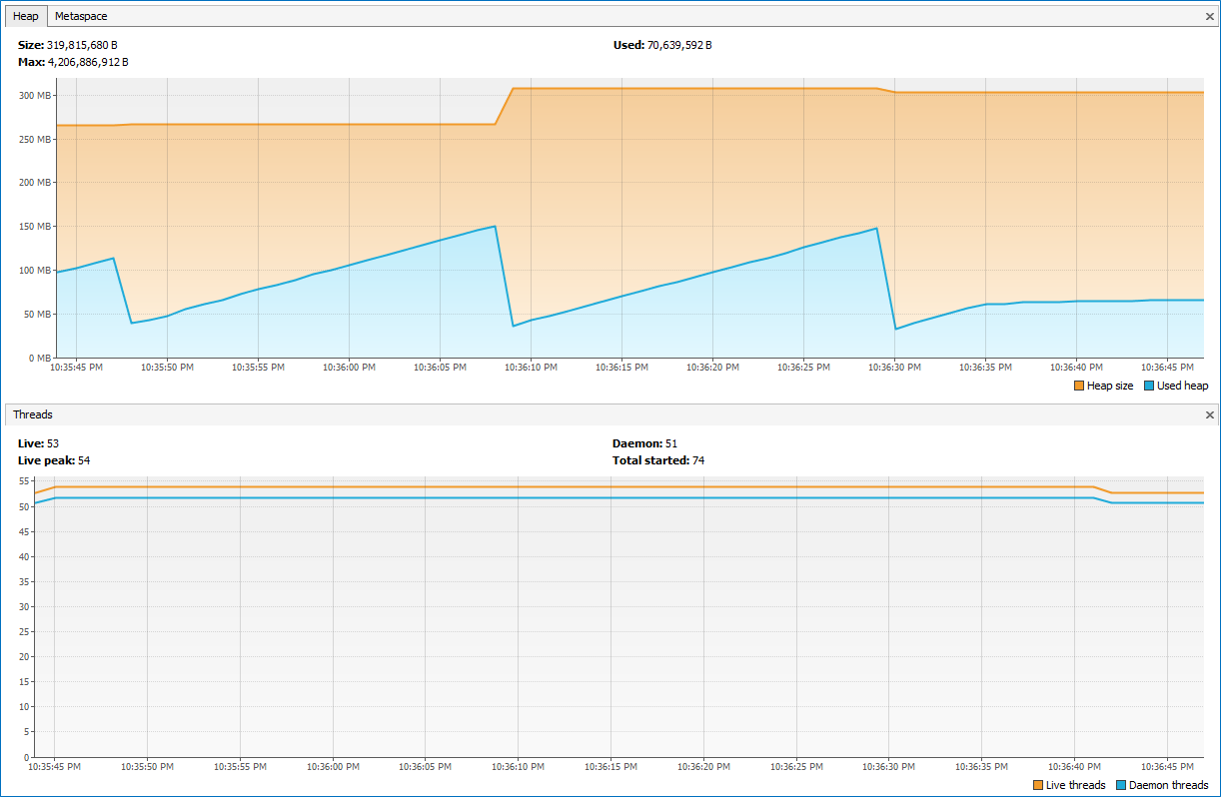

Monitoring of Blocking Controller With 39 Tomcat Threads

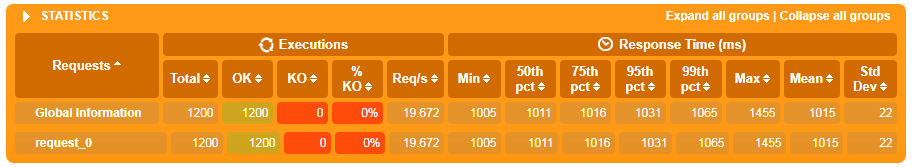

Gatling Result of Blocking Controller With 39 Tomcat Threads

With the blocking approach (39 threads), the response time is a bit better. We also used less heap memory, although we had more threads at the start and end (53 in total) than in the nonblocking threads chart (30). The nonblocking approach may give us automatic creation and removal of threads, but Tomcat has this ability too; it is just not noticeable with small numbers. For example, with a limit of 1000 threads defined for the sleeping service, Tomcat allocated only 25 threads (125 at peak).

Conclusion

Servlet 3.0 async increases the complexity of the code, whereas it's possible to achieve similar results by just tweaking a few Tomcat configurations.

The benefit of releasing Tomcat threads is clear when it comes to a single Tomcat server with a few WARs deployed. For example, if I deploy two services and service1 needs 10 times the resources of service2, Servlet 3.0 async allows us to release the Tomcat threads and maintain a separate thread pool in each service as needed.

Servlet 3.0 async is also relevant when the request-processing code uses a nonblocking API (in our case, we used a blocking API to call the other service).
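As a rough illustration of what a nonblocking call looks like, the sketch below uses the JDK 11 HttpClient, whose sendAsync method returns a CompletableFuture instead of blocking the caller. This is not the code used in the tests above. The class name, the local stub that stands in for the sleeping service, and the response text are assumptions; Spring MVC can take a CompletableFuture as a controller return value much as it takes a Callable.

```java
import com.sun.net.httpserver.HttpServer;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

// Illustration only: hypothetical names, plain JDK 11+.
class NonBlockingCallSketch {

    // Sends the GET without blocking the caller; the future completes when the reply arrives.
    static CompletableFuture<String> rule(HttpClient client, URI sleepUri) {
        HttpRequest request = HttpRequest.newBuilder(sleepUri).GET().build();
        return client.sendAsync(request, HttpResponse.BodyHandlers.ofString())
                .thenApply(response -> "Ruling... (sleeping service replied " + response.body() + ")");
    }

    // Starts a throwaway local stub in place of the sleeping service and calls it once.
    static String demo() {
        HttpServer server = null;
        try {
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/sleep", exchange -> {
                byte[] body = "true".getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                exchange.getResponseBody().write(body);
                exchange.close();
            });
            server.start();
            URI uri = URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/sleep/10");
            // Only this demo blocks, to wait for the result; rule() itself returns immediately.
            return rule(HttpClient.newHttpClient(), uri).get(10, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

With a call like this, no thread sits idle while the other service sleeps, which is the situation where releasing the Tomcat thread does not simply move the blocking elsewhere.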

Comments are welcome, especially if you can recommend a successful implementation of Servlet 3.0 async in a similar situation.
