Spinning up a Node.js Server in a Container on AWS with EC2

I needed to get a Node.js Express server running in a Docker container, then deploy that container to an EC2 instance on AWS, and I took notes. Feel free to copy.

For a project at work, I needed to get a Node.js Express server running in a Docker container, then deploy that container to an EC2 instance on AWS. I took notes along the way because I was sure I'd need to do something like this again someday. Then I figured that, since I took notes, I might as well share them.

You can generalize my use case for your own needs. It doesn't have to be a Node.js server. It can be any Docker container image that you need to deploy to AWS EC2, as long as you know what port(s) on the container you need to expose to the outside world.

Are you ready to get started? Here we go!

1. Build the Docker Image

For this post, we'll keep it simple by deploying a basic Node.js Express server with a single endpoint. I initialized the Node.js project, added express, and then wrote the following index.js file:

const PORT = 8080;
const express = require('express');

const app = express();

app.get('/', async (req, res, next) => {
  res.send('Hello world.');
});

app.listen(PORT, () => {
  console.log(`Server is listening on port ${PORT}`);
});

Note that the server in my example is listening on port 8080. This is what my project folder looks like:

$ tree -L 1
.
├── index.js
├── node_modules
├── package.json
└── yarn.lock

1 directory, 3 files

To make this project deployable as a Docker container, we write a Dockerfile, which we put in the project root folder.

FROM node:14-alpine
WORKDIR /usr/src/app
# Copy the package manifest(s) first so the npm install layer can be cached.
# The destination must end in / whenever the wildcard matches more than one file.
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 8080
CMD ["node", "index.js"]

My project runs on Node v14.16.0. You can run node --version to determine your version and then find the corresponding Node alpine base image. With your Dockerfile written, build the image.

$ docker build --file ./Dockerfile --tag my-node-server .
...
Successfully built a6df3f2bda72
Successfully tagged my-node-server:latest

$ docker images
REPOSITORY       TAG      IMAGE ID       CREATED         SIZE
my-node-server   latest   a6df3f2bda72   2 minutes ago   123MB

You can test your container on your local machine:

$ docker run -d -p 3000:8080 my-node-server:latest
c992be3580b1c27c81f6e2af54f9f49bf82f977df36d82c7af02c30e4c3b321d

$ curl localhost:3000
Hello world.

$ docker stop c992be3580

Notice that I ran my container with -p 3000:8080. This maps port 3000 on my local machine to port 8080 on the container, which is the port my Node.js server is listening on. That mapping is what lets us send a request to localhost:3000 and get a response from our server. Later, when we're running on an AWS EC2 instance, we'll map port 80 on the instance to port 8080 on the container.
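The order inside -p is easy to mix up: the host port comes first and the container port second. The sketch below spells out which side is which. It assumes the simple `[ip:]hostPort:containerPort[/protocol]` form with an IPv4 address or no address at all. `parsePortMapping` is a hypothetical helper, not part of Docker.

```javascript
// Hypothetical helper: parses the simple forms of Docker's -p flag,
// e.g. "3000:8080", "127.0.0.1:3000:8080", or "80:8080/udp".
// IPv6 addresses, port ranges, and a bare container port are not handled.
function parsePortMapping(spec) {
  // Split off an optional "/tcp" or "/udp" suffix; Docker defaults to tcp.
  const [ports, protocol = 'tcp'] = spec.split('/');
  const parts = ports.split(':');
  if (parts.length < 2 || parts.length > 3) {
    throw new Error(`Unsupported port mapping: ${spec}`);
  }
  // With three parts, the first is the host IP to bind to. Without one,
  // Docker publishes on all host interfaces, shown here as 0.0.0.0.
  const hostIp = parts.length === 3 ? parts[0] : '0.0.0.0';
  // The last two parts are always HOST port, then CONTAINER port.
  const [hostPort, containerPort] = parts.slice(-2).map(Number);
  for (const port of [hostPort, containerPort]) {
    if (!Number.isInteger(port) || port < 1 || port > 65535) {
      throw new Error(`Invalid port in mapping: ${spec}`);
    }
  }
  return { hostIp, hostPort, containerPort, protocol };
}

// The local test: requests to localhost:3000 reach the server on 8080.
const local = parsePortMapping('3000:8080');
console.log(`host ${local.hostPort} -> container ${local.containerPort}`); // prints "host 3000 -> container 8080"

// The EC2 setup: port 80 on the instance reaches the server on 8080.
const ec2 = parsePortMapping('80:8080');
console.log(`host ${ec2.hostPort} -> container ${ec2.containerPort}`); // prints "host 80 -> container 8080"
```

The container port stays 8080 in both cases because that is what index.js listens on. Only the host side changes.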

2. Prepare Your AWS ECR Repository

Our EC2 machine will need to fetch our container image before it can run it. To do that, we need to push our container image to AWS ECR. But before we can do that, we need to prepare our repository and set up access. Make sure you've installed the AWS CLI.

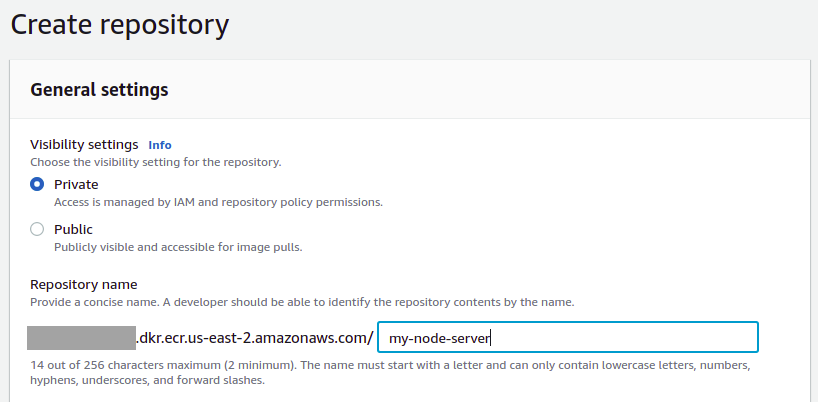

Create an ECR Repository

First, at AWS ECR, create a new repository. For our AWS region, we'll use us-east-2 (Ohio).

We create a private repository called my-node-server, keeping all of the remaining default settings.

Soon, we'll need to use docker login to access our repository and push our container image. To log in, we'll need an authentication token for our registry. Make sure you have created an IAM user with programmatic access and that you've already run aws configure to use that IAM user's credentials.

Create an IAM Policy to Allow ecr:GetAuthorizationToken

Your IAM user will need permission to ecr:GetAuthorizationToken. On the AWS IAM policies page, create a new policy with the following JSON:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": "ecr:GetAuthorizationToken",
      "Resource": "*"
    }
  ]
}

Provide a name for your new policy (for example: ecr-get-authorization-token). Attach the policy to your IAM user.

Create an IAM Policy to Allow Uploading to Your ECR Repository

Your IAM user will also need permission to upload container images to your ECR repository. Create another IAM policy with the following JSON, making sure to set the Resource to the ARN of your repository.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "ecr:BatchCheckLayerAvailability",
        "ecr:BatchGetImage",
        "ecr:CompleteLayerUpload",
        "ecr:DescribeImages",
        "ecr:DescribeRepositories",
        "ecr:GetDownloadUrlForLayer",
        "ecr:GetRepositoryPolicy",
        "ecr:InitiateLayerUpload",
        "ecr:ListImages",
        "ecr:PutImage",
        "ecr:UploadLayerPart"
      ],
      "Resource": "arn:aws:ecr:us-east-2:1539********:repository/my-node-server"
    }
  ]
}

Provide a name for your new policy (for example: ecr-upload-to-my-node-server-repo), and attach this policy to your IAM user as well.
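The repository ARN follows a fixed pattern, so you can generate both policy documents instead of hand-editing the Resource field. The sketch below does that. `buildEcrPushPolicies` is a hypothetical helper and the account ID is a placeholder. The optional "Sid" field is omitted. ecr:GetAuthorizationToken keeps "Resource": "*" because the token is issued for the registry, not for a single repository. The push and pull actions are scoped to the one repository ARN.

```javascript
// Hypothetical helper: produces the two IAM policy documents from this
// section for a given region, 12-digit account ID, and repository name.
function buildEcrPushPolicies({ region, accountId, repository }) {
  // Private ECR repository ARN format: arn:aws:ecr:REGION:ACCOUNT:repository/NAME
  const repositoryArn = `arn:aws:ecr:${region}:${accountId}:repository/${repository}`;

  const getAuthorizationToken = {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: 'ecr:GetAuthorizationToken',
        // The token is registry-wide, so this action is granted on "*".
        Resource: '*',
      },
    ],
  };

  const uploadToRepository = {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: [
          'ecr:BatchCheckLayerAvailability',
          'ecr:BatchGetImage',
          'ecr:CompleteLayerUpload',
          'ecr:DescribeImages',
          'ecr:DescribeRepositories',
          'ecr:GetDownloadUrlForLayer',
          'ecr:GetRepositoryPolicy',
          'ecr:InitiateLayerUpload',
          'ecr:ListImages',
          'ecr:PutImage',
          'ecr:UploadLayerPart',
        ],
        // Scope the push/pull actions to this one repository.
        Resource: repositoryArn,
      },
    ],
  };

  return { repositoryArn, getAuthorizationToken, uploadToRepository };
}

// Placeholder account ID; substitute your own.
const policies = buildEcrPushPolicies({
  region: 'us-east-2',
  accountId: '123456789012',
  repository: 'my-node-server',
});

// Print JSON you could paste into the IAM policy editor.
console.log(JSON.stringify(policies.uploadToRepository, null, 2));
```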

3. Push Container Image to AWS ECR

Now, we're ready to push our container image up to AWS ECR.

Tag Your Local Container Image

The container image we created was tagged my-node-server:latest. We need to tag that image with our ECR registry, repository, and (optional) image tag name. For this, you will need the URI of the ECR repository you created above.

$ docker tag my-node-server:latest \
    1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server:latest

$ docker images
REPOSITORY                                                    TAG      IMAGE ID
1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server   latest   a6df3f2bda72
my-node-server                                                latest   a6df3f2bda72

Your repository URI will, of course, contain your own AWS account ID and region.
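The URI always has the shape `<account ID>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>`, so it is easy to assemble in a deploy script. The sketch below does that. It assumes the standard AWS partition, where hostnames end in amazonaws.com. `ecrImageUri` is a hypothetical helper and the account ID is a placeholder.

```javascript
// Hypothetical helper: builds the full image reference used with
// `docker tag`, `docker push`, and `docker pull` for a private ECR repository.
// Assumes the standard AWS partition (amazonaws.com hostnames).
function ecrImageUri({ accountId, region, repository, tag = 'latest' }) {
  if (!/^\d{12}$/.test(accountId)) {
    throw new Error('AWS account IDs are 12 digits');
  }
  const registry = `${accountId}.dkr.ecr.${region}.amazonaws.com`;
  return `${registry}/${repository}:${tag}`;
}

const uri = ecrImageUri({
  accountId: '123456789012', // placeholder
  region: 'us-east-2',
  repository: 'my-node-server',
});

// Print the commands from this section with the computed URI filled in.
console.log(`docker tag my-node-server:latest ${uri}`);
console.log(`docker push ${uri}`);
```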

Log in to Your Container Registry

With your IAM user authorized to ecr:GetAuthorizationToken, you can get the token and use it with the docker login command. Make sure the region you're getting the authorization token for is the same region that you're trying to log into.
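aws ecr get-login-password prints only the password portion of the token. The lower-level aws ecr get-authorization-token call returns an authorizationToken field instead. That field is base64-encoded in the form `AWS:<password>`, which is why docker login uses AWS as the username. The sketch below shows the decoding. `decodeEcrAuthorizationToken` is a hypothetical helper, and the token in the example is built locally rather than obtained from AWS.

```javascript
// Hypothetical helper: splits the base64 `authorizationToken` returned by
// `aws ecr get-authorization-token` into the username and password that
// `docker login` expects.
function decodeEcrAuthorizationToken(authorizationToken) {
  const decoded = Buffer.from(authorizationToken, 'base64').toString('utf8');
  // Split on the first colon only, so a colon inside the password survives.
  const separator = decoded.indexOf(':');
  if (separator === -1) {
    throw new Error('Token is not in user:password form');
  }
  return {
    username: decoded.slice(0, separator),
    password: decoded.slice(separator + 1),
  };
}

// Fake token built locally for illustration; a real one is much longer.
const fakeToken = Buffer.from('AWS:example-password').toString('base64');
const { username, password } = decodeEcrAuthorizationToken(fakeToken);
console.log(username); // prints "AWS" (never log the password)
```

In practice you don't need to decode anything yourself. Piping get-login-password into docker login with --password-stdin keeps the password off the command line and out of your shell history.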

$ aws ecr get-login-password --region us-east-2 | docker login \
    --username AWS \
    --password-stdin 1539********.dkr.ecr.us-east-2.amazonaws.com
...
Login Succeeded

Push Your Container to AWS ECR

Now that we're logged in, we can push our container up to our registry.

$ docker push 1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server:latest
The push refers to repository [1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server]
7ac6ec3e6477: Pushed
f56ccac17bd2: Pushed
91b00ce18dd1: Pushed
58b7b5e46ecb: Pushed
0f9a2482a558: Pushed
8a5d6c9c178c: Pushed
124a9240d0af: Pushed
e2eb06d8af82: Pushed
latest: digest: sha256:9aa81957bd5a74b3fc9ab5da82c7894014f6823a2b1e61cd837362107dc062e5 size: 1993

Our container image with our Node.js server is now at AWS ECR!

The hard part is done. Now, we can spin up our EC2 instance.

4. Spin Up EC2 Instance

Head over to the main EC2 page, making sure that you're using the same region (us-east-2) as you did in previous steps. Click on "Launch Instance."

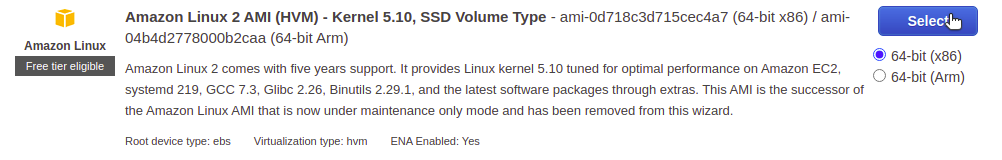

We'll launch a "free tier eligible" Amazon Linux 2 AMI instance. Choose the 64-bit (x86) version and click "Select."

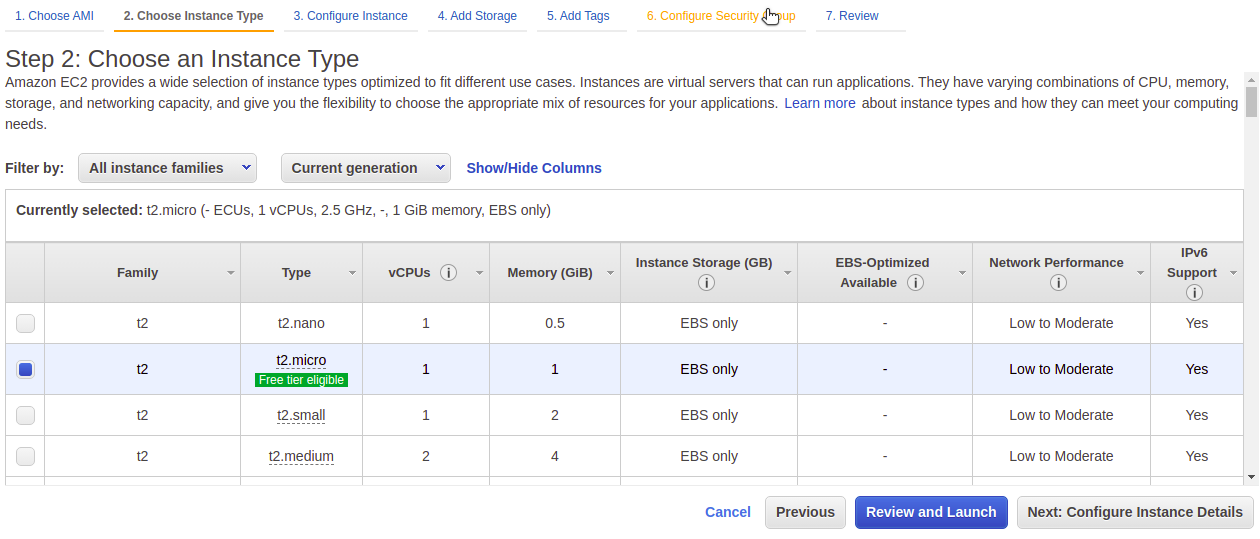

To stay in the free tier for this learning experience, we'll choose the t2.micro instance type. Then, we'll skip ahead to the "Configure Security Group" page.

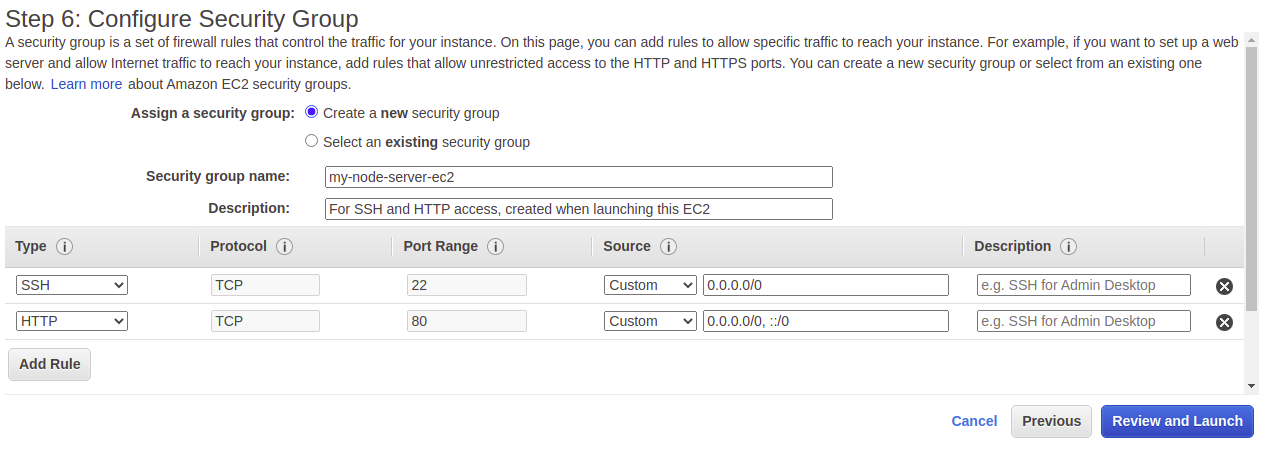

For our EC2 instance's security group, we'll create a new security group and give it a name and a description. Our instance needs to allow SSH access (TCP on port 22) and HTTP access (TCP on port 80). AWS may warn you that you might want to restrict traffic to a whitelist of IP addresses. For a production-grade deployment, you'll likely want to take more security measures than we do here for demonstration purposes.
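Here is a rough model of what this security group permits. It assumes the group holds only the two inbound rules above, both open to 0.0.0.0/0 (any IPv4 address), and it ignores IPv6 and outbound rules. A security group drops any inbound traffic that no rule allows, so port 8080 is unreachable from outside. That is why the container's port 8080 will be mapped to port 80 on the instance. `allowsInbound` is a hypothetical helper, not part of any AWS SDK.

```javascript
// Simplified model of the inbound rules in the new security group.
// 0.0.0.0/0 means "any IPv4 address".
const inboundRules = [
  { description: 'SSH', protocol: 'tcp', fromPort: 22, toPort: 22, cidr: '0.0.0.0/0' },
  { description: 'HTTP', protocol: 'tcp', fromPort: 80, toPort: 80, cidr: '0.0.0.0/0' },
];

// Hypothetical helper: true if some rule admits traffic on `port`.
// Real security groups also match on the source address; this sketch
// assumes every rule is open to 0.0.0.0/0 and so ignores the source.
function allowsInbound(rules, port, protocol = 'tcp') {
  return rules.some(
    (rule) => rule.protocol === protocol && port >= rule.fromPort && port <= rule.toPort,
  );
}

console.log(allowsInbound(inboundRules, 80)); // prints true
console.log(allowsInbound(inboundRules, 8080)); // prints false
```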

Click on "Review and Launch," and then click on "Launch."

You'll see a dialog for creating a key pair for SSH access to your EC2 instance. Select "Create a new key pair," select "RSA" as the key pair type, and then give your key pair a name. Then, click on "Download Key Pair."

Store the downloaded private key file in a secure place. Then, click on "Launch Instances."

Your EC2 instance may take a few minutes to spin up.

5. Connect to EC2 to Install and Run Docker

Once our EC2 instance is running, we'll set it up to run our Docker container.

Connect to Your EC2 Instance

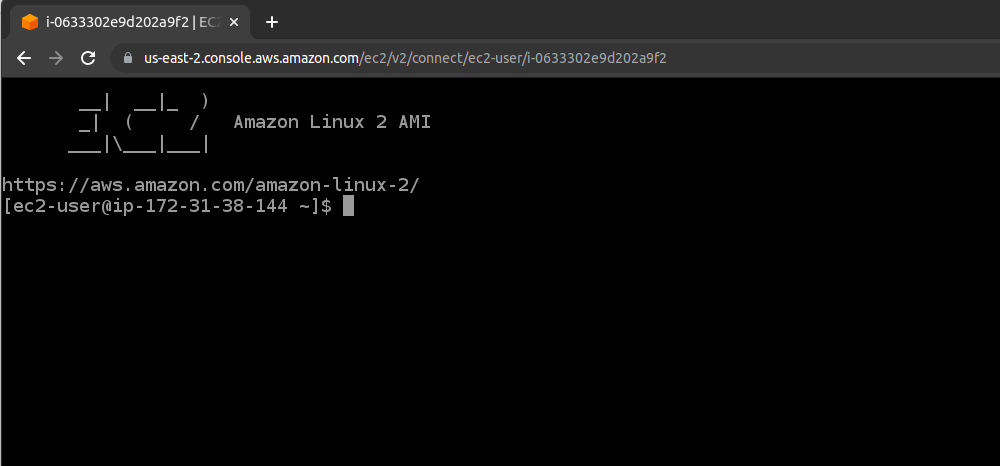

On the EC2 instances page, select the instance you just launched, and then click on "Connect." There are instructions for using an SSH client with the private key you just downloaded. You can also use the EC2 Instance Connect option here in the AWS console. Click on "Connect."

This will open a new tab in your browser with an in-browser terminal that gives you command-line access to your EC2 instance.

Install and Start Up Docker

In that EC2 terminal, run the following commands to install Docker on your instance and start it:

[ec2-user@ip-172-31-38-144 ~]$ sudo yum update -y
...
No packages marked for update

[ec2-user@ip-172-31-38-144 ~]$ sudo amazon-linux-extras install docker
...
Installed size: 285 M
Is this ok [y/d/N]: y
...

[ec2-user@ip-172-31-38-144 ~]$ sudo service docker start
[ec2-user@ip-172-31-38-144 ~]$ sudo chmod 666 /var/run/docker.sock

The last command makes the Docker socket readable and writable by all users, so ec2-user can run the docker commands that follow without sudo.

Run aws configure to Set Up IAM User Credentials

At the EC2 command line, you will need to run aws configure, using the same IAM user credentials as you have on your local machine so that you can run similar AWS CLI commands.

[ec2-user@ip-172-31-38-144 ~]$ aws configure
AWS Access Key ID [None]: AKIA****************
AWS Secret Access Key [None]: z8e*************************************
Default region name [None]: us-east-2
Default output format [None]: json

Log in to Your Container Registry

Just like we did when pushing our image from our local machine to ECR, we need to log in to our registry, this time from within our EC2 instance, so that we can pull our image.

[ec2-user@ip-172-31-38-144 ~]$ aws ecr get-login-password --region us-east-2 | docker login \
    --username AWS \
    --password-stdin 1539********.dkr.ecr.us-east-2.amazonaws.com
...
Login Succeeded

Pull Down Container Image

Now that we're logged in, we pull down our container image.

[ec2-user@ip-172-31-38-144 ~]$ docker pull 1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server:latest
latest: Pulling from my-node-server
a0d0a0d46f8b: Pull complete
4684278ccdc1: Pull complete
cb39e3b315fc: Pull complete
90bb485869f4: Pull complete
32c992dbb44a: Pull complete
4d7fffd328bd: Pull complete
562d102dfc97: Pull complete
d7de8aedebed: Pull complete
Digest: sha256:9aa81957bd5a74b3fc9ab5da82c7894014f6823a2b1e61cd837362107dc062e5
Status: Downloaded newer image for 1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server:latest
1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server:latest

Run Docker

With our container image pulled down, we can run it with Docker. Remember, we want to map port 80 on our EC2 instance (the port open to the world for HTTP access) to port 8080 on our container.

[ec2-user@ip-172-31-38-144 ~]$ docker run -t -i -d \
    -p 80:8080 1539********.dkr.ecr.us-east-2.amazonaws.com/my-node-server
8cb7c337b9d5f39ea18a60a69f5e1d2d968f586b06f599abfada34f3fff420c1

6. Test With an HTTP Request

We've set up and connected all the pieces. Finally, we can test access to our server. Note that we've only set up our EC2 instance and network for responding to HTTP (not HTTPS) requests. We'll cover the additional configurations for HTTPS and a custom domain in a future article.

To test our setup, we simply need to make curl requests to the public IPv4 address (or the public IPv4 DNS address, which is an alias) for our EC2 instance.

$ curl http://3.14.11.142
Hello world.

$ curl http://ec2-3-14-11-142.us-east-2.compute.amazonaws.com
Hello world.

Note that we're not specifying a port in our requests, so curl uses the default HTTP port, 80. When we started our container with docker run, we mapped port 80 on our EC2 instance to port 8080 on our container, where the server is listening. We also set up our security group to allow inbound traffic on port 80.
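You may want to script this check instead of running curl by hand, for example right after each deployment. The sketch below does that using only Node's built-in http module. `fetchBody` and the file name smoke-test.js are hypothetical. The expected body "Hello world." comes from the example server in this article.

```javascript
const http = require('http');

// Hypothetical helper: performs a plain-HTTP GET and resolves with the
// status code and response body. Port 80 is used when the URL omits one.
function fetchBody(url, timeoutMs = 5000) {
  return new Promise((resolve, reject) => {
    const req = http.get(url, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => resolve({ status: res.statusCode, body }));
    });
    // Give up if nothing answers, e.g. when port 80 is blocked.
    req.setTimeout(timeoutMs, () => req.destroy(new Error(`Timed out after ${timeoutMs} ms`)));
    req.on('error', reject);
  });
}

// Usage: node smoke-test.js http://<your-instance-public-ip>
const target = process.argv[2];
if (target) {
  fetchBody(target)
    .then(({ status, body }) => {
      console.log(`HTTP ${status}: ${body}`);
      // Exit non-zero unless the server answered as expected.
      process.exitCode = status === 200 && body === 'Hello world.' ? 0 : 1;
    })
    .catch((err) => {
      console.error(err.message);
      process.exitCode = 1;
    });
}
```

Because the script exits non-zero on failure, it can gate a deploy step in the same way you would use curl with --fail.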

Conclusion

Getting a basic server up and running as a container on an AWS EC2 instance may feel complicated. Granted, it takes a lot of steps, but each one is straightforward. Your own use case may differ (server implementation, container needs, ports to expose), but the process will be much the same. In our next article, we'll take this a step further by setting up a custom domain and accessing our server via SSL/HTTPS.

Opinions expressed by DZone contributors are their own.
