Simulating Split Brain Scenarios in an Akka Cluster

This deep dive into split brain scenarios in Akka clusters covers what they are, how they form, and most importantly, how to fix them.

With the microservice revolution, the idea of having small services that can be easily scaled up or down to handle different loads has flourished. This brought about the need for services to have their own cluster and be responsible for managing it.

But managing a cluster is not a simple task — many things can go wrong.

Recently, I've been working on a service that makes use of an Akka cluster to distribute actors across replicas of the service. I was faced with the following questions: What is a split brain? How can it be resolved? How can we test it? This article explains what split brain scenarios are, different strategies on how to handle these scenarios, and how to simulate a split brain using a sample application.

Akka Cluster

Let's start by defining what an Akka cluster is. As defined by the Akka documentation:

"Akka Cluster provides a fault-tolerant decentralized peer-to-peer based cluster membership service with no single point of failure or single point of bottleneck. It does this using gossip protocols and an automatic failure detector."

A node within the cluster is uniquely identified by a hostname:port:uid. One of the nodes within the cluster is elected as a leader by the members of the cluster. The leader is responsible for managing node membership and cluster convergence.

What Is a Split Brain Scenario?

A split brain scenario is a state in which some nodes in a cluster cannot communicate with each other. This can be caused by many factors, such as network problems, unresponsive nodes (due to garbage collection or CPU overload), and node crashes (due to hardware failures or application crashes). Really, anything can go wrong.

Why Do You Want to Recover From Such a State?

A split brain in an Akka cluster can be catastrophic when the actors in the cluster maintain internal state — especially when using cluster sharding or cluster singletons. Cluster sharding is used to distribute actors across the cluster and access them using a logical identifier, while a cluster singleton ensures that there is only one instance of an actor of a specific type running in the cluster at any given time.

When a split brain occurs, cluster sharding and cluster singletons will not behave as expected. Since the cluster is split into sub-clusters, which cannot communicate with each other, cluster sharding migrates any shards that are mapped to unreachable nodes to the nodes that are still reachable, while a cluster singleton will re-create any singleton actors that were hosted by the unreachable nodes.

So, what causes the catastrophe? Imagine having the actors in a cluster using cluster sharding, persisting their state to a database (using Akka persistence, for example). When a split brain occurs, there could be an actor in every network partition reading and writing to the same entity. This can result in inconsistent and possibly corrupted data.

How to Detect a Split Brain

Detection of unreachable nodes is a built-in feature in Akka clusters. The cluster uses a failure detector — where each node is monitored by other nodes. Once a node is marked as unreachable, this information is distributed to the other nodes through the gossip protocol. A more detailed explanation of how this works can be found in the Akka documentation.

How to Resolve Split Brain Scenarios

Split brain scenarios are resolved by having a split brain resolver for your service (you didn't see that coming, did you?).

Split Brain Resolver

A split brain resolver is just an actor running on each node of the cluster. This actor listens to cluster events and reacts accordingly. Typical cluster events that communicate the state of cluster members are: MemberUp, MemberJoined, UnreachableMember, ReachableMember, and LeaderChanged. For a full list of events, see the Akka documentation.

These events are published as a result of the gossip protocol. Using these events, every split brain resolver actor on every node can monitor and keep track of the state of the cluster and its members. When a split brain occurs, every node can detect that there are unreachable members — and the leader in every network partition can decide what to do, according to the resolving strategy being used.
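
The bookkeeping described above can be sketched as a small model. This is a toy illustration in Python, not the Akka API: the class name MembershipView and its methods are hypothetical, and only the event names come from Akka (MemberRemoved is another Akka cluster event, added here so that removed nodes are forgotten).

```python
from dataclasses import dataclass, field


@dataclass
class MembershipView:
    """Toy model of the state a resolver keeps; hypothetical, not the Akka API."""
    up: set = field(default_factory=set)
    unreachable: set = field(default_factory=set)

    def on_event(self, event: str, node: str) -> None:
        # Update the local view for each cluster event received via gossip.
        if event == "MemberUp":
            self.up.add(node)
        elif event == "UnreachableMember":
            self.unreachable.add(node)
        elif event == "ReachableMember":
            self.unreachable.discard(node)
        elif event == "MemberRemoved":
            self.up.discard(node)
            self.unreachable.discard(node)

    def reachable(self) -> set:
        # Members that are up and not currently flagged as unreachable.
        return self.up - self.unreachable


view = MembershipView()
for name in ("node1", "node2", "node3"):
    view.on_event("MemberUp", name)
view.on_event("UnreachableMember", "node3")
print(sorted(view.reachable()))   # ['node1', 'node2']
print(sorted(view.unreachable))   # ['node3']
```

A resolution strategy then only needs the reachable and unreachable sets from this view to decide which side to remove.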

Split Brain Resolution Strategies

There is no silver bullet when resolving split brain scenarios. The following are common strategies used to resolve such scenarios, but you can create your own strategy if none of these accommodate your needs. Each strategy has its unique properties that make it favorable to use in particular situations, such as cluster elasticity, service availability, and risk of incorrect resolution. The selection of a strategy depends on the requirements and intended use of the service. Each strategy has its benefits and drawbacks, so choose wisely!

Static Quorum

This strategy is for services that require a fixed minimum number of nodes to be functional, referred to as the quorum size. Using this strategy, the split brain resolver actor of the leader node removes the unreachable nodes if the number of reachable nodes is greater than or equal to the configured quorum size. Otherwise, the reachable nodes are removed. The total number of nodes in the cluster must not exceed quorum size * 2 - 1. If it does, both sides of a partition may reach the quorum and remove each other, resulting in two separate clusters and a split brain situation.
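
As a rough illustration, the static quorum rule can be modelled in a few lines of Python. This is a sketch rather than the resolver's actual code: the function names are hypothetical, and it assumes the comparison is "greater than or equal to", which is how Akka documents its static-quorum strategy.

```python
def static_quorum_decision(reachable: int, quorum_size: int) -> str:
    """Decision taken by the leader of one partition (hypothetical helper)."""
    if reachable >= quorum_size:
        return "down-unreachable"   # this side has a quorum and survives
    return "down-reachable"         # this side gives up and shuts itself down


def max_cluster_size(quorum_size: int) -> int:
    """Largest cluster for which two sides can never both reach the quorum."""
    return quorum_size * 2 - 1


quorum = 3
print(max_cluster_size(quorum))              # 5

# Five nodes split 3 / 2: exactly one side survives.
print(static_quorum_decision(3, quorum))     # down-unreachable
print(static_quorum_decision(2, quorum))     # down-reachable

# Six nodes (too many for quorum 3) split 3 / 3: both sides think they won.
print(static_quorum_decision(3, quorum), static_quorum_decision(3, quorum))
# down-unreachable down-unreachable
```

The last case shows why the cluster size limit matters: with six nodes and a quorum of three, both halves keep running independently.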

Keep Majority

This strategy keeps the nodes in the partition that has the majority of reachable nodes and removes the partitions that are in the minority. This strategy is ideal for networks where nodes are added dynamically. The downside of this strategy is that if the network is split into more than two partitions, and none of them has the majority, the whole cluster is terminated.
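
A minimal Python sketch of the majority rule follows. The function name is hypothetical, and an exact tie is treated here as "no majority"; a real resolver may break ties differently.

```python
def keep_majority_decision(reachable: int, unreachable: int) -> str:
    """Decision for one partition, given how many members it can and cannot see."""
    total = reachable + unreachable
    if reachable * 2 > total:       # strictly more than half of all members
        return "down-unreachable"
    return "down-reachable"


# Five nodes split 3 / 2: the larger side survives.
print(keep_majority_decision(3, 2))   # down-unreachable
print(keep_majority_decision(2, 3))   # down-reachable

# Five nodes split 2 / 2 / 1: no side has a majority, so every side downs itself.
print([keep_majority_decision(r, 5 - r) for r in (2, 2, 1)])
# ['down-reachable', 'down-reachable', 'down-reachable']
```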

Keep Oldest

Using this strategy, the partition with the oldest node is kept and the other partitions are removed. This is useful when using cluster singletons, as the singleton instance is located on the oldest node. To avoid shutting down the whole cluster if the oldest node crashes, a flag down-if-alone can be set to indicate that when the cluster ends up in such a situation, the oldest node is removed and the rest of the cluster is kept running.

In such a case, the cluster singleton instance is started on the next oldest node. However, a similar situation can occur if the oldest node and another node are partitioned from the rest of the nodes; all the other nodes are removed, resulting in just two nodes in the cluster.
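
The interplay between the oldest node and the down-if-alone flag is easier to follow with a small model. The Python below is a sketch under the behaviour described in this section; the function and parameter names are hypothetical and do not come from any resolver library.

```python
def keep_oldest_decision(reachable: set, unreachable: set,
                         oldest: str, down_if_alone: bool = True) -> str:
    """Decision for one partition under the keep-oldest rule (toy model)."""
    if oldest in reachable:
        # We are on the oldest node's side. If it is all alone, it steps down.
        if down_if_alone and len(reachable) == 1 and unreachable:
            return "down-reachable"
        return "down-unreachable"
    # The oldest node is on the other side. We survive only if it is alone there.
    if down_if_alone and len(unreachable) == 1:
        return "down-unreachable"
    return "down-reachable"


rest = {"n2", "n3", "n4", "n5"}

# Oldest node n1 is isolated (or crashed): the rest of the cluster keeps running.
print(keep_oldest_decision({"n1"}, rest, oldest="n1"))   # down-reachable
print(keep_oldest_decision(rest, {"n1"}, oldest="n1"))   # down-unreachable

# Without the flag, losing the oldest node shuts down everyone else.
print(keep_oldest_decision(rest, {"n1"}, oldest="n1", down_if_alone=False))
# down-reachable

# Oldest node plus one other versus three nodes: only the pair survives.
print(keep_oldest_decision({"n1", "n2"}, {"n3", "n4", "n5"}, oldest="n1"))
# down-unreachable
print(keep_oldest_decision({"n3", "n4", "n5"}, {"n1", "n2"}, oldest="n1"))
# down-reachable
```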

Keep Referee

This strategy only keeps the partition that contains a specific node, called the referee. This strategy is ideal if there is some node that is critical to have an operational cluster. If the referee is removed from the cluster, all nodes are downed. The drawback of this strategy is that there is a single point of failure.
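
The referee rule is the simplest of the four, and a short Python sketch makes the single point of failure visible. The function name and node names are hypothetical.

```python
def keep_referee_decision(reachable: set, referee: str) -> str:
    """Decision for one partition: it survives only if it can see the referee."""
    return "down-unreachable" if referee in reachable else "down-reachable"


partitions = [{"n1", "n2"}, {"n3", "n4", "n5"}]
for side in partitions:
    print(sorted(side), keep_referee_decision(side, referee="n1"))
# ['n1', 'n2'] down-unreachable
# ['n3', 'n4', 'n5'] down-reachable

# If the referee itself crashes, no partition contains it and every node is downed.
survivors = {"n2", "n3", "n4", "n5"}
print(keep_referee_decision(survivors, referee="n1"))   # down-reachable
```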

Simulating Split Brain Scenarios

Split brain scenarios are impossible to predict and difficult to simulate. However, a split brain resolver can be tested out by simulating some specific scenarios. In this section, split brain scenarios will be simulated using Docker by introducing network partitions.

Introducing the Einstein App

To simulate such scenarios, I have created a simple Spring Boot app named Einstein. The purpose of this app is to give an estimate of a person's intelligence quotient (IQ) when provided with their name. Einstein was a bright person, capable of providing us with estimates quickly. The source code for the app can be found here.

This application has two specific requirements:

- High availability: The application should be accessible all the time, even under stress as millions of people may be asking Einstein their IQ.

- Consistency: Once Einstein assigns an IQ value to a name, it remains the same forever. This means that if your name is Matthew and Einstein says that you have an IQ of 125, it will be 125 for every Matthew who queries their IQ.

Application Architecture

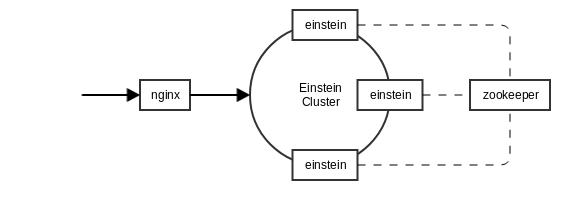

The app is composed of three services:

- einstein: the service which calculates the person's IQ. It is accessed through a GET endpoint.

- zookeeper: used for service discovery and cluster management

- nginx: a proxy that receives all HTTP requests and forwards them to the einstein service

To fulfill the first requirement, the einstein service is designed to be operated in a cluster. This would give reassurance that if one instance of the service fails, the Einstein app is still functional and can deliver the IQ values requested by the users. Due to the cluster, we have a potential risk of having a split brain — which will be simulated later on.

To fulfill the second requirement, the einstein service uses the actor model with cluster sharding. In this model, an actor is assigned a single name, is responsible for calculating the IQ, and returns the same value whenever asked for it. In this way, all requests for the IQ of a specific name are processed by the same actor. Cluster sharding distributes the actors across the cluster and allows us to access the actors using a logical identifier (the person's name in this case).
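
To give a feel for how a logical identifier always leads to the same actor, here is a toy Python model. It is not how the Einstein app or Akka is implemented: the shard count, the CRC32 hash, and the modulo placement of shards on nodes are all assumptions made for illustration (in Akka, a shard coordinator decides where shards live).

```python
import zlib

NUMBER_OF_SHARDS = 30   # hypothetical value


def shard_id(name: str, number_of_shards: int = NUMBER_OF_SHARDS) -> int:
    """Map a logical identifier (the person's name) to a stable shard number."""
    return zlib.crc32(name.encode("utf-8")) % number_of_shards


def node_for(name: str, nodes: list) -> str:
    """Toy placement: spread shards over the nodes by simple modulo."""
    return nodes[shard_id(name) % len(nodes)]


nodes = ["einstein_1", "einstein_2", "einstein_3"]

# The same name always resolves to the same shard, and therefore the same node,
# as long as the set of nodes does not change.
print(shard_id("Matthew") == shard_id("Matthew"))            # True
print(node_for("Matthew", nodes) == node_for("Matthew", nodes))   # True
```

The important property is determinism: every node computes the same shard for a given name, so requests arriving at any node are routed to the one actor responsible for that name.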

Building the Application

As a prerequisite to build the application, you must have Docker and Maven installed. Then just execute mvn clean install to build the app and mvn docker:build to generate the docker images for einstein and nginx. If you have difficulties in building the app because of the split brain resolver dependency (akka-cluster-custom-downing_2.11), follow the instructions in the readme section of the repository.

Starting the Application With Docker

As a prerequisite, you must have docker-compose installed. To start the application, go to the docker directory and execute the following command:

docker-compose -f application.yml up

This will start all three services, but with only one instance of the einstein service. To have a cluster of einstein services, more than one instance needs to be running. The following command starts multiple instances of this service:

docker-compose -f application.yml up --scale einstein=3 einstein zookeeper nginx

Asking for an IQ

To get an IQ value for a name, perform a GET request on the following URL:

http://localhost:8080/iq/{name}
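
If you prefer to script the requests, a small Python helper could look like the sketch below. It uses only the standard library; the helper names are hypothetical, and since the response format is not described here, the body is simply returned as text.

```python
from urllib.parse import quote
from urllib.request import urlopen

BASE_URL = "http://localhost:8080/iq/"


def iq_url(name: str) -> str:
    """Build the request URL, percent-encoding the name."""
    return BASE_URL + quote(name, safe="")


def fetch_iq(name: str, timeout: float = 5.0) -> str:
    """Perform the GET request and return the raw response body as text."""
    with urlopen(iq_url(name), timeout=timeout) as response:
        return response.read().decode("utf-8").strip()


print(iq_url("Matthew"))   # http://localhost:8080/iq/Matthew
```

With the app running, calling fetch_iq("Matthew") several times in a row is a convenient way to check whether the same value comes back each time.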

Simulation

The procedure to simulate a split brain is quite easy when you think about it: disable the network connection between the nodes. So, let me take you through the procedure:

- Make sure to add the NET_ADMIN capability for the einstein service. This is required to alter the routing tables of a container.

    einstein:
      image: einstein:latest
      depends_on:
        - zookeeper
      cap_add:
        - NET_ADMIN

- Launch the app using docker-compose, with three einstein services as described in the Starting the Application With Docker section.

- Get the container IDs and IP addresses by inspecting the default Docker network:

docker network inspect docker_default

We are interested in the Containers section of the output of this command:

    "Containers": {
      "1e6d890d3edaea275bfb42cfc129c13f416d1ff0aa6b01818ae77e75aaaea143": {
        "Name": "docker_einstein_2",
        "EndpointID": "ff60e9c84a405148910c273d1d8a14ea053738261cbed17bf6c3fe6afd3f6089",
        "MacAddress": "02:42:ac:13:00:04",
        "IPv4Address": "172.19.0.4/16",
        "IPv6Address": ""
      },
      "6826c4cd2f95fa377d1dfdbdbb4cb34658905c8d3f5c8e963b40d3c1502b8518": {
        "Name": "docker_einstein_3",
        "EndpointID": "1cddc06cb767a8b68ef56cefe45622e34b0d34bdba8ea02ef6c72d46b36381d9",
        "MacAddress": "02:42:ac:13:00:05",
        "IPv4Address": "172.19.0.5/16",
        "IPv6Address": ""
      },
      "be9a6bc71403fa39794350ca63c47cc940f9b9e388b5c943a3ed02a1d5518942": {
        "Name": "docker_zookeeper_1",
        "EndpointID": "69444a1ce4ae0ccee7b260261b8372f762ef009d035b0e0e037b019c816f1a62",
        "MacAddress": "02:42:ac:13:00:02",
        "IPv4Address": "172.19.0.2/16",
        "IPv6Address": ""
      },
      "c0eaaea62b9db0935e91794296500b4e5c367c99f10096b4546cb08e14e30ce0": {
        "Name": "docker_einstein_1",
        "EndpointID": "21118dd28e5a734f7eaa3d6e93773e21e2dc8e06e662dc3fd8e767200b83d5c8",
        "MacAddress": "02:42:ac:13:00:03",
        "IPv4Address": "172.19.0.3/16",
        "IPv6Address": ""
      },
      "e864b4f873e6be9a8b92001a8f5a21427918eead39f0873ca314cf2f46bc44e1": {
        "Name": "docker_nginx_1",
        "EndpointID": "4a3d528ea92ca189dabdd22633c6894561dc007662097b4186fb49344ad03291",
        "MacAddress": "02:42:ac:13:00:06",
        "IPv4Address": "172.19.0.6/16",
        "IPv6Address": ""
      }
    }

We will partition the einstein cluster such that docker_einstein_1 cannot communicate with docker_einstein_2 and docker_einstein_3. First, we have to open a bash shell in docker_einstein_1 using docker exec:

docker exec -i -t c0ea /bin/bash

Alter the routing table to drop any packets going to the other two einstein instances:

root@c0eaaea62b9db:/# ip route add prohibit 172.19.0.5 && ip route add prohibit 172.19.0.4

Voilà! We have a split brain. Now you can closely observe what is happening in the cluster and how the situation is being handled.
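
Looking up the IP addresses by hand gets tedious when you repeat the experiment. The following Python sketch derives the route commands from the JSON printed by docker network inspect, which is an array with one object per network. The helper names are hypothetical, and the sample input is a trimmed version of the output shown earlier.

```python
import json


def einstein_ips(inspect_output: str) -> dict:
    """Map einstein container names to their IPv4 addresses (without the /16 suffix)."""
    networks = json.loads(inspect_output)
    containers = networks[0]["Containers"]
    return {
        info["Name"]: info["IPv4Address"].split("/")[0]
        for info in containers.values()
        if "einstein" in info["Name"]
    }


def isolate_commands(isolated: str, ips: dict) -> list:
    """Commands to run inside the isolated container to cut it off from its peers."""
    return [
        f"ip route add prohibit {ip}"
        for name, ip in sorted(ips.items())
        if name != isolated
    ]


# Trimmed sample of the inspect output, using the values from this article.
sample = json.dumps([{"Containers": {
    "c0ea": {"Name": "docker_einstein_1", "IPv4Address": "172.19.0.3/16"},
    "1e6d": {"Name": "docker_einstein_2", "IPv4Address": "172.19.0.4/16"},
    "6826": {"Name": "docker_einstein_3", "IPv4Address": "172.19.0.5/16"},
    "be9a": {"Name": "docker_zookeeper_1", "IPv4Address": "172.19.0.2/16"},
}}])

for command in isolate_commands("docker_einstein_1", einstein_ips(sample)):
    print(command)
# ip route add prohibit 172.19.0.4
# ip route add prohibit 172.19.0.5
```

The printed commands still have to be executed inside the container, for example through docker exec as shown above.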

Splitting the Network Without a Resolver

To remove the split brain resolver, just remove the akka-cluster-custom-downing dependency, comment out the split brain resolver configuration in the application.conf file, rebuild the project, and regenerate the images. If this requires too much effort, you can check out the branch split-brain-without-resolver and rebuild everything.

So, what happens when we have a split brain and we don't resolve it? Let's try it out! Go through the brain-splitting process as described above with three einstein nodes. Shortly after one node is split from the other two, Akka's failure detector marks node 2 and node 3 as unreachable from node 1 and vice versa. Requesting the IQ of a person now results in a timeout, and the cluster is no longer functional. The following message can be observed in the logs of the einstein containers:

The ShardCoordinator was unable to update a distributed state within 'updating-state-timeout': 5000 millis (retrying)

Since we have a split brain, when requesting the IQ of a person, cluster sharding cannot update the distributed state for all the nodes of the cluster. Therefore, it fails. There is one edge case when the cluster still functions. This happens when the following events take place:

- A person's IQ is requested before the split brain occurs. The actor is created on some node in the cluster.

- Split brain occurs.

- The same person's IQ is requested again, and nginx forwards the request to a node that resides on the same network partition as the node that hosts the actor.

The cluster can't function properly when we have unreachable nodes. So let's try to remove these unreachable nodes using Akka's auto-downing feature. This feature is a naive approach to quickly re-stabilize the cluster: it marks unreachable nodes as down once they have been unreachable for a configured amount of time. Auto-downing is not recommended in production, as it does not resolve split brain scenarios! To enable this feature, just uncomment the auto-down-unreachable-after property in the application.conf file and regenerate the Docker images. After starting the services and creating the split brain, wait until the configured number of seconds has elapsed and perform at least three GET requests for a specific name. What happened there? We get two different values for the same name. Einstein got confused this time!

This is what happened: After the configured amount of time elapsed, each side of the cluster removed the unreachable nodes, forming two separate clusters on each side of the partition. The cluster shards that were previously mapped to the unreachable nodes are now re-mapped to the available nodes. Therefore, when nginx forwards the request to different clusters, we get different results! We have two actors representing the same entity, which is dangerous.
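
The effect can be reproduced with a toy Python model in which each side of the partition behaves as an independent cluster. Everything here is an assumption made for illustration: the class name, the fixed IQ values standing in for random draws, and the idea that nginx cycles through the three nodes in turn.

```python
from itertools import cycle


class Partition:
    """One side of the split, acting as its own cluster after auto-downing."""

    def __init__(self, fresh_values):
        self._fresh = iter(fresh_values)   # stands in for newly generated IQ values
        self.actors = {}                   # name -> IQ held by this side's actor

    def iq(self, name: str) -> int:
        # Each side creates its own actor the first time it sees a name.
        if name not in self.actors:
            self.actors[name] = next(self._fresh)
        return self.actors[name]


side_a = Partition(fresh_values=[125])   # node 1
side_b = Partition(fresh_values=[98])    # node 2 and node 3

# Hypothetical proxy behaviour: requests go to node 1, node 2, node 3 in turn.
proxy = cycle([side_a, side_b, side_b])
answers = [next(proxy).iq("Matthew") for _ in range(3)]
print(answers)   # [125, 98, 98]
```

Two actors now claim to represent the same Matthew, and which answer you get depends only on where the proxy happens to send the request.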

Splitting the Network With a Resolver

Let's observe what happens when we have a split brain resolver in place. Re-add the split brain resolver or check out the master branch, rebuild the project, and regenerate the images. We will use the Keep Majority strategy to resolve any split brain scenarios.

First, get the IQ for a name and record the value returned. Then, simulate the split brain again and observe what happens. After some time, you should see the following message in the logs:

docker_einstein_1 exited with code 1

This is the expected outcome. Using the Keep Majority strategy, the resolver keeps the network partition with the majority of the nodes. In this case, the split brain resolver of node 1 recognized that it was in the minority and terminated itself.

Now, get the IQ for the same name again. This can have two different outcomes:

- einstein provides us with the original IQ value

- einstein provides a new IQ value

Why doesn't einstein always provide us with the original IQ value? A new IQ value is provided when the actor for the specific name you chose resided on node 1, which got terminated. In such a case, a new actor needs to be created on either node 2 or node 3. Since the IQ value is not persisted, a new random number is generated. To have the same IQ value throughout, the app needs to be altered to include a database service, such as MySQL. However, if you keep on asking einstein for the IQ of this person, it will keep on returning the same new value.
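
The difference persistence would make can be sketched in Python as follows. This is not the Einstein app's code: the in-memory IqStore stands in for a real database, and the class names and the IQ range are hypothetical.

```python
import random


class IqStore:
    """In-memory stand-in for a database table keyed by name."""

    def __init__(self):
        self._rows = {}

    def get(self, name: str):
        return self._rows.get(name)

    def put(self, name: str, iq: int) -> None:
        self._rows[name] = iq


class IqActor:
    """Toy actor: recovers its value from the store, or generates and saves one."""

    def __init__(self, name: str, store: IqStore, rng: random.Random):
        saved = store.get(name)
        if saved is None:
            saved = rng.randint(70, 160)   # hypothetical IQ range
            store.put(name, saved)
        self.iq = saved


store = IqStore()
on_node_1 = IqActor("Matthew", store, random.Random())

# Node 1 is terminated; the actor is re-created on another node and recovers its state.
on_node_2 = IqActor("Matthew", store, random.Random())
print(on_node_1.iq == on_node_2.iq)   # True
```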

Conclusion

A service that runs in an Akka cluster requires a split brain resolver to be able to function correctly when a network partition occurs. It is not feasible to have a technical operations team investigate and resolve these issues on the fly. A split brain resolver, which is present in every node of the cluster, resolves split brain scenarios by keeping a specific network partition and terminating the others. The preferred network partition to keep running in such situations is chosen according to the resolution strategy used.

In this article, we've described what split brain scenarios are, common strategies for resolving them, and how to simulate such scenarios. We have only simulated one scenario, but using this technique, you can simulate any scenario. It would be interesting if these scenarios were automated so that any updates to the split brain resolver could be automatically tested. I hope that this article has given you a good overview of split brains in Akka clusters.

Happy split-braining!
